How to make AI have universal capabilities? New study: Put it to sleep

Neural networks can outperform humans on many tasks, but when an AI system is asked to absorb new memories, it may instantly forget what it learned before. Now, a new study shows how letting neural networks pass through sleep-like stages can help prevent this amnesia.

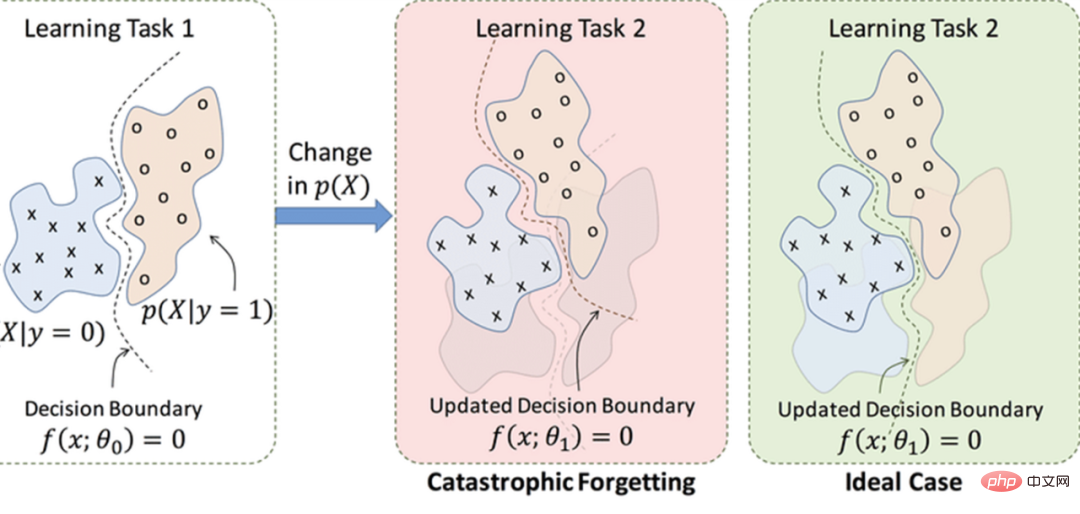

One of the main challenges facing artificial neural networks is "catastrophic forgetting": when they learn a new task, they tend to abruptly and completely forget what they learned before.

Essentially, a neural network's representation of data is a task-oriented "compression" of the original data, so newly learned knowledge overwrites what was stored before.

This is one of the biggest shortcomings of current technology compared with biological neural networks: the human brain can learn new tasks throughout its life without losing its ability to perform previously learned ones. We don't entirely know why, but research has long shown that the human brain learns best when learning sessions are interspersed with sleep. Sleep apparently helps incorporate recent experiences into long-term memory.

"Reorganizing memories may actually be one of the main reasons why organisms need to go through sleep stages," says Erik Delanois, a computational neuroscientist at the University of California, San Diego.

Can AI also learn to sleep? Some previous research has attempted to address catastrophic forgetting by having AI simulate sleep. For example, when a neural network learns a new task, a strategy called interleaved training simultaneously feeds it the old data it learned from previously, helping it retain past knowledge. This approach was thought to mimic the way the brain works during sleep: constantly replaying old memories.

However, scientists had assumed that interleaved training requires feeding a neural network all of the data it originally used to learn an old skill every time it learns something new. Not only does this take a lot of time and data, it also does not appear to be what biological brains do during real sleep: organisms neither retain all the data needed to learn old tasks nor have time to replay it all while sleeping.
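The interleaved training strategy described above can be sketched in a few lines. This is a minimal illustration, not code from the study: the function name `interleaved_batches`, the toy arrays, and the batch size are all assumptions made for the example. It shows why the approach is costly: the full old-task dataset has to be kept and mixed into every round of new-task training.

```python
import numpy as np

def interleaved_batches(old_x, old_y, new_x, new_y, batch_size, rng):
    """Yield shuffled mini-batches drawn from old-task and new-task data together.

    Hypothetical helper: the old data must be stored in full so it can be
    replayed alongside the new data.
    """
    x = np.concatenate([old_x, new_x])
    y = np.concatenate([old_y, new_y])
    order = rng.permutation(len(x))
    for start in range(0, len(x), batch_size):
        idx = order[start:start + batch_size]
        yield x[idx], y[idx]

rng = np.random.default_rng(0)
# Toy placeholder data: label 0 marks stored task 1 samples, label 1 marks task 2.
old_x, old_y = rng.normal(size=(6, 2)), np.zeros(6, dtype=int)
new_x, new_y = rng.normal(size=(4, 2)), np.ones(4, dtype=int)

batches = list(interleaved_batches(old_x, old_y, new_x, new_y, batch_size=5, rng=rng))
```

Each batch here would be passed to whatever training step the network uses; the point is only that old and new samples are presented together.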

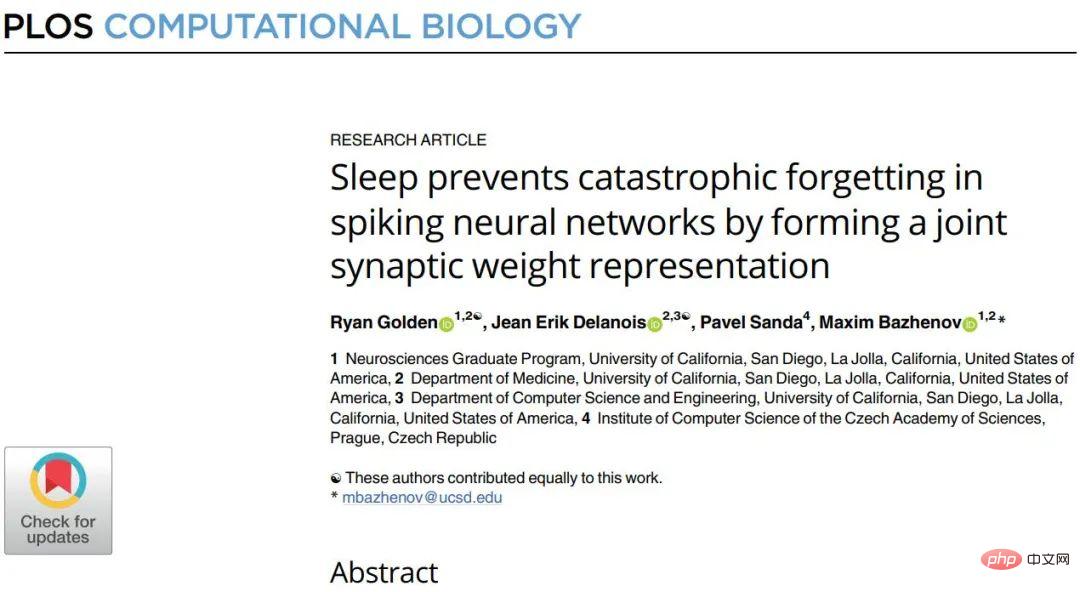

In a new study, researchers analyzed the mechanisms behind catastrophic forgetting and how effectively sleep prevents the problem. Instead of using conventional neural networks, the researchers used a "spiking neural network", which is closer to the human brain.

In an artificial neural network, components called neurons are fed data and work together to solve a problem, such as recognizing faces. The network repeatedly adjusts its synapses (the connections between its neurons) and checks whether the resulting patterns of behavior lead to better solutions. Over time, with continued training, the network discovers which patterns are best for computing the correct result. It then adopts these patterns as its defaults, which is thought to partially mimic the learning process of the human brain.

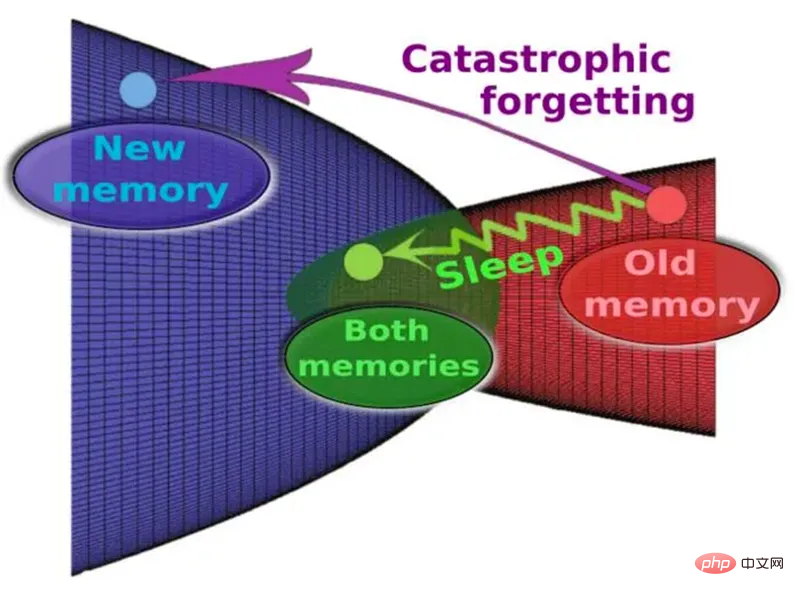

This diagram represents memories in an abstract synaptic space and how they evolve with and without sleep.

In a conventional artificial neural network, the output of a neuron changes continuously as its input changes. In contrast, in a spiking neural network (SNN), a neuron produces an output signal only after it has received a given number of input signals, which more closely reproduces the behavior of real biological neurons. Because spiking neural networks emit pulses only rarely, they transmit less data than typical artificial neural networks and in principle require less power and communication bandwidth.
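The difference can be made concrete with a toy integrate-and-fire neuron. This is a simplified sketch, not the neuron model used in the study: the function name `simulate_if_neuron` and the `threshold`, `weight`, and `leak` parameters are assumptions chosen for illustration. The neuron accumulates incoming spikes and emits an output spike only when its accumulated potential reaches the threshold.

```python
def simulate_if_neuron(input_spikes, threshold=3.0, weight=1.0, leak=0.0):
    """Simulate a toy integrate-and-fire neuron over discrete time steps.

    input_spikes: sequence of 0/1 values, one per time step.
    Returns a list of 0/1 output spikes of the same length.
    """
    potential = 0.0
    output = []
    for spike in input_spikes:
        # Decay the stored potential, then add the weighted input.
        potential = potential * (1.0 - leak) + weight * spike
        if potential >= threshold:
            output.append(1)   # fire
            potential = 0.0    # reset after the spike
        else:
            output.append(0)   # stay silent
    return output

spikes_out = simulate_if_neuron([1, 1, 0, 1, 1, 1, 1, 0])
print(spikes_out)  # [0, 0, 0, 1, 0, 0, 1, 0]
```

With a threshold of three and no leak, six input spikes produce only two output spikes, which illustrates why such networks transmit comparatively little data.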

As expected, the spiking neural network showed catastrophic forgetting during the initial learning process. However, after several rounds of learning followed by a period of time, the set of neurons involved in learning the first task was reactivated. This is closer to what neuroscientists currently think happens during sleep.

Simply put, an SNN allows previously learned memory traces to be reactivated automatically during offline, sleep-like processing, and allows synaptic weights to be modified without interference.

The study uses a multi-layer SNN with reinforcement learning to explore whether catastrophic forgetting can be avoided by interleaving periods of new task training with periods of sleep-like autonomous activity. Notably, it showed that catastrophic forgetting can be prevented by periodically interrupting reinforcement learning on the new task with sleep-like phases.

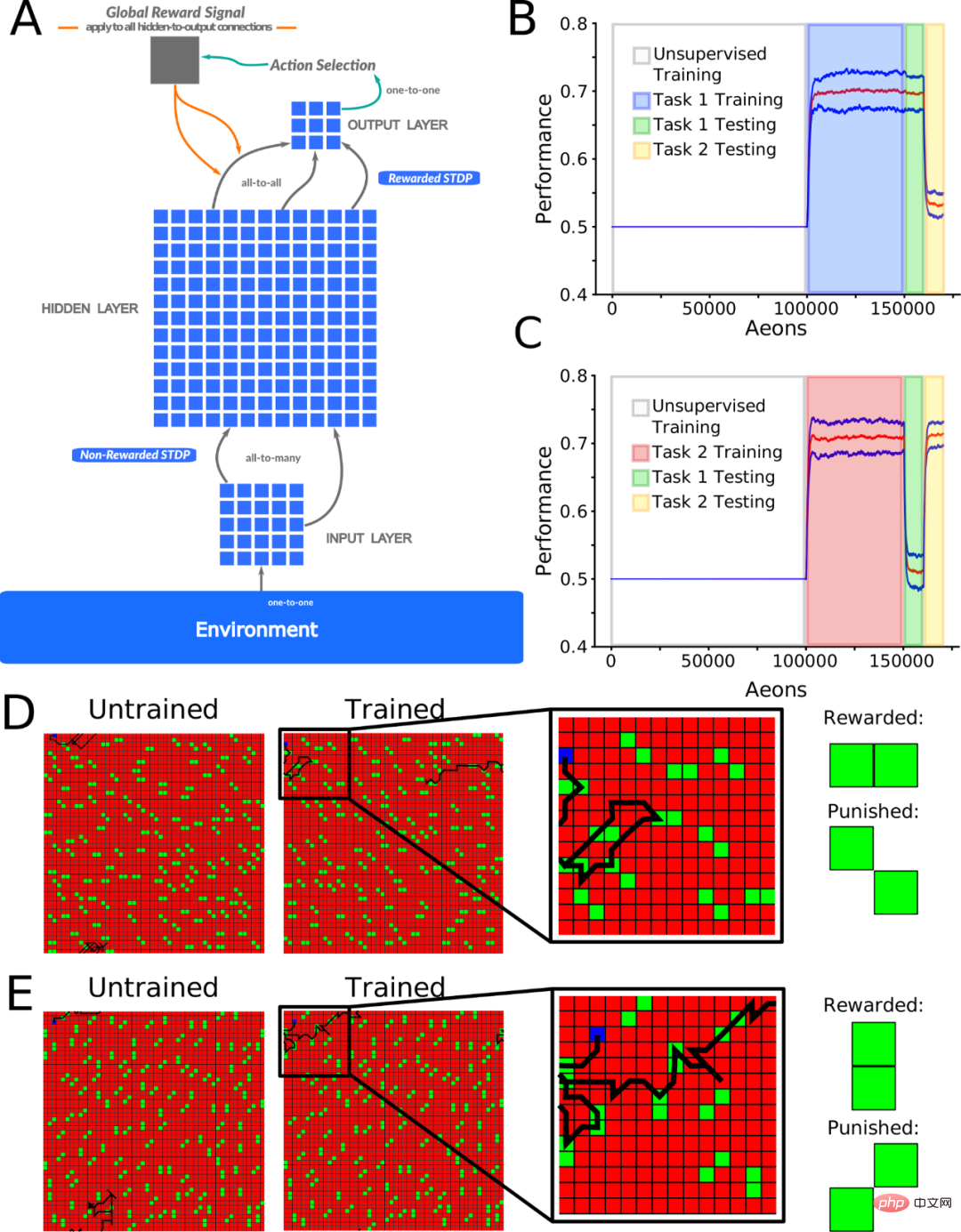

Figure 1A shows the feedforward spiking neural network used to simulate signal flow from input to output. The connections between the input layer (I) and the hidden layer (H) undergo unsupervised learning (implemented using non-rewarded STDP), and the connections between the hidden layer and the output layer (O) undergo reinforcement learning (implemented using rewarded STDP).

Unsupervised learning allows the hidden-layer neurons to learn different particle patterns from different spatial locations in the input layer, while rewarded STDP enables the output-layer neurons to learn movement decisions based on the type of particle pattern detected in the input layer.
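To give a feel for the two learning rules mentioned above, here is a minimal sketch of a textbook pair-based STDP (spike-timing-dependent plasticity) update and a reward-scaled variant. This article does not give the study's exact equations, so everything here is an assumption: the function names, the amplitudes `a_plus` and `a_minus`, the time constant `tau`, and the choice to simply multiply the update by a reward signal.

```python
import math

def stdp_delta(t_pre, t_post, a_plus=0.01, a_minus=0.012, tau=20.0):
    """Weight change for one pre/post spike pair (times in ms).

    Pre-before-post strengthens the synapse; post-before-pre weakens it.
    The magnitude decays exponentially with the time difference.
    """
    dt = t_post - t_pre
    if dt > 0:
        return a_plus * math.exp(-dt / tau)
    if dt < 0:
        return -a_minus * math.exp(dt / tau)
    return 0.0

def rewarded_stdp_delta(t_pre, t_post, reward, **kwargs):
    """Illustrative rewarded variant: the STDP update is scaled by a reward
    signal (positive for reward, negative for punishment)."""
    return reward * stdp_delta(t_pre, t_post, **kwargs)

potentiation = stdp_delta(t_pre=10.0, t_post=15.0)                     # positive
depression = stdp_delta(t_pre=15.0, t_post=10.0)                       # negative
punished = rewarded_stdp_delta(t_pre=10.0, t_post=15.0, reward=-1.0)   # sign flipped
```

In this simplified picture, the non-rewarded rule changes weights from spike timing alone, while the rewarded rule lets the outcome of an action decide whether a timing-based change is reinforced or reversed.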

The researchers trained the network on two complementary tasks. In either task, the network learns to distinguish between reward and punishment particle patterns, with the goal of collecting as much reward as possible. Performance is measured by pattern discriminability (the ratio of consumed reward particles to punishment particles), with a chance level of 0.5. All reported results are based on at least 10 trials with different random network initializations.

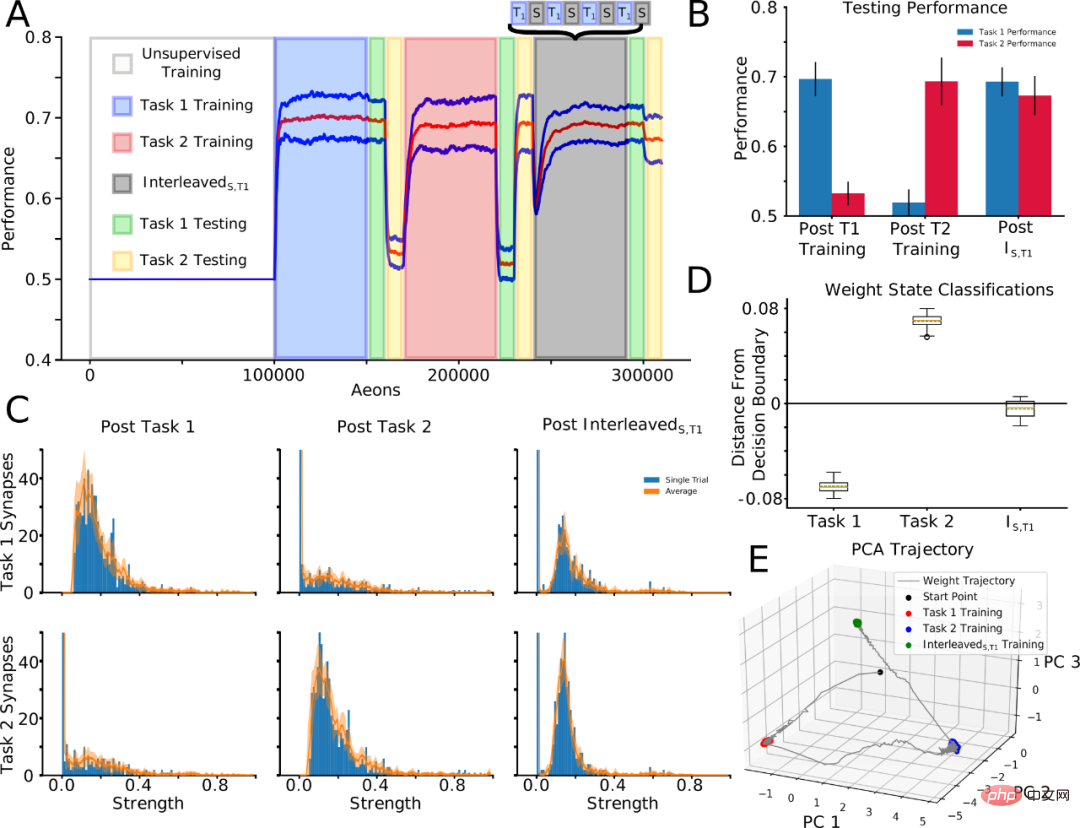

To reveal synaptic weight dynamics during training and sleep, the researchers next tracked "task-related" synapses, that is, synapses identified as being in the top 10% of the weight distribution after training on a specific task. Task 1 was trained first, then Task 2, and task-relevant synapses were identified after each round of training. Training on Task 1 was then resumed, but interleaved with sleep periods (interleaved training): T1→T2→InterleavedS,T1. Sequential training on Task 1 and then Task 2 caused Task 1 to be forgotten, but after InterleavedS,T1, Task 1 was relearned and Task 2 was also retained (Figures 4A and 4B).
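Selecting the top 10% of synapses by weight can be sketched as follows. This is an illustrative example only: the helper name `task_relevant_mask` is hypothetical, and the weight arrays are random placeholders rather than weights from a trained network.

```python
import numpy as np

def task_relevant_mask(weights, top_fraction=0.10):
    """Return a boolean mask marking synapses in the top fraction by weight."""
    threshold = np.quantile(weights, 1.0 - top_fraction)
    return weights >= threshold

rng = np.random.default_rng(0)
# Placeholder weights standing in for the network after each training phase.
w_after_t1 = rng.random(1000)
w_after_t2 = rng.random(1000)

mask_t1 = task_relevant_mask(w_after_t1)  # synapses tagged as Task 1 related
mask_t2 = task_relevant_mask(w_after_t2)  # synapses tagged as Task 2 related

# Number of synapses tagged as relevant to both tasks.
overlap = int(np.logical_and(mask_t1, mask_t2).sum())
```

Once the masks are fixed, the same indices can be used to follow how those particular weights change in later training or sleep phases.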

Importantly, this strategy makes it possible to compare synaptic weights after InterleavedS,T1 training with the synaptic weights identified as task-relevant after training on Task 1 and Task 2 alone (Figure 4C). The distribution structure of Task 1-related synapses formed after Task 1 training (Figure 4C; top left) was disrupted after Task 2 training (top middle) but partially restored after InterleavedS,T1 training (top right). The distribution structure of Task 2-related synapses after Task 2 training (bottom middle) is absent after Task 1 training (bottom left) and is partially preserved after InterleavedS,T1 training (bottom right).

It should be noted that this qualitative pattern can be clearly observed within a single trial (Figure 4C; blue bars) and also generalizes across trials (Figure 4C; orange line). Therefore, sleep can preserve important synapses while incorporating new ones.

Figure 4. Interleaved periods of new task training and sleep allow for the integration of synaptic information relevant to the new task while preserving the old task information.

"Interestingly, we did not explicitly store data related to early memories in order to artificially replay them during sleep to prevent forgetting," said study co-author Pavel Sanda, a computational neuroscientist at the Institute of Computer Science of the Czech Academy of Sciences.

The new strategy helps prevent catastrophic forgetting. The spiking neural network was able to perform both tasks after going through sleep-like stages, and the researchers believe their strategy helped preserve the synaptic patterns associated with both the old and the new tasks.

"Our work demonstrates the practicality of developing biologically inspired solutions," Delanois said.

The researchers note that their findings are not limited to spiking neural networks. Sanda said upcoming work shows that sleep-like stages could help "overcome catastrophic forgetting in standard artificial neural networks."

The study was published on November 18 in the journal PLOS Computational Biology.

Paper: "Sleep prevents catastrophic forgetting in spiking neural networks by forming a joint synaptic weight representation"

Paper address: https://journals.plos.org/ploscompbiol/article?id=10.1371/journal.pcbi.1010628
