PyTorch 1.12 has been officially released. If you have not updated yet, now is a good time to do so.

PyTorch 1.12 arrives just a few months after PyTorch 1.11. The release consists of more than 3124 commits since version 1.11, made by 433 contributors, and includes major improvements and many bug fixes.

The most discussed part of the new release is probably that PyTorch 1.12 supports the Apple M1 chip.

In fact, back in May this year, the PyTorch team announced official support for GPU-accelerated training of PyTorch machine learning models on M1 Macs. Previously, PyTorch training on a Mac could only use the CPU; with the release of PyTorch 1.12, developers and researchers can take advantage of Apple GPUs to significantly speed up model training.

GPU-accelerated training in PyTorch is implemented using Apple's Metal Performance Shaders (MPS) as the backend. The MPS backend extends the PyTorch framework, providing scripts and capabilities for setting up and running operations on a Mac. MPS optimizes compute performance with kernels that are fine-tuned for the unique characteristics of each Metal GPU family. The new device maps machine learning computational graphs and primitives onto the MPS Graph framework and the tuned kernels provided by MPS.
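As a minimal sketch of how the new device might be used in practice, the snippet below selects the `mps` device when it is available and falls back to the CPU otherwise. The helper name `pick_device` and the toy `Linear` model are illustrative assumptions, not part of the release notes.

```python
import torch


def pick_device() -> torch.device:
    """Return the MPS device if this build and machine support it, else the CPU.

    `pick_device` is a hypothetical helper written for this example.
    """
    # torch.backends.mps only exists in PyTorch 1.12 and later, so look it up defensively.
    mps_backend = getattr(torch.backends, "mps", None)
    if mps_backend is not None and mps_backend.is_available():
        return torch.device("mps")
    return torch.device("cpu")


device = pick_device()

# A toy model and batch, both placed on the selected device.
model = torch.nn.Linear(4, 2).to(device)
x = torch.randn(8, 4, device=device)

# The forward pass runs on the Apple GPU when device.type == "mps".
y = model(x)
print(device, y.shape)
```

Writing the device selection this way keeps the same script usable on machines without an Apple GPU, where it simply runs on the CPU.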

Every Mac with Apple silicon has a unified memory architecture, which gives the GPU direct access to the full memory store. The PyTorch team says this makes the Mac an excellent platform for machine learning, allowing users to train larger networks or use larger batch sizes locally. This reduces the costs associated with cloud-based development and the need for additional local GPU computing power. The unified memory architecture also reduces data retrieval latency and improves end-to-end performance.

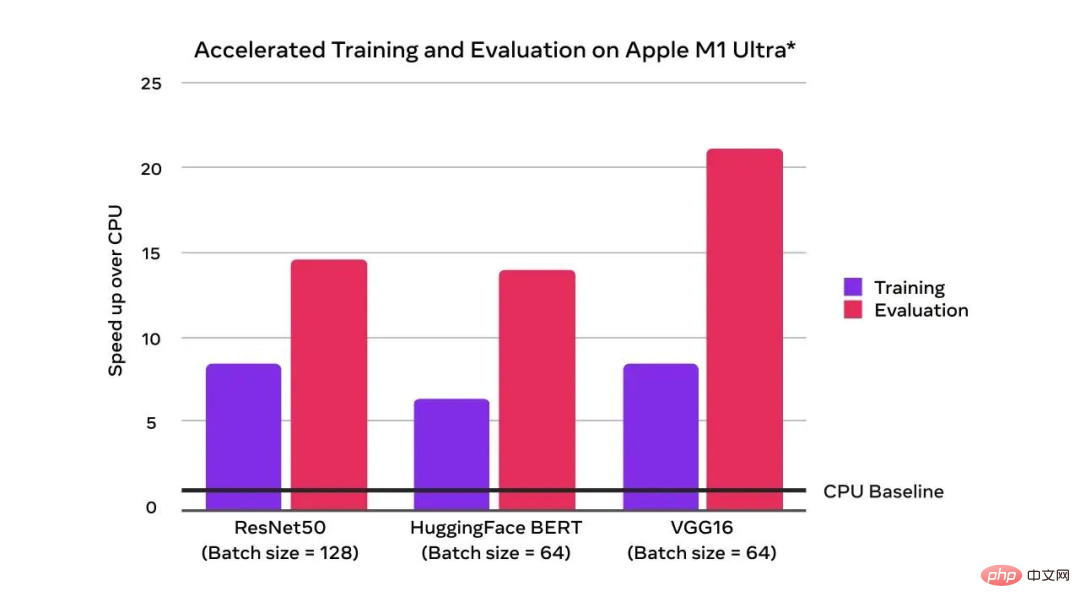

As the chart above shows, GPU acceleration makes both training and evaluation substantially faster than the CPU-only baseline.

The chart above shows results from Apple's tests on a Mac Studio system with an Apple M1 Ultra (20-core CPU, 64-core GPU), 128GB of memory, and a 2TB SSD. The test models are ResNet50 (batch size = 128), HuggingFace BERT (batch size = 64), and VGG16 (batch size = 64). Performance tests are conducted using specific computer systems and reflect the approximate performance of Mac Studio.

The PyTorch team has also released a new beta library for users to try: TorchArrow. It is a machine learning preprocessing library for batch data processing. It is high-performance, offers an easy-to-use Pandas-style API, and is intended to speed up preprocessing workflows and development.

PyTorch now natively supports complex numbers, complex autograd, complex modules, and a large number of complex operations (linear algebra and fast Fourier transforms). Complex numbers are already used in many libraries, including torchaudio and ESPNet, and PyTorch 1.12 further extends complex functionality with complex convolution and the experimental complex32 data type, which supports half-precision FFT operations. Because of a bug in the CUDA 11.3 package, users who want to work with complex numbers are officially advised to use the CUDA 11.6 package.
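A short sketch of the complex-number support described here, run on the CPU with the standard `complex64` type rather than the experimental complex32 type. The sample values and variable names are made up for illustration.

```python
import torch

# A small complex-valued signal (complex64 stores two float32 parts per element).
z = torch.tensor([1 + 2j, 3 - 4j, -2 + 0.5j, 0 - 1j], dtype=torch.complex64)

# Complex FFT followed by its inverse should round-trip back to the input.
spectrum = torch.fft.fft(z)
recovered = torch.fft.ifft(spectrum)

# Complex autograd: differentiate a real-valued loss with respect to a complex tensor.
w = z.clone().requires_grad_(True)
loss = (w.abs() ** 2).sum()  # sum of squared magnitudes, a real scalar
loss.backward()

print(recovered)
print(w.grad)  # the gradient is itself a complex tensor
```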

Forward-mode automatic differentiation (AD) computes directional derivatives (or, equivalently, Jacobian-vector products) during the forward pass. PyTorch 1.12 significantly improves the operator coverage of forward-mode AD.
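To make the idea of a Jacobian-vector product concrete, here is a minimal sketch using the `torch.autograd.forward_ad` module. The function `f` and the input values are arbitrary choices for illustration; the analytic derivative is computed alongside for comparison.

```python
import torch
import torch.autograd.forward_ad as fwAD


def f(x: torch.Tensor) -> torch.Tensor:
    # An arbitrary elementwise function chosen for this example.
    return x.sin() * x


primal = torch.tensor([0.5, 1.0, 2.0])    # point at which we differentiate
tangent = torch.tensor([1.0, 0.0, -1.0])  # direction of the derivative

with fwAD.dual_level():
    # A dual tensor carries the value together with its tangent.
    dual_input = fwAD.make_dual(primal, tangent)
    dual_output = f(dual_input)
    # Unpacking yields f(primal) and the Jacobian-vector product J @ tangent.
    value, jvp = fwAD.unpack_dual(dual_output)

# Analytic check: d/dx [sin(x) * x] = cos(x) * x + sin(x), applied elementwise.
expected_jvp = (primal.cos() * primal + primal.sin()) * tangent
print(jvp, expected_jvp)
```

The derivative is obtained in a single forward evaluation with no backward pass, which is the property that distinguishes forward mode from the usual reverse-mode `backward()` call.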

PyTorch now supports CPU and GPU fastpath implementations (BetterTransformer) for the Transformer encoder modules, including TransformerEncoder, TransformerEncoderLayer, and MultiHeadAttention (MHA). In the new version, BetterTransformer is 2x faster in many common scenarios, depending on the model and input characteristics. The new modules are API-compatible with the previous PyTorch Transformer API: existing models are accelerated if they meet the fastpath execution requirements, and models trained with previous versions of PyTorch can still be loaded.
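Because the fastpath is reached through the existing Transformer API, no new classes are needed. The sketch below builds a small encoder and runs it in inference mode, which, as far as I understand the requirements, is the kind of setting in which the fastpath can apply (evaluation mode, no gradient tracking, `batch_first=True`). The layer sizes are arbitrary, and whether the fastpath is actually taken depends on the model, the inputs, and the PyTorch build.

```python
import torch
from torch import nn

torch.manual_seed(0)

# A small encoder built with the standard Transformer API; sizes are illustrative.
layer = nn.TransformerEncoderLayer(
    d_model=64, nhead=4, dim_feedforward=128, batch_first=True
)
encoder = nn.TransformerEncoder(layer, num_layers=2)

# The fastpath targets inference, so switch to eval mode and disable autograd.
encoder.eval()
x = torch.randn(8, 16, 64)  # (batch, sequence, features) because batch_first=True

with torch.inference_mode():
    out = encoder(x)

print(out.shape)
```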

In addition, the new version has some updates:

For more information, please see: https://pytorch.org/blog/pytorch-1.12-released/.
