Could NeRF replace Deepfake? A look at how impressive this year's most popular technology really is

What, you don’t know NeRF yet?

As one of the hottest AI technologies in computer vision this year, NeRF is already widely used and has a bright future.

Users on Bilibili ("Station B") have already found new ways to use this technology.


So, what exactly is NeRF?

NeRF (Neural Radiance Fields) was first proposed in a paper that received a Best Paper Honorable Mention at ECCV 2020. It takes implicit scene representation to a new level: using only 2D images with known camera poses as supervision, it can represent complex three-dimensional scenes.

The paper made a huge splash. Since then, NeRF has developed rapidly and been applied in many technical directions, such as novel view synthesis and 3D reconstruction.

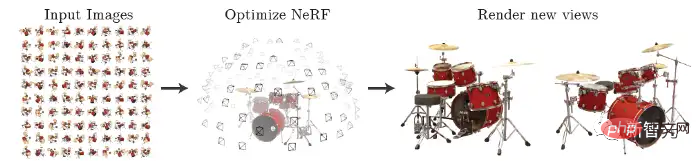

NeRF is trained on a sparse set of posed images taken from multiple angles to obtain a neural radiance field model. From this model, sharp images can be rendered from any viewpoint, as shown in the figure. In short, NeRF uses an MLP to learn a three-dimensional scene implicitly.

Naturally, netizens compare NeRF with the equally popular Deepfake.

A recent article published by Metaphysic reviewed the history, challenges, and advantages of NeRF, and predicted that NeRF will eventually replace Deepfake.

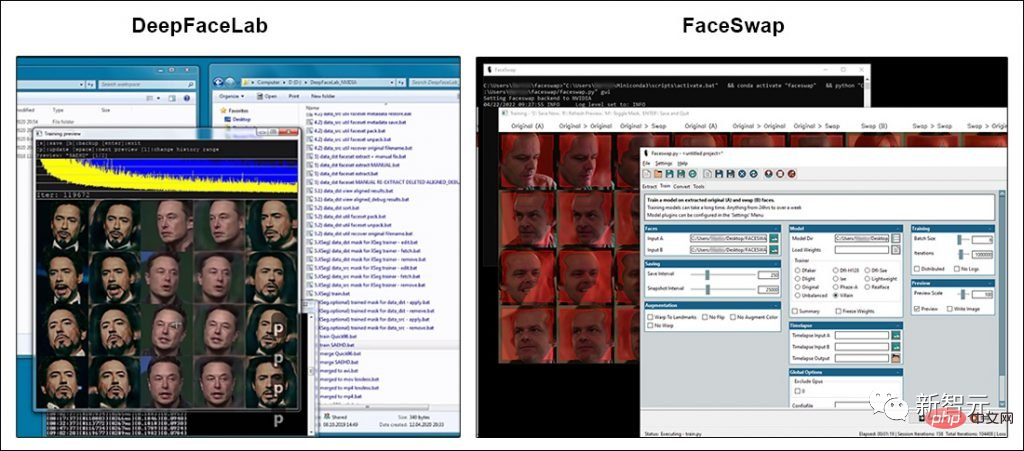

Most of the headline-grabbing discussion of deepfake technology concerns two open-source software packages that have become popular since deepfakes entered the public eye in 2017: DeepFaceLab (DFL) and FaceSwap.

Although both packages have large user bases and active developer communities, neither project has deviated significantly from the original code published on GitHub.

Of course, the developers of DFL and FaceSwap have not been idle: it is now possible to train deepfake models using larger input images, although this requires more expensive GPUs.

In fact, over the past three years, the improvements in deepfake image quality touted by the media have come mainly from end users.

They have accumulated hard-won, rare experience in data collection and in the best ways to train models (a single experiment can sometimes take weeks), and have learned how to push the original 2017 code to its outermost limits.

Some members of the VFX and ML research communities are trying to break through the "hard limits" of the popular deepfake packages by extending the architecture so that models can be trained on images of up to 1024×1024 pixels.

That is twice the resolution DeepFaceLab or FaceSwap can practically handle today, and closer to the resolutions useful in film and television production.

Let’s learn about NeRF together~

Unveiling

NeRF (Neural Radiance Fields), which appeared in 2020, is a method for reconstructing objects and environments by combining photos taken from multiple viewpoints inside a neural network.

It achieved state-of-the-art results in synthesizing novel views of complex scenes by optimizing an underlying continuous volumetric scene function from a sparse set of input views.

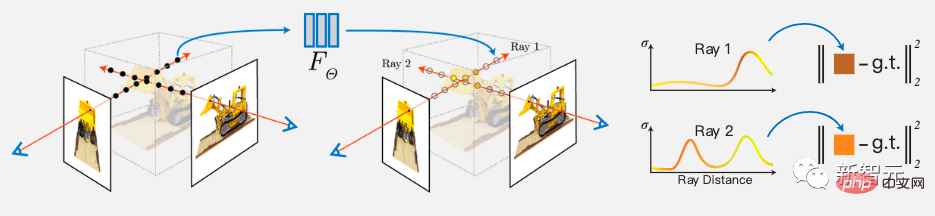

The algorithm represents a scene with a fully connected (non-convolutional) deep network whose input is a single continuous 5D coordinate (spatial position (x, y, z) and viewing direction (θ, φ)) and whose output is the volume density and view-dependent emitted radiance at that spatial location.

Views are synthesized by querying 5D coordinates along camera rays and using classic volume rendering techniques to project the output colors and densities into an image.
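The classic volume rendering step can be made concrete with the discrete quadrature rule used in the NeRF paper: Ĉ(r) = Σᵢ Tᵢ (1 − exp(−σᵢ δᵢ)) cᵢ, with Tᵢ = exp(−Σ_{j<i} σⱼ δⱼ), where δᵢ is the distance between adjacent samples along the ray. Below is a minimal pure-Python sketch for a single ray. The helper name `render_ray` is hypothetical, and assigning a very large gap (`far_delta`) to the last sample is an assumption of this sketch rather than something the article specifies.

```python
import math

def render_ray(t_vals, sigmas, colors, far_delta=1e10):
    """Alpha-composite samples along one ray (NeRF-style quadrature).

    t_vals: sorted sample distances; sigmas: densities (>= 0);
    colors: one (r, g, b) tuple per sample. Returns (rgb, weights).
    far_delta is an assumed stand-in gap for the last sample.
    """
    n = len(t_vals)
    # delta_i: gap to the next sample; the last sample gets a huge gap.
    deltas = [t_vals[i + 1] - t_vals[i] for i in range(n - 1)] + [far_delta]
    rgb = [0.0, 0.0, 0.0]
    weights = []
    transmittance = 1.0  # T_i = exp(-sum_{j<i} sigma_j * delta_j)
    for sigma, delta, color in zip(sigmas, deltas, colors):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        w = transmittance * alpha
        weights.append(w)
        for k in range(3):
            rgb[k] += w * color[k]
        transmittance *= 1.0 - alpha  # multiply in exp(-sigma_i * delta_i)
    return rgb, weights

# Usage: two half-opaque white samples in front of empty space.
ln2 = math.log(2.0)
rgb, weights = render_ray([0.0, 1.0, 2.0], [ln2, ln2, 0.0], [(1.0, 1.0, 1.0)] * 3)
```

Here each white sample absorbs half of the light that reaches it, so the weights come out at approximately 0.5 and 0.25 and every color channel at approximately 0.75. The same weights are what a hierarchical sampler would reuse to decide where to place extra samples.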

Implementation process:

First, a continuous scene is represented as a 5D vector-valued function whose input is a 3D position x = (x, y, z) and a 2D viewing direction (θ, φ), and whose output is an emitted color c = (r, g, b) and a volume density σ.

In practice, the direction is represented as a 3D Cartesian unit vector d. This continuous 5D scene representation is approximated with an MLP network whose weights are optimized so that each input coordinate maps to its corresponding volume density and directional emitted color.
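As a small sketch of the two direction representations mentioned above, the helpers below convert spherical angles (θ, φ) into a unit vector d and normalize an arbitrary ray direction to unit length. Both function names are hypothetical, and the angle convention (θ measured from the +z axis, φ measured in the x-y plane) is an assumption, since the article does not fix one.

```python
import math

def direction_from_angles(theta, phi):
    """Spherical viewing angles -> 3D Cartesian unit vector d.

    Assumed convention: theta is the polar angle from +z,
    phi is the azimuth in the x-y plane.
    """
    return (math.sin(theta) * math.cos(phi),
            math.sin(theta) * math.sin(phi),
            math.cos(theta))

def normalize(v):
    """Scale a ray direction (e.g. from a camera model) to unit length."""
    norm = math.sqrt(sum(c * c for c in v))
    return tuple(c / norm for c in v)

# Usage: both routes end at a vector of length 1.
d1 = direction_from_angles(0.3, 1.2)
d2 = normalize((3.0, 0.0, 4.0))
```

Normalizing the camera ray direction is usually the more convenient route, because the angles never have to be computed explicitly.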

Additionally, the representation is encouraged to be consistent across multiple views by restricting the network to predict the volume density σ as a function of the position x only, while allowing the RGB color c to be predicted as a function of both position and viewing direction.

To achieve this, the MLP first processes the input 3D coordinates x with 8 fully connected layers (using ReLU activations and 256 channels per layer) and outputs σ and a 256-dimensional feature vector.

This feature vector is then concatenated with the viewing direction of the camera ray and passed to an additional fully connected layer that outputs the view-dependent RGB color.
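The layer layout described above can be sketched as an untrained forward pass in plain Python. This is a simplified illustration, not the reference implementation: it uses random weights, omits the skip connection used in the original network, and takes raw (not positionally encoded) inputs. Following the NeRF paper, the view-dependent branch uses one 128-channel ReLU layer before a sigmoid RGB output. The ReLU on σ (to keep density non-negative), the initialization, and all names (`NeRFMLPSketch`, `dense`, `init_layer`) are assumptions of this sketch.

```python
import math
import random

def dense(inputs, weights, biases):
    """Fully connected layer; weights holds one row per output unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def relu(v):
    return [max(0.0, x) for x in v]

def sigmoid(v):
    # Numerically stable logistic function.
    out = []
    for x in v:
        if x >= 0:
            out.append(1.0 / (1.0 + math.exp(-x)))
        else:
            e = math.exp(x)
            out.append(e / (1.0 + e))
    return out

def init_layer(n_in, n_out, rng):
    # He-style random init; an arbitrary choice for this sketch.
    scale = math.sqrt(2.0 / n_in)
    weights = [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

class NeRFMLPSketch:
    """Untrained forward pass with the layer layout described in the text."""

    def __init__(self, pos_dim=3, dir_dim=3, depth=8, width=256,
                 head_width=128, seed=0):
        rng = random.Random(seed)
        self.trunk = []
        n_in = pos_dim
        for _ in range(depth):  # 8 x (256-channel FC + ReLU)
            self.trunk.append(init_layer(n_in, width, rng))
            n_in = width
        self.sigma_head = init_layer(width, 1, rng)        # density sigma
        self.feature_head = init_layer(width, width, rng)  # 256-d feature
        self.view_layer = init_layer(width + dir_dim, head_width, rng)
        self.rgb_head = init_layer(head_width, 3, rng)

    def __call__(self, x, d):
        h = list(x)
        for weights, biases in self.trunk:
            h = relu(dense(h, weights, biases))
        # sigma depends on position only; ReLU keeps it non-negative.
        sigma = relu(dense(h, *self.sigma_head))[0]
        feature = dense(h, *self.feature_head)
        # Color additionally sees the viewing direction d.
        h = relu(dense(feature + list(d), *self.view_layer))
        rgb = sigmoid(dense(h, *self.rgb_head))
        return sigma, rgb

# Usage: same position, two viewing directions.
model = NeRFMLPSketch()
s1, c1 = model((0.1, 0.2, 0.3), (0.0, 0.0, 1.0))
s2, c2 = model((0.1, 0.2, 0.3), (1.0, 0.0, 0.0))
```

Because σ is computed before the direction is concatenated, the two calls return the same density, while the colors are free to differ. This is the multi-view consistency constraint from the text expressed in code.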

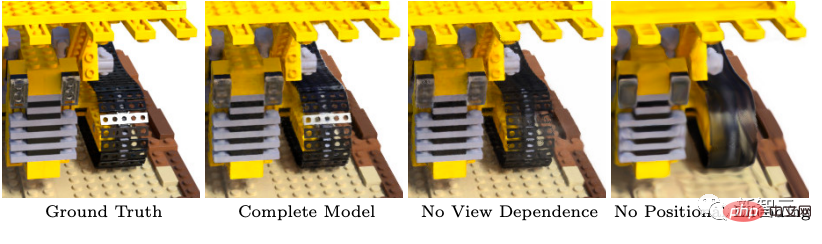

In addition, NeRF introduces two improvements that make it possible to represent high-resolution complex scenes. The first is positional encoding, which helps the MLP represent high-frequency functions. The second is a hierarchical sampling procedure, which lets it sample this high-frequency representation efficiently.

In the Transformer architecture, positional encoding supplies the discrete position of each token in a sequence as input to the whole architecture. NeRF instead uses positional encoding to map continuous input coordinates into a higher-dimensional space, making it easier for the MLP to approximate higher-frequency functions.
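In the NeRF paper, the encoding applied to each coordinate p is γ(p) = (sin(2⁰πp), cos(2⁰πp), …, sin(2^{L−1}πp), cos(2^{L−1}πp)), with L = 10 for the position x and L = 4 for the direction d. The function below is a minimal sketch of that formula. The name `positional_encoding` is hypothetical, and the ordering of the output features is one arbitrary but consistent choice.

```python
import math

def positional_encoding(p, num_freqs):
    """gamma(p): map each coordinate to sin/cos features at 2^k * pi."""
    out = []
    for value in p:
        for k in range(num_freqs):
            freq = (2.0 ** k) * math.pi
            out.append(math.sin(freq * value))
            out.append(math.cos(freq * value))
    return out

# Usage with the frequency counts from the paper.
enc_x = positional_encoding((0.5, -0.2, 0.1), num_freqs=10)  # 3 * 2 * 10 values
enc_d = positional_encoding((0.0, 0.0, 1.0), num_freqs=4)    # 3 * 2 * 4 values
```

A 3D position therefore becomes a 60-dimensional input and a direction a 24-dimensional one. The higher frequencies let small changes in position produce large changes in the features, which is what the MLP needs to fit fine geometry and texture.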

As the figure shows, removing positional encoding greatly reduces the model's ability to represent high-frequency geometry and texture, ultimately producing an over-smoothed appearance.

Densely evaluating the neural radiance field network at N query points along each camera ray is very inefficient, because free space and occluded regions that contribute nothing to the rendered image are still sampled repeatedly. NeRF therefore adopts a hierarchical representation that improves rendering efficiency by allocating samples in proportion to their expected effect on the final rendering.
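The allocation strategy above can be sketched in two steps. A coarse pass draws stratified samples (one uniform random point in each of N equal-width bins along the ray). A fine pass then treats the coarse compositing weights as a piecewise-constant PDF and draws additional points by inverse transform sampling, so most new samples land where the coarse pass found visible content. The helper names, the small `eps` added to every weight, and the example weights are all hypothetical choices for this sketch.

```python
import bisect
import random

def stratified_samples(t_near, t_far, n, rng):
    """Coarse pass: draw one uniform sample inside each of n equal-width bins."""
    width = (t_far - t_near) / n
    return [t_near + (i + rng.random()) * width for i in range(n)]

def sample_pdf(bin_edges, weights, n, rng, eps=1e-5):
    """Fine pass: inverse transform sampling from a piecewise-constant PDF.

    bin_edges: len(weights) + 1 sorted distances along the ray.
    weights: per-bin compositing weights from the coarse network.
    """
    weights = [w + eps for w in weights]  # keep every bin's probability non-zero
    total = sum(weights)
    cdf = [0.0]
    for w in weights:
        cdf.append(cdf[-1] + w / total)
    samples = []
    for _ in range(n):
        u = rng.random()
        # Locate the bin whose CDF interval contains u (clamped for rounding).
        i = min(bisect.bisect_right(cdf, u) - 1, len(weights) - 1)
        frac = (u - cdf[i]) / (cdf[i + 1] - cdf[i])
        frac = min(max(frac, 0.0), 1.0)
        # Place the sample linearly inside bin i.
        samples.append(bin_edges[i] + frac * (bin_edges[i + 1] - bin_edges[i]))
    return sorted(samples)

# Usage with hypothetical coarse weights concentrated around t = 4.
rng = random.Random(0)
edges = [2.0 + 0.5 * i for i in range(9)]
coarse_t = stratified_samples(2.0, 6.0, 8, rng)
coarse_weights = [0.0, 0.0, 0.0, 0.1, 0.8, 0.1, 0.0, 0.0]
fine_t = sample_pdf(edges, coarse_weights, 16, rng)
all_t = sorted(coarse_t + fine_t)  # the fine network sees both sets
```

Almost all of the 16 fine samples fall between t = 3.5 and t = 5.0, where the coarse weights are concentrated, instead of being spread evenly over the whole ray.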

In short, instead of representing the scene with a single network, NeRF optimizes two networks at the same time: a "coarse" network and a "fine" network.
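To make "optimizes two networks at the same time" concrete, the NeRF paper trains both networks jointly with a single loss: the sum of squared errors between the ground-truth pixel color C(r) and the coarse rendering Ĉ_c(r) and fine rendering Ĉ_f(r), taken over a batch of camera rays ℛ. The fine rendering uses both the coarse and the fine sample points.

```latex
\mathcal{L} = \sum_{\mathbf{r} \in \mathcal{R}} \Big[ \big\lVert \hat{C}_c(\mathbf{r}) - C(\mathbf{r}) \big\rVert_2^2 + \big\lVert \hat{C}_f(\mathbf{r}) - C(\mathbf{r}) \big\rVert_2^2 \Big]
```

Keeping the coarse term in the loss matters, because the coarse network's weights are what decide where the fine samples are placed.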

The future is promising

NeRF overcomes the shortcomings of earlier approaches by using an MLP to represent objects and scenes as continuous functions. Compared with previous methods, NeRF produces better rendering results.

However, NeRF still faces many technical bottlenecks. For example, NeRF accelerators sacrifice other useful properties (such as flexibility) to achieve lower latency, more interactive environments, and shorter training times.

So although NeRF is a key breakthrough, it will still take time for it to achieve perfect results.

Technology is progressing, and the future is still promising!
