'Sparse coding' moves from theory to practice! Professor Ma Yi's new work in NeurIPS 2022: Sparse convolution performance and robustness surpass ResNet

Although deep neural networks show strong empirical performance in image classification, such models are often regarded as "black boxes" and are most often criticized for being difficult to explain.

In contrast, sparse convolutional models are also powerful tools for analyzing natural images. They assume that a signal can be expressed as a linear combination of a few elements of a convolutional dictionary, which gives them good theoretical interpretability and biological plausibility.

In practical applications, however, although sparse convolutional models work in principle, they have not shown the performance advantages they should when compared with empirically designed deep networks.

Recently, Professor Ma Yi's research group published a new paper at NeurIPS 2022 that revisits the application of sparse convolutional models to image classification and successfully resolves the mismatch between their empirical performance and their interpretability.

Paper link: https://arxiv.org/pdf/2210.12945.pdf

Code link: https://github.com/Delay-Xili/SDNet

The paper proposes a differentiable optimization layer that uses convolutional sparse coding (CSC) to replace the standard convolutional layer.

The results show that compared with traditional neural networks, these models have equally strong empirical performance on CIFAR-10, CIFAR-100 and ImageNet datasets.

By exploiting the robust recovery properties of sparse modeling, the researchers further show that, with only a simple and appropriate trade-off between the sparse regularization term and the data reconstruction term, these models can be more robust to input corruption and adversarial perturbations at test time.

Professor Ma Yi received a double bachelor's degree in automation and applied mathematics from Tsinghua University in 1995. He then studied at the University of California, Berkeley, USA, where he obtained a master's degree in EECS in 1997, followed by a master's degree in mathematics and a doctorate in EECS in 2000.

After graduation, he taught at the University of Illinois at Urbana-Champaign and became the youngest associate professor in the history of the Department of Electrical and Computer Engineering.

In 2009, he served as a senior researcher in the Visual Computing Group of Microsoft Research Asia. In 2014, he joined the School of Information Science and Technology of ShanghaiTech University full-time.

He joined the University of California, Berkeley and the Tsinghua-Berkeley Shenzhen Institute in 2018. He is currently a professor in the Department of Electrical Engineering and Computer Science at the University of California, Berkeley, and is also an IEEE Fellow, ACM Fellow, and SIAM Fellow.

Professor Ma Yi’s research interests include 3D computer vision, low-dimensional models for high-dimensional data, scalable optimization, and machine learning. Recent research topics include large-scale 3D geometric reconstruction and interaction, and the relationship between low-dimensional models and deep networks.

Sparse Convolution

Although deep convolutional networks (ConvNets) have become the mainstream method for image classification and surpass other models in performance, the specific data-related meaning of their internal components, such as convolutions, nonlinear functions, and normalization, has not yet been explained.

Sparse data modeling, supported by its ability to learn interpretable representations and by strong theoretical guarantees (such as the handling of corrupted data), has been widely used in many signal and image processing applications, but its classification performance on datasets such as ImageNet is still not as good as that of empirical deep models.

Even sparse models with strong performance still have defects:

1) The network structure needs to be specially designed, which limits the applicability of the model;

2) The calculation speed of training is several orders of magnitude slower;

3) It does not show obvious advantages in interpretability and robustness.

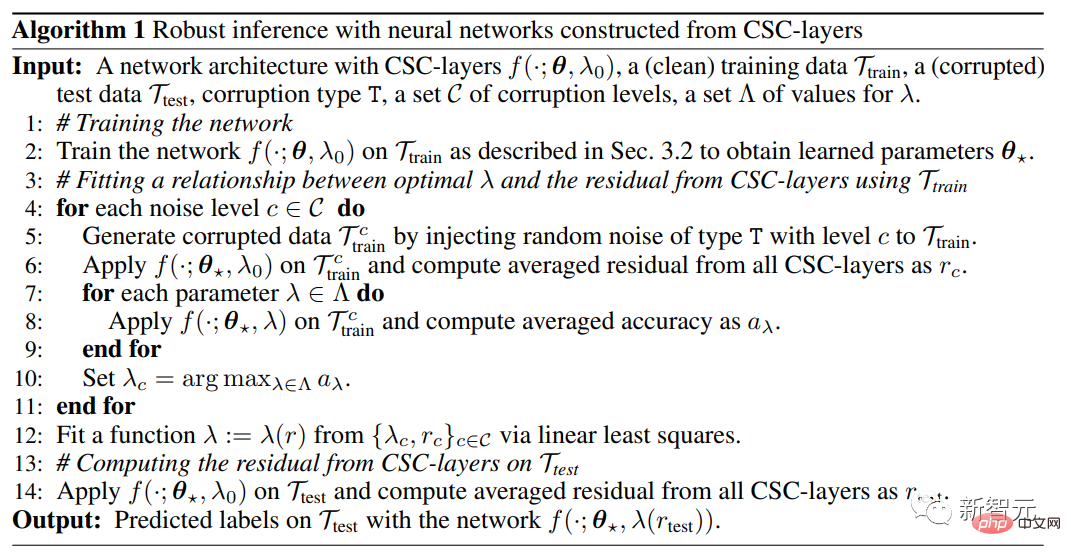

In this paper, the researchers propose a visual recognition framework and demonstrate that sparse modeling can be combined with deep learning through a simple design. The framework assumes that the input to a layer can be represented by a few atoms from a dictionary shared by all data points. It achieves the same performance as standard ConvNets while offering better layer-wise interpretability and stability.

This method encapsulates sparse modeling in an implicit layer and uses it as a replacement for the convolutional layer in standard ConvNets.

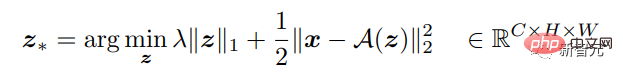

In contrast to the explicit function used in a classic fully connected or convolutional layer, an implicit layer uses an implicit function. The implicit layer in this paper is defined by an optimization problem that depends on the layer's input and weight parameters, and the output of the layer is the solution to that optimization problem.

Given a multidimensional input signal, the function of the layer can be defined as an inverse mapping to a preferably sparse output, whose number of channels may differ from that of the input. The output is an optimal sparse solution of a Lasso-type optimization problem.

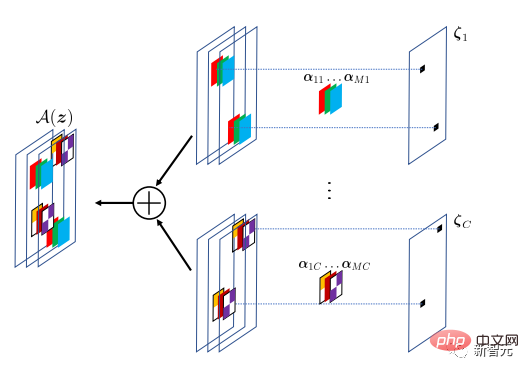
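For readers without access to the figures, a Lasso-type objective of the kind described here can be written as follows. This is a sketch in the standard Lasso form, not a transcription of the paper's equation: the symbols $x$ (layer input), $z$ (sparse feature map), $\mathcal{A}$ (convolutional dictionary operator), $\alpha_c$ (the filters), and $\lambda$ (the sparsity weight) follow the notation used in this article, and the exact scaling of the two terms is an assumption.

```latex
z^{\star}(x) \;=\; \arg\min_{z} \;\; \lambda \,\lVert z \rVert_{1}
  \;+\; \frac{1}{2}\,\bigl\lVert x - \mathcal{A}(z) \bigr\rVert_{2}^{2},
\qquad
\mathcal{A}(z) \;=\; \sum_{c} \alpha_{c} * z_{c}
```

The first term encourages a sparse feature map, and the second term measures how well the dictionary reconstructs the input; $\lambda$ sets the trade-off between the two.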

The implicit layer implements the convolutional sparse coding (CSC) model, in which the input signal is approximated by a sparse linear combination of atoms in a convolutional dictionary. This convolutional dictionary can be regarded as the parameters of the CSC layer and is trained through backpropagation.

The goal of the CSC model is to reconstruct the input signal through the operator A(z), where the feature map z specifies the positions and values of the convolution filters in A. To tolerate modeling discrepancies, the reconstruction does not need to be exact.

Based on the input-output mapping defined by the CSC layer, forward propagation is performed by solving the associated optimization problem, and backpropagation is performed by deriving the gradient of the optimal coefficient solution with respect to the input x and the parameters A.
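To make "forward propagation by solving an optimization problem" concrete, here is a minimal sketch that solves a Lasso problem with ISTA (iterative soft-thresholding). Several things are assumptions made for illustration: the dictionary is a small dense random matrix `A` rather than a convolutional dictionary, ISTA is used as a generic solver rather than whatever solver the SDNet code uses, and the names `ista`, `soft_threshold`, and `lasso_objective` are hypothetical.

```python
import numpy as np

def soft_threshold(v, tau):
    """Proximal operator of tau * ||.||_1 (element-wise shrinkage)."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)

def lasso_objective(A, x, z, lam):
    """0.5 * ||x - A z||^2 + lam * ||z||_1."""
    r = x - A @ z
    return 0.5 * float(r @ r) + lam * float(np.abs(z).sum())

def ista(A, x, lam, n_iter=200):
    """Approximately solve the Lasso problem by iterative soft-thresholding."""
    L = np.linalg.norm(A, 2) ** 2          # Lipschitz constant of the smooth term
    z = np.zeros(A.shape[1])
    for _ in range(n_iter):
        grad = A.T @ (A @ z - x)           # gradient of the reconstruction term
        z = soft_threshold(z - grad / L, lam / L)
    return z

# Toy data: a dense stand-in dictionary with unit-norm atoms (assumption).
rng = np.random.default_rng(0)
A = rng.standard_normal((20, 50))
A /= np.linalg.norm(A, axis=0)
z_true = np.zeros(50)
z_true[[3, 17, 41]] = [1.5, -2.0, 1.0]     # the input uses only three atoms
x = A @ z_true

z_hat = ista(A, x, lam=0.1)                # "forward pass" of the layer
print("objective at zero:", lasso_objective(A, x, np.zeros(50), 0.1))
print("objective at z_hat:", lasso_objective(A, x, z_hat, 0.1))
print("nonzero coefficients:", int(np.count_nonzero(z_hat)))
```

In a convolutional setting, the products `A @ z` and `A.T @ residual` would be replaced by a convolution and its adjoint, which is what allows such a layer to run efficiently on parallel hardware.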

The entire network with CSC layers can then be trained end to end from labeled data by minimizing the cross-entropy loss.

Experimental results

Classification performance comparison

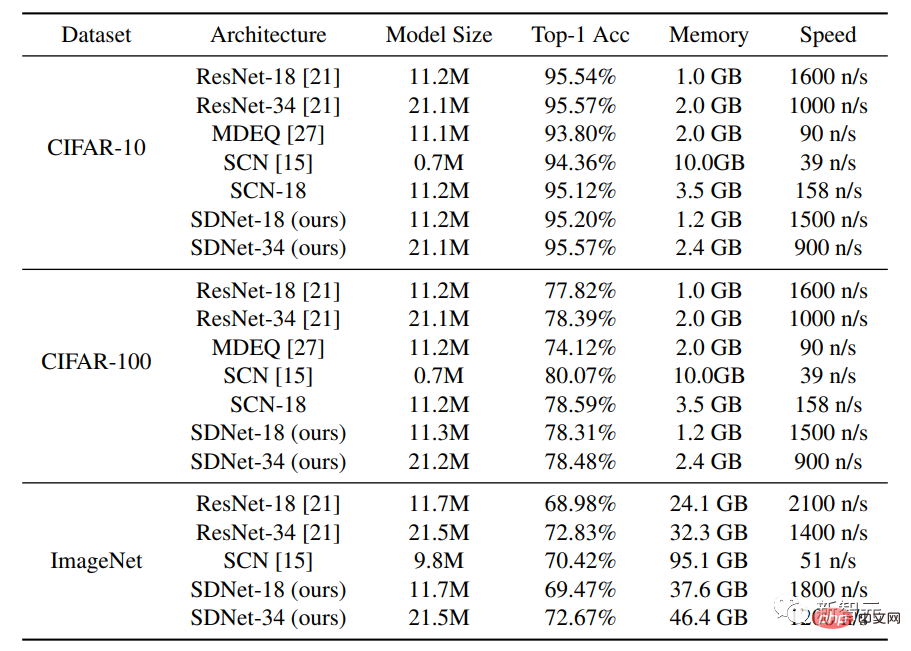

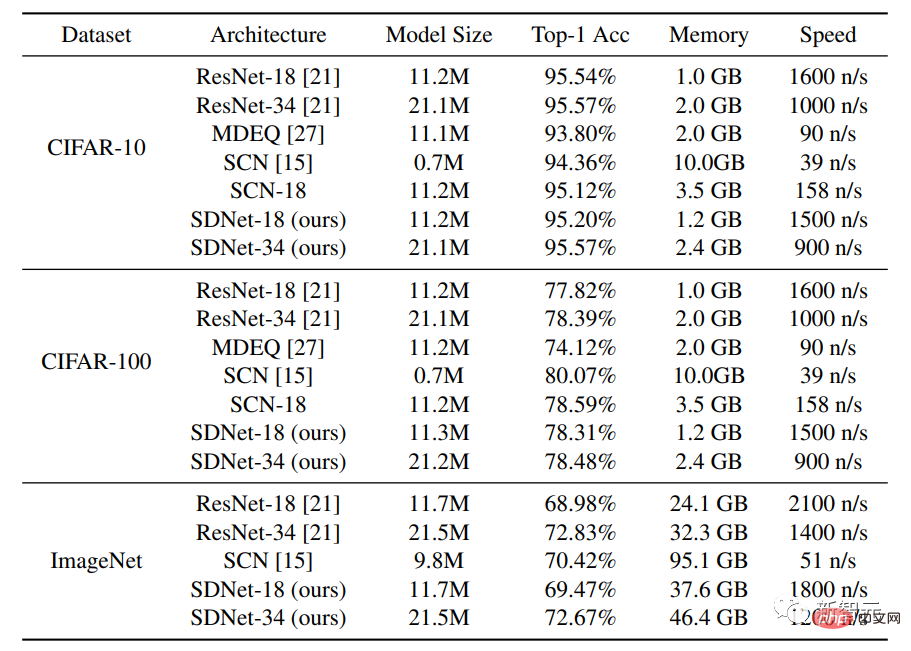

The datasets used in the experiments are CIFAR-10 and CIFAR-100. Each dataset contains 50,000 training images and 10,000 test images, and each image is 32 × 32 pixels with RGB channels.

In addition to comparing the method with the standard network architectures ResNet-18 and ResNet-34, the researchers also compared it with the MDEQ model, which has an implicit-layer architecture, and with SCN, which has a sparse modeling architecture.

The experimental results show that, at a similar model size, the Top-1 accuracy of SDNet-18/34 is similar to or higher than that of ResNet-18/34, with a similar inference speed. The results demonstrate the potential of this network as a powerful alternative to existing data-driven models, since SDNet models have additional advantages in handling corrupted images.

Comparing SDNet-18 with an MDEQ model of similar size shows that SDNet-18 is not only more accurate than MDEQ but also much faster (>7 times). It should be noted that MDEQ cannot handle corrupted data in the way SDNet does.

SCN, which also uses sparse modeling, does reach a good Top-1 accuracy, but an important shortcoming of SCN is that its training is very slow. The reason may be that, compared with the convolutional sparse coding model, the sparse coding model used by SCN needs to solve more sparse coding problems in each forward pass and cannot benefit from parallel computing.

Robust inference handles input perturbations

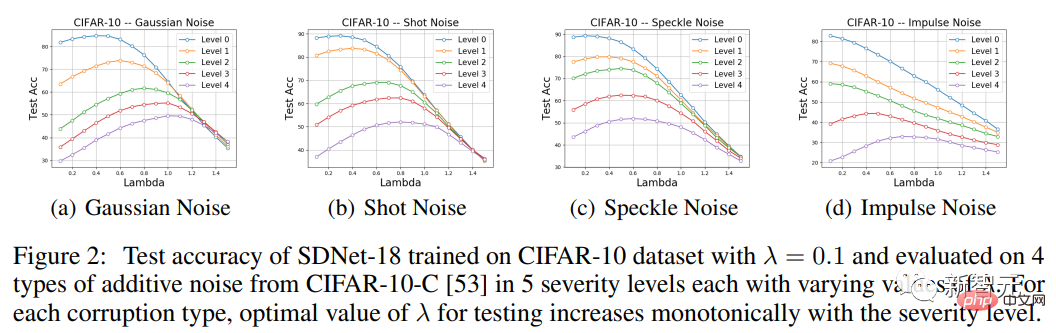

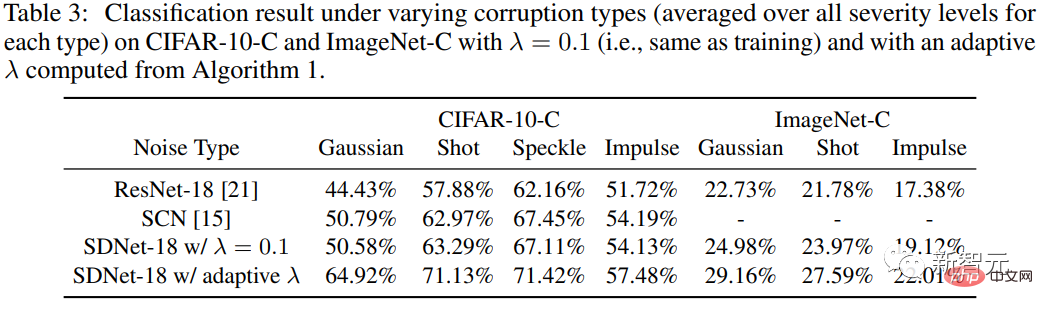

To test the method’s robustness to input perturbations, the researchers used the CIFAR-10-C dataset, in which the data is corrupted by different types of synthetic noise at varying severities.

Since the CSC layer in the model penalizes the entry-wise difference between the input signal and the reconstructed signal, SDNet should theoretically be more suitable for processing additive noise.

The experiments therefore focus on the four types of additive noise in CIFAR-10-C, namely Gaussian noise, shot noise, speckle noise, and impulse noise. They evaluate the accuracy of SDNet-18 and compare its performance with that of ResNet-18.

It can be seen that for the various noise types and severity levels (except impulse noise at levels 0, 1 and 2), appropriately choosing a λ value different from the one used during training can help improve test performance.

Specifically, the accuracy curve as a function of λ exhibits a unimodal shape, with performance first increasing and then decreasing. Furthermore, for each corruption type, the λ value at which performance peaks increases monotonically with the severity of the corruption, an observation consistent with expectations.
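The trade-off behind this unimodal curve can be reproduced in a toy setting. The sketch below assumes an identity dictionary, for which the Lasso solution has the closed form of element-wise soft-thresholding, and uses a hand-picked sparse signal and noise vector; none of these values come from the paper, and recovery error of the sparse code is used here as a stand-in for classification accuracy.

```python
import numpy as np

def soft_threshold(v, lam):
    """Closed-form Lasso solution when the dictionary is the identity."""
    return np.sign(v) * np.maximum(np.abs(v) - lam, 0.0)

# Hypothetical sparse code and small additive noise (illustrative values only).
z_clean = np.array([5.0, 0.0, 0.0, -4.0, 0.0, 0.0])
noise = np.array([0.3, -0.2, 0.25, 0.1, -0.3, 0.2])
x_noisy = z_clean + noise

def recovery_error(lam):
    """Squared error between the recovered code and the clean code."""
    return float(np.sum((soft_threshold(x_noisy, lam) - z_clean) ** 2))

errors = {lam: recovery_error(lam) for lam in (0.0, 0.3, 2.0)}
for lam, err in errors.items():
    print(f"lambda={lam}: squared error={err:.4f}")
```

With λ = 0 the noise passes through untouched, a moderate λ removes the noise on the inactive entries, and a very large λ also shrinks the true coefficients, so the error first falls and then rises. A noisier input would push the best λ higher, which matches the trend reported above.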

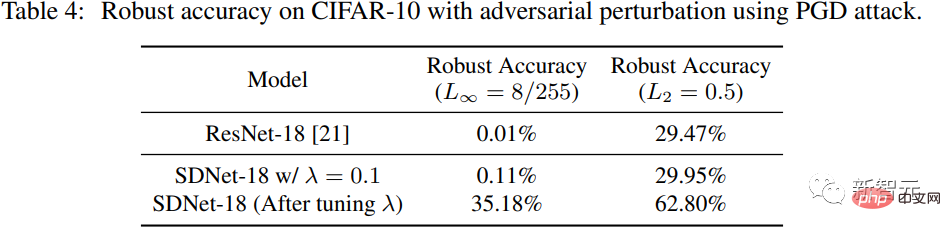

Dealing with adversarial perturbations

The researchers used PGD on SDNet (λ = 0.1) to generate adversarial perturbations, with an L∞ norm of 8/255 and an L2 norm of 0.5.
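As a reminder of how PGD works, the following sketch runs an L∞ projected gradient attack against a toy logistic-regression classifier. Only the budget of 8/255 comes from the text; the model, its weights, the input, the step size of 2/255, and the number of steps are all assumptions chosen for illustration, and the attack in the paper is run against SDNet itself, not against a linear model.

```python
import numpy as np

def logistic_loss(w, b, x, y):
    """Loss log(1 + exp(-y * (w.x + b))) for a label y in {-1, +1}."""
    margin = y * (w @ x + b)
    return float(np.log1p(np.exp(-margin)))

def loss_grad_x(w, b, x, y):
    """Gradient of the logistic loss with respect to the input x."""
    margin = y * (w @ x + b)
    return -y * w / (1.0 + np.exp(margin))

def pgd_linf(w, b, x0, y, eps=8 / 255, step=2 / 255, n_steps=10):
    """Ascend the loss with signed gradient steps, projecting onto the L-inf ball."""
    x = x0.copy()
    for _ in range(n_steps):
        x = x + step * np.sign(loss_grad_x(w, b, x, y))
        x = np.clip(x, x0 - eps, x0 + eps)  # stay within eps of the clean input
        x = np.clip(x, 0.0, 1.0)            # stay within the valid pixel range
    return x

# Hypothetical classifier and input (not taken from the paper).
w = np.array([1.0, -2.0, 0.5, 3.0])
b = -0.5
x0 = np.array([0.2, 0.4, 0.6, 0.8])
y = 1.0

x_adv = pgd_linf(w, b, x0, y)
print("clean loss:", logistic_loss(w, b, x0, y))
print("adversarial loss:", logistic_loss(w, b, x_adv, y))
print("max perturbation:", float(np.max(np.abs(x_adv - x0))))
```

An L2 variant would normalize the gradient instead of taking its sign and would project onto an L2 ball of the chosen radius.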

Compared with ResNet-18, SDNet’s performance at λ = 0.1 is not much better, but its robust accuracy can be greatly improved by adjusting the parameter λ.

The above is the detailed content of 'Sparse coding' moves from theory to practice! Professor Ma Yi's new work in NeurIPS 2022: Sparse convolution performance and robustness surpass ResNet. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1381

1381

52

52

New feature in PHP version 5.4: How to use callable type hint parameters to accept callable functions or methods

Jul 29, 2023 pm 09:19 PM

New feature in PHP version 5.4: How to use callable type hint parameters to accept callable functions or methods

Jul 29, 2023 pm 09:19 PM

New feature of PHP5.4 version: How to use callable type hint parameters to accept callable functions or methods Introduction: PHP5.4 version introduces a very convenient new feature - you can use callable type hint parameters to accept callable functions or methods . This new feature allows functions and methods to directly specify the corresponding callable parameters without additional checks and conversions. In this article, we will introduce the use of callable type hints and provide some code examples,

What do product parameters mean?

Jul 05, 2023 am 11:13 AM

What do product parameters mean?

Jul 05, 2023 am 11:13 AM

Product parameters refer to the meaning of product attributes. For example, clothing parameters include brand, material, model, size, style, fabric, applicable group, color, etc.; food parameters include brand, weight, material, health license number, applicable group, color, etc.; home appliance parameters include brand, size, color , place of origin, applicable voltage, signal, interface and power, etc.

PHP Warning: Solution to in_array() expects parameter

Jun 22, 2023 pm 11:52 PM

PHP Warning: Solution to in_array() expects parameter

Jun 22, 2023 pm 11:52 PM

During the development process, we may encounter such an error message: PHPWarning: in_array()expectsparameter. This error message will appear when using the in_array() function. It may be caused by incorrect parameter passing of the function. Let’s take a look at the solution to this error message. First, you need to clarify the role of the in_array() function: check whether a value exists in the array. The prototype of this function is: in_a

i9-12900H parameter evaluation list

Feb 23, 2024 am 09:25 AM

i9-12900H parameter evaluation list

Feb 23, 2024 am 09:25 AM

i9-12900H is a 14-core processor. The architecture and technology used are all new, and the threads are also very high. The overall work is excellent, and some parameters have been improved. It is particularly comprehensive and can bring users Excellent experience. i9-12900H parameter evaluation review: 1. i9-12900H is a 14-core processor, which adopts the q1 architecture and 24576kb process technology, and has been upgraded to 20 threads. 2. The maximum CPU frequency is 1.80! 5.00ghz, which mainly depends on the workload. 3. Compared with the price, it is very suitable. The price-performance ratio is very good, and it is very suitable for some partners who need normal use. i9-12900H parameter evaluation and performance running scores

C++ function parameter type safety check

Apr 19, 2024 pm 12:00 PM

C++ function parameter type safety check

Apr 19, 2024 pm 12:00 PM

C++ parameter type safety checking ensures that functions only accept values of expected types through compile-time checks, run-time checks, and static assertions, preventing unexpected behavior and program crashes: Compile-time type checking: The compiler checks type compatibility. Runtime type checking: Use dynamic_cast to check type compatibility, and throw an exception if there is no match. Static assertion: Assert type conditions at compile time.

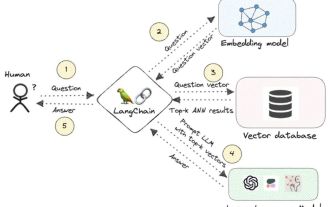

Knowledge graph: the ideal partner for large models

Jan 29, 2024 am 09:21 AM

Knowledge graph: the ideal partner for large models

Jan 29, 2024 am 09:21 AM

Large language models (LLMs) have the ability to generate smooth and coherent text, bringing new prospects to areas such as artificial intelligence conversation and creative writing. However, LLM also has some key limitations. First, their knowledge is limited to patterns recognized from training data, lacking a true understanding of the world. Second, reasoning skills are limited and cannot make logical inferences or fuse facts from multiple data sources. When faced with more complex and open-ended questions, LLM's answers may become absurd or contradictory, known as "illusions." Therefore, although LLM is very useful in some aspects, it still has certain limitations when dealing with complex problems and real-world situations. In order to bridge these gaps, retrieval-augmented generation (RAG) systems have emerged in recent years. The core idea is

Several common encoding methods

Oct 24, 2023 am 10:09 AM

Several common encoding methods

Oct 24, 2023 am 10:09 AM

Common encoding methods include ASCII encoding, Unicode encoding, UTF-8 encoding, UTF-16 encoding, GBK encoding, etc. Detailed introduction: 1. ASCII encoding is the earliest character encoding standard, using 7-bit binary numbers to represent 128 characters, including English letters, numbers, punctuation marks, control characters, etc.; 2. Unicode encoding is a method used to represent all characters in the world The standard encoding method of characters, which assigns a unique digital code point to each character; 3. UTF-8 encoding, etc.

C++ program to find the value of the inverse hyperbolic sine function taking a given value as argument

Sep 17, 2023 am 10:49 AM

C++ program to find the value of the inverse hyperbolic sine function taking a given value as argument

Sep 17, 2023 am 10:49 AM

Hyperbolic functions are defined using hyperbolas instead of circles and are equivalent to ordinary trigonometric functions. It returns the ratio parameter in the hyperbolic sine function from the supplied angle in radians. But do the opposite, or in other words. If we want to calculate an angle from a hyperbolic sine, we need an inverse hyperbolic trigonometric operation like the hyperbolic inverse sine operation. This course will demonstrate how to use the hyperbolic inverse sine (asinh) function in C++ to calculate angles using the hyperbolic sine value in radians. The hyperbolic arcsine operation follows the following formula -$$\mathrm{sinh^{-1}x\:=\:In(x\:+\:\sqrt{x^2\:+\:1})}, Where\:In\:is\:natural logarithm\:(log_e\:k)