2023 is coming: the annual outlook from Andrew Ng, Yoshua Bengio and other leading researchers. Are rational AI models on the way?


In less than three days, 2022 will be over.

As the old year gives way to the new, Andrew Ng, Yoshua Bengio and other leading AI researchers gathered at DeepLearning.ai to share their outlook for 2023.

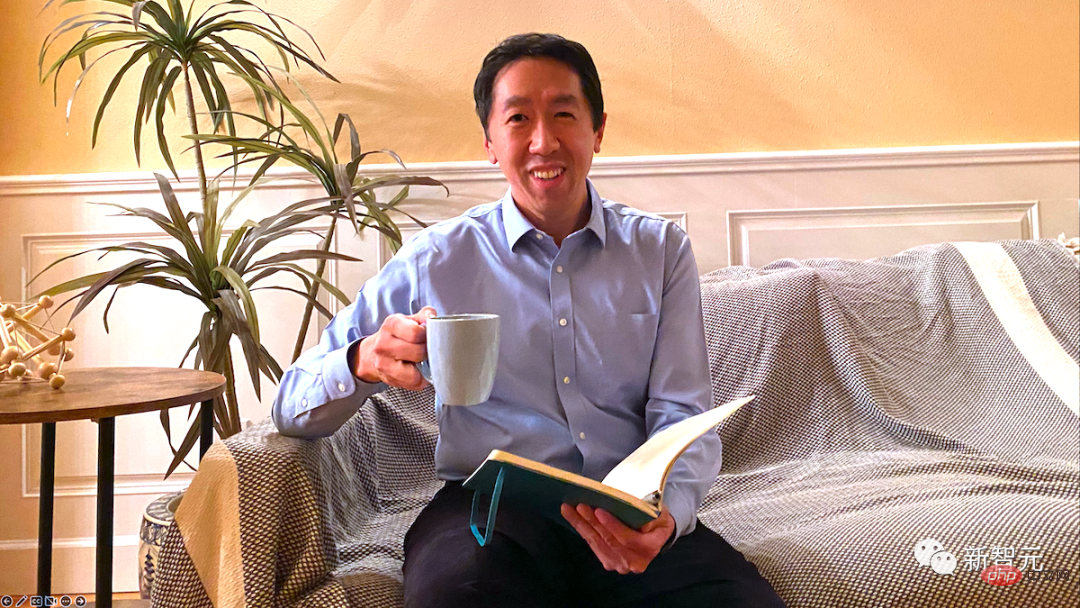

As the founder of DeepLearning.ai, Andrew Ng opened with a welcome letter in which he recalled the years when he first started doing research, giving this wide-ranging discussion among prominent researchers a good start.

Dear friends:

As we enter the new year, let us think of 2023 not as a single, isolated year, but as the first of many years in which we will accomplish our long-term goals. Some results take a long time to achieve, but we can pursue them more effectively if we envision a path rather than simply going from one milestone to the next.

When I was young, I barely made any concrete connection between short-term actions and long-term results. I would always focus on the next goal, project, or research paper, with a vague 10-year goal in mind but no clear path to get there.

Ten years ago, I built my first machine learning course in a week (often filming at 2 a.m.). This year, I updated the course content for the Machine Learning Specialization and planned the entire course better (some shots were still filmed at 2 a.m., but fewer of them!).

In previous businesses, I tended to build a product and then think about how to get it to customers. Nowadays, even in the initial stages, I think more about the needs of customers.

Feedback from friends and mentors can help you shape your vision. A big step in my growth was learning to trust the advice of certain experts and mentors and trying to understand it. For example, I have friends who are experts in global geopolitics, and they sometimes advise me to invest more in specific countries.

I could not reach this conclusion myself because I don't know much about these countries. But I've learned to explain my long-term plans, ask for their feedback, and listen carefully when they point me in different directions.

Now, one of my top goals is to democratize AI innovation: to allow more people to build customized AI systems and benefit from them. While the road to this goal is long and difficult, I can see the steps to get there, and the advice of friends and mentors has greatly shaped my thinking.

As 2023 approaches, how far into the future can you plan? Do you want to gain expertise on a topic, advance your career, or solve a technical problem? Form a hypothesis about the path (even an untested one) and solicit feedback to test and refine it.

Have a dream for 2023.

Happy New Year!

Andrew Ng

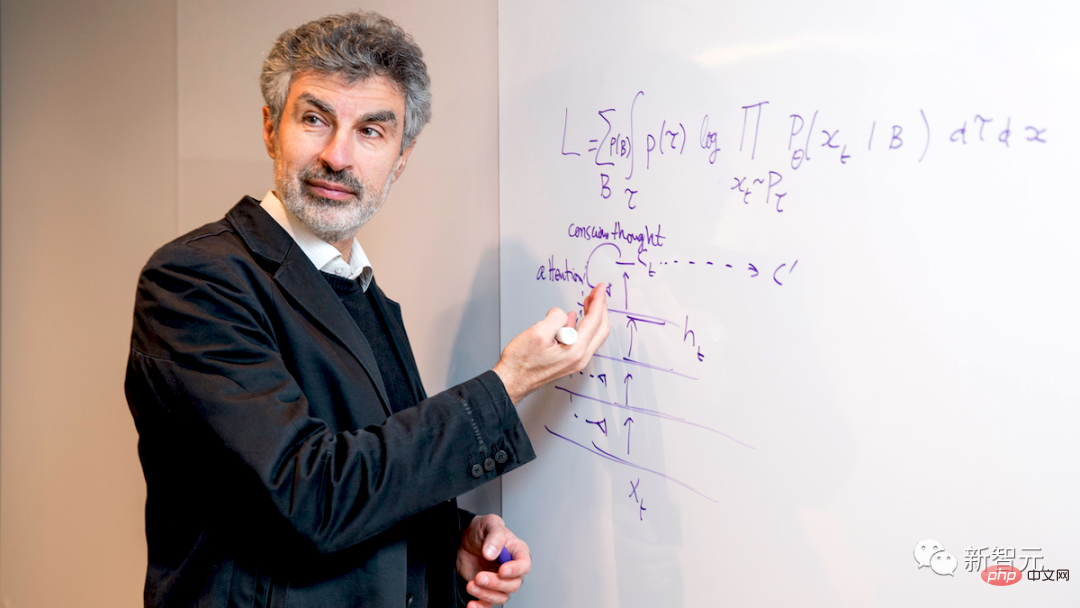

Yoshua Bengio: Looking for rational AI models

In the past, progress in deep learning came mainly from brute force: adopting the latest architectures, improving hardware, and scaling up compute, data, and model size. Do we already have the architecture we need, so that all that remains is to develop better hardware and data sets and keep scaling? Or is something still missing?

I think something is missing, and I hope to find it in the coming year.

I have been working with neuroscientists and cognitive neuroscientists to study the gap between state-of-the-art systems and humans. Simply increasing model size will not fill this gap. Instead, building into current models a human-like ability to discover and reason about high-level concepts and their relationships may be what closes it.

Consider the number of examples required to learn a new task, known as "sample complexity." Training a deep learning model to play a new video game requires a huge amount of gameplay, whereas humans learn the game very quickly. Likewise, a computer must consider countless possibilities to plan an efficient route from A to B; humans do not.

Humans can select the right pieces of knowledge and fit those pieces together into a relevant set of explanations, answers, or plans. Furthermore, given a set of variables, humans are very good at determining which are causes and which are effects. Current AI technology is nowhere near human level in this ability.

Typically, AI systems are highly confident in the correctness of the answers and solutions they generate, even when those answers are wrong. This problem may be merely amusing in an application like a text generator or a chatbot, but it could be life-threatening in a self-driving car or a medical diagnostic system.

Current AI systems behave the way they do in part because they are designed to do so. For example, a text generator is trained only to predict the next word, rather than to build an internal data structure or to explain the concepts it operates on and the relationships between them.

But I think we can design AI systems that track the meaning behind things and reason about them while still taking advantage of the many strengths of current deep learning methods. Doing so would address challenges ranging from excessive sample complexity to overconfident errors.

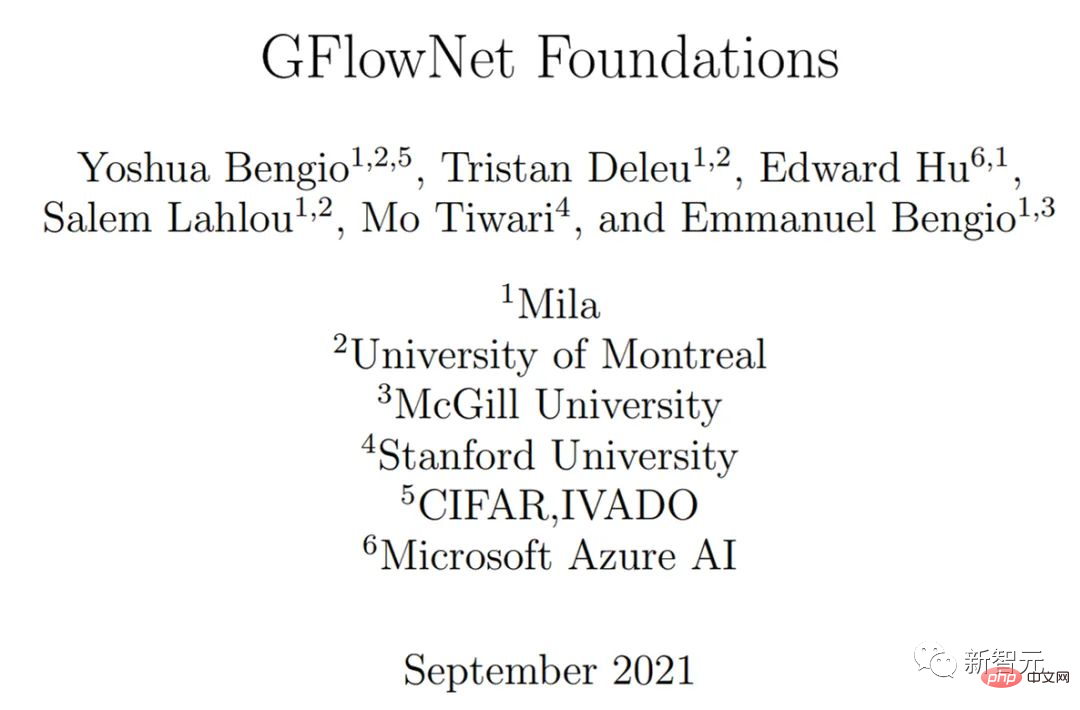

Paper link: https://arxiv.org/pdf/2111.09266.pdf

I am very interested in generative flow networks (GFlowNets), a new method of training deep networks that our team started working on a year ago. The idea was inspired by the way humans reason through a series of steps, adding new relevant information at each step.

This is like reinforcement learning in that the model learns a policy that solves the problem step by step. It is also like generative modeling in that it can sample solutions in a way that corresponds to probabilistic reasoning.
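To make the "sequential policy that samples solutions" idea concrete, here is a minimal sketch of the flow view behind GFlowNets on a toy problem. It is not the training procedure from the linked paper: the state space (strings of length 2 over `"ab"`), the `REWARD` table, and all function names are assumptions made for illustration, and the flows are computed exactly by enumeration rather than learned by a neural network. In this tree-shaped toy, choosing each step in proportion to the downstream flow makes a finished object appear with probability proportional to its reward.

```python
# Toy illustration only: exact flows on a tiny tree, no neural network.
REWARD = {"aa": 1.0, "ab": 2.0, "ba": 3.0, "bb": 4.0}  # hypothetical rewards


def flow(state, reward, alphabet="ab", length=2):
    """Flow through a state = total reward of all complete objects reachable from it."""
    if len(state) == length:
        return reward(state)
    return sum(flow(state + ch, reward, alphabet, length) for ch in alphabet)


def forward_policy(state, reward, alphabet="ab", length=2):
    """Probability of each next character = child flow / parent flow."""
    total = flow(state, reward, alphabet, length)
    return {ch: flow(state + ch, reward, alphabet, length) / total for ch in alphabet}


def terminal_probability(obj, reward, alphabet="ab", length=2):
    """Probability that following the policy step by step builds exactly `obj`."""
    prob = 1.0
    for i, ch in enumerate(obj):
        prob *= forward_policy(obj[:i], reward, alphabet, length)[ch]
    return prob


total_reward = sum(REWARD.values())
for obj, r in REWARD.items():
    p = terminal_probability(obj, REWARD.get)
    print(f"{obj}: P = {p:.2f}, reward share = {r / total_reward:.2f}")
```

A real GFlowNet replaces the exhaustive `flow` computation with a trained network, because realistic state spaces are far too large to enumerate.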

If you think of an interpretation of an image, your thought can be converted into a sentence, but it is not the sentence itself. Instead, it contains semantic and relational information about the concepts in the sentence. Generally, we represent this semantic content as a graph, where each node is a concept or variable.

I don't think this is the only solution, and I look forward to seeing a variety of approaches. Through diversified exploration, we will have a greater chance of finding what is currently missing in the field of AI and bridging the gap between current AI and human-level AI.

Yoshua Bengio is Professor of Computer Science at the University of Montreal and Scientific Director of the Mila-Québec Institute for Artificial Intelligence. He received the 2018 Turing Award along with Geoffrey Hinton and Yann LeCun for his groundbreaking contributions to deep learning.

Alon Halevy: Personal Data Timeline

Alon Halevy is an Israeli-American computer scientist and an expert in the field of data integration. He served as a research scientist at Google from 2005 to 2015, where he was responsible for Google Fusion Tables.

He is an ACM Fellow and received the Presidential Early Career Award for Scientists and Engineers (PECASE) in 2000. He is also the founder of the technology companies Nimble Technology (now Actuate Corporation) and Transformic Inc.

In his outlook for 2023, Halevy focuses on building a personal data timeline.

How do companies and organizations use user data? This important issue has received widespread attention in both technical and policy circles.

In 2023, there is an equally important question that deserves more attention: how can we, as individuals, use the data we generate to improve our health, vitality, and productivity?

We generate all kinds of data every day. Photos capture our life experiences, cell phones record our workouts and locations, and internet services record what we consume and buy.

We also record various wishes: trips we want to take and restaurants we want to try, books and movies we plan to enjoy, and social activities we want to have.

Soon, smart glasses will record our experiences in even more detail. However, this data is scattered across many applications. To look back on past experiences, we have to piece together memories from different applications every day.

What if all the information could be combined into a personal timeline to help us move toward our goals, hopes, and dreams? In fact, this idea was proposed a long time ago.

As early as 1945, the American scientist Vannevar Bush envisioned a device called the memex. In the 1990s, Gordon Bell and his colleagues at Microsoft Research built MyLifeBits, which can store all the information of a person's life.

But when we keep all data in one place, protecting privacy and preventing the misuse of information is obviously a key issue.

Currently, no single company owns all of our data, nor is any authorized to store all of it. Therefore, a collaborative effort is needed to build the technology that supports personal timelines, including protocols for data exchange, encrypted storage, and secure processing.

Two technical challenges need to be solved urgently in order to establish a personal timeline.

The first challenge is intelligent question answering over the timeline. Although we have made significant progress in question answering based on text and multimodal data, in many cases intelligent question answering requires us to reason explicitly about sets of answers.

This is the foundation of database systems. For example, answering "Which cafes did I visit in Tokyo?" or "How many half marathons did I run in under two hours?" requires retrieving sets as intermediate answers. At present, natural language processing cannot accomplish this task.

To draw more inspiration from databases, the system also needs to be able to explain the source of an answer and determine whether the answer is correct and complete.
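The set-based reasoning described here can be sketched as a structured query over timeline records. This is a minimal illustration, not Halevy's system: the `Event` record, its fields, the helper names, and the sample data are all hypothetical. The point is that both example questions reduce to filtering a set of events and then listing or counting the matches, and that keeping a `source` field lets the answer report where it came from.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass(frozen=True)
class Event:
    """One hypothetical timeline record merged from several apps."""
    day: date
    kind: str                      # e.g. "cafe_visit" or "half_marathon"
    place: str
    name: str
    duration: Optional[timedelta] = None
    source: str = "unknown"        # which app produced the record


TIMELINE = [
    Event(date(2022, 3, 5), "cafe_visit", "Tokyo", "Cafe A", source="photos"),
    Event(date(2022, 3, 6), "cafe_visit", "Tokyo", "Cafe B", source="maps"),
    Event(date(2022, 3, 6), "cafe_visit", "Tokyo", "Cafe A", source="maps"),
    Event(date(2022, 5, 1), "cafe_visit", "Kyoto", "Cafe C", source="maps"),
    Event(date(2021, 10, 10), "half_marathon", "Seattle", "Race 1",
          timedelta(hours=1, minutes=58), "watch"),
    Event(date(2022, 4, 3), "half_marathon", "Tokyo", "Race 2",
          timedelta(hours=2, minutes=7), "watch"),
]


def cafes_visited(timeline, city):
    """Return the distinct cafes visited in `city` and the sources backing the answer."""
    matches = [e for e in timeline if e.kind == "cafe_visit" and e.place == city]
    names = sorted({e.name for e in matches})
    sources = sorted({e.source for e in matches})
    return names, sources


def half_marathons_under(timeline, limit):
    """Count half marathons finished in less than `limit`."""
    return sum(
        1 for e in timeline
        if e.kind == "half_marathon" and e.duration is not None and e.duration < limit
    )


print(cafes_visited(TIMELINE, "Tokyo"))
print(half_marathons_under(TIMELINE, timedelta(hours=2)))
```

The hard part in practice is the step this sketch skips: translating a free-form question into such a query and judging whether the retrieved set is complete.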

The second challenge in building a personal timeline is developing techniques for analyzing personal timeline data in ways that improve the user's quality of life.

According to positive psychology, people can create positive experiences for themselves and cultivate better habits in order to thrive. An AI agent that has access to our daily lives and goals can remind us of things we need to accomplish and things we should avoid.

Of course, what we choose to do is up to us, but I believe that an AI with a comprehensive view of our daily activities, together with better memory and planning skills, would benefit everyone greatly.

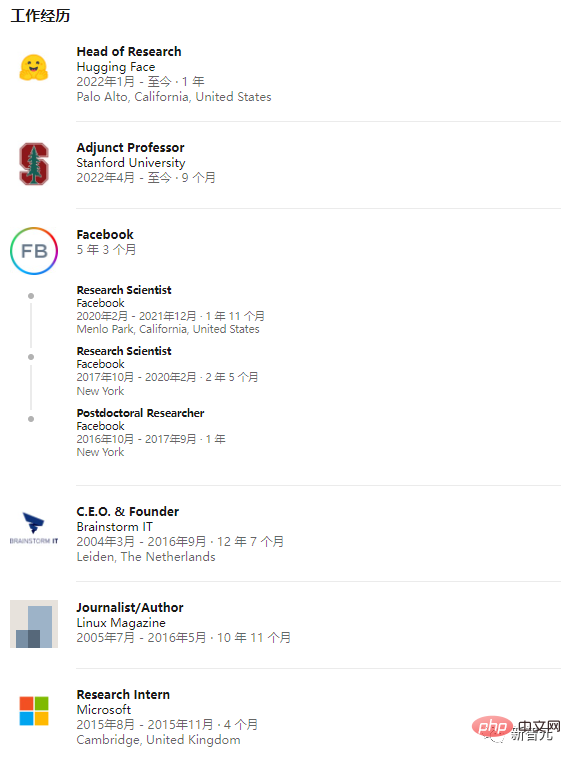

Douwe Kiela: Less hype, more caution

Douwe Kiela is an adjunct professor of symbolic systems at Stanford University. After completing his master's and doctoral degrees at the University of Cambridge, Kiela worked as a researcher at IBM, Microsoft, and Facebook AI, and as the research director of Hugging Face.

In his New Year outlook, Kiela shared his hopes for the development of artificial intelligence systems.

This is the year we really saw artificial intelligence start to go mainstream. Systems like Stable Diffusion and ChatGPT have completely captured the public imagination.

These are exciting times, and we are on the cusp of something great: it is no exaggeration to say that this shift in capabilities will have a disruptive impact comparable to that of the Industrial Revolution.

But amid the excitement, we should be wary of hype, be extra cautious, and conduct research and development responsibly.

For large language models, regardless of whether these systems really "understand" anything, laypeople will anthropomorphize them because they can do the most quintessentially human thing: produce language.

However, we must educate the public about the capabilities and limitations of these AI systems, because the public mostly thinks of computers as old-fashioned symbol processors: good at math but bad at art. Today the situation is exactly the opposite.

Modern AI systems have serious flaws, and they can easily be accidentally misused or intentionally abused. Not only do they produce misinformation, they sound so confident that people believe them.

These AI systems lack an adequate understanding of the complex, multimodal human world, and they do not possess what philosophers call "folk psychology," the ability to explain and predict the behavior and mental states of others.

Currently, AI systems are still unsustainably resource-intensive, and we know very little about the relationship between the training data that goes in and the model that comes out.

At the same time, while scaling up models can greatly improve their effectiveness (for example, certain capabilities emerge only once a model reaches a certain size), there are also signs that as models scale, they become more prone to bias and even less fair.

So my hope for 2023 is that we can make progress on these issues. Research into multimodality, grounding, and interaction can enable systems to better understand the real world and human behavior, and thus better understand humans.

Studying alignment, attribution, and uncertainty can make AI systems safer and less prone to hallucination, and can help us build more accurate reward models. Data-centric AI promises more efficient scaling laws and more effective ways of turning data into robust and fair models.

Paper link: https://arxiv.org/pdf/2007.14435.pdf

Finally, we should pay more attention to the ongoing evaluation crisis in artificial intelligence. We need more granular and comprehensive measurements of our data and models to ensure that we can describe our progress and limitations, and to understand, in terms of ecological validity (e.g., real-world use cases of AI systems), what we really want to gain from the development of artificial intelligence.

Been Kim: Using scientific research to explain the model

Been Kim is a scientist at Google Brain and an MIT graduate. Her research field is interactive machine learning.

While excited about the creativity and many achievements that AI has shown in the past year, she also shared some thoughts on the future of AI research.

It's an exciting time for AI, with fascinating advances in generative art and many other applications.

While these directions are exciting, I think we also need to do less glamorous work that goes beyond asking how much more AI can create or how large a model we can design:

go back to basics and make AI models themselves an object of scientific inquiry.

Why do this?

The interpretability field aims to create tools that generate explanations for the output of complex models, helping us explore the relationship between AI and humans.

One such tool, for example, takes an image and a classification model and generates an explanation in the form of weighted pixels. The higher the weight of a pixel, the more important it is. One definition, for example, treats a pixel as more important the more its value affects the output, but how importance is defined varies from tool to tool.
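As a rough sketch of one way such a tool could assign pixel weights, the snippet below uses occlusion: replace one pixel with a baseline value and measure how much the model's score changes. This is only one of many possible definitions of importance, and everything here is an assumption for illustration: `toy_model` is a stand-in scoring function rather than a real classifier, and the 3×3 `image` is made-up data.

```python
def toy_model(image):
    """Hypothetical classifier score that depends only on the centre column."""
    return sum(row[1] for row in image)


def occlusion_importance(model, image, baseline=0.0):
    """Weight of a pixel = absolute change in score when that pixel is set to `baseline`."""
    base_score = model(image)
    weights = []
    for r, row in enumerate(image):
        weight_row = []
        for c in range(len(row)):
            occluded = [list(x) for x in image]  # copy so the original stays intact
            occluded[r][c] = baseline
            weight_row.append(abs(base_score - model(occluded)))
        weights.append(weight_row)
    return weights


image = [
    [0.2, 0.9, 0.1],
    [0.4, 0.5, 0.3],
    [0.7, 0.0, 0.6],
]

for row in occlusion_importance(toy_model, image):
    print([round(w, 2) for w in row])
```

Note that the bottom-centre pixel receives zero weight even though the model reads it, simply because its value already equals the baseline. A different baseline or a gradient-based definition would rank it differently, which is the kind of tool-to-tool variation the text describes.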

While generative AI has had some success, many interpretability tools have proven to work in ways we didn't expect.

For example, the explanations produced for an untrained model can be quantitatively and qualitatively indistinguishable from those produced for a trained model, and explanations often change with small changes in the input even when the model's output stays the same.

Furthermore, there isn't much causal connection between the model's output and the tool's explanation. Other work has shown that a good explanation of a model's output does not necessarily have a positive impact on how people use the model.

What does this mismatch between expectations and results mean, and what should we do about it? It suggests we need to re-examine how we build these tools.

Currently we take an engineering-centric approach: trial and error. We build tools based on intuition (for example, the intuition that explanations are easier to grasp if we generate weights for blocks of pixels instead of individual pixels).

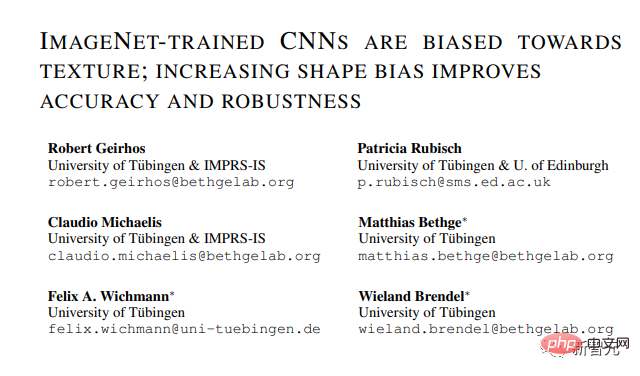

Paper link: https://arxiv.org/pdf/1811.12231.pdf

A team at the University of Tübingen found that neural networks rely more on textures (like an elephant's skin) than on shapes (an elephant's outline), even though an explanation of the image, in the form of collectively highlighted pixels, may appear to show the elephant's silhouette.

This research tells us that models may see textures rather than shapes. This is an inductive bias: a tendency of a particular class of models that arises from its architecture or from the way we optimize it.

Revealing this tendency can help us understand models, just as revealing human tendencies can be used to understand human behavior (such as unfair decisions).

This approach, often used to understand humans, can also help us understand models. For models, because we know how their internal structures are built, we have another tool: theoretical analysis.

Work in this direction has produced exciting theoretical results on the behavior of models, optimizers, and loss functions. Some of it uses classical tools from statistics, physics, dynamical systems, or signal processing, and many tools from other fields have yet to be explored in the study of artificial intelligence.

Pursuing science does not mean we should stop practicing: science allows us to build tools based on principles and knowledge, and practice turns ideas into reality.

Paper link: https://hal.inria.fr/inria-00112631/document

Practice can also inspire science: what works well in practice can guide the scientific study of model structure, just as the high-performance convolutional networks of 2012 inspired many theoretical papers analyzing why convolution helps generalization.

Reza Zadeh: Let ML models actively learn

Reza Zadeh is the founder and CEO of the computer vision company Matroid , graduated from Stanford University. His research fields are machine learning, distributed computing and discrete applied mathematics. He is also an early member of Databricks.

# He believes that the upcoming 2023 will be a year for active learning to take off.

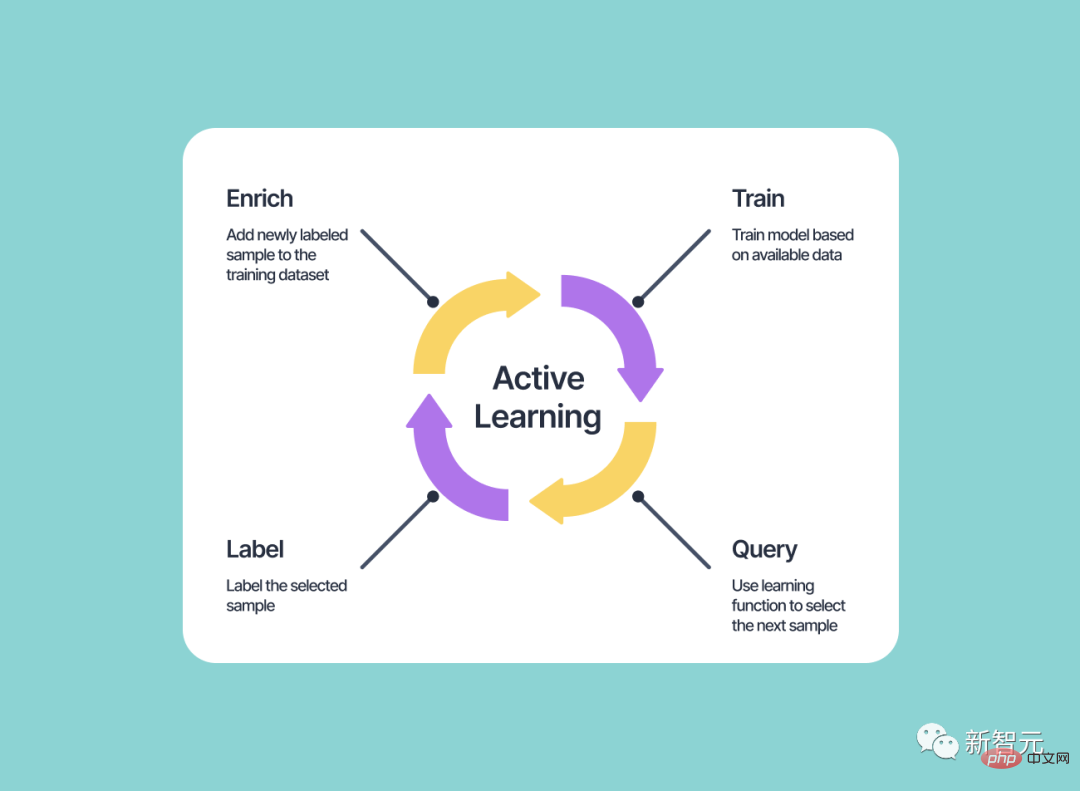

As we enter the new year, there is hope that the explosion of generative AI will be in Active Learning ) has brought significant progress.

This technique enables an ML system to generate its own training examples and label them, whereas in most other forms of machine learning, the algorithm is given a fixed set of examples and typically only Be able to learn from these examples.

So what can active learning bring to machine learning systems?

- Adapt to changing conditions

- Learn from fewer labels

- Help people understand the most valuable/difficult examples

- Achieve higher performance

The idea of active learning has been around for decades but never really caught on. Previously, it was difficult for algorithms to generate images or sentences that humans could evaluate and that would move the learning algorithm forward.

But with the popularity of image and text generation AI, active learning is expected to achieve major breakthroughs. Now, when a learning algorithm is unsure of the correct labels for some part of its encoding space, it can proactively generate data from that region and solicit input on it.
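The selection step at the heart of this loop can be sketched with uncertainty sampling, a common active learning strategy: among candidate examples, ask for a label on the one the current model is least sure about. This is a minimal sketch under stated assumptions, not Zadeh's or Matroid's method: `predict_proba` is a hypothetical one-dimensional logistic model with made-up parameters, and the fixed `candidates` list stands in for examples that a generative model would propose.

```python
import math


def predict_proba(x, weight=2.0, bias=-1.0):
    """Hypothetical 1-D logistic model: estimated P(label = 1 | x)."""
    return 1.0 / (1.0 + math.exp(-(weight * x + bias)))


def most_uncertain(candidates, proba):
    """Pick the candidate whose predicted probability is closest to 0.5."""
    return min(candidates, key=lambda x: abs(proba(x) - 0.5))


# Stand-in for examples proposed by a generative model (assumption).
candidates = [-2.0, -0.5, 0.45, 1.2, 3.0]

query = most_uncertain(candidates, predict_proba)
print(f"Ask a human to label x = {query} (p = {predict_proba(query):.3f})")
```

In a full loop, the newly labeled example would be added to the training set, the model refit, and the selection repeated, so labeling effort concentrates near the decision boundary instead of on examples the model already handles well.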

Active learning has the potential to revolutionize the way machine learning is done because it allows systems to improve and adapt over time.

Rather than relying on a fixed set of labeled data, an active learning system can seek out new information and examples to help it better understand the problem it is trying to solve.

This can lead to more accurate and effective machine learning models and reduce the need for large amounts of labeled data.

I am looking forward to the latest advances in active learning driven by generative AI. As we enter the new year, we are likely to see more machine learning systems implementing active learning techniques, and 2023 could be the year that active learning really takes off.
