NeurIPS 2022 | DetCLIP, a new open-domain detection method, improves inference efficiency by 20 times

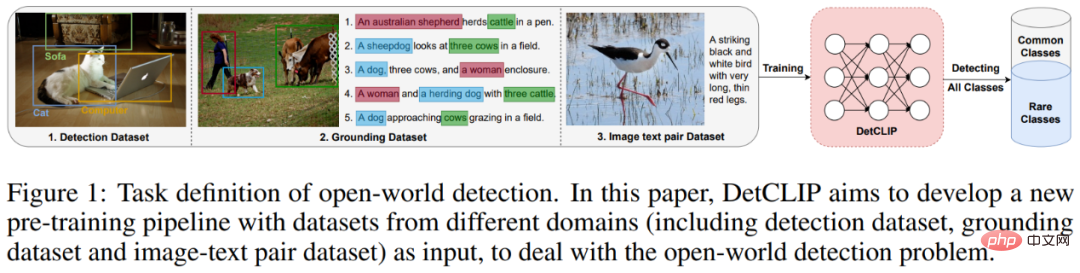

Open-domain detection asks how to detect arbitrary categories in downstream scenarios after upstream training on large numbers of image-text pairs crawled from the Internet, or on manually annotated data covering a limited set of categories. Industrial applications of open-domain detection include road object detection in autonomous driving systems and full-scene detection in the cloud.

Paper address: https://arxiv.org/abs/2209.09407

This article introduces the NeurIPS 2022 paper "DetCLIP: Dictionary-Enriched Visual-Concept Paralleled Pre-training for Open-world Detection". For the open-domain detection problem, the paper proposes an efficient parallel training framework that jointly uses multiple data sources, and constructs an additional knowledge base to provide implicit relationships between categories. DetCLIP also won first place in the zero-shot detection track of the ECCV 2022 ODinW (Object Detection in the Wild [1]) competition organized by Microsoft, with an average detection score of 24.9%.

Problem Introduction

Multi-modal pre-training models such as CLIP, trained on image-text pairs crawled from the Internet, have become popular and have shown excellent zero-shot classification performance. As a result, more and more methods try to transfer this ability to open-domain dense prediction, such as arbitrary-category detection and segmentation. Existing methods often distill features from a large pre-trained classification model [1], or learn by pseudo-labeling captions and self-training [2], but they tend to be limited by the performance of the large classification model and by incomplete caption annotations.

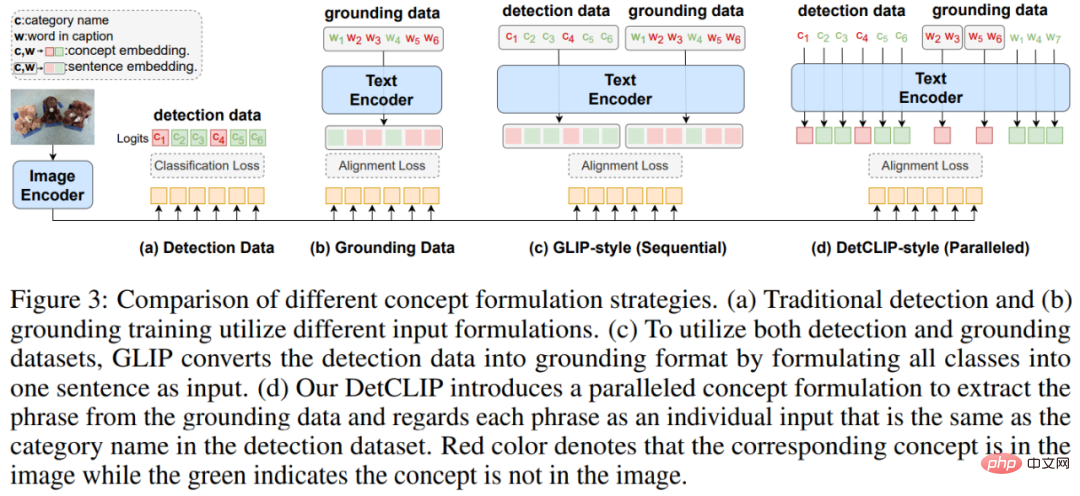

GLIP [3], the existing SOTA open-domain detection model, trains jointly on multiple data sources by converting detection data into the grounding format. This exploits the strengths of each source: detection datasets have relatively complete annotations for common categories, while grounding datasets cover a wider range of categories. However, we found that concatenating category nouns reduces the model's overall learning efficiency, and that using bare category words as text input cannot provide fine-grained prior relationships between categories.

Figure 1: Pipeline of an open-domain detection model jointly pre-trained on multiple data sources

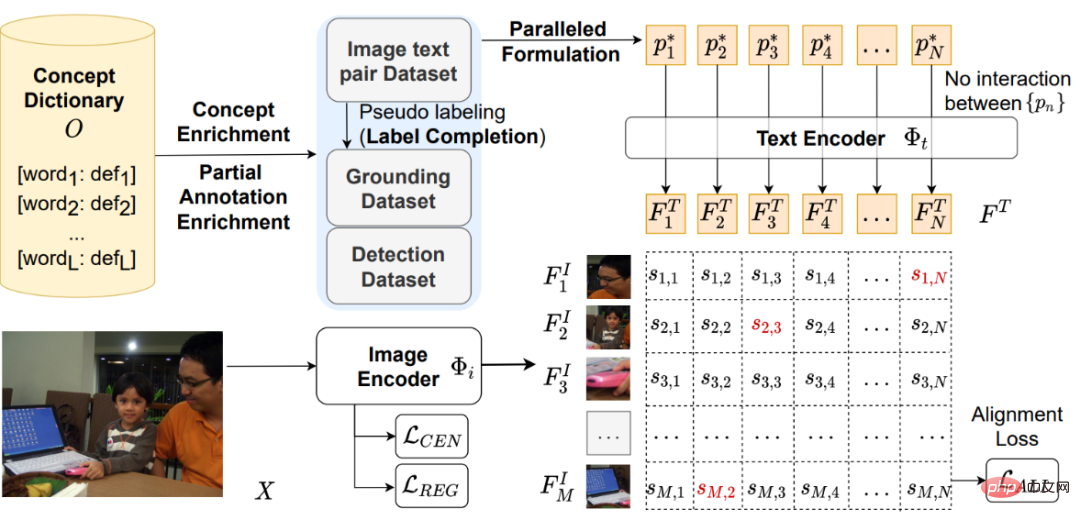

Model framework

As shown in the figure below, DetCLIP builds on the single-stage detection model ATSS [4]. It includes an image encoder that produces the image features of the detection boxes and a text encoder that produces the text features of the categories. From these image and text features, it computes the classification alignment loss, the centerness loss, and the regression loss.

Figure 2: DetCLIP model framework

As shown in the upper right and upper left of Figure 2, the main innovations of this work are: 1) a framework that trains jointly on objects and text from multiple data sources using parallel input, which improves training efficiency; and 2) an additional object knowledge base that assists open-domain detection training.
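The alignment between box-level image features and category text features can be pictured as a similarity matrix with one row per candidate box and one column per concept. The sketch below is only an illustration: the use of cosine similarity, the function names, and the toy 2-D features are assumptions made for this example and are not taken from the paper.

```python
from math import sqrt


def l2_normalize(vec):
    """Scale a vector to unit length (left unchanged if it is all zeros)."""
    norm = sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def alignment_matrix(region_feats, concept_feats):
    """Return S where S[i][j] is the cosine similarity of region i and concept j."""
    regions = [l2_normalize(r) for r in region_feats]
    concepts = [l2_normalize(c) for c in concept_feats]
    return [[sum(a * b for a, b in zip(r, c)) for c in concepts] for r in regions]


# Toy features (assumed): two candidate boxes and three concept embeddings.
region_feats = [[1.0, 0.0], [0.0, 2.0]]
concept_feats = [[2.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

scores = alignment_matrix(region_feats, concept_feats)
# Index of the best-matching concept for each box.
best = [max(range(len(row)), key=row.__getitem__) for row in scores]
```

A classification alignment loss would then compare such a matrix against a binary target that marks which concept each box is annotated with.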

Parallel-input pre-training framework for multiple data sources

GLIP converts detection data into grounding form by splicing category nouns into a single sentence (serial input). In contrast, we extract the noun phrases from the grounding data and the category names from the detection data, and feed each one to the text encoder as an independent input (parallel input). This avoids unnecessary attention computation and yields higher training efficiency.

Figure 3: Comparison between DetCLIP parallel input pre-training framework and GLIP
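To see why independent inputs save attention computation, a toy cost model can help. The sketch below counts token pairs in text self-attention only, assuming every concept has the same number of tokens. The function names and the numbers (80 concepts of 4 tokens each) are hypothetical, and the model ignores everything else in either system, so it is a back-of-the-envelope illustration rather than a measurement.

```python
def serial_attention_pairs(num_concepts, tokens_per_concept):
    """Token pairs attended when all concepts are concatenated into one prompt."""
    total_tokens = num_concepts * tokens_per_concept
    return total_tokens ** 2


def parallel_attention_pairs(num_concepts, tokens_per_concept):
    """Token pairs attended when each concept is encoded as its own sequence."""
    return num_concepts * tokens_per_concept ** 2


# Hypothetical setting: 80 category names, 4 tokens each.
n, t = 80, 4
serial = serial_attention_pairs(n, t)
parallel = parallel_attention_pairs(n, t)
ratio = serial // parallel  # equals the number of concepts in this model

print(f"serial={serial} parallel={parallel} ratio={ratio}")
```

Under these assumptions the serial cost grows quadratically with the number of concepts while the parallel cost grows linearly, so the gap widens as the category vocabulary gets larger.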

Object knowledge base

Different data sources do not share a uniform category space: the same category may go by different names, or one category may contain another. To address this, and to provide prior information about the relationships between categories, we constructed an object knowledge base that enables more efficient training.

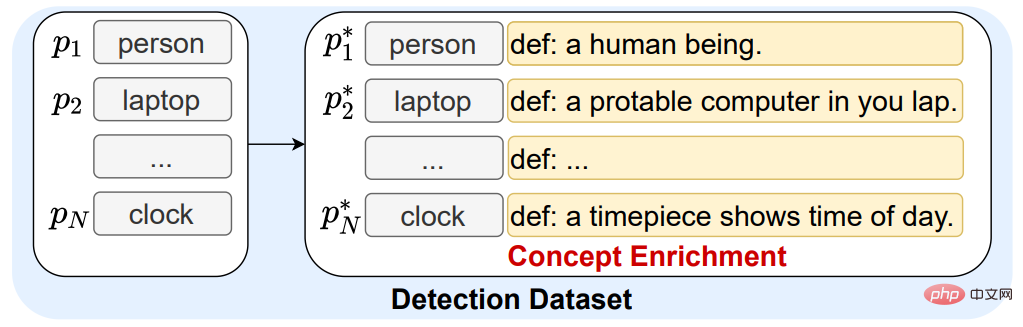

Construction: We build the object knowledge base by integrating the categories in the detection data, the noun phrases in the image-text pairs, and their corresponding definitions.
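The construction step can be sketched as merging concept names from both sources into one dictionary keyed by a normalized name. Everything in the example is illustrative: the normalization rule, the function names, and the sample definitions are assumptions, and this article does not state where the real definitions come from.

```python
def normalize(name):
    """Lowercase and collapse whitespace so variant spellings share one key."""
    return " ".join(name.lower().split())


def build_concept_dictionary(detection_categories, noun_phrases, definitions):
    """Merge concept names from both sources and attach a definition if known."""
    dictionary = {}
    for name in list(detection_categories) + list(noun_phrases):
        key = normalize(name)
        if key not in dictionary:
            # None marks a concept for which no definition is available.
            dictionary[key] = definitions.get(key)
    return dictionary


# Hypothetical inputs, made up for this example.
detection_categories = ["Person", "traffic  light"]
noun_phrases = ["person", "kite"]
definitions = {
    "person": "a human being",
    "traffic light": "a signal that controls road traffic",
    "kite": "a light frame covered with fabric, flown in the wind",
}

concept_dict = build_concept_dictionary(detection_categories, noun_phrases, definitions)
```

Here "Person" from the detection data and "person" from the captions collapse into a single entry, which is the simplest case of unifying the category space.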

Usage:

1. We use the definitions in the object knowledge base to expand the category words in the existing detection data, which provides prior information about the relationships between categories (Concept Enrichment).

Figure 4: Example of expanding category word definitions using object knowledge base

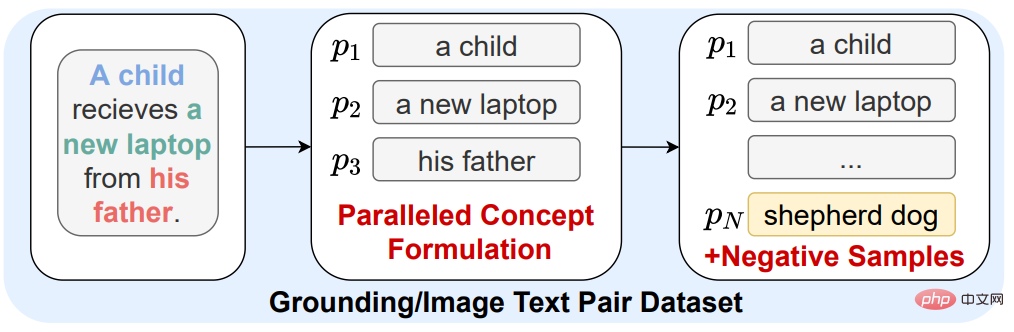

2. Caption annotations in the grounding data and image-caption data are incomplete (categories that appear in the image may not appear in the caption), so very few categories are available as negative samples when training on these images. This makes the model less discriminative for some uncommon categories. We therefore randomly select object nouns from the object knowledge base as negative sample categories, which improves the model's discrimination of rare-category features (Negative Samples).

Figure 5: Introducing categories in the object knowledge base as negative sample categories

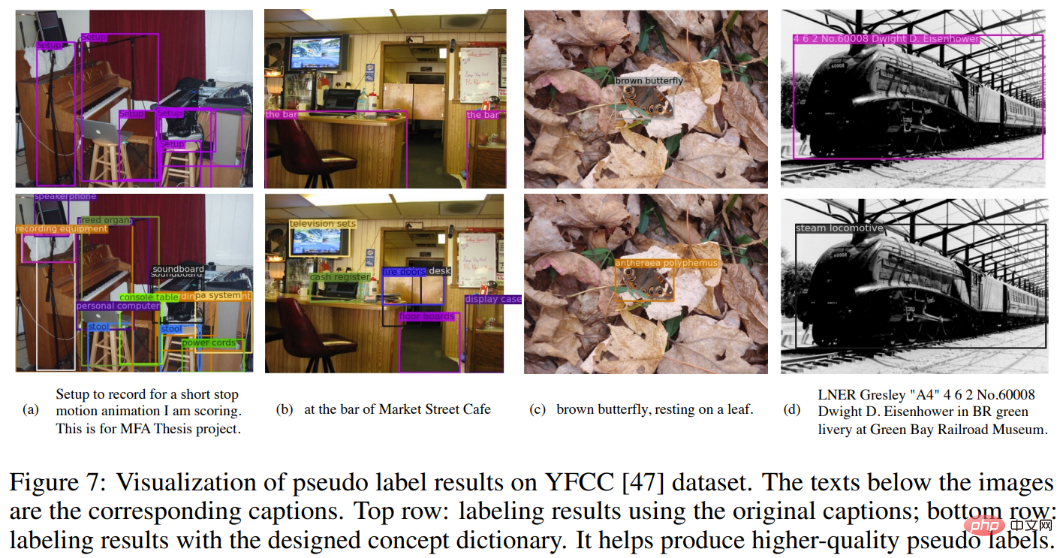

3. For image-text pair data without box annotations, we use FILIP [5], a large model developed in-house at Huawei Noah's Ark Lab, together with a pre-trained RPN to annotate it, so that it can be converted into normal grounding data for training. To mitigate the problem that captions annotate the objects in an image incompletely, we use all category phrases in the object knowledge base as candidate categories for pseudo-labeling (second row). A comparison with using only the categories in the caption (first row) is shown below:

Figure 6: Introducing categories in the object knowledge base as candidate categories for pseudo-labeling
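The first two uses of the knowledge base, concept enrichment and negative sampling, can be sketched in a few lines. This is a minimal illustration under stated assumptions: the dictionary entries are made up, the "name, definition" text format is a guess at one reasonable way to combine them, and the function names are hypothetical.

```python
import random

# Hypothetical knowledge base: concept name -> definition.
concept_dict = {
    "person": "a human being",
    "kite": "a light frame covered with fabric, flown in the wind",
    "traffic light": "a signal that controls road traffic",
    "zebra": "an African wild horse with black and white stripes",
}


def enrich(category, dictionary):
    """Append the dictionary definition to a category name, if one exists."""
    definition = dictionary.get(category)
    return f"{category}, {definition}" if definition else category


def sample_negatives(positives, dictionary, k, rng):
    """Draw up to k dictionary concepts that are not positives for this image."""
    candidates = sorted(set(dictionary) - set(positives))
    return rng.sample(candidates, min(k, len(candidates)))


rng = random.Random(0)  # fixed seed so the sketch is repeatable
positives = ["person"]  # concepts annotated for one image (assumed)
negatives = sample_negatives(positives, concept_dict, k=2, rng=rng)

# Text inputs for this image: enriched positives followed by enriched negatives.
text_inputs = [enrich(c, concept_dict) for c in positives + negatives]
```

Each string in `text_inputs` would be passed to the text encoder as its own input, which fits the parallel formulation described earlier.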

Experimental results

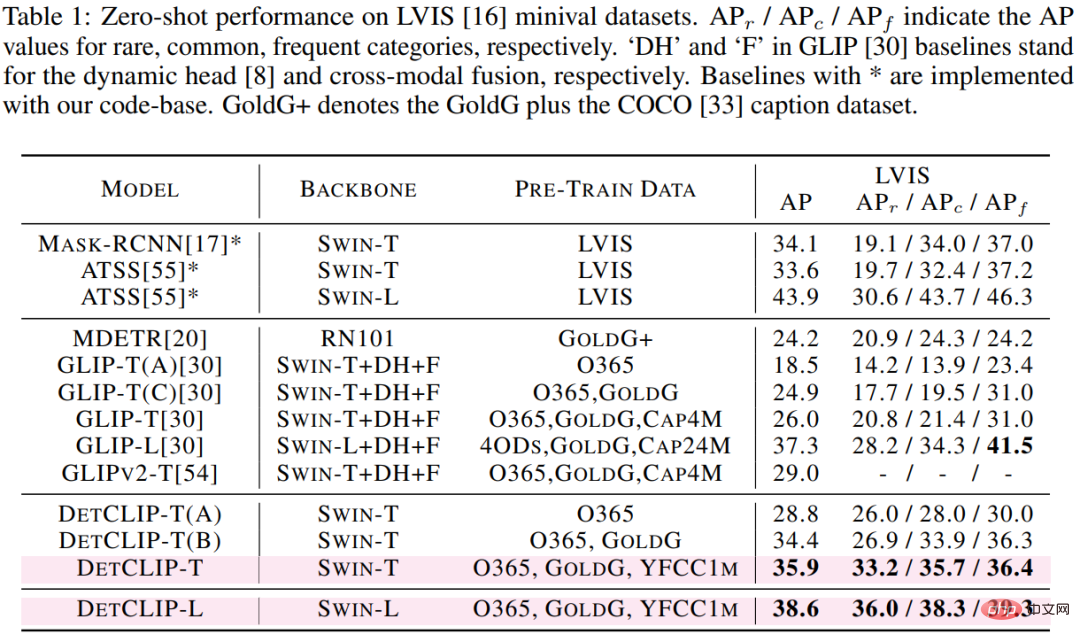

We evaluated the open-domain detection performance of the proposed method on the downstream LVIS detection dataset (1203 classes). With a Swin-T backbone, DetCLIP achieves a 9.9% AP improvement over the existing SOTA model GLIP, and a 12.4% AP improvement on the Rare categories, even though it uses less than half of GLIP's data volume. Note that the training set does not contain any LVIS images.

Table 1: Comparison of Zero-shot transfer performance of different methods on LVIS

In terms of training efficiency, on the same hardware of 32 V100 GPUs, the training time of GLIP-T is about 5 times that of DetCLIP-T (10.7K GPU hours vs. 2.0K GPU hours). In terms of test efficiency, on a single V100, DetCLIP-T's inference speed of 2.3 FPS (0.4 seconds per image) is about 20 times that of GLIP-T's 0.12 FPS (8.6 seconds per image). We also separately studied the impact of DetCLIP's key innovations (parallel framework and object knowledge base) on accuracy.

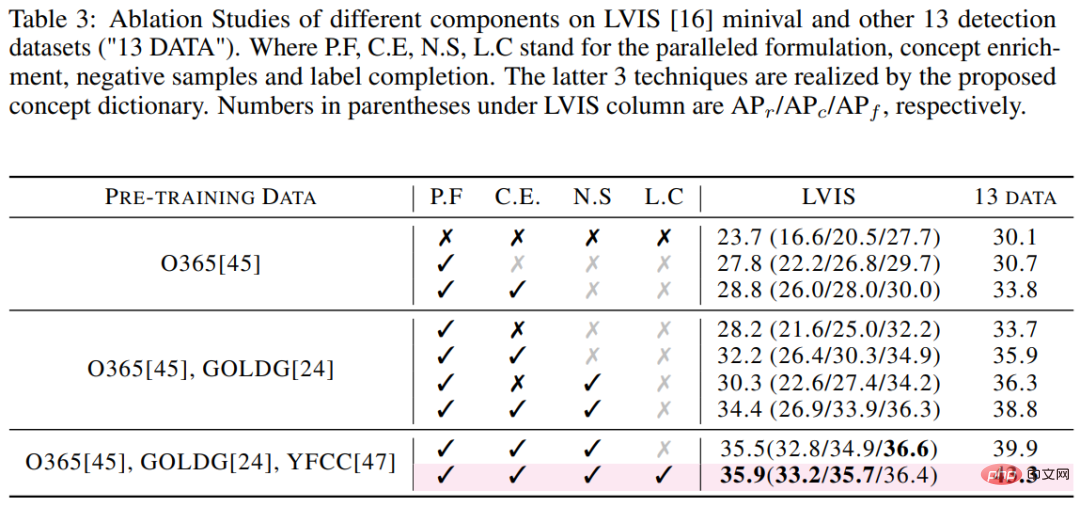

Table 3: DetCLIP ablation study results on LVIS data set
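The training and inference figures quoted in this section can be cross-checked with a few lines of arithmetic. The inputs below are the rounded numbers from the text, so the derived values are approximate. In particular, the seconds-per-image values computed from the rounded FPS figures do not exactly match the quoted 0.4 s and 8.6 s, which suggests the published numbers were rounded independently.

```python
# Rounded figures quoted in the text.
glip_gpu_hours, detclip_gpu_hours = 10_700, 2_000
glip_fps, detclip_fps = 0.12, 2.3

# How many times more GPU hours GLIP-T needs for training.
training_ratio = glip_gpu_hours / detclip_gpu_hours

# How many times faster DetCLIP-T is at inference.
inference_ratio = detclip_fps / glip_fps

# Seconds per image implied by the FPS figures.
detclip_seconds_per_image = 1 / detclip_fps
glip_seconds_per_image = 1 / glip_fps

print(f"training ratio:  {training_ratio:.2f}x")
print(f"inference ratio: {inference_ratio:.1f}x")
print(f"seconds/image:   DetCLIP-T {detclip_seconds_per_image:.2f}, "
      f"GLIP-T {glip_seconds_per_image:.2f}")
```

Both ratios are consistent with the "about 5 times" and "about 20 times" claims once rounding is taken into account.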

Visualization Results

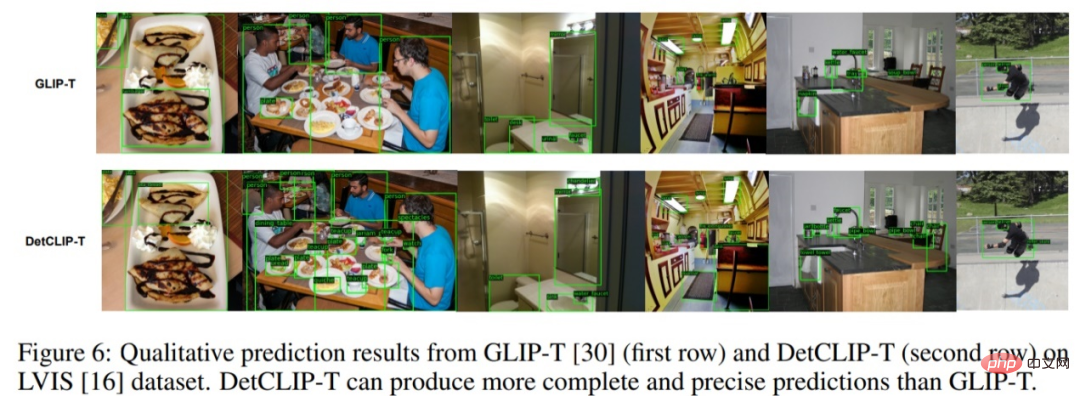

As shown in the figure below, with the same Swin-T backbone, DetCLIP's predictions on the LVIS dataset are visibly better than GLIP's, especially in the labeling of rare categories and the completeness of the annotations.

Figure 7: Visual comparison of the prediction results of DetCLIP and GLIP on the LVIS data set
