To rent a place in Shanghai, I used Python to crawl more than 20,000 rental listings overnight

Recently, because of a sudden job change, my new office is far from where I currently live, so I need to rent a new place.

I hopped on the back of a rental agent's electric scooter and started exploring unfamiliar corners of the city.

Switching back and forth between rental apps was frustrating, because the whole process was so inefficient:

First, since my girlfriend and I live together, we both need to consider our commutes at the same time, but the commute-time search on each platform is of limited use. Some platforms don't let you select multiple locations at once, and others can only mechanically compute the commute from each location separately, so they can't find places with similar commute times to both of our offices, which is what we actually need.

Second, from a renter's point of view, there are too many rental platforms, and each one has its own filtering and sorting logic, which makes it hard to compare similar properties side by side.

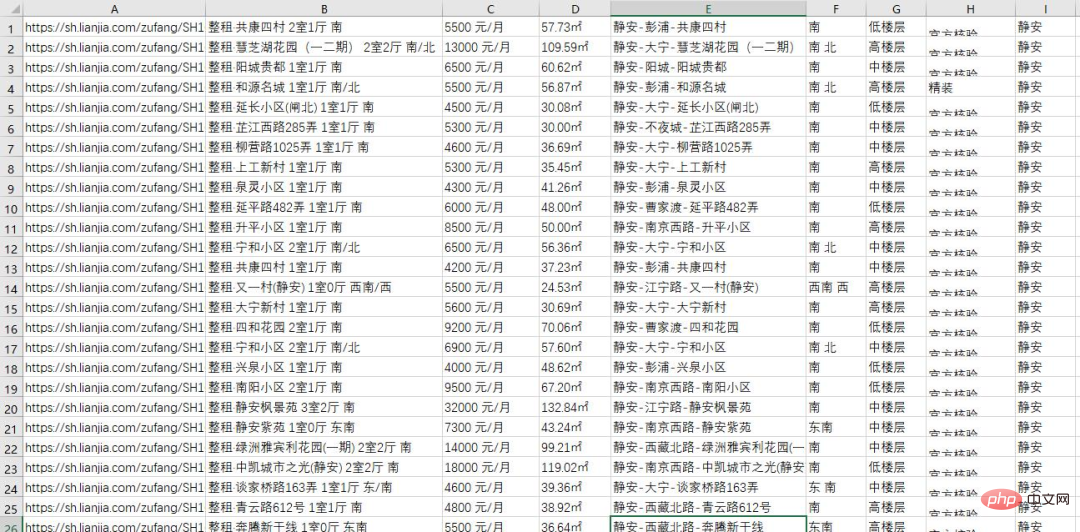

But no matter. As a programmer, I naturally solve problems the programmer's way. So last night I wrote a Python script that collected every rental listing in the Shanghai area from one rental platform, more than 20,000 in total.

Below, I share the whole process of crawling the data.

Analyze the page and find the entry point

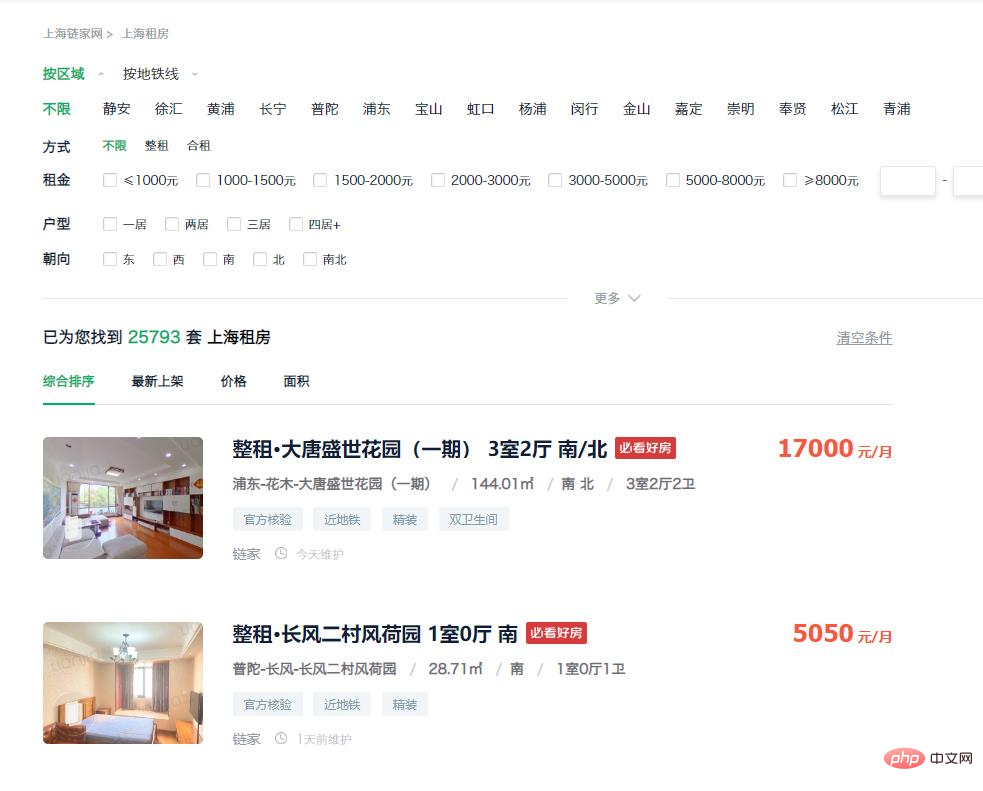

First, open the platform's rental page. The listings on the first page already include most of the information we need, and that information can be read directly from the DOM, so we can collect the page data by simulating HTTP requests directly.

https://sh.lianjia.com/zufang/

So the next step is to work out how to build the URLs. On inspection, the city has more than 20,000 listings, but the website only lets you browse the first 100 pages of results, with at most 30 listings per page. That caps access at 100 × 30 = 3,000 listings, so it is impossible to get everything this way.

However, we can work around this by adding filter conditions. Selecting "Jing'an" in the district filter takes us to the following URL:

https://sh.lianjia.com/zufang/jingan/

Jing'an has just over 2,000 listings spread across 75 pages. At 30 listings per page, that stays within the 100-page limit, so in theory every listing in the district is reachable. We can therefore obtain the whole city's data by crawling each district separately.
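The page-cap reasoning above comes down to simple arithmetic. Here is a minimal sketch, assuming the 100-page and 30-per-page limits observed in this article hold for every query; `pages_needed` and `fully_reachable` are illustrative names, not part of any library.

```python
import math

# Limits observed on the site: at most 100 result pages, at most 30 listings per page.
MAX_PAGES = 100
PER_PAGE = 30


def pages_needed(total_listings: int, per_page: int = PER_PAGE) -> int:
    """Number of result pages needed to show every listing."""
    return math.ceil(total_listings / per_page)


def fully_reachable(total_listings: int) -> bool:
    """True if every listing fits within the site's page cap."""
    return pages_needed(total_listings) <= MAX_PAGES


# Whole city: about 20,000 listings, but only 100 * 30 = 3,000 are reachable.
print(MAX_PAGES * PER_PAGE)            # 3000
print(fully_reachable(20_000))         # False
# One district with 75 full pages of results fits under the cap.
print(fully_reachable(75 * PER_PAGE))  # True
```

Splitting by district works as long as no single district needs more than 100 pages; a district that did would need a further filter.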

https://sh.lianjia.com/zufang/jingan/pg2/

Clicking the page 2 button takes us to the URL above. Changing the number after pg jumps to the corresponding page.

There is a catch, though: visiting the same page number twice returns different listings, so the collected data would contain duplicates. To fix this, we select the "newest listings" sort option, which leads to the following link:

https://sh.lianjia.com/zufang/jingan/pg2rco11/

With this sort order, the results come back in a stable order. The plan is therefore: visit the first page of each district, read that district's total page count from its first page, then request every page in turn to collect all the data.
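The URL scheme described above can be isolated in a small helper that needs no network access. This is a sketch assuming the pg{N}rco11 pattern observed for Jing'an holds for every district; `page_url` and `district_page_urls` are hypothetical names, and in practice the page count would be read from each district's first page, as the crawler below does.

```python
from typing import List

BASE = "https://sh.lianjia.com/zufang"
# Path suffix observed for the "newest listings" sort order.
SORT_LATEST = "rco11"


def page_url(district: str, page: int, sort: str = SORT_LATEST) -> str:
    """Build the URL of one result page for one district."""
    return f"{BASE}/{district}/pg{page}{sort}/"


def district_page_urls(district: str, total_pages: int) -> List[str]:
    """All page URLs of a district, given its total page count."""
    return [page_url(district, p) for p in range(1, total_pages + 1)]


print(page_url("jingan", 2))  # https://sh.lianjia.com/zufang/jingan/pg2rco11/
```

Keeping the URL logic separate from the HTTP calls makes it easy to check the generated links before sending any requests.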

Crawling data

With the plan in place, we can start writing code. First, collect all the page links:

```python
import requests
from bs4 import BeautifulSoup

# Identifiers for each district
districts = ['jingan', 'xuhui', 'huangpu', 'changning', 'putuo', 'pudong', 'baoshan', 'hongkou',
             'yangpu', 'minhang', 'jinshan', 'jiading', 'chongming', 'fengxian', 'songjiang', 'qingpu']

# Set a request header to reduce the chance of the IP being banned
headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/81.0.4044.9 Safari/537.36'}

# Stores all links
urls = []

for a in districts:
    urls.append('https://sh.lianjia.com/zufang/{}/pg1rco11/'.format(a))

    # Fetch the DOM of page 1 for the current district
    res = requests.get('https://sh.lianjia.com/zufang/{}/pg1rco11/'.format(a), headers=headers)
    soup = BeautifulSoup(res.text, 'html.parser')

    # Read the total number of pages for this district
    page_num = int(soup.find('div', attrs={'class': 'content__pg'}).attrs['data-totalpage'])

    for i in range(2, page_num + 1):
        # Save every remaining page link into urls
        urls.append('https://sh.lianjia.com/zufang/{}/pg{}rco11/'.format(a, i))
```

After that, we process the URLs collected in the previous step one by one and extract the data from each page. The code is as follows:

```python
# Continues from the previous snippet (uses urls and headers)
num = 1

for url in urls:
    print("Processing page {}...".format(num))
    res1 = requests.get(url, headers=headers)
    soup1 = BeautifulSoup(res1.text, 'html.parser')
    # Each listing on the page is a content__list--item div inside content__list
    infos = soup1.find('div', {'class': 'content__list'}).find_all('div', {'class': 'content__list--item'})
    num += 1
```

Organize the data and export the file

By inspecting the page structure, we can find which element holds each field, locate those elements, and extract the information we need.
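To make the field extraction concrete, here is the same splitting logic the crawler applies (rental-type prefix stripped from the title with [3:], description split on '/', district taken from the part before the first '-'), pulled into a pure function. The sample title and description are hypothetical strings shaped like the listing text; the real page text may contain extra fields. `parse_listing` and `Listing` are illustrative names, and str.split is used in place of the crawler's re.split, which behaves the same for a plain '/' separator.

```python
from typing import NamedTuple


class Listing(NamedTuple):
    group: str        # residential compound name taken from the title
    region: str       # district: first segment of the address
    address: str
    area: str
    orientation: str
    layout: str       # last segment of the description


def parse_listing(title: str, description: str) -> Listing:
    """Split title and '/'-separated description the way the crawler does."""
    # The title starts with a 3-character rental-type prefix such as "整租·".
    group = title.split()[0][3:]
    parts = [p.strip() for p in description.split("/")]
    address = parts[0]
    region = address.split("-")[0]
    return Listing(group, region, address, parts[1], parts[2], parts[-1])


# Hypothetical strings shaped like a listing's title and description.
sample = parse_listing("整租·华山花苑 1室1厅 南", "静安-静安寺-华山花苑 / 56㎡ / 南 / 1室1厅1卫")
print(sample.region, sample.area, sample.layout)  # 静安 56㎡ 1室1厅1卫
```

Testing the parsing on fixed strings like this makes it easier to spot when the site's text format changes, without re-running the crawl.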

The complete code is below. If you are interested, replace the city identifier (the sh in the domain) and the district identifiers in the links to suit your needs, and you can collect listings for your own area. Crawling other rental platforms works in much the same way, so I won't go into detail here.

```python
import csv
import re
import time

import requests
from bs4 import BeautifulSoup

print("**** Start ****")

# Identifiers for each district
districts = ['jingan', 'xuhui', 'huangpu', 'changning', 'putuo', 'pudong', 'baoshan', 'hongkou',
             'yangpu', 'minhang', 'jinshan', 'jiading', 'chongming', 'fengxian', 'songjiang', 'qingpu']

# Set a request header to reduce the chance of the IP being banned
headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/81.0.4044.9 Safari/537.36'}

# Stores all links
urls = []

for a in districts:
    urls.append('https://sh.lianjia.com/zufang/{}/pg1rco11/'.format(a))
    # Fetch the DOM of page 1 for the current district
    res = requests.get('https://sh.lianjia.com/zufang/{}/pg1rco11/'.format(a), headers=headers)
    soup = BeautifulSoup(res.text, 'html.parser')
    # Read the total number of pages for this district
    page_num = int(soup.find('div', attrs={'class': 'content__pg'}).attrs['data-totalpage'])
    for i in range(2, page_num + 1):
        # Save every remaining page link into urls
        urls.append('https://sh.lianjia.com/zufang/{}/pg{}rco11/'.format(a, i))

# 'utf-8-sig' writes a UTF-8 BOM (so Excel detects the encoding) through the same handle;
# writing the BOM in binary mode and then reopening with 'w+' would truncate it away.
with open(r'..sh.csv', 'w', newline='', encoding='utf-8-sig') as f:
    writer = csv.writer(f)
    num = 1
    for url in urls:
        # Simulate a browser request
        print("Processing page {}...".format(num))
        res1 = requests.get(url, headers=headers)
        soup1 = BeautifulSoup(res1.text, 'html.parser')
        # Read the listings on the page
        infos = soup1.find('div', {'class': 'content__list'}).find_all('div', {'class': 'content__list--item'})
        # Extract the fields of each listing
        for info in infos:
            house_url = 'https://sh.lianjia.com' + info.a['href']
            title = info.find('p', {'class': 'content__list--item--title'}).find('a').get_text().strip()
            group = title.split()[0][3:]
            price = info.find('span', {'class': 'content__list--item-price'}).get_text()
            tag = info.find('p', {'class': 'content__list--item--bottom oneline'}).get_text()
            mixed = info.find('p', {'class': 'content__list--item--des'}).get_text()
            mix = re.split(r'/', mixed)
            address = mix[0].strip()
            area = mix[1].strip()
            door_orientation = mix[2].strip()
            style = mix[-1].strip()
            region = re.split(r'-', address)[0]
            writer.writerow((house_url, title, group, price, area, address, door_orientation, style, tag, region))
        # Pause between pages so the request rate stays modest
        time.sleep(1)
        print("Page {} done, {} listings.".format(num, len(infos)))
        num += 1

print("**** All done ****")
```

After running the script, we have the complete set of Shanghai rental listings from the platform. From here, some basic filtering is enough to narrow them down to the ones we need.
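The article leaves the filtering step open. As one possible sketch, the snippet below reads rows in the column order written by the crawler and keeps listings in chosen districts under a rent ceiling. It assumes the price text starts with the monthly rent as an integer (for example "5500 元/月"); `monthly_rent` and `filter_listings` are hypothetical helpers, and the two sample rows are made up.

```python
import csv
import io
import re
from typing import Dict, Iterable, List, Optional, Set

# Column order matches the writerow() call in the crawler.
COLUMNS = ["house_url", "title", "group", "price", "area",
           "address", "door_orientation", "style", "tag", "region"]


def monthly_rent(price_text: str) -> Optional[int]:
    """Return the first integer in a price string such as '5500 元/月', or None."""
    match = re.search(r"\d+", price_text)
    return int(match.group()) if match else None


def filter_listings(rows: Iterable[List[str]], regions: Set[str], max_rent: int) -> List[Dict[str, str]]:
    """Keep listings located in `regions` whose rent is at most `max_rent`."""
    kept = []
    for row in rows:
        record = dict(zip(COLUMNS, row))
        rent = monthly_rent(record["price"])
        if record["region"] in regions and rent is not None and rent <= max_rent:
            kept.append(record)
    return kept


# Two hypothetical rows standing in for lines of the crawler's CSV output.
data = io.StringIO(
    "u1,t1,g1,5500 元/月,56㎡,静安-静安寺-a,南,1室1厅1卫,近地铁,静安\n"
    "u2,t2,g2,9000 元/月,80㎡,徐汇-徐家汇-b,南,2室1厅1卫,近地铁,徐汇\n"
)
matches = filter_listings(csv.reader(data), {"静安", "徐汇"}, 6000)
print([m["house_url"] for m in matches])  # ['u1']
```

To run it on the real output, pass a csv.reader over the crawler's CSV file opened with encoding='utf-8-sig' and newline='' instead of the in-memory sample.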
