Use Python to analyze 1.4 billion pieces of data

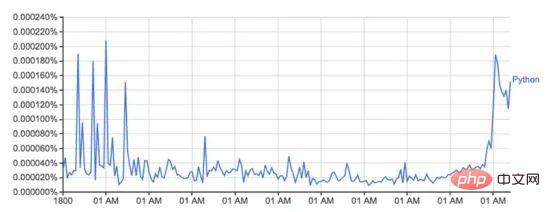

Google Ngram viewer is a fun and useful tool that uses Google's vast treasure trove of data scanned from books to plot changes in word usage over time. For example, the word Python (case sensitive):

This image from books.google.com/ngrams… depicts usage of the word 'Python' Changes over time.

It is driven by Google's n-gram dataset, which records the usage of a specific word or phrase in Google Books for each year the book was printed. While this is not complete (it does not include every book ever published!), there are millions of books in the dataset, spanning the period from the 16th century to 2008. The dataset can be downloaded for free from here.

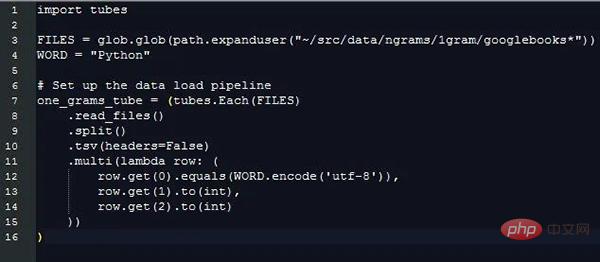

I decided to use Python and my new data loading library PyTubes to see how easy it was to regenerate the plot above.

Challenge

The 1-gram data set can be expanded to 27 Gb of data on the hard disk, which is a large amount of data when read into python. Python can easily process gigabytes of data at a time, but when the data is corrupted and processed, it becomes slower and less memory efficient.

In total, these 1.4 billion pieces of data (1,430,727,243) are scattered in 38 source files, with a total of 24 million (24,359,460) words (and part-of-speech tags, see below), calculated from 1505-2008.

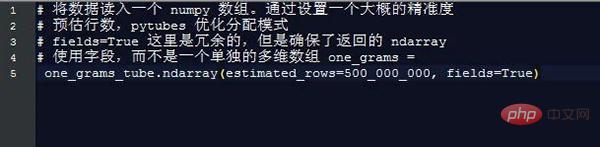

It slows down quickly when processing 1 billion rows of data. And native Python is not optimized to handle this aspect of data. Fortunately, numpy is really good at handling large amounts of data. Using some simple tricks, we can make this analysis feasible using numpy.

Handling strings in python/numpy is complicated. The memory overhead of strings in python is significant, and numpy can only handle strings of known and fixed length. Due to this situation, most of the words are of different lengths, so this is not ideal.

Loading the data

All code/examples below are running on a 2016 Macbook Pro with 8 GB of RAM. If the hardware or cloud instance has better ram configuration, the performance will be better.

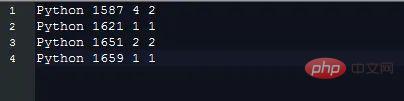

1-gram data is stored in the file in the form of tab key separation, which looks as follows:

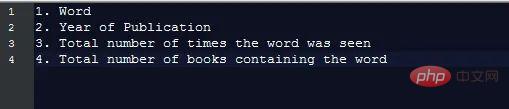

Each piece of data contains the following fields :

In order to generate the chart as required, we only need to know this information, that is:

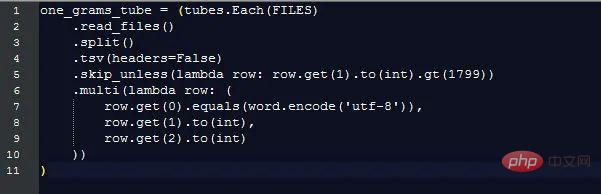

By extracting these Information, the extra cost of processing string data of different lengths is ignored, but we still need to compare the values of different strings to distinguish which rows of data have the fields we are interested in. This is what pytubes can do:

After almost 170 seconds (3 minutes), one_grams is a numpy array containing almost 1.4 billion rows of data, looking like this (adding table headers for illustration):

╒═══════════╤════════╤════ │ ═ ══════╡

│ 0 │ 1799 │ 2 │

├───────────┼──────────┼── ───────┤

│ 0 │ 1804 │ 1 │

├───────────┼─────────┼─ ────────┤

│ 0 │ 1805 │ 1 │

├───────────┼─────────┼ ─────────┤

│ 0 │ 1811 │ 1 │

├───────────┼──────── ┼─────────┤

│ 0 │ 1820 │ ... │

╘═══════════╧═════ ═══╧═════════╛

From here, it's just a matter of using numpy methods to calculate something:

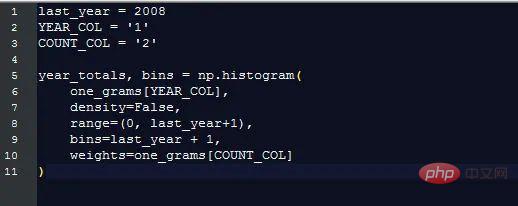

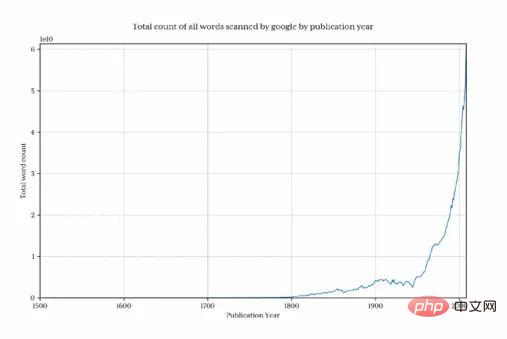

Total word usage per year

Google shows the percentage of occurrences of each word (number of times a word appears in this year/total number of all words in this year), which is more useful than just counting the original words. In order to calculate this percentage, we need to know what the total number of words is.

Fortunately, numpy makes this very easy:

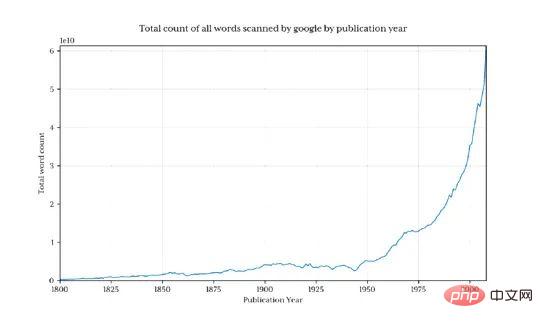

Plot this graph to show how many words Google collects each year:

It is clear that before 1800, the amount of data declined rapidly, thus distorting the final results and hiding the patterns of interest. To avoid this problem, we only import data after 1800:

This returns 1.3 billion rows of data (only 3.7% before 1800)

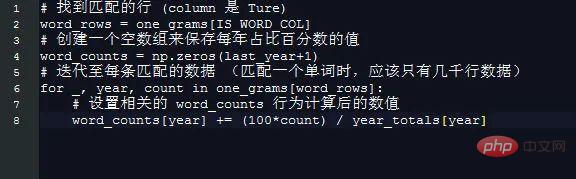

Python’s annual percentage share

Getting Python’s annual percentage share is now particularly simple.

Use a simple trick to create an array based on the year. The 2008 element length means that the index of each year is equal to the number of the year. Therefore, for example, 1995 is just a matter of getting the element of 1995. .

None of this is worth using numpy to do:

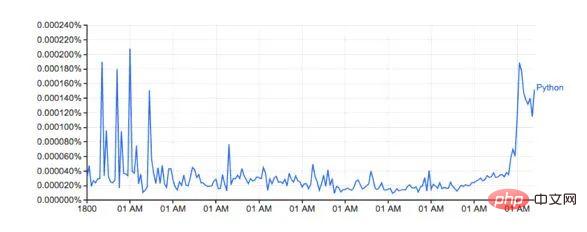

Plot the result of word_counts:

The shape looks similar to Google's version

The actual percentages do not match. I think it is because the downloaded data set contains different words. (For example: Python_VERB). This dataset is not explained very well on the google page, and raises several questions: How do we use Python as a verb?

Does the total calculation amount of ‘Python’ include ‘Python_VERB’? etc

Fortunately, we all know that the method I used produces an icon that is very similar to Google, and the related trends are not affected, so for this exploration, I am not going to try to fix it.

Performance

Google generates the image in about 1 second, which is reasonable compared to the 8 minutes of this script. Google's word count backend works from an explicit view of the prepared dataset.

For example, calculating the total word usage for the previous year in advance and storing it in a separate lookup table will save significant time. Likewise, keeping the word usage in a separate database/file and then indexing the first column will eliminate almost all the processing time.

This exploration really shows that using numpy and the fledgling pytubes with standard commodity hardware and Python, it is possible to load, process and extract arbitrary statistics from a billion rows of data in a reasonable amount of time. Possible,

Language Wars

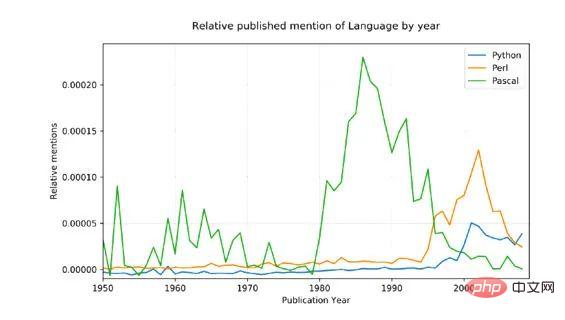

To demonstrate this concept with a slightly more complex example, I decided to compare three related mentioned programming languages: Python, Pascal, and Perl.

The source data is noisy (it contains all English words used, not just mentions of programming languages, and, for example, python has non-technical meanings too!), so to adjust for this, We've done two things:

Only name forms with capital letters are matched (Python, not python)

The total number of mentions for each language has been converted from 1800 to The percentage average for 1960, which should give a reasonable baseline considering Pascal was first mentioned in 1970.

Results:

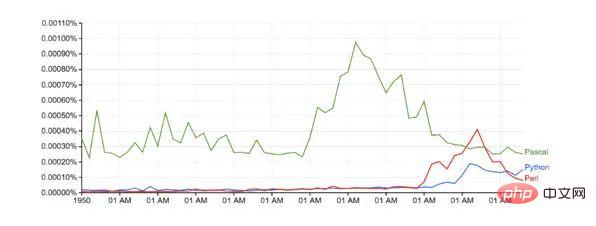

Compared to Google (without any baseline adjustment):

Compared to Google (without any baseline adjustment):

Running time: just over 10 minutes

Future PyTubes improvements

At this stage, pytubes only has the concept of a single integer, which is 64 bits. This means that the numpy arrays generated by pytubes use i8 dtypes for all integers. In some places (like ngrams data), 8-bit integers are a bit overkill and waste memory (the total ndarray is 38Gb, dtypes can easily reduce it by 60%). I plan to add some level 1, 2 and 4 bit integer support (github.com/stestagg/py… )

More filtering logic - Tube.skip_unless() is a relatively simple filter line method, but lacks the ability to combine conditions (AND/OR/NOT). This can reduce the size of loaded data faster in some use cases.

Better string matching - simple tests like: startswith, endswith, contains, and is_one_of can be easily added to significantly improve the effectiveness of loading string data.

The above is the detailed content of Use Python to analyze 1.4 billion pieces of data. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1377

1377

52

52

Do mysql need to pay

Apr 08, 2025 pm 05:36 PM

Do mysql need to pay

Apr 08, 2025 pm 05:36 PM

MySQL has a free community version and a paid enterprise version. The community version can be used and modified for free, but the support is limited and is suitable for applications with low stability requirements and strong technical capabilities. The Enterprise Edition provides comprehensive commercial support for applications that require a stable, reliable, high-performance database and willing to pay for support. Factors considered when choosing a version include application criticality, budgeting, and technical skills. There is no perfect option, only the most suitable option, and you need to choose carefully according to the specific situation.

How to use mysql after installation

Apr 08, 2025 am 11:48 AM

How to use mysql after installation

Apr 08, 2025 am 11:48 AM

The article introduces the operation of MySQL database. First, you need to install a MySQL client, such as MySQLWorkbench or command line client. 1. Use the mysql-uroot-p command to connect to the server and log in with the root account password; 2. Use CREATEDATABASE to create a database, and USE select a database; 3. Use CREATETABLE to create a table, define fields and data types; 4. Use INSERTINTO to insert data, query data, update data by UPDATE, and delete data by DELETE. Only by mastering these steps, learning to deal with common problems and optimizing database performance can you use MySQL efficiently.

MySQL can't be installed after downloading

Apr 08, 2025 am 11:24 AM

MySQL can't be installed after downloading

Apr 08, 2025 am 11:24 AM

The main reasons for MySQL installation failure are: 1. Permission issues, you need to run as an administrator or use the sudo command; 2. Dependencies are missing, and you need to install relevant development packages; 3. Port conflicts, you need to close the program that occupies port 3306 or modify the configuration file; 4. The installation package is corrupt, you need to download and verify the integrity; 5. The environment variable is incorrectly configured, and the environment variables must be correctly configured according to the operating system. Solve these problems and carefully check each step to successfully install MySQL.

MySQL download file is damaged and cannot be installed. Repair solution

Apr 08, 2025 am 11:21 AM

MySQL download file is damaged and cannot be installed. Repair solution

Apr 08, 2025 am 11:21 AM

MySQL download file is corrupt, what should I do? Alas, if you download MySQL, you can encounter file corruption. It’s really not easy these days! This article will talk about how to solve this problem so that everyone can avoid detours. After reading it, you can not only repair the damaged MySQL installation package, but also have a deeper understanding of the download and installation process to avoid getting stuck in the future. Let’s first talk about why downloading files is damaged. There are many reasons for this. Network problems are the culprit. Interruption in the download process and instability in the network may lead to file corruption. There is also the problem with the download source itself. The server file itself is broken, and of course it is also broken when you download it. In addition, excessive "passionate" scanning of some antivirus software may also cause file corruption. Diagnostic problem: Determine if the file is really corrupt

How to optimize MySQL performance for high-load applications?

Apr 08, 2025 pm 06:03 PM

How to optimize MySQL performance for high-load applications?

Apr 08, 2025 pm 06:03 PM

MySQL database performance optimization guide In resource-intensive applications, MySQL database plays a crucial role and is responsible for managing massive transactions. However, as the scale of application expands, database performance bottlenecks often become a constraint. This article will explore a series of effective MySQL performance optimization strategies to ensure that your application remains efficient and responsive under high loads. We will combine actual cases to explain in-depth key technologies such as indexing, query optimization, database design and caching. 1. Database architecture design and optimized database architecture is the cornerstone of MySQL performance optimization. Here are some core principles: Selecting the right data type and selecting the smallest data type that meets the needs can not only save storage space, but also improve data processing speed.

Does mysql need the internet

Apr 08, 2025 pm 02:18 PM

Does mysql need the internet

Apr 08, 2025 pm 02:18 PM

MySQL can run without network connections for basic data storage and management. However, network connection is required for interaction with other systems, remote access, or using advanced features such as replication and clustering. Additionally, security measures (such as firewalls), performance optimization (choose the right network connection), and data backup are critical to connecting to the Internet.

Solutions to the service that cannot be started after MySQL installation

Apr 08, 2025 am 11:18 AM

Solutions to the service that cannot be started after MySQL installation

Apr 08, 2025 am 11:18 AM

MySQL refused to start? Don’t panic, let’s check it out! Many friends found that the service could not be started after installing MySQL, and they were so anxious! Don’t worry, this article will take you to deal with it calmly and find out the mastermind behind it! After reading it, you can not only solve this problem, but also improve your understanding of MySQL services and your ideas for troubleshooting problems, and become a more powerful database administrator! The MySQL service failed to start, and there are many reasons, ranging from simple configuration errors to complex system problems. Let’s start with the most common aspects. Basic knowledge: A brief description of the service startup process MySQL service startup. Simply put, the operating system loads MySQL-related files and then starts the MySQL daemon. This involves configuration

How to optimize database performance after mysql installation

Apr 08, 2025 am 11:36 AM

How to optimize database performance after mysql installation

Apr 08, 2025 am 11:36 AM

MySQL performance optimization needs to start from three aspects: installation configuration, indexing and query optimization, monitoring and tuning. 1. After installation, you need to adjust the my.cnf file according to the server configuration, such as the innodb_buffer_pool_size parameter, and close query_cache_size; 2. Create a suitable index to avoid excessive indexes, and optimize query statements, such as using the EXPLAIN command to analyze the execution plan; 3. Use MySQL's own monitoring tool (SHOWPROCESSLIST, SHOWSTATUS) to monitor the database health, and regularly back up and organize the database. Only by continuously optimizing these steps can the performance of MySQL database be improved.