Author: Chen Wei, Ph.D., is an expert in storage and computing integration, GPU architecture, and AI, and holds a senior professional title. He is an expert with the Zhongguancun Cloud Computing Industry Alliance and the China Optical Engineering Society, a member of the Association for Computing Machinery (ACM), and a professional member of the China Computer Federation (CCF). He was previously chief scientist of an AI company and head of 3D NAND design at a major memory chip manufacturer. His main achievements include the first domestic high-power reconfigurable storage and computing processor product architecture (prototype internal testing completed at a major Internet company), the first domain-specific AI processor for medicine (already applied), the first AI acceleration compiler compatible with RISC-V, x86, and ARM platforms (already applied in cooperation with Alibaba Pingtouge and Xinlai), the establishment of China's first 3D NAND chip architecture and design team (benchmarked against Samsung), and the first embedded flash memory compiler in China (benchmarked against TSMC and applied at the platform level).

On the last day of September 2022, at Tesla's AI Day, Tesla's "Optimus" robot officially debuted. According to Tesla engineers, AI Day 2022 was the first time the robot operated without any external support. It walked with dignity and greeted the audience in a general direction. Apart from slightly sluggish movements, everything else looked very natural.

Tesla also showed video of the robot "working" around the office. The robot, named Optimus, carried items, watered plants, and even worked autonomously in a factory for a while. "Our goal is to build useful humanoid robots as quickly as possible," Tesla said, adding that it aims to price the robot below $20,000, cheaper than Tesla's electric cars.

Tesla's robots are this capable not only because of the company's accumulated AI technology but also, in large part, because of its strong self-developed AI chip. This chip is neither a traditional CPU nor a GPU; it takes a form better suited to complex AI computation.

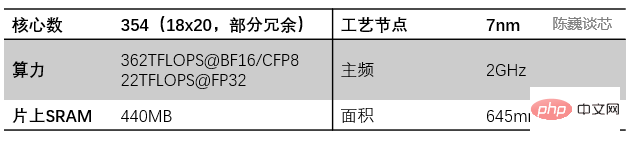

Comparison of D1 processor and other autonomous driving/robotics processors

Tesla builds its own chip because GPUs are not designed specifically for deep learning training, which makes them relatively inefficient at these computing tasks. The goal of Tesla and Dojo (Dojo is the name of both the training module and the core architecture) is to "achieve the best AI training performance, and enable larger and more complex neural network models with high energy efficiency and cost-effective computing." Tesla's target is to build a computer that is better at AI computing than any other, so that it will not need GPUs in the future.

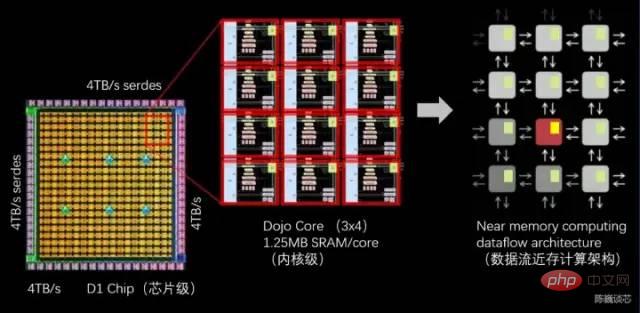

A key challenge in building a supercomputer is scaling computing power while maintaining high bandwidth (difficult) and low latency (very difficult). Tesla's solution is a distributed 2D (planar) architecture composed of powerful chips and a unique mesh structure, in other words a dataflow near-memory computing architecture.

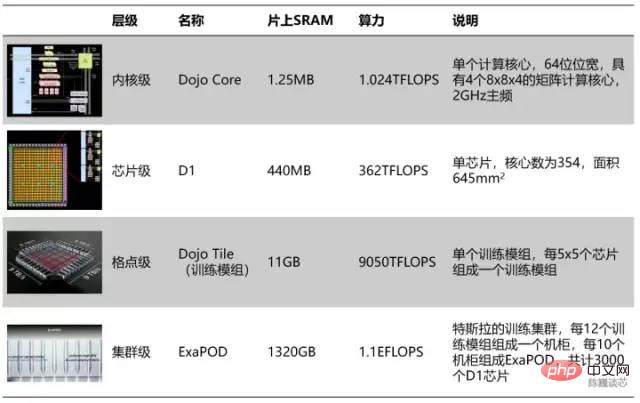

Hierarchical division of Tesla computing units

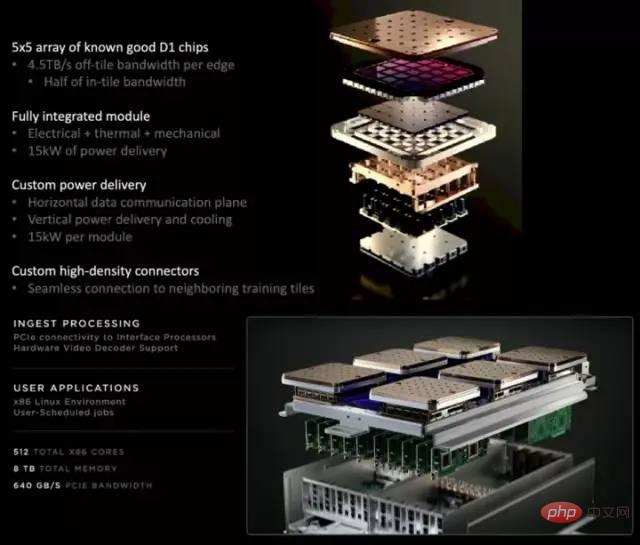

According to the hierarchy, every 354 Dojo cores form a D1 chip, and every 25 chips form a training module. The final 120 training modules form a set of ExaPOD computing clusters, with a total of 3,000 D1 chips.
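The hierarchy can be checked with a few lines of arithmetic. This is a minimal sketch that uses only the counts quoted above; the variable names are illustrative.

```python
# Hierarchy figures as quoted in the text above.
CORES_PER_CHIP = 354      # usable Dojo cores per D1 chip
CHIPS_PER_MODULE = 25     # D1 chips per training module
MODULES_PER_EXAPOD = 120  # training modules per ExaPOD

chips_per_exapod = CHIPS_PER_MODULE * MODULES_PER_EXAPOD
cores_per_module = CORES_PER_CHIP * CHIPS_PER_MODULE
cores_per_exapod = CORES_PER_CHIP * chips_per_exapod

print(f"D1 chips per ExaPOD:   {chips_per_exapod:,}")
print(f"Dojo cores per module: {cores_per_module:,}")
print(f"Dojo cores per ExaPOD: {cores_per_exapod:,}")
```

The chip count reproduces the 3,000 D1 chips stated in the text, and the same figures imply just over one million Dojo cores in a full ExaPOD.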

One Tesla Dojo training module can match the performance of 6 groups of GPU servers at less than the cost of a single group. The computing power of a single Dojo server reaches 54PFLOPS. Just 4 Dojo cabinets can replace 72 GPU racks containing 4,000 GPUs. Dojo cuts AI computing (training) work that normally takes months down to 1 week. This "brute-force computing works wonders" approach is in keeping with Tesla's autonomous driving style, and the chip will clearly accelerate the progress of Tesla's AI technology.

Of course, this chip module is not yet "perfect". Although it adopts dataflow near-memory computing, its energy efficiency (computing power per watt) does not exceed that of a GPU. A single server draws enormous power, with current reaching 2000A, and requires a specially customized power supply. The Tesla D1 chip is already at the structural limit of the near-memory computing architecture. If Tesla adopted an "in-memory computing" or "in-memory logic" architecture, chip performance or energy efficiency might improve greatly.

The Tesla Dojo chip server consists of 12 Dojo training modules (2 layers, 6 per layer)

The Dojo core is a high-throughput core with 8-way decode, 4 matrix calculation units (8x8), and 1.25 MB of local SRAM. Even so, the Dojo core is not large: by comparison, Fujitsu's A64FX occupies more than twice the area on the same process node.

Through the structure of the Dojo core, we can see Tesla’s design philosophy on general AI processors:

For Tesla and Musk, Dojo is not only shaped and laid out like a dojo; its design philosophy is also closely tied to the spirit of the dojo, fully embodying a "less is more" processor design aesthetic.

Let’s first take a look at the structure and characteristics of each Dojo core.

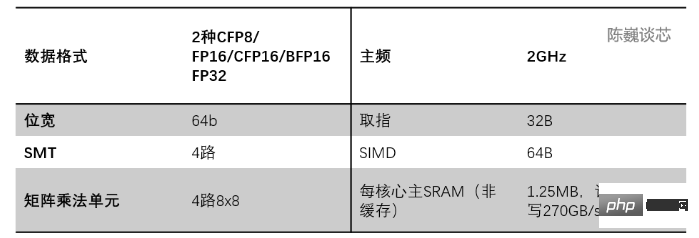

Each Dojo core is a processor with vector calculation/matrix calculation capabilities, and has complete instruction fetching, decoding, and execution components. Dojo core has a CPU-like style that seems to be more adaptable to different algorithms and branching code than GPU. The instruction set of D1 is similar to RISC-V. The processor runs at 2GHz and has four sets of 8x8 matrix multiplication calculation units. It also has a set of custom vector instructions focused on accelerating AI calculations.

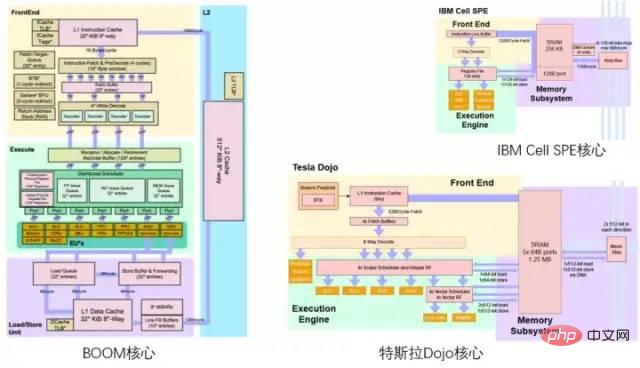

Readers familiar with RISC-V can probably tell that the color scheme of Tesla’s Dojo architecture diagram seems to pay tribute to Berkeley’s BOOM processor architecture diagram, with yellow at the top, green below, and purple at the bottom.

## Comparison between Tesla Dojo core and Berkeley BOOM/IBM Cell core

2.1 D1 core overall architecture

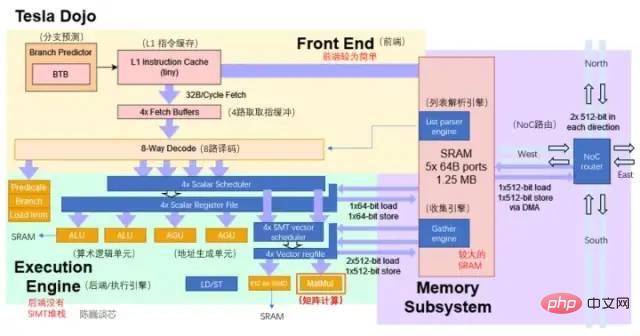

D1 core structure (the blue part is the added/modified details)

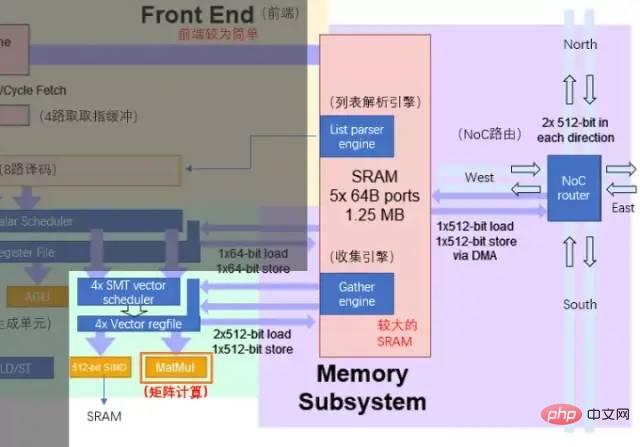

Judging from the current architecture diagram, the Dojo core consists of 4 parts: front end, execution units, SRAM, and NoC routing. It has fewer control components than either a CPU or a GPU. It has a CPU-like AGU and a matrix computing unit conceptually similar to a GPU's Tensor Core.

The core structure of Dojo is more streamlined than BOOM. It lacks components such as Rename that improve the utilization of execution units, and it would be difficult for it to support virtual memory. The advantage of this design is that it shrinks the area taken by control logic, so more of the chip can go to the execution units. Each Dojo core provides 1.024TFLOPS of computing power, almost all of it from the matrix computing units. The matrix computing units and SRAM therefore jointly determine the energy efficiency of the D1 processor.
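The 1.024TFLOPS figure is consistent with a simple back-of-the-envelope estimate from the clock rate and matrix unit count quoted in this article. The sketch below assumes that every cell of each 8x8 unit completes one multiply-accumulate per cycle and that one multiply-accumulate counts as two floating-point operations; neither assumption is stated by Tesla in the source.

```python
CLOCK_HZ = 2e9       # 2 GHz core clock (from the text)
MATRIX_UNITS = 4     # four matrix units per core (from the text)
TILE = 8             # each unit is 8x8 (from the text)
FLOPS_PER_MAC = 2    # assumption: one multiply plus one add

# Assumption: every cell of every unit performs one MAC per cycle.
macs_per_cycle = MATRIX_UNITS * TILE * TILE
peak_flops = macs_per_cycle * FLOPS_PER_MAC * CLOCK_HZ

print(f"MACs per cycle: {macs_per_cycle}")
print(f"Peak per core:  {peak_flops / 1e12:.3f} TFLOPS")
```

Under these assumptions the matrix units alone account for the whole quoted figure, which matches the observation that they supply almost all of the core's computing power.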

Main parameters of Dojo core

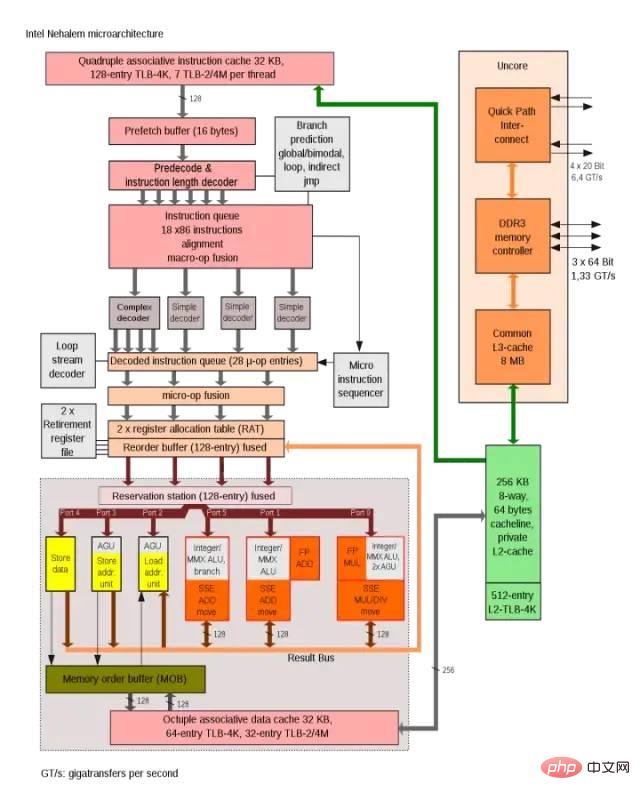

Intel Nehalem architecture uses AGU to improve single-cycle address access efficiency

The way Dojo cores are connected more closely resembles the way SPE cores are connected in IBM's Cell processor. Key similarities include:

The core's calculation and matrix multiplication modules and its storage are introduced below.

The matrix computing unit is the heart of the Dojo architecture's computing power. Data movement between the matrix computing unit and the core's SRAM accounts for most of the core's data-handling power consumption.

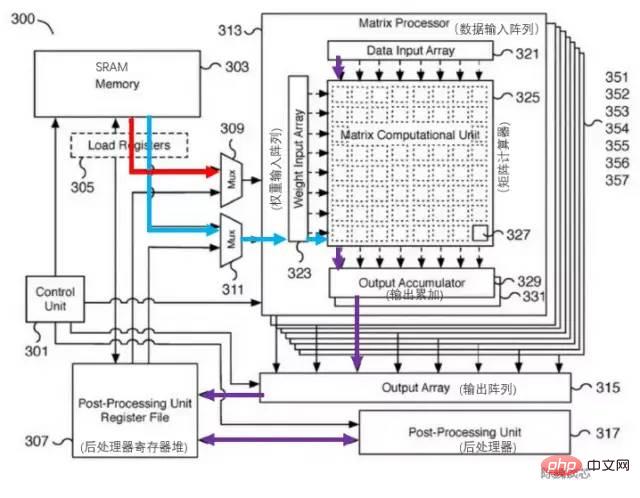

Tesla's patent for the matrix calculation unit is as follows. The key component of this module is an 8x8 matrix-matrix multiplication unit (labeled "matrix calculator" in the figure). Its inputs are the data input array and the weight input array, and the results of the matrix multiplication are accumulated directly at the output. Each Dojo core includes four 8x8 matrix multiplication units.

Tesla Matrix Computing Unit Patent

Since the architecture diagram shows only an L1 cache and SRAM, a bold guess is that Tesla streamlined the RISC-V cache structure to save cache area and reduce latency. The 1.25MB SRAM block in each core provides 2x512-bit reads (for the weights and data of AI calculations) and 512-bit writes for the SIMD and matrix computing units, as well as 64-bit reads and writes for the integer register file. The main computational data flow runs from SRAM to the SIMD and matrix multiplication units.

The main processing flow of the matrix calculation unit is:

Weights are loaded from SRAM through the multiplexer (Mux) into the weight input array, while data in SRAM is loaded into the data input array.

The input data and weights are multiplied in the matrix computation unit (whether as an inner product or an outer product is unclear).

The multiplication results are sent to the output accumulator for accumulation. Matrices larger than 8x8 can be handled here by splitting them into tiles and stitching the results together.

The accumulated output is passed to the post-processor register file for buffering and then post-processed (operations such as activation, pooling, and padding can be performed).

The entire calculation process is controlled directly by the control unit, without CPU intervention.
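The flow above can be mimicked in software. The following is a minimal sketch, not Tesla's implementation: it splits square matrices into 8x8 tiles, accumulates partial products per output tile (the role of the output accumulator), and applies ReLU as a stand-in post-processing step. All function names are hypothetical, and the sketch assumes matrix sizes that are multiples of 8.

```python
TILE = 8  # tile edge handled by one matrix unit (from the text)

def matmul_tile(a, b, acc):
    """Multiply two TILE x TILE tiles and add the result into acc in place."""
    for i in range(TILE):
        for j in range(TILE):
            acc[i][j] += sum(a[i][k] * b[k][j] for k in range(TILE))

def get_tile(m, row, col):
    """Copy the TILE x TILE block of m whose top-left corner is (row, col)."""
    return [r[col:col + TILE] for r in m[row:row + TILE]]

def tiled_matmul_relu(data, weights):
    """Compute relu(data @ weights) for square matrices sized in multiples of TILE."""
    n = len(data)
    assert n % TILE == 0 and len(weights) == n
    assert all(len(r) == n for r in data + weights)
    out = [[0.0] * n for _ in range(n)]
    for bi in range(0, n, TILE):
        for bj in range(0, n, TILE):
            acc = [[0.0] * TILE for _ in range(TILE)]  # output accumulator
            for bk in range(0, n, TILE):               # walk the shared dimension
                matmul_tile(get_tile(data, bi, bk), get_tile(weights, bk, bj), acc)
            for i in range(TILE):                      # post-processing: ReLU
                for j in range(TILE):
                    out[bi + i][bj + j] = max(0.0, acc[i][j])
    return out

n = 16
data = [[float((i - j) % 5 - 2) for j in range(n)] for i in range(n)]
weights = [[float((i * j) % 3 - 1) for j in range(n)] for i in range(n)]
result = tiled_matmul_relu(data, weights)
print(len(result), len(result[0]))
```

Each output tile needs only its own accumulator while the shared dimension is walked, which is why a fixed 8x8 unit can serve matrices of any multiple-of-8 size.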

Data interaction between execution unit and SRAM/NoC

The SRAM inside the Dojo core has very high read and write bandwidth: it can load at 400 GB/sec and write at 270 GB/sec. The Dojo core instruction set has dedicated network transfer instructions, routed through the NoC, that can move data directly into or out of the SRAM of other cores in the D1 chip or even elsewhere in the Dojo training module.

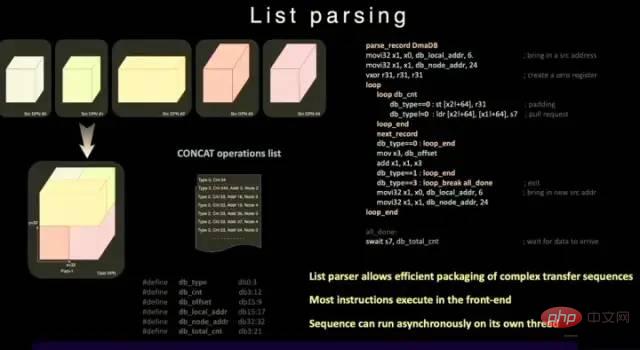

Unlike ordinary SRAM, Dojo's SRAM includes a list parsing engine and a gather engine. List parsing is one of the key features of the D1 chip: the list parsing engine can pack complex transfer sequences of different data types to improve transfer efficiency.

List parsing function

To further reduce latency, area, and complexity, D1 does not support virtual memory. In a conventional processor, the memory addresses used by a program are not physical addresses; the CPU translates them into physical addresses using the paging structures set up by the operating system.
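To make the contrast concrete, here is a minimal sketch of the two addressing paths. The 4 KiB page size, the single-level page table, and both function names are illustrative assumptions and do not describe any specific processor.

```python
PAGE_SIZE = 4096  # assumption: 4 KiB pages

def translate(vaddr, page_table):
    """Conventional path: map a virtual address to a physical one via a page table."""
    vpn, offset = divmod(vaddr, PAGE_SIZE)
    if vpn not in page_table:
        raise LookupError(f"page fault at virtual address {vaddr:#x}")
    return page_table[vpn] * PAGE_SIZE + offset

def direct(addr):
    """Path without virtual memory, as described for D1: the address is used as-is."""
    return addr

page_table = {0: 7, 1: 3}  # hypothetical virtual page -> physical frame mapping
print(hex(translate(0x1234, page_table)))
print(hex(direct(0x1234)))
```

The translated path needs a table lookup (and, in real hardware, a TLB and page-walk logic) on every access, which is the latency and area that skipping virtual memory avoids.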

In the D1 core, the 4-way SMT function allows explicit parallelism in calculations, simplifying the AGU and addressing calculation methods to allow Tesla to access SRAM with low enough latency. The advantage is that it avoids the delay of intermediate L1 data cache.

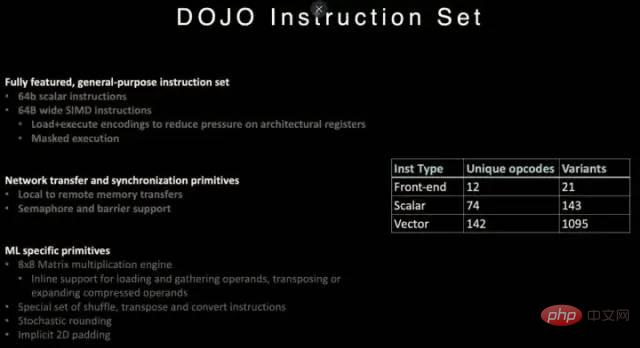

D1 Processor Instruction Set

The D1 instruction set draws on the RISC-V architecture and adds custom instructions, especially for vector calculations.

The D1 instruction set supports 64-bit scalar instructions and 64-byte SIMD instructions, network transfer and synchronization primitives, and specialized primitives for machine learning and deep learning (such as 8x8 matrix calculations).

For network data transfer and synchronization, it provides primitives for transferring data from local storage (SRAM) to remote storage, as well as semaphore and barrier constraints. This allows D1 to support multi-threading, and its memory operation instructions can run across multiple D1 cores.
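The role of a barrier in this kind of local-to-remote transfer can be illustrated with ordinary threads. This is only an analogy using Python's standard `threading.Barrier`, not Dojo's actual primitives: each hypothetical "core" pushes a value into its neighbor's inbox, and the barrier guarantees that no core reads its own inbox before every push has finished.

```python
import threading

NUM_CORES = 4                      # hypothetical number of cooperating cores
inbox = [None] * NUM_CORES         # one "remote-writable" slot per core
seen = [None] * NUM_CORES          # what each core read after the barrier
barrier = threading.Barrier(NUM_CORES)

def core(idx):
    local_value = idx * 10
    neighbor = (idx + 1) % NUM_CORES
    inbox[neighbor] = local_value  # push local data to the neighbor's storage
    barrier.wait()                 # wait until every core has finished pushing
    seen[idx] = inbox[idx]         # now it is safe to consume the received data

threads = [threading.Thread(target=core, args=(i,)) for i in range(NUM_CORES)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(seen)
```

Without the barrier, a core could read its inbox before its neighbor had written to it; a semaphore could enforce the same ordering pairwise instead of across the whole group.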

For machine learning and deep learning, Tesla defines instructions including mathematical operations such as shuffle, transpose, and convert, as well as instructions related to stochastic rounding and padding.
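Stochastic rounding is worth a short illustration, since it is what keeps low-precision accumulation unbiased. The sketch below rounds to integers for simplicity; hardware applies the same idea to the discarded low-order mantissa bits. The function name and the fixed seed are illustrative choices.

```python
import math
import random

def stochastic_round(x, rng):
    """Round x to a neighboring integer, rounding up with probability equal to its fractional part."""
    lower = math.floor(x)
    frac = x - lower
    return lower + (1 if rng.random() < frac else 0)

rng = random.Random(0)  # fixed seed so runs are repeatable
samples = [stochastic_round(0.3, rng) for _ in range(20000)]
mean = sum(samples) / len(samples)
print(mean)  # close to 0.3 on average, whereas round(0.3) always gives 0
```

Because the expected value of the rounded result equals the input, many small updates that round-to-nearest would discard still contribute on average.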

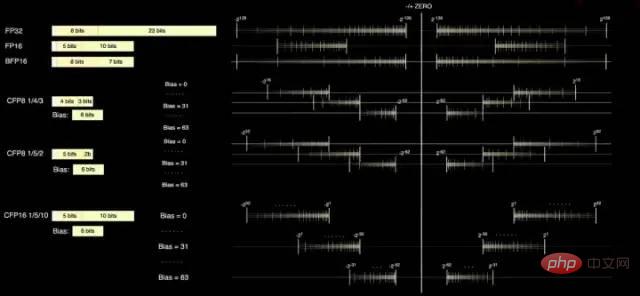

The D1 core has two standard calculation formats, FP32 and FP16, and also supports the BFP16 format, which is more suitable for inference. To gain the performance benefits of mixed-precision computing, D1 also uses the 8-bit CFP8 format for lower precision and higher throughput.

The advantage of using CFP8 is that it can save more multiplier space to achieve almost the same computing power, which is very helpful to increase the computing power density of D1.

The Dojo compiler can slide the mantissa precision to cover a wider range and precision. Up to 16 different vector formats can be in use at any given time, which gives the flexibility to increase computing power.
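The trade-off between range and precision in a small floating-point format can be made concrete with a little arithmetic. This sketch models a generic IEEE-like layout and is not Tesla's CFP8 definition: it assumes exponent code 0 is reserved for subnormals and that no exponent code is reserved for infinity or NaN. The three example layouts are hypothetical.

```python
def format_range(exp_bits, man_bits, bias):
    """Return (smallest normal, largest finite) value of a hypothetical small float format.

    Assumptions: IEEE-like layout, exponent code 0 reserved for subnormals,
    and no exponent code reserved for infinity or NaN.
    """
    max_code = 2 ** exp_bits - 1
    smallest_normal = 2.0 ** (1 - bias)
    largest = (2.0 - 2.0 ** -man_bits) * 2.0 ** (max_code - bias)
    return smallest_normal, largest

# Hypothetical 8-bit layouts: 1 sign bit plus exponent and mantissa bits.
for exp_bits, man_bits, bias in [(4, 3, 7), (5, 2, 15), (4, 3, 11)]:
    smallest, largest = format_range(exp_bits, man_bits, bias)
    print(f"e{exp_bits}m{man_bits} bias={bias}: {smallest:g} .. {largest:g}")
```

Moving a bit from the mantissa to the exponent widens the range at the cost of precision, while changing the bias shifts the same window toward smaller or larger magnitudes.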

D1 processor data format

According to Tesla's information, the matrix multiplication unit can compute in CFP8 (with data stored in CFP16 format).

The D1 processor is manufactured by TSMC and uses a 7-nanometer manufacturing process. It has 50 billion transistors and a chip area of 645mm², which is smaller than Nvidia’s A100 (826 mm²) and AMD Arcturus (750 mm²).

D1 processor structure

Each D1 processor is a tiled array of 18 x 20 Dojo cores, of which 354 are available. (Only 354 of the 360 cores are used, for yield and per-processor core stability reasons.)

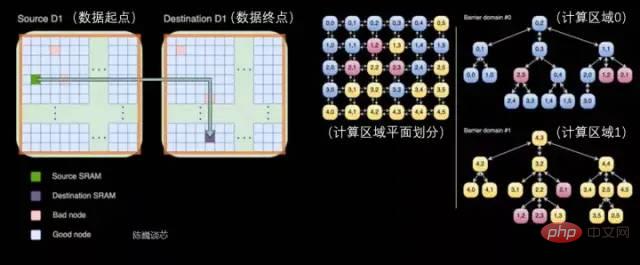

Each Dojo core has 1.25MB of SRAM as its main weight and data storage. Dojo cores are connected through on-chip network routing (NoC routing) and synchronize data over the NoC rather than through shared data caches. The NoC can handle 8 packets across node boundaries in 4 directions (east, south, west, north) at 64 B per clock cycle in each direction, that is, one packet in and one packet out of the mesh toward each adjacent Dojo core in all four directions. The NoC router can also perform a 64 B bidirectional read and write to the SRAM within the core once per cycle.
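A small model helps put these numbers in context. The sketch below derives per-link bandwidth from the 64 B per cycle figure and the 2GHz clock quoted in this article, and models the cores as a plain 2D mesh without wraparound. Treating the array as 18 rows by 20 columns and using Manhattan distance as the minimum hop count are assumptions; the source does not describe the routing algorithm.

```python
CLOCK_HZ = 2e9         # 2 GHz (from the text)
BYTES_PER_CYCLE = 64   # per direction, per link (from the text)
ROWS, COLS = 18, 20    # assumption: 18 rows by 20 columns

link_bandwidth = BYTES_PER_CYCLE * CLOCK_HZ  # bytes/s, one direction of one link

def neighbors(row, col):
    """Cores directly linked to (row, col) in a 2D mesh without wraparound."""
    steps = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # north, south, west, east
    return [(row + dr, col + dc) for dr, dc in steps
            if 0 <= row + dr < ROWS and 0 <= col + dc < COLS]

def hops(src, dst):
    """Minimum number of link traversals between two cores (Manhattan distance)."""
    return abs(src[0] - dst[0]) + abs(src[1] - dst[1])

print(link_bandwidth / 1e9, "GB/s per direction")
print(neighbors(0, 0))
print(hops((0, 0), (ROWS - 1, COLS - 1)))
```

Corner cores have only two neighbors, and a transfer between opposite corners of one chip crosses dozens of links, which is why placing communicating work on nearby cores matters in a mesh.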

Cross-processor transfer and task division within the D1 processor

Each Dojo core is a relatively complete CPU-like core with matrix computing capabilities (it is called CPU-like here because each core has its own matrix computing unit and a relatively small front end). Its dataflow architecture is somewhat similar to SambaNova's two-dimensional dataflow grid structure: data flows directly between processing cores without returning to memory.

The D1 chip runs at 2GHz and has a huge 440MB of SRAM. Tesla centers its design on SRAM distributed across the computing grid: a large amount of faster, closer on-chip storage, together with transfers between on-chip memories, reduces the frequency of memory accesses and improves the performance of the whole system. This is a clear hallmark of a dataflow storage and computing integrated architecture (dataflow near-memory computing).

Each D1 chip has 576 bidirectional SerDes channels distributed around it and can be connected to other D1 chips with a single-side bandwidth of 4 TB/sec.

D1 processor chip main parameters

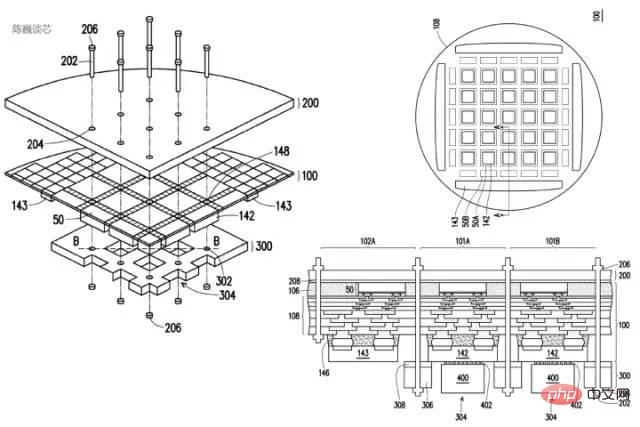

Each D1 training module is a 5x5 array of D1 chips interconnected in a two-dimensional mesh. The on-chip SRAM across all cores reaches an astonishing 11GB, and power consumption reaches an equally astonishing 15kW. The energy efficiency is 0.6TFLOPS/W at BF16/CFP8. (I hope I calculated this wrong; otherwise the energy efficiency is indeed not ideal.) The module also has 32GB of external shared HBM memory (HBM2e or HBM3).
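The module-level figures can be reproduced from the per-core numbers given in this article. This is a rough sketch that assumes peak throughput scales linearly with core count and uses decimal units (1 GB = 1000 MB).

```python
CORES_PER_CHIP = 354
CHIPS_PER_MODULE = 25
SRAM_PER_CORE_MB = 1.25
CORE_TFLOPS = 1.024      # per-core figure quoted earlier in the article
MODULE_POWER_W = 15_000  # 15kW per training module

chip_sram_mb = CORES_PER_CHIP * SRAM_PER_CORE_MB
module_sram_gb = chip_sram_mb * CHIPS_PER_MODULE / 1000  # decimal GB
module_tflops = CORE_TFLOPS * CORES_PER_CHIP * CHIPS_PER_MODULE
tflops_per_watt = module_tflops / MODULE_POWER_W

print(f"SRAM per chip:     {chip_sram_mb:.1f} MB")
print(f"SRAM per module:   {module_sram_gb:.2f} GB")
print(f"Module peak:       {module_tflops / 1000:.2f} PFLOPS")
print(f"Energy efficiency: {tflops_per_watt:.2f} TFLOPS/W")
```

The results line up with the roughly 440MB per chip, 11GB per module, and 0.6TFLOPS/W quoted in the text, so the efficiency estimate follows directly from the published figures under the linear-scaling assumption.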

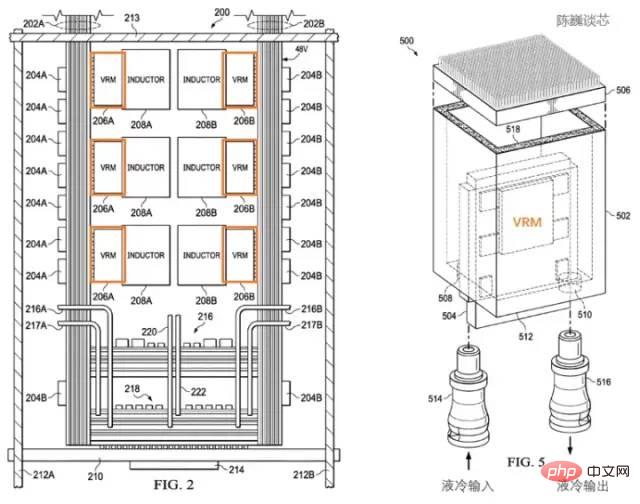

Tesla D1 processor heat dissipation structure patent

Tesla uses a dedicated voltage regulation module (VRM) and thermal structure to manage power consumption. Power management here has two main purposes:

Reduce unnecessary power loss and improve energy efficiency ratio.

Reduce processor module failure caused by thermal deformation.

According to Tesla’s patent, the power regulation module is mounted perpendicular to the chip itself, which greatly reduces the area it occupies in the processor plane, and liquid cooling can quickly even out processor temperatures.

Tesla D1 processor heat dissipation and packaging structure patent

The training module uses InFO_SoW (System on Wafer) packaging to increase the interconnection density between chips. In addition to TSMC's InFO_SoW technology, the package uses Tesla's own mechanical packaging structure to reduce processor module failures.

40 I/O chips at the outer edge of each training module achieve 36 TB/s aggregate bandwidth, or 10 TB/s span bandwidth. Each layer of training modules is connected to an ultra-fast storage system: 640GB of running memory can provide more than 18TB/s of bandwidth, plus more than 1TB/s of network switching bandwidth.

The data transmission direction is parallel to the chip plane, and the power supply and liquid cooling directions are perpendicular to the chip plane. This is a very beautiful structural design, and different training modules can be interconnected. Through the three-dimensional structure, the power supply area of the chip module is saved and the distance between computing chips is reduced as much as possible.

A Dojo POD cabinet consists of two layers of computing trays and storage systems. Each tray holds 6 D1 training modules. A cabinet of 12 training modules across two layers can provide 108PFLOPS of deep learning computing power.
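The cabinet figure also follows from the per-module peak implied by numbers in this article (25 chips x 354 cores x 1.024TFLOPS). The sketch below again assumes linear scaling; the ExaPOD total is derived from the 120-module count given earlier and is an estimate rather than a figure stated in the source.

```python
MODULE_PFLOPS = 9.0624    # 25 chips x 354 cores x 1.024 TFLOPS, from figures in the article
MODULES_PER_CABINET = 12  # two trays of six modules
MODULES_PER_EXAPOD = 120

cabinet_pflops = MODULE_PFLOPS * MODULES_PER_CABINET
exapod_pflops = MODULE_PFLOPS * MODULES_PER_EXAPOD
cabinets_per_exapod = MODULES_PER_EXAPOD // MODULES_PER_CABINET

print(f"Cabinet peak:        {cabinet_pflops:.1f} PFLOPS")
print(f"Cabinets per ExaPOD: {cabinets_per_exapod}")
print(f"ExaPOD peak:         {exapod_pflops / 1000:.2f} EFLOPS")
```

The cabinet result matches the 108PFLOPS quoted above, and ten such cabinets land just over one exaFLOPS at this precision.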

## Dojo module and Dojo POD cabinet

3.3 Power management and heat dissipation control

The heat dissipation of supercomputing platforms has always been an important dimension to measure the level of supercomputing systems.

Thermal design power of D1 chip

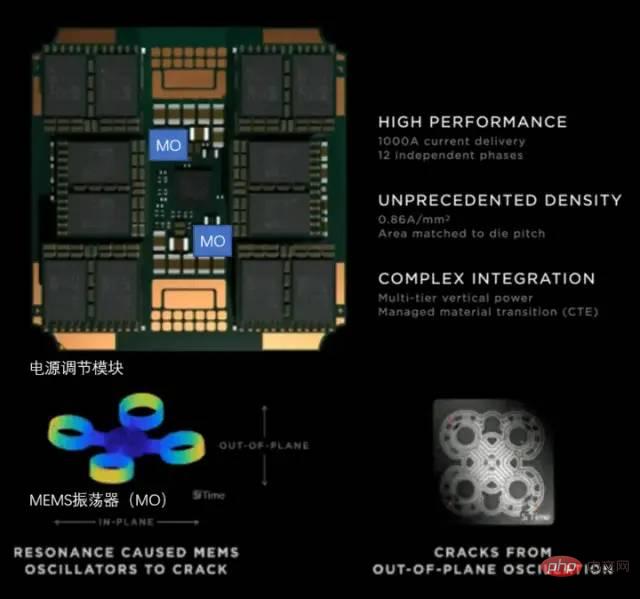

Tesla uses a fully self-developed VRM (Voltage Regulation Module) in the Dojo POD. A single VRM can provide 52V and a huge current of more than 1000A on a circuit smaller than a 25-cent coin. The target current density is 0.86A per square millimeter, with a total of 12 independent power supply phases.

Tesla’s power conditioning module

For heat dissipation in high-density chips, the focus is on controlling the coefficient of thermal expansion (CTE). The chip density of the Dojo system is extremely high; if the CTE gets even slightly out of control, it can cause structural deformation or failure, resulting in connection failures.

Tesla’s self-developed VRM has gone through 14 iterations in the past 2 years. It uses a MEMS oscillator (MO) to sense thermal deformation of the power regulation module, and it finally fully meets the internal CTE requirements. This approach of actively adjusting the power supply through MEMS technology is similar to the active control used to damp the vibration of a rocket body.

3.4 Compilation ecology of Dojo architecture processor

For AI chips such as D1, the compilation ecology is no less important than the chip itself.

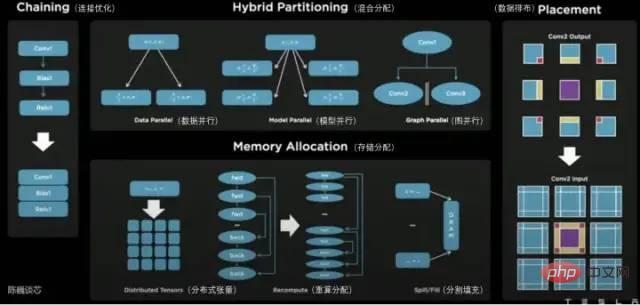

On the D1 processor plane, D1 is divided into matrix computing units. The compilation toolchain is responsible for partitioning tasks and configuring data storage, and it performs fine-grained parallel computing in several ways to reduce storage usage. The parallel methods supported by the Dojo compiler include data parallelism, model parallelism, and graph parallelism. Supported storage allocation methods include distributed tensors, recomputation-based allocation, and split-fill. The compiler itself can handle the dynamic control flow common on CPUs, including loops and graph optimization algorithms. With the Dojo compiler, users can treat a large-scale distributed Dojo system as a single accelerator for overall design and training. The top layer of the software ecosystem is based on PyTorch, the bottom layer on the Dojo driver, and the Dojo compiler and LLVM form the compilation layer in between. With LLVM in the stack, Tesla can make better use of the compilation ecosystem already built on LLVM for optimization.
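Of the parallel methods listed, data parallelism is the simplest to sketch. The toy example below is not the Dojo compiler's mechanism: it fits `y = w * x` with mean squared error, splits one batch into equal shards as if each went to a separate worker, and averages the per-shard gradients. The function names and the toy data are illustrative.

```python
def gradient(w, xs, ys):
    """Gradient of mean squared error for the model y = w * x over one batch."""
    n = len(xs)
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n

def data_parallel_gradient(w, xs, ys, workers):
    """Split the batch into equal shards, compute per-shard gradients, then average them."""
    assert len(xs) % workers == 0, "sketch assumes equal-sized shards"
    shard = len(xs) // workers
    grads = [gradient(w, xs[i:i + shard], ys[i:i + shard])
             for i in range(0, len(xs), shard)]
    return sum(grads) / workers

xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
ys = [2.0 * x for x in xs]  # toy data generated from y = 2x
w = 0.5
full = gradient(w, xs, ys)
parallel = data_parallel_gradient(w, xs, ys, workers=4)
print(full, parallel)
```

With equal-sized shards the averaged gradient equals the full-batch gradient, so each worker only needs its own slice of the data plus one reduction step; model and graph parallelism instead split the network itself.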

Tesla Dojo Compiler

Through Tesla AI Day, we saw the true form of the Tesla robot and learned more about its powerful "inner core".

Tesla’s Dojo core is different from previous CPU and GPU architectures. It can be described as a streamlined GPU that incorporates characteristics of a CPU, and I believe its compilation will also differ considerably from that of CPUs and GPUs. To increase computing density, Tesla has streamlined the design aggressively and provided an actively adjusted power management mechanism.

The Tesla Dojo architecture is not only named after a dojo; its design also starts from simplicity and the principle that less is more. Will this architecture become another typical form of computing chip architecture after the CPU and GPU? Let us wait and see.
