One GPU, 20 models per second! NVIDIA's new toy uses GET3D to create the universe

Abracadabra!

In the field of generating 3D models from 2D images, NVIDIA has unveiled research that it describes as "world-class": GET3D.

After training on 2D images, the model generates 3D shapes with high-fidelity textures and complex geometric details.

How powerful is it?

Shape, texture, material customization

GET3D takes its name from its ability to Generate Explicit Textured 3D meshes.

Paper address: https://arxiv.org/pdf/2209.11163.pdf

That is, each shape it creates is a triangle mesh, like a paper model, covered with a textured material.
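
To make the idea of an explicit textured mesh concrete, here is a minimal sketch of such a mesh written out as Wavefront OBJ text, a common interchange format for 3D tools. The `TexturedMesh` class and `to_obj` helper are hypothetical names for illustration and are not part of GET3D.

```python
from dataclasses import dataclass


@dataclass
class TexturedMesh:
    """Hypothetical container for an explicit textured triangle mesh."""
    vertices: list  # (x, y, z) positions
    uvs: list       # (u, v) texture coordinates, one per vertex
    faces: list     # triples of 0-based vertex indices


def to_obj(mesh: TexturedMesh) -> str:
    """Serialize the mesh as Wavefront OBJ text."""
    lines = []
    for x, y, z in mesh.vertices:
        lines.append(f"v {x} {y} {z}")
    for u, v in mesh.uvs:
        lines.append(f"vt {u} {v}")
    for a, b, c in mesh.faces:
        # OBJ indices are 1-based; each corner is written as vertex/uv.
        lines.append(f"f {a + 1}/{a + 1} {b + 1}/{b + 1} {c + 1}/{c + 1}")
    return "\n".join(lines) + "\n"


# A unit square made of two triangles, with UVs covering the whole texture.
quad = TexturedMesh(
    vertices=[(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)],
    uvs=[(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)],
    faces=[(0, 1, 2), (0, 2, 3)],
)
obj_text = to_obj(quad)
```

The texture image itself would be referenced from a separate material file, which this sketch leaves out.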

The key is that this model can generate a wide variety of high-quality models.

For example: wheels on chair legs; car wheels, lights and windows; animal ears and horns; motorcycle rearview mirrors; textures on car tires; high heels; human clothes...

Unique buildings on both sides of the street, different vehicles whizzing by, different groups of people passing by... But creating such a 3D virtual world through manual modeling is very time-consuming.

Although previous 3D generative AI models are faster than manual modeling, they still fall short at generating richly detailed models.

Even the latest inverse rendering methods can only generate 3D objects from 2D images taken from various angles, and developers can only build one 3D object at a time.

GET3D is different.

Developers can easily import generated models into game engines, 3D modelers, and movie renderers to edit them.

When creators export GET3D-generated models to graphics applications, they can apply realistic lighting effects as the model moves or rotates within the scene.

In addition, GET3D can also achieve text-guided shape generation.

Using StyleGAN-NADA, another AI tool from NVIDIA, developers can use text prompts to add a specific style to an image.

For example, you can turn a rendered car into a burned-out car or a taxi.

Or transform an ordinary house into a brick house, a burning house, or even a haunted house.

Or apply tiger-print or panda-print characteristics to any animal...

It’s like the Simpsons’ “Animal Crossing”...

NVIDIA says that when running inference on a single NVIDIA GPU, GET3D can generate approximately 20 objects per second.

The larger and more diverse the training dataset it learns from, the more diverse and detailed the output will be.

NVIDIA said that the research team used A100 GPUs to train the model on approximately 1 million images in just 2 days.

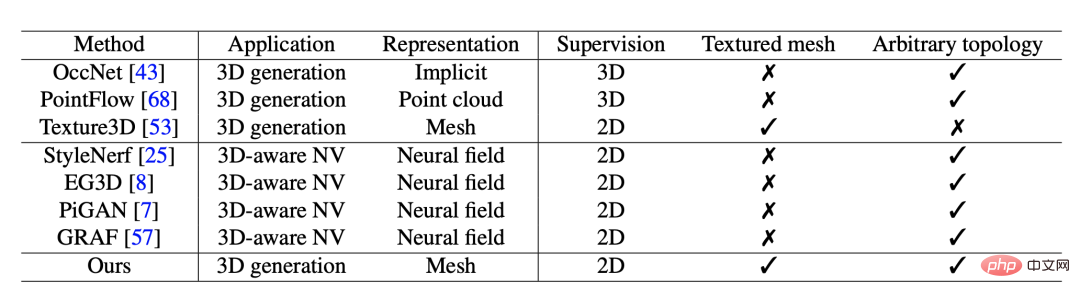

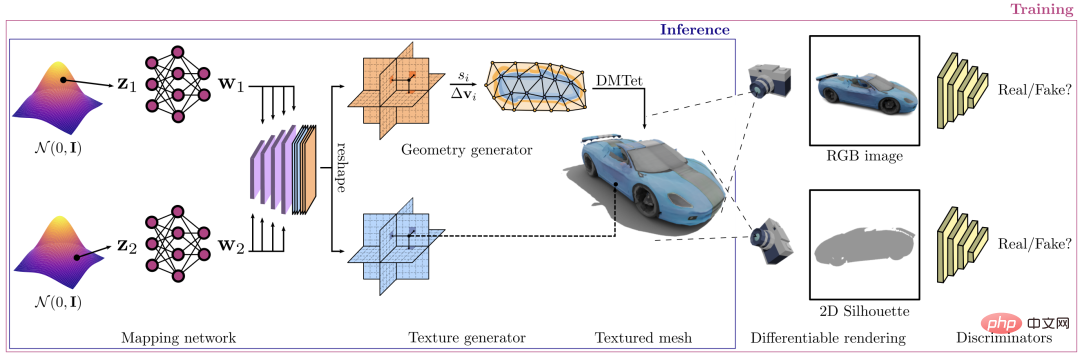

Research methods and processes

The main function of the GET3D framework is to synthesize textured 3D shapes.

The generation process is divided into two parts. The first is the geometry branch, which can output surface meshes of arbitrary topology. The second is the texture branch, which produces a texture field that can be queried at surface points.
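
The idea of querying a texture field at surface points can be sketched as follows. This is only an illustration under stated assumptions: in GET3D both branches are learned networks, whereas here the mesh is a fixed square and `texture_field` is a hand-written stand-in function. The names `sample_surface_points` and `texture_field` are hypothetical.

```python
import numpy as np


def sample_surface_points(vertices, faces, n_per_face, rng):
    """Sample points uniformly inside each triangle via barycentric weights."""
    tri = vertices[faces]  # shape (F, 3, 3): three corners per face
    r1 = np.sqrt(rng.random((len(faces), n_per_face, 1)))
    r2 = rng.random((len(faces), n_per_face, 1))
    w0, w1, w2 = 1.0 - r1, r1 * (1.0 - r2), r1 * r2  # weights sum to 1
    pts = w0 * tri[:, None, 0] + w1 * tri[:, None, 1] + w2 * tri[:, None, 2]
    return pts.reshape(-1, 3)


def texture_field(points):
    """Stand-in texture field: maps 3D positions to RGB values in [0, 1]."""
    return 0.5 + 0.5 * np.sin(2.0 * np.pi * points)


# "Geometry branch" output, replaced here by a fixed unit square at z = 0.
vertices = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0],
                     [1.0, 1.0, 0.0], [0.0, 1.0, 0.0]])
faces = np.array([[0, 1, 2], [0, 2, 3]])

rng = np.random.default_rng(0)
points = sample_surface_points(vertices, faces, n_per_face=5, rng=rng)
colors = texture_field(points)  # one RGB color per surface point
```

Because the field is defined over 3D positions rather than over a fixed image, it can be evaluated on whatever surface the geometry side produces.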

During training, a differentiable rasterizer is used to efficiently render the resulting textured mesh into high-resolution 2D images. The entire process is differentiable, allowing adversarial training from images.

The gradients are propagated from the 2D discriminator back to the two generator branches.
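
In generic GAN notation, this training setup can be sketched as below. This is an illustrative formulation and not necessarily the exact loss used in the paper. The symbols are assumptions: $G_{\text{geo}}$ and $G_{\text{tex}}$ are the two generator branches with parameters $\theta_{\text{geo}}$ and $\theta_{\text{tex}}$, $M$ is the mesh, $T$ is the texture field, $R$ is the differentiable rasterizer, $c$ is a camera pose, and $D$ is the 2D discriminator.

```latex
% Rendered image from the two branches
I = R(M, T, c), \qquad M = G_{\text{geo}}(z_{\text{geo}}), \qquad T = G_{\text{tex}}(z_{\text{tex}})

% Generic adversarial objective on 2D images
\min_{G_{\text{geo}},\, G_{\text{tex}}} \; \max_{D} \;
\mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
+ \mathbb{E}_{z_{\text{geo}},\, z_{\text{tex}},\, c}\big[\log\big(1 - D(I)\big)\big]

% Because R is differentiable, the chain rule reaches both branches
\frac{\partial \mathcal{L}}{\partial \theta_{\text{geo}}}
= \frac{\partial \mathcal{L}}{\partial I}\,
  \frac{\partial I}{\partial M}\,
  \frac{\partial M}{\partial \theta_{\text{geo}}},
\qquad
\frac{\partial \mathcal{L}}{\partial \theta_{\text{tex}}}
= \frac{\partial \mathcal{L}}{\partial I}\,
  \frac{\partial I}{\partial T}\,
  \frac{\partial T}{\partial \theta_{\text{tex}}}
```

The terms $\partial I / \partial M$ and $\partial I / \partial T$ exist only because the rasterizer is differentiable, which is why supervision from 2D images alone can shape 3D geometry and texture.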

The researchers conducted extensive experiments to evaluate the model. They first compared the quality of the textured 3D meshes generated by GET3D against existing methods, using the ShapeNet and TurboSquid datasets.

Next, the researchers optimized the model in subsequent studies based on the comparison results and conducted more experiments.

GET3D disentangles geometry from texture.

In the figure, each row shows shapes generated from the same geometry latent code while the texture code changes.

Each column shows shapes generated from the same texture latent code while the geometry code changes.
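
The row and column layout described above can be sketched with two independent sets of latent codes. This is a toy illustration: `toy_generator` is a hypothetical stand-in that only records which codes it received, and the latent dimension is arbitrary and not taken from the paper.

```python
import numpy as np

rng = np.random.default_rng(0)
latent_dim = 8          # illustrative size only
n_rows, n_cols = 3, 4

# One geometry code per row, one texture code per column.
geo_codes = rng.standard_normal((n_rows, latent_dim))
tex_codes = rng.standard_normal((n_cols, latent_dim))


def toy_generator(z_geo, z_tex):
    """Stand-in for a generator with separate geometry and texture inputs."""
    return {"shape_id": float(z_geo.sum()), "texture_id": float(z_tex.sum())}


# Every cell pairs its row's geometry code with its column's texture code.
grid = [[toy_generator(g, t) for t in tex_codes] for g in geo_codes]
```

If the two factors are well separated, cells in one row should share a shape and cells in one column should share a texture, which is what the figure is meant to show.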

In addition, the researchers interpolated the geometry latent code from left to right within each row, where the shapes share the same texture latent code, and interpolated the texture code from top to bottom, where the shapes share the same geometry latent code. The results show that each interpolation is meaningful to the generated model.

Within each subfigure, GET3D is able to generate smooth transitions between different shapes in all categories.

In each row, the latent code is locally perturbed by adding a small amount of noise. In this way, GET3D is able to generate shapes that look similar but differ slightly in local details.

The researchers note that future versions of GET3D could use camera pose estimation technology to let developers train models on real-world data rather than synthetic datasets.

In the future, with further improvements, developers could train GET3D on a variety of 3D shapes in one go, rather than needing to train it on one object category at a time.

Sanja Fidler, vice president of artificial intelligence research at NVIDIA, said that GET3D brings us one step closer to democratizing AI-driven 3D content creation. Its ability to generate textured 3D shapes on the fly could be a game-changer for developers, helping them quickly populate virtual worlds with a variety of interesting objects.

Introduction to the author

The first author of the paper, Jun Gao, is a doctoral student in the machine learning group at the University of Toronto, where his supervisor is Sanja Fidler.

In addition to his academic work, he is a research scientist at the NVIDIA Toronto Artificial Intelligence Laboratory.

His research mainly focuses on deep learning (DL), with the goal of structured geometric representation learning. His research also draws insights from human perception of 2D and 3D images and videos.

He graduated from Peking University with a bachelor's degree in 2018. While at Peking University, he worked with Professor Wang Liwei.

After graduation, he interned at Stanford University, MSRA and NVIDIA.

Jun Gao's supervisor is also a leader in the field. Fidler is an associate professor at the University of Toronto and a faculty member at the Vector Institute, of which she is a co-founding member.

In addition to teaching, she is vice president of artificial intelligence research at NVIDIA, where she leads a research laboratory in Toronto.

Before coming to Toronto, she was a research assistant professor at the Toyota Technological Institute at Chicago, an academic institution located on the campus of the University of Chicago.

Fidler's research focuses on computer vision (CV) and machine learning (ML), particularly the intersection of CV and graphics, 3D vision, 3D reconstruction and synthesis, and interactive methods for image annotation.

The above is the detailed content of One GPU, 20 models per second! NVIDIA's new toy uses GET3D to create the universe. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1386

1386

52

52

Beelink EX graphics card expansion dock promises zero GPU performance loss

Aug 11, 2024 pm 09:55 PM

Beelink EX graphics card expansion dock promises zero GPU performance loss

Aug 11, 2024 pm 09:55 PM

One of the standout features of the recently launched Beelink GTi 14is that the mini PC has a hidden PCIe x8 slot underneath. At launch, the company said that this would make it easier to connect an external graphics card to the system. Beelink has n

KAN, which replaces MLP, has been extended to convolution by open source projects

Jun 01, 2024 pm 10:03 PM

KAN, which replaces MLP, has been extended to convolution by open source projects

Jun 01, 2024 pm 10:03 PM

Earlier this month, researchers from MIT and other institutions proposed a very promising alternative to MLP - KAN. KAN outperforms MLP in terms of accuracy and interpretability. And it can outperform MLP running with a larger number of parameters with a very small number of parameters. For example, the authors stated that they used KAN to reproduce DeepMind's results with a smaller network and a higher degree of automation. Specifically, DeepMind's MLP has about 300,000 parameters, while KAN only has about 200 parameters. KAN has a strong mathematical foundation like MLP. MLP is based on the universal approximation theorem, while KAN is based on the Kolmogorov-Arnold representation theorem. As shown in the figure below, KAN has

NVIDIA dialogue model ChatQA has evolved to version 2.0, with the context length mentioned at 128K

Jul 26, 2024 am 08:40 AM

NVIDIA dialogue model ChatQA has evolved to version 2.0, with the context length mentioned at 128K

Jul 26, 2024 am 08:40 AM

The open LLM community is an era when a hundred flowers bloom and compete. You can see Llama-3-70B-Instruct, QWen2-72B-Instruct, Nemotron-4-340B-Instruct, Mixtral-8x22BInstruct-v0.1 and many other excellent performers. Model. However, compared with proprietary large models represented by GPT-4-Turbo, open models still have significant gaps in many fields. In addition to general models, some open models that specialize in key areas have been developed, such as DeepSeek-Coder-V2 for programming and mathematics, and InternVL for visual-language tasks.

AMD FSR 3.1 launched: frame generation feature also works on Nvidia GeForce RTX and Intel Arc GPUs

Jun 29, 2024 am 06:57 AM

AMD FSR 3.1 launched: frame generation feature also works on Nvidia GeForce RTX and Intel Arc GPUs

Jun 29, 2024 am 06:57 AM

AMD delivers on its initial March ‘24 promise to launch FSR 3.1 in Q2 this year. What really sets the 3.1 release apart is the decoupling of the frame generation side from the upscaling one. This allows Nvidia and Intel GPU owners to apply the FSR 3.

'AI Factory” will promote the reshaping of the entire software stack, and NVIDIA provides Llama3 NIM containers for users to deploy

Jun 08, 2024 pm 07:25 PM

'AI Factory” will promote the reshaping of the entire software stack, and NVIDIA provides Llama3 NIM containers for users to deploy

Jun 08, 2024 pm 07:25 PM

According to news from this site on June 2, at the ongoing Huang Renxun 2024 Taipei Computex keynote speech, Huang Renxun introduced that generative artificial intelligence will promote the reshaping of the full stack of software and demonstrated its NIM (Nvidia Inference Microservices) cloud-native microservices. Nvidia believes that the "AI factory" will set off a new industrial revolution: taking the software industry pioneered by Microsoft as an example, Huang Renxun believes that generative artificial intelligence will promote its full-stack reshaping. To facilitate the deployment of AI services by enterprises of all sizes, NVIDIA launched NIM (Nvidia Inference Microservices) cloud-native microservices in March this year. NIM+ is a suite of cloud-native microservices optimized to reduce time to market

Comprehensively surpassing DPO: Chen Danqi's team proposed simple preference optimization SimPO, and also refined the strongest 8B open source model

Jun 01, 2024 pm 04:41 PM

Comprehensively surpassing DPO: Chen Danqi's team proposed simple preference optimization SimPO, and also refined the strongest 8B open source model

Jun 01, 2024 pm 04:41 PM

In order to align large language models (LLMs) with human values and intentions, it is critical to learn human feedback to ensure that they are useful, honest, and harmless. In terms of aligning LLM, an effective method is reinforcement learning based on human feedback (RLHF). Although the results of the RLHF method are excellent, there are some optimization challenges involved. This involves training a reward model and then optimizing a policy model to maximize that reward. Recently, some researchers have explored simpler offline algorithms, one of which is direct preference optimization (DPO). DPO learns the policy model directly based on preference data by parameterizing the reward function in RLHF, thus eliminating the need for an explicit reward model. This method is simple and stable

No OpenAI data required, join the list of large code models! UIUC releases StarCoder-15B-Instruct

Jun 13, 2024 pm 01:59 PM

No OpenAI data required, join the list of large code models! UIUC releases StarCoder-15B-Instruct

Jun 13, 2024 pm 01:59 PM

At the forefront of software technology, UIUC Zhang Lingming's group, together with researchers from the BigCode organization, recently announced the StarCoder2-15B-Instruct large code model. This innovative achievement achieved a significant breakthrough in code generation tasks, successfully surpassing CodeLlama-70B-Instruct and reaching the top of the code generation performance list. The unique feature of StarCoder2-15B-Instruct is its pure self-alignment strategy. The entire training process is open, transparent, and completely autonomous and controllable. The model generates thousands of instructions via StarCoder2-15B in response to fine-tuning the StarCoder-15B base model without relying on expensive manual annotation.

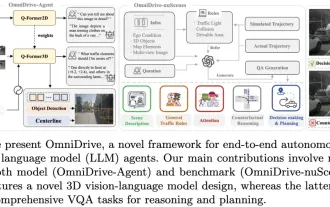

LLM is all done! OmniDrive: Integrating 3D perception and reasoning planning (NVIDIA's latest)

May 09, 2024 pm 04:55 PM

LLM is all done! OmniDrive: Integrating 3D perception and reasoning planning (NVIDIA's latest)

May 09, 2024 pm 04:55 PM

Written above & the author’s personal understanding: This paper is dedicated to solving the key challenges of current multi-modal large language models (MLLMs) in autonomous driving applications, that is, the problem of extending MLLMs from 2D understanding to 3D space. This expansion is particularly important as autonomous vehicles (AVs) need to make accurate decisions about 3D environments. 3D spatial understanding is critical for AVs because it directly impacts the vehicle’s ability to make informed decisions, predict future states, and interact safely with the environment. Current multi-modal large language models (such as LLaVA-1.5) can often only handle lower resolution image inputs (e.g.) due to resolution limitations of the visual encoder, limitations of LLM sequence length. However, autonomous driving applications require