Surpassing SOTA by 3.27%, Shanghai Jiao Tong University and others proposed a new method of adaptive local aggregation

This article introduces a paper accepted at AAAI 2023. The paper was completed jointly by the Shanghai Key Laboratory of Scalable Computing and Systems at Shanghai Jiao Tong University, Yang Hua of Queen's University Belfast, and Hao Wang of Louisiana State University.

- Paper link: https://arxiv.org/abs/2212.01197

- Code link (including instructions for using the ALA module): https://github.com/TsingZ0/FedALA

This paper proposes an adaptive local aggregation method for federated learning that tackles statistical heterogeneity by automatically capturing, from the global model, the information each client needs. Compared with 11 SOTA methods, it outperforms the best baseline by up to 3.27%. When the authors applied the adaptive local aggregation module to other federated learning methods, it improved them by up to 24.19%.

1 Introduction

Federated learning (FL) keeps users' private data on their own devices instead of sharing it, so that participants can learn from one another and uncover the value in user data while privacy is protected. However, because clients cannot see each other's data, statistical heterogeneity (non-independent and identically distributed (non-IID) data and imbalanced data volumes) has become one of the major challenges in FL. It makes it difficult for traditional FL methods such as FedAvg to train, through the FL process, a single global model that suits every client.

In recent years, personalized federated learning (pFL) methods have received increasing attention for their ability to cope with statistical heterogeneity. Unlike traditional FL, which seeks a single high-quality global model, pFL aims to use the collaborative computing power of federated learning to train a personalized model for each client. Based on how models are aggregated on the server, existing pFL methods fall into three categories:

(1) Methods to learn a single global model and fine-tune it, including Per-FedAvg and FedRep;

(2) Methods for learning additional personalization models, including pFedMe and Ditto;

(3) Methods that learn local models through personalized (or local) aggregation, including FedAMP, FedPHP, FedFomo, APPLE and PartialFed.

The pFL methods in categories (1) and (2) use all of the information in the global model for local initialization (i.e., initializing the local model before local training in each iteration). However, only the part of the global model that improves the local model's quality (the information the client needs to meet its local training objective) actually benefits the client. Because the global model contains both information a given client needs and information it does not, it generalizes poorly to individual clients. Researchers therefore proposed the category (3) methods, which use personalized aggregation to capture the information each client needs from the global model. These methods still have shortcomings: (a) they ignore the client's local training objective (e.g., FedAMP and FedPHP); (b) they incur high computation and communication costs (e.g., FedFomo and APPLE); (c) they risk privacy leakage (e.g., FedFomo and APPLE); and (d) their personalized aggregation does not match the local training objective (e.g., PartialFed). Moreover, because these methods substantially modify the FL process, their personalized aggregation cannot be applied directly to most existing FL methods.

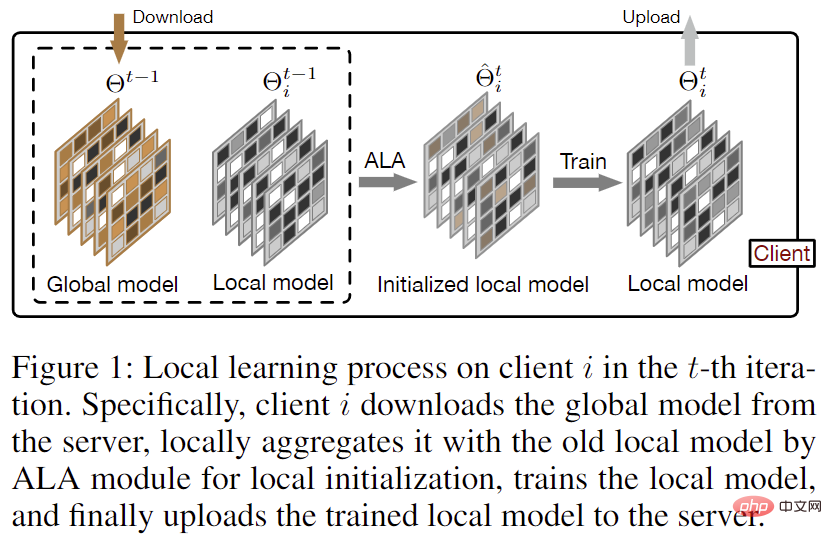

To capture precisely the information a client needs from the global model, without increasing the per-iteration communication cost relative to FedAvg, the author proposes an adaptive local aggregation method for federated learning (FedALA). As shown in Figure 1, before each round of local training FedALA aggregates the global model with the local model through the adaptive local aggregation (ALA) module, thereby capturing the needed information in the global model. Compared with FedAvg, FedALA only uses ALA to modify the local model initialization in each iteration and leaves the rest of the FL process unchanged, so ALA can be applied directly to most existing FL methods to improve their personalization performance.
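To make the "only the initialization changes" point concrete, here is a minimal sketch of one client iteration in which the initialization step is a pluggable function. It assumes a toy model flattened into a list of floats and uses fixed aggregation weights; the names `fedavg_init`, `make_ala_init`, `client_round` and `identity_train` are hypothetical and are not taken from the FedALA repository.

```python
from typing import Callable, List

Params = List[float]  # toy assumption: the whole model flattened into one list of floats
InitFn = Callable[[Params, Params], Params]


def fedavg_init(local: Params, global_model: Params) -> Params:
    # FedAvg-style initialization: the downloaded global model overwrites the local model.
    return list(global_model)


def make_ala_init(weights: Params) -> InitFn:
    # ALA-style initialization with *fixed* element-wise weights in [0, 1];
    # how the weights are learned is left out of this sketch.
    def ala_init(local: Params, global_model: Params) -> Params:
        return [loc + (glob - loc) * w for loc, glob, w in zip(local, global_model, weights)]
    return ala_init


def client_round(local: Params, global_model: Params, init_fn: InitFn,
                 local_train: Callable[[Params], Params]) -> Params:
    # Only the initialization differs; local training (and upload) stay the same.
    start = init_fn(local, global_model)
    return local_train(start)


def identity_train(params: Params) -> Params:
    # Placeholder for local training so the example runs without data.
    return list(params)


if __name__ == "__main__":
    local, global_model = [0.0, 2.0], [1.0, 4.0]
    print(client_round(local, global_model, fedavg_init, identity_train))                # [1.0, 4.0]
    print(client_round(local, global_model, make_ala_init([0.5, 0.0]), identity_train))  # [0.5, 2.0]
```

With all weights set to 1 the ALA initialization reduces to FedAvg's overwrite, and with all weights set to 0 it keeps the old local model unchanged.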

Figure 1: Local learning process on a client in one iteration

2 Method

2.1 Adaptive Local Aggregation (ALA)

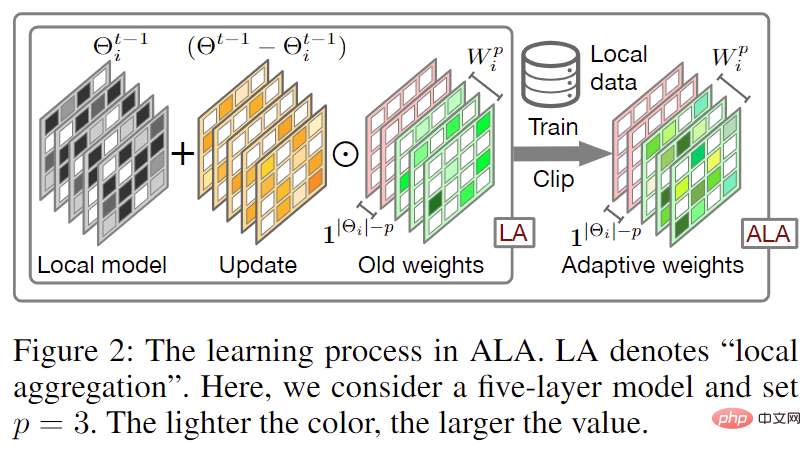

Figure 2: Adaptive Local Aggregation (ALA) process

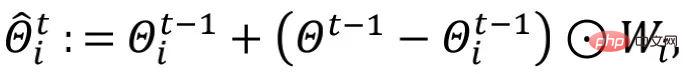

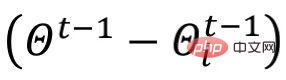

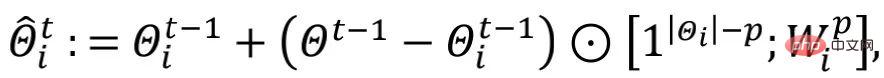

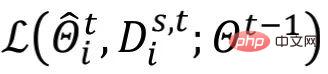

The adaptive local aggregation (ALA) process is shown in Figure 2. In traditional federated learning, the downloaded global model directly overwrites the local model to produce the local initialization model (i.e., $\hat{\Theta}_i^t := \Theta^{t-1}$). FedALA instead performs adaptive local aggregation by learning a local aggregation weight for each parameter.

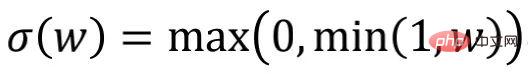

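Specifically, the local initialization model of client $i$ in iteration $t$ is

$$\hat{\Theta}_i^t := \Theta_i^{t-1} + (\Theta^{t-1} - \Theta_i^{t-1}) \odot W_i,$$

where $W_i$ holds the local aggregation weights, $\odot$ is the element-wise (Hadamard) product, and the author regards $(\Theta^{t-1} - \Theta_i^{t-1})$ as the "update". In addition, the author regularizes $W_i$ with element-wise weight clipping, $\sigma(w) = \max(0, \min(1, w))$, which limits the values in $W_i$ to [0,1].

Because the lower layers of a neural network tend to learn more general information than the higher layers, ALA is applied only to the p higher layers:

$$\hat{\Theta}_i^t := \Theta_i^{t-1} + (\Theta^{t-1} - \Theta_i^{t-1}) \odot [\mathbf{1}^{|\Theta_i|-p}; W_i^p],$$

where $|\Theta_i|$ represents the number of neural network layers (or blocks) in $\Theta_i^{t-1}$, $\mathbf{1}^{|\Theta_i|-p}$ has the same shape as the lower layers of $\Theta_i^{t-1}$ (so these layers simply take the global parameters), and $W_i^p$ has the same shape as the remaining p higher layers.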
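The layer-wise formula can be sketched as follows. This is a minimal illustration, assuming each layer is flattened into a list of floats; `ala_local_init` and `clip01` are hypothetical names, and the weights are taken as given rather than learned.

```python
from typing import List

Layer = List[float]  # toy assumption: each layer flattened into a list of floats
Model = List[Layer]


def clip01(w: float) -> float:
    # Element-wise weight clipping: sigma(w) = max(0, min(1, w)).
    return max(0.0, min(1.0, w))


def ala_local_init(local: Model, global_model: Model, weights_p: Model, p: int) -> Model:
    """Theta_i + (Theta - Theta_i) * [1^{|Theta_i|-p}; W_i^p], computed element-wise.

    The lowest len(local) - p layers get weight 1, i.e. they copy the global layer;
    only the top p layers are mixed using the (clipped) weights in weights_p.
    """
    num_layers = len(local)
    if not 0 <= p <= num_layers or len(weights_p) != p:
        raise ValueError("need 0 <= p <= number of layers and one weight layer per top layer")
    first_top = num_layers - p
    init: Model = []
    for idx, (loc_layer, glob_layer) in enumerate(zip(local, global_model)):
        if idx < first_top:
            w_layer = [1.0] * len(loc_layer)
        else:
            w_layer = [clip01(w) for w in weights_p[idx - first_top]]
        init.append([loc + (glob - loc) * w
                     for loc, glob, w in zip(loc_layer, glob_layer, w_layer)])
    return init


if __name__ == "__main__":
    local = [[0.0, 0.0], [1.0, 1.0]]
    global_model = [[2.0, 2.0], [3.0, 5.0]]
    # p = 1: only the top layer uses learned weights; 1.5 is clipped to 1.0.
    print(ala_local_init(local, global_model, [[0.5, 1.5]], p=1))  # [[2.0, 2.0], [2.0, 5.0]]
```

Setting p = 0 turns the sketch into plain overwriting with the global model, which is why a small p keeps the number of weights to learn small.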

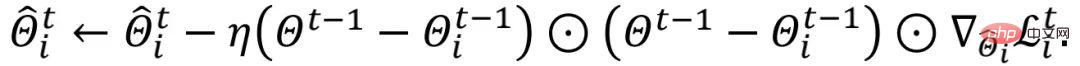

The author initializes all values in $W_i^p$ to 1 and, in each round of local initialization, updates $W_i^p$ starting from its old value. To further reduce the computational cost, the author randomly samples s% of the local training data $D_i$ in iteration $t$, denoted $D_i^{s,t}$, and updates $W_i^p$ by gradient descent:

$$W_i^p \leftarrow W_i^p - \eta \nabla_{W_i^p} \mathcal{L}(\hat{\Theta}_i^t, D_i^{s,t}; \Theta^{t-1}),$$

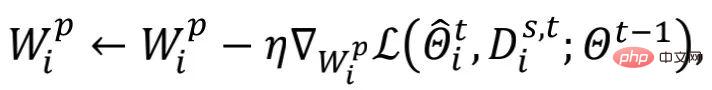

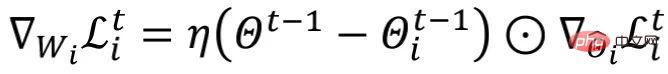

where $\eta$ is the learning rate for updating $W_i^p$. While learning $W_i^p$, the author freezes all trainable parameters other than $W_i^p$.
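The weight-learning step can be illustrated on a toy problem. This sketch assumes a two-parameter linear model $\hat{y} = a x + b$ with mean squared error, so the gradient can be written by hand; all function names are hypothetical. The model parameters are never modified (they act as frozen), only the weights change, and the gradient with respect to the weights follows from the chain rule as $(\Theta - \Theta_i) \odot \nabla_{\hat{\Theta}}\mathcal{L}$, followed by clipping to [0,1].

```python
import random
from typing import List, Sequence, Tuple

Data = List[Tuple[float, float]]  # (x, y) pairs


def mse_grad(theta: Sequence[float], batch: Data) -> List[float]:
    # Gradient of the mean squared error of the toy model y_hat = a * x + b, theta = [a, b].
    a, b = theta
    n = len(batch)
    grad_a = sum(2.0 * (a * x + b - y) * x for x, y in batch) / n
    grad_b = sum(2.0 * (a * x + b - y) for x, y in batch) / n
    return [grad_a, grad_b]


def mix(local: Sequence[float], global_model: Sequence[float], w: Sequence[float]) -> List[float]:
    # Theta_hat = Theta_i + (Theta - Theta_i) * W, element-wise.
    return [loc + (glob - loc) * wi for loc, glob, wi in zip(local, global_model, w)]


def learn_ala_weights(local: Sequence[float], global_model: Sequence[float],
                      weights: Sequence[float], data: Data, s_percent: float,
                      eta: float, steps: int, seed: int = 0):
    """Learn element-wise aggregation weights W on a random s% sample of local data.

    local and global_model are only read (frozen); only W is updated.
    Chain rule: dL/dW = (Theta - Theta_i) * dL/dTheta_hat.
    """
    rng = random.Random(seed)
    k = max(1, int(len(data) * s_percent / 100))
    sample = rng.sample(data, k)  # plays the role of D_i^{s,t}
    w = list(weights)
    for _ in range(steps):
        grad_hat = mse_grad(mix(local, global_model, w), sample)
        w = [max(0.0, min(1.0, wi - eta * (glob - loc) * g))  # gradient step, then clipping
             for wi, loc, glob, g in zip(w, local, global_model, grad_hat)]
    return w, mix(local, global_model, w)


if __name__ == "__main__":
    # The client's data follow y = 2x + 1. The local model has the right slope and
    # the global model has the right bias, so W should move toward [0, 1].
    data = [(float(x), 2.0 * x + 1.0) for x in range(10)]
    w, theta_hat = learn_ala_weights([2.0, 0.0], [0.0, 1.0], [1.0, 1.0], data,
                                     s_percent=50, eta=0.001, steps=200)
    print([round(v, 3) for v in w], [round(v, 3) for v in theta_hat])  # [0.0, 1.0] [2.0, 1.0]
```

In this toy case the learned weights keep the slope from the local model and take the bias from the global model, which is the kind of per-parameter selection ALA is meant to perform.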

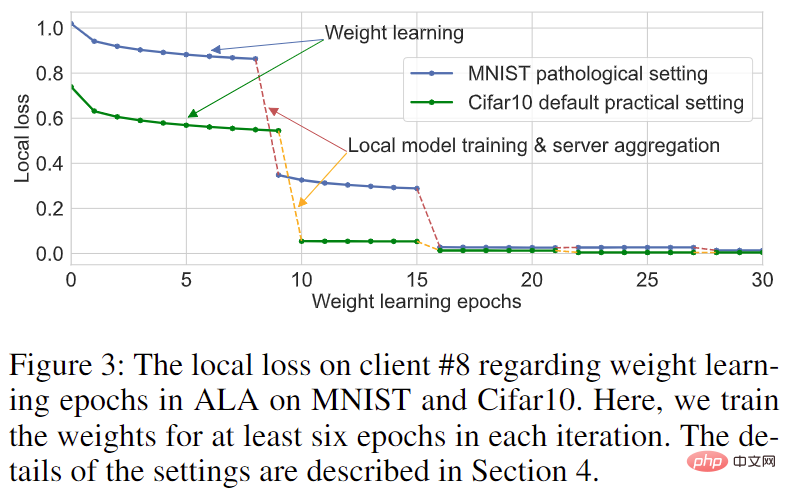

Figure 3: Learning curve of client 8 on MNIST and Cifar10 datasets

By choosing a small p, the number of parameters that ALA must train can be greatly reduced without hurting FedALA's performance. Furthermore, as shown in Figure 3, the author observed that once $W_i^p$ has been trained to convergence in the first iteration, continuing to train it in subsequent iterations has little effect on local model quality. In other words, each client can reuse its old $W_i^p$ to capture the information it needs. The author therefore only fine-tunes $W_i^p$ in subsequent iterations to reduce the computational cost.
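The "train to convergence once, then only fine-tune" schedule can be sketched as below. The convergence test (stop when the loss changes by less than `tol`) and the hook `step_fn`, which performs one update of the weights and returns the current loss, are assumptions made for illustration; the paper's own stopping rule may differ.

```python
from typing import Callable


def run_weight_learning(step_fn: Callable[[], float], first_iteration: bool,
                        tol: float = 1e-4, max_steps: int = 1000,
                        finetune_steps: int = 1) -> int:
    """Run weight-learning steps and return how many were performed.

    First iteration: keep stepping until the loss changes by less than tol
    (a hypothetical convergence test) or max_steps is reached.
    Later iterations: reuse the old weights and run only finetune_steps steps.
    """
    if not first_iteration:
        for _ in range(finetune_steps):
            step_fn()
        return finetune_steps
    prev = float("inf")
    for step in range(1, max_steps + 1):
        loss = step_fn()
        if abs(prev - loss) < tol:
            return step
        prev = loss
    return max_steps


if __name__ == "__main__":
    state = {"loss": 2.0}

    def halving_step() -> float:
        # Stand-in for one update of W whose loss halves every step.
        state["loss"] /= 2.0
        return state["loss"]

    print(run_weight_learning(halving_step, first_iteration=True))   # 15
    print(run_weight_learning(halving_step, first_iteration=False))  # 1
```

The saving comes from the second branch: after the first iteration, each client pays for only a few weight updates instead of a full convergence run.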

2.2 ALA Analysis

Without loss of generality, and for simplicity, the author ignores $\sigma(\cdot)$ and assumes $p = |\Theta_i|$. From the update rule above, one obtains

$$\hat{\Theta}_i^t \leftarrow \hat{\Theta}_i^t - \eta\,(\Theta^{t-1} - \Theta_i^{t-1})^2 \odot \nabla_{\hat{\Theta}_i^t}\mathcal{L},$$

where $(\Theta^{t-1} - \Theta_i^{t-1})^2$ represents $(\Theta^{t-1} - \Theta_i^{t-1}) \odot (\Theta^{t-1} - \Theta_i^{t-1})$. Updating $W_i$ in ALA can therefore be viewed as updating $\hat{\Theta}_i^t$.
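The step from updating $W_i$ to updating $\hat{\Theta}_i^t$ follows from the chain rule. A short derivation under the same simplifications ($\sigma(\cdot)$ ignored, $p = |\Theta_i|$ so that $W_i^p = W_i$), using $\Delta$ as a shorthand introduced here:

$$
\begin{aligned}
\Delta &:= \Theta^{t-1} - \Theta_i^{t-1}, \qquad \hat{\Theta}_i^t = \Theta_i^{t-1} + \Delta \odot W_i,\\
\nabla_{W_i}\mathcal{L} &= \Delta \odot \nabla_{\hat{\Theta}_i^t}\mathcal{L} \quad \text{(each element of } \hat{\Theta}_i^t \text{ depends only on the matching element of } W_i\text{)},\\
W_i' &= W_i - \eta\, \Delta \odot \nabla_{\hat{\Theta}_i^t}\mathcal{L},\\
\Theta_i^{t-1} + \Delta \odot W_i' &= \hat{\Theta}_i^t - \eta\, \Delta \odot \Delta \odot \nabla_{\hat{\Theta}_i^t}\mathcal{L} = \hat{\Theta}_i^t - \eta\,(\Theta^{t-1} - \Theta_i^{t-1})^2 \odot \nabla_{\hat{\Theta}_i^t}\mathcal{L}.
\end{aligned}
$$

Wherever an element of the global model equals the corresponding element of the local model, $\Delta$ is zero there and that element of $\hat{\Theta}_i^t$ does not move.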

In each iteration, the gradient term $\nabla_{\hat{\Theta}_i^t}\mathcal{L}$ is scaled element-wise by $(\Theta^{t-1} - \Theta_i^{t-1})^2$. Unlike local model training (or fine-tuning), this update of $\hat{\Theta}_i^t$ can perceive the generic information in the global model. Across iterations, the dynamically changing $(\Theta^{t-1} - \Theta_i^{t-1})^2$ introduces dynamic information into the ALA module, which makes it easy for FedALA to adapt to complex environments.
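The equivalence between a step on the weights and an element-wise scaled step on $\hat{\Theta}_i^t$ can be checked numerically. This is a minimal sketch with hypothetical function names, a hand-picked gradient vector, and clipping ignored as in the analysis.

```python
from typing import List, Sequence


def mix(local: Sequence[float], global_model: Sequence[float], w: Sequence[float]) -> List[float]:
    # Theta_hat = Theta_i + (Theta - Theta_i) * W (clipping ignored, as in the analysis).
    return [loc + (glob - loc) * wi for loc, glob, wi in zip(local, global_model, w)]


def step_on_weights(local, global_model, w, grad_hat, eta) -> List[float]:
    # One gradient step on W via dL/dW = (Theta - Theta_i) * dL/dTheta_hat, then rebuild Theta_hat.
    new_w = [wi - eta * (glob - loc) * g
             for wi, loc, glob, g in zip(w, local, global_model, grad_hat)]
    return mix(local, global_model, new_w)


def scaled_step_on_theta_hat(local, global_model, w, grad_hat, eta) -> List[float]:
    # The equivalent direct update: Theta_hat - eta * (Theta - Theta_i)^2 * dL/dTheta_hat.
    theta_hat = mix(local, global_model, w)
    return [t - eta * (glob - loc) ** 2 * g
            for t, loc, glob, g in zip(theta_hat, local, global_model, grad_hat)]


if __name__ == "__main__":
    local, global_model = [1.0, 0.0, 3.0], [1.0, 2.0, 0.0]
    w, grad_hat = [0.5, 0.5, 0.5], [1.0, 1.0, 1.0]
    print([round(v, 6) for v in step_on_weights(local, global_model, w, grad_hat, 0.1)])           # [1.0, 0.6, 0.6]
    print([round(v, 6) for v in scaled_step_on_theta_hat(local, global_model, w, grad_hat, 0.1)])  # [1.0, 0.6, 0.6]
```

The first element, where the local and global models agree, stays fixed despite a nonzero gradient, while the third element, with the largest local/global gap, receives the strongest scaling.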

3 Experiment

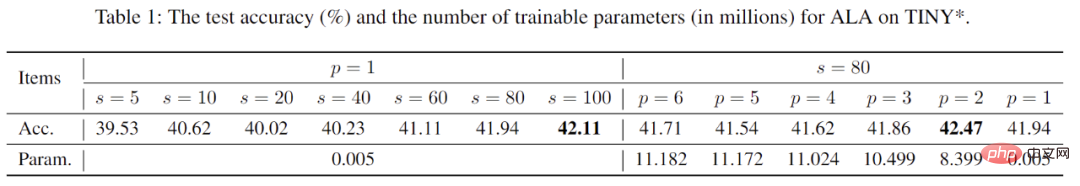

Using ResNet-18 on the Tiny-ImageNet dataset in the practical data-heterogeneous setting, the author studied how the hyperparameters s and p affect FedALA; the results are shown in Table 1. For s, using more randomly sampled local training data to learn the ALA module improves the personalized model, but also increases the computational cost, so s can be adjusted to each client's computing power. As the table shows, FedALA still performs very well even with an extremely small s (e.g., s=5). For p, different values have almost no effect on the personalized model's performance, but their computational costs differ greatly. This partly explains the effectiveness of methods such as FedRep, which split the model and keep the layers close to the output on the client instead of uploading them to the server. When using ALA, a small but suitable p can be chosen to further reduce computation while preserving the personalized model's performance.
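The cost side of this trade-off is simple arithmetic: ALA learns one weight per parameter in the top p layers and runs on s% of the local data. The sketch below computes both quantities; the per-layer parameter counts are hypothetical and do not correspond to ResNet-18 or the 4-layer CNN used in the paper.

```python
from typing import List


def ala_trainable_params(layer_sizes: List[int], p: int) -> int:
    # Elements in W_i^p: one aggregation weight per parameter in the top p layers.
    if not 0 <= p <= len(layer_sizes):
        raise ValueError("p must be between 0 and the number of layers")
    return sum(layer_sizes[len(layer_sizes) - p:])


def ala_sample_size(num_local_samples: int, s_percent: float) -> int:
    # Size of D_i^{s,t}: s% of the local training set, but at least one sample.
    return max(1, int(num_local_samples * s_percent / 100))


if __name__ == "__main__":
    sizes = [1_000, 20_000, 500_000, 5_000]  # hypothetical per-layer parameter counts
    for p in (1, 2, len(sizes)):
        # prints "1 5000", then "2 505000", then "4 526000"
        print(p, ala_trainable_params(sizes, p))
    print(ala_sample_size(1_000, 5), ala_sample_size(1_000, 100))  # 50 1000
```

Because the top layers of a network can differ greatly in size, moving p by a single layer can change the number of ALA weights by orders of magnitude, which matches the large cost differences the text reports for p.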

Table 1: Impact of the hyperparameters s and p on FedALA

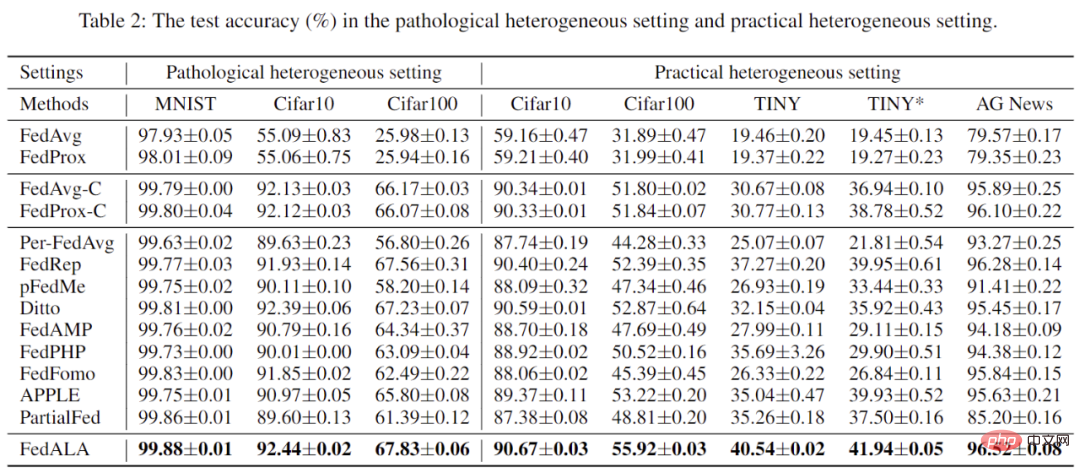

The author compared FedALA with 11 SOTA methods in both the pathological and the practical data-heterogeneous settings. As shown in Table 2, FedALA outperforms all 11 SOTA methods in these settings, where "TINY" denotes a 4-layer CNN on Tiny-ImageNet. For example, FedALA outperforms the best baseline by 3.27% in the TINY case.

Table 2: Experimental results in pathological and practical data-heterogeneous settings

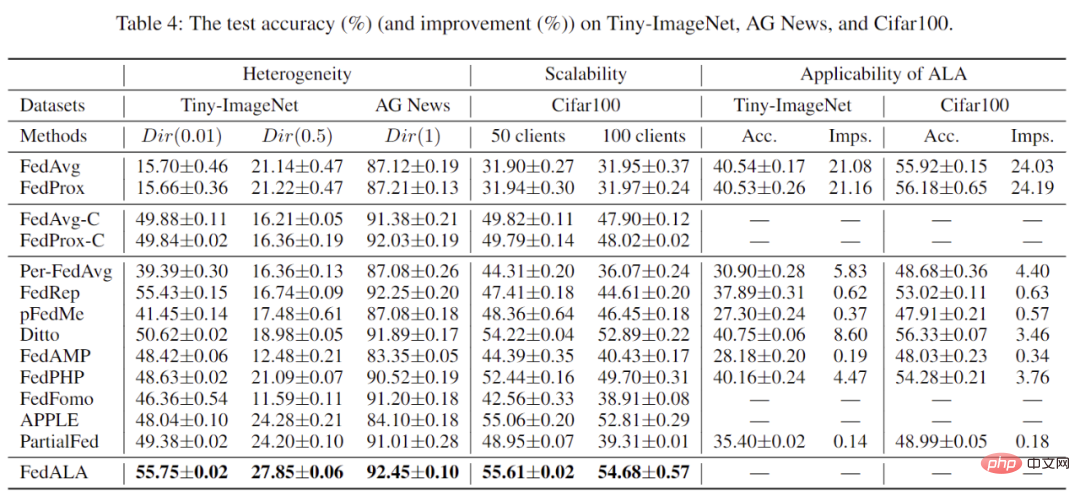

In addition, the author evaluated FedALA under different heterogeneous environments and different total numbers of clients. As shown in Table 3, FedALA maintains excellent performance under these conditions.

Table 3: Other experimental results

According to the experimental results in Table 3, applying the ALA module to other methods yields improvements of up to 24.19%.

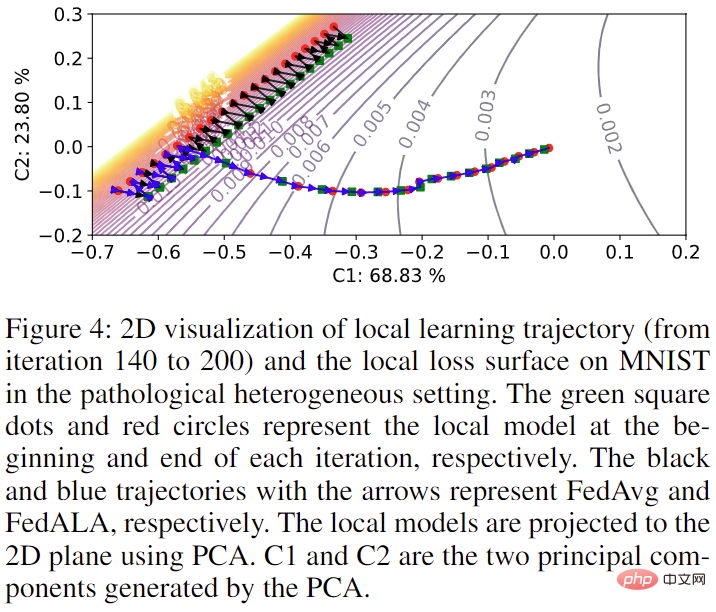

Finally, the author visualized on MNIST how adding the ALA module to the original FL process affects model training, as shown in Figure 4. Before ALA is activated, the training trajectory matches that of FedAvg. Once ALA is activated, the model can use the information it needs, captured from the global model, to optimize directly toward the optimal target.

Figure 4: Visualization of the model training trajectory on client No. 4
