Developers are overjoyed! The shocking LLaMA leak set off a wave of ChatGPT replacements and reshaped the open source LLM field.

Who would have thought that an unexpected LLaMA leak would ignite the biggest spark of innovation in the open source LLM field?

A series of outstanding open source alternatives to ChatGPT, the "alpaca family", then made a dazzling appearance.

The friction between open source and API-based distribution is one of the most pressing tensions in the generative AI ecosystem.

In the text-to-image space, the release of Stable Diffusion clearly demonstrates that open source is a viable distribution mechanism for the underlying model.

However, this is not the case for large language models. The biggest breakthroughs in this field, such as GPT-4, Claude and Cohere's models, are available only through APIs.

Open source alternatives to these models have not shown the same level of performance, especially in their ability to follow human instructions. However, an unexpected leak completely changed this situation.

LLaMA’s “epic” leak

A few weeks ago, Meta AI launched the large language model LLaMA.

LLaMA comes in several sizes: 7B, 13B, 33B and 65B parameters. Although it is smaller than GPT-3, its performance is comparable to GPT-3 on many tasks.

LLaMA was not open source at first, but a week after its release, the model was suddenly leaked on 4chan, triggering thousands of downloads.

This incident can be called an "epic leak" because it has become an endless source of innovation in the field of large language models.

In just a few weeks, innovation in LLM agents built on it has exploded.

Alpaca, Vicuna, Koala, ChatLLaMA, FreedomGPT, ColossalChat... Let us review how this explosion of the "alpaca family" was born.

Alpaca

In mid-March, Alpaca, a large model released by Stanford, became popular.

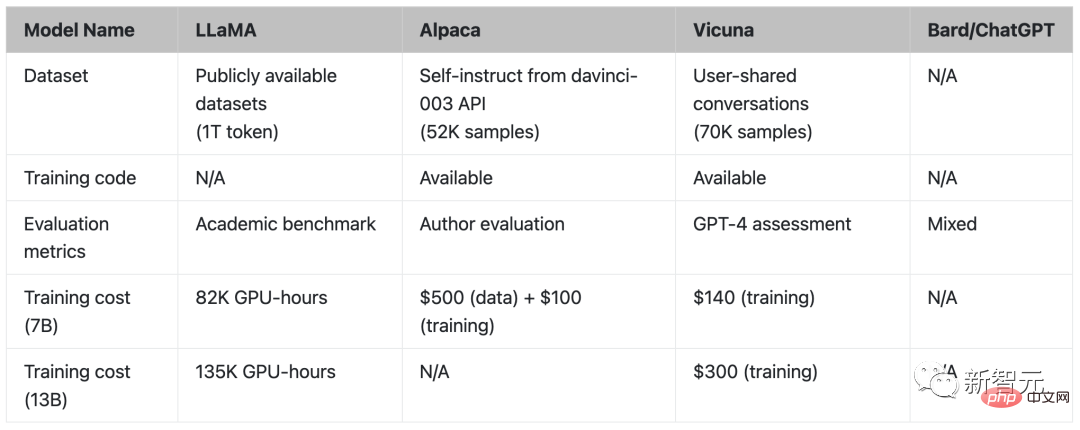

Alpaca is a new model fine-tuned from Meta's LLaMA 7B. It uses only 52K instruction-following examples, yet its performance is roughly on par with GPT-3.5.

Crucially, the training cost is extremely low: less than US$600.

Stanford researchers compared GPT-3.5 (text-davinci-003) with Alpaca 7B and found that the two models performed very similarly: in head-to-head comparisons, Alpaca won 90 times and GPT-3.5 won 89.

To train a high-quality instruction-following model on a limited budget, the Stanford team had to face two important challenges: obtaining a powerful pre-trained language model, and obtaining high-quality instruction-following data.

Conveniently, the LLaMA model provided to academic researchers solved the first problem.

For the second challenge, the paper "Self-Instruct: Aligning Language Models with Self-Generated Instructions" offered a good idea: use an existing strong language model to generate instruction data automatically.

The biggest weakness of the LLaMA model is the lack of instruction fine-tuning. One of OpenAI's biggest innovations is the use of instruction tuning on GPT-3.

To address this, Stanford used an existing large language model to automatically generate instruction-following demonstrations.

Netizens now call Alpaca "the Stable Diffusion of large text models".
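The bootstrapping idea described above can be sketched in a few lines. This is a minimal illustration, not the Stanford pipeline: `generate` is a hypothetical callable standing in for whatever strong language model is used, the prompt wording is invented for illustration, and duplicates are filtered by exact match rather than by the similarity filtering a real pipeline would likely use.

```python
import random
from typing import Callable, Dict, List, Optional


def build_prompt(examples: List[Dict[str, str]]) -> str:
    """Format a few existing tasks as in-context examples and ask for one more."""
    parts = ["Write a new instruction and a good response, following the examples."]
    for i, ex in enumerate(examples, start=1):
        parts.append(
            f"Example {i}\nInstruction: {ex['instruction']}\nResponse: {ex['response']}"
        )
    parts.append(f"Example {len(examples) + 1}\nInstruction:")
    return "\n\n".join(parts)


def parse_completion(text: str) -> Optional[Dict[str, str]]:
    """Split '<instruction> Response: <response>' into a record; None if malformed."""
    if "Response:" not in text:
        return None
    instruction, response = text.split("Response:", 1)
    instruction, response = instruction.strip(), response.strip()
    if not instruction or not response:
        return None
    return {"instruction": instruction, "response": response}


def self_instruct(
    seed_tasks: List[Dict[str, str]],
    generate: Callable[[str], str],  # hypothetical: prompt in, model completion out
    target_size: int,
    shots: int = 3,
    seed: int = 0,
    max_calls: int = 1000,
) -> List[Dict[str, str]]:
    """Grow a pool of instruction data by repeatedly prompting a strong model."""
    rng = random.Random(seed)
    pool = list(seed_tasks)
    seen = {task["instruction"].lower() for task in pool}
    calls = 0
    while len(pool) < target_size and calls < max_calls:
        calls += 1
        examples = rng.sample(pool, k=min(shots, len(pool)))
        record = parse_completion(generate(build_prompt(examples)))
        # Drop malformed completions and exact duplicates (a simplification).
        if record is None or record["instruction"].lower() in seen:
            continue
        seen.add(record["instruction"].lower())
        pool.append(record)
    return pool
```

Newly generated tasks go back into the pool, so later prompts can draw on both the human-written seeds and earlier model output. The `max_calls` cap keeps the loop from running forever if the model keeps repeating itself.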

Vicuna

At the end of March, researchers from UC Berkeley, Carnegie Mellon University, Stanford University, and UC San Diego open sourced Vicuna, a fine-tuned version of LLaMA whose quality, as judged by GPT-4, approaches that of ChatGPT.

The 13-billion-parameter Vicuna is trained by fine-tuning LLaMA on user-shared conversations collected from ShareGPT. The training cost is around US$300.

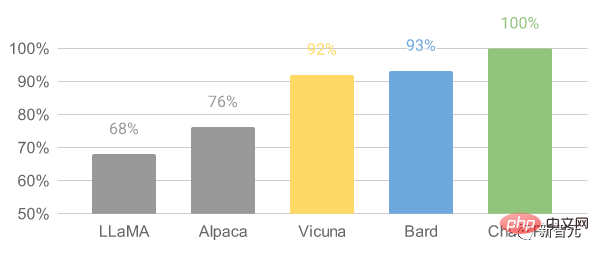

The results show that Vicuna-13B reaches more than 90% of the quality of ChatGPT and Bard, as judged by GPT-4.

For the Vicuna-13B training process, the details are as follows:

First, the researchers collected about 70K conversations from ShareGPT, a website for sharing ChatGPT conversations.

Next, the researchers optimized the training script provided by Alpaca so that the model could better handle multiple rounds of dialogue and long sequences. Then PyTorch FSDP was used for one day of training on 8 A100 GPUs.
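The article does not spell out how the script was changed for multi-round dialogue. One common approach, shown here purely as an assumption, is to flatten the whole conversation into a single sequence and compute the training loss only on the assistant's tokens. In this sketch, whitespace-separated words stand in for a real tokenizer, and the `USER:` / `ASSISTANT:` prefixes and the `build_training_example` helper are hypothetical.

```python
from typing import List, Tuple


def build_training_example(
    turns: List[Tuple[str, str]], max_tokens: int = 2048
) -> Tuple[List[str], List[int]]:
    """Flatten a multi-turn conversation into tokens plus a loss mask.

    turns: (role, text) pairs with role either "user" or "assistant".
    Returns (tokens, loss_mask), where loss_mask[i] == 1 means the token
    is an assistant word and should contribute to the training loss.
    """
    tokens: List[str] = []
    loss_mask: List[int] = []
    for role, text in turns:
        prefix = "ASSISTANT:" if role == "assistant" else "USER:"
        words = [prefix] + text.split()
        flag = 1 if role == "assistant" else 0
        tokens.extend(words)
        # The role prefix itself is never a training target.
        loss_mask.extend([0] + [flag] * (len(words) - 1))
    # Truncate long conversations to the model's context length.
    return tokens[:max_tokens], loss_mask[:max_tokens]
```

With this layout the model still sees the user's turns as context, but it is only penalized for mistakes in its own replies.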

In terms of quality assessment of the model, the researchers created 80 different questions and evaluated the model output using GPT-4.

To compare the different models, the researchers combined the output of each model into a single prompt and then had GPT-4 evaluate which model gave the better answer.
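The single-prompt comparison described above might look roughly like the following. The prompt wording, the 1 to 10 scale, and the helper names are assumptions made for illustration, not the researchers' actual template; the call to the judge model itself is left out.

```python
import re
from typing import Optional, Tuple


def build_judge_prompt(question: str, answer_a: str, answer_b: str) -> str:
    """Combine one question and two model answers into a single judging prompt."""
    return (
        "You are comparing two AI assistants' answers to the same question.\n\n"
        f"[Question]\n{question}\n\n"
        f"[Assistant A]\n{answer_a}\n\n"
        f"[Assistant B]\n{answer_b}\n\n"
        "Rate each answer from 1 to 10. Reply with the two scores on the first "
        "line, separated by a space, then explain your reasoning."
    )


def parse_scores(reply: str) -> Optional[Tuple[float, float]]:
    """Read the two scores from the first line of the judge's reply."""
    stripped = reply.strip()
    first_line = stripped.splitlines()[0] if stripped else ""
    numbers = re.findall(r"\d+(?:\.\d+)?", first_line)
    if len(numbers) != 2:
        return None  # the judge did not follow the requested format
    return float(numbers[0]), float(numbers[1])


def pick_winner(scores: Tuple[float, float]) -> str:
    """Turn a pair of scores into 'A', 'B' or 'tie'."""
    score_a, score_b = scores
    if score_a > score_b:
        return "A"
    if score_b > score_a:
        return "B"
    return "tie"
```

Asking for the scores on a fixed first line keeps parsing simple, and replies that ignore the format can be detected and retried.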

Comparison of LLaMA, Alpaca, Vicuna and ChatGPT

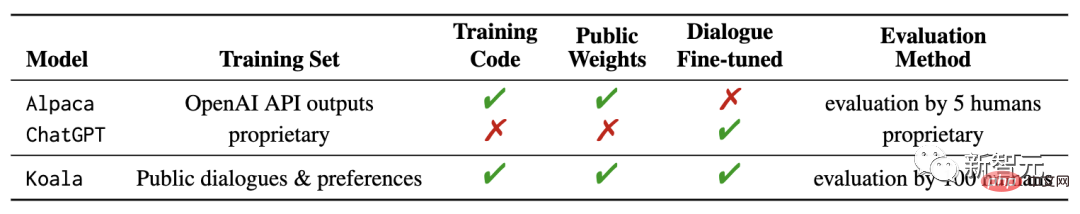

Koala

Recently, Berkeley Artificial Intelligence Research (BAIR) at UC Berkeley released a new model, "Koala". Unlike earlier models that used OpenAI's GPT data for instruction fine-tuning, Koala is trained on high-quality data gathered from the web.

The results show that Koala can effectively answer a variety of user queries. Its answers are often preferred over Alpaca's, and are on par with ChatGPT's at least half of the time.

The researchers hope that these results will further the discussion about the relative performance of large closed-source models versus small public models. In particular, the results suggest that a model small enough to run locally can match the performance of a large model if its training data is carefully collected.

In fact, the results of Stanford's Alpaca model, which fine-tunes LLaMA on data from OpenAI's GPT models, have already shown that the right data can significantly improve smaller open source models.

This is also why the Berkeley researchers developed and released Koala: they hope to provide further experimental evidence for this discussion.

Koala is fine-tuned on freely available interaction data gathered from the web, with a particular focus on interactions with high-performance closed-source models such as ChatGPT.

Rather than crawling as much web data as possible to maximize volume, the researchers focused on collecting a small, high-quality dataset, including ChatGPT distillation data, open source data, and so on.

ChatLLaMA

Nebuly has open sourced ChatLLaMA, a framework that lets us create conversational assistants using our own data.

ChatLLaMA lets us create hyper-personalized ChatGPT-like assistants using our own data and as little computation as possible.

Suppose that in the future we no longer rely on one large assistant that "rules everyone". Instead, everyone could create their own personalized ChatGPT-like assistant to support all kinds of human needs.

However, creating such a personalized assistant requires effort on many fronts: dataset creation, efficient training using RLHF, and inference optimization.

The purpose of this library is to give developers peace of mind by abstracting away the work required to optimize and collect large amounts of data.

ChatLLaMA is designed to help developers handle a variety of use cases, all related to RLHF training and optimized inference. Here are some use case references:

- Creating a ChatGPT-like personalized assistant for a specific vertical task (legal, medical, gaming, academic research, etc.);

- Training an efficient ChatGPT-like assistant with limited data on local hardware infrastructure;

- Creating your own personalized ChatGPT-like assistant while avoiding runaway costs;

- Finding out which model architecture (LLaMA, OPT, GPTJ, etc.) best meets your requirements in terms of hardware, compute budget and performance;

- Aligning the assistant with your personal or company values, culture, brand and manifesto.

FreedomGPT

Built with Electron and React, FreedomGPT is a desktop application that allows users to run LLaMA on their local machine.

FreedomGPT's defining trait is evident from its name: its answers are not subject to any censorship or safety filtering.

This app was developed by Age of AI, an AI venture capital firm.

FreedomGPT is built on Alpaca, which was chosen because it is easier to access and customize than other models.

ChatGPT follows OpenAI’s usage policy and restricts hate, self-harm, threats, violence, and sexual content.

Unlike ChatGPT, FreedomGPT answers questions without bias or favoritism and does not hesitate to address controversial or sensitive topics.

FreedomGPT even answered "How to make a bomb at home", a response that OpenAI specifically removed from GPT-4.

FreedomGPT is unique in that it sidesteps censorship restrictions and handles controversial topics without any safeguards. Its symbol is the Statue of Liberty, because this bold large language model is meant to stand for freedom.

FreedomGPT can even run locally on your computer without the need for an Internet connection.

Additionally, an open source version will be released soon, allowing users and organizations to fully customize it.

ColossalChat

ColossalChat, released by the Colossal-AI project, needs fewer than 10 billion parameters to achieve bilingual Chinese and English capabilities, with results comparable to ChatGPT and GPT-3.5.

In addition, ColossalChat, which is based on the LLaMA model, reproduces the complete RLHF process and is currently the open source project closest to ChatGPT's original technical route.

Chinese-English bilingual training data set

ColossalChat released a bilingual data set containing approximately 100,000 Chinese and English question and answer pairs.

Its seed data was collected and cleaned from real question scenarios on social media platforms and then expanded using self-instruct. The annotation cost was approximately $900.

Compared to datasets generated by other self-instruct methods, this dataset contains more realistic and diverse seed data, covering a wider range of topics.

This data set is suitable for both fine-tuning and RLHF training. Given high-quality data, ColossalChat achieves better conversational interaction, and it also supports Chinese.

Complete RLHF pipeline

The RLHF replication has three stages:

In RLHF-Stage1, the model undergoes supervised instruction fine-tuning on the bilingual dataset described above.

In RLHF-Stage2, human annotators rank different outputs for the same prompt. The rankings are converted into scores, which are then used to supervise the training of the reward model.

In RLHF-Stage3, the model is optimized with a reinforcement learning algorithm. This is the most complex part of the training process.
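To make the reward-model stage more concrete, here is a minimal sketch of how a human ranking can become a training signal. It uses the pairwise ranking loss that is common in RLHF work, `-log(sigmoid(r_preferred - r_rejected))`. Whether ColossalChat uses exactly this formulation is an assumption, and the function names are hypothetical.

```python
import math
from itertools import combinations
from typing import List, Sequence, Tuple


def ranking_to_pairs(ranked_outputs: Sequence[str]) -> List[Tuple[str, str]]:
    """Expand a best-first ranking into (preferred, rejected) pairs."""
    # combinations() keeps input order, so the first item always ranks higher.
    return list(combinations(ranked_outputs, 2))


def pairwise_loss(reward_preferred: float, reward_rejected: float) -> float:
    """-log(sigmoid(diff)), computed in a numerically stable way."""
    diff = reward_preferred - reward_rejected
    # -log(sigmoid(d)) = log(1 + exp(-d)) = max(-d, 0) + log1p(exp(-|d|))
    return max(-diff, 0.0) + math.log1p(math.exp(-abs(diff)))


def mean_ranking_loss(rewards_best_first: Sequence[float]) -> float:
    """Average pairwise loss over all pairs implied by one ranking."""
    pairs = list(combinations(rewards_best_first, 2))
    return sum(pairwise_loss(hi, lo) for hi, lo in pairs) / len(pairs)
```

The loss is small when the reward model scores the human-preferred output well above the rejected one, and it grows when the order is reversed, so minimizing it pushes the reward model toward the human ranking.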

I believe that more projects will be released soon.

No one expected that this unexpected leak of LLaMA would actually ignite the biggest innovation spark in the field of open source LLM.
