Reinforcement learning expert Sergey Levine's new work: three large pre-trained models teach robots to find their way

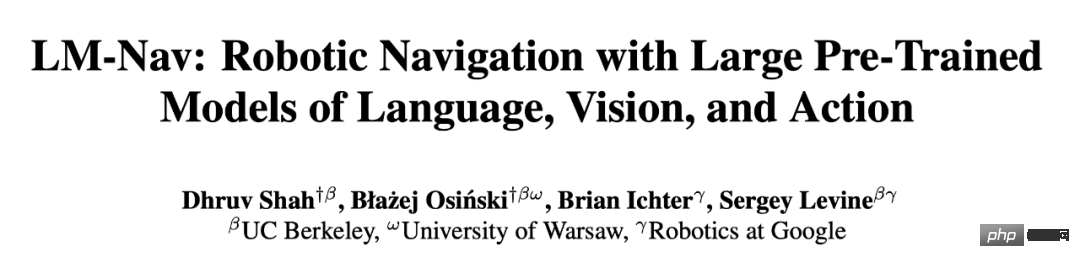

A robot equipped with large pre-trained models has learned to follow language instructions to reach its destination without consulting a map. This result comes from new work by reinforcement learning expert Sergey Levine.

Given a destination, how hard is it to get there smoothly without turn-by-turn navigation?

The task is challenging even for humans with a poor sense of direction. But in a recent study, researchers "taught" a robot to do it using only three pre-trained models.

A core challenge of robot learning is enabling robots to perform a variety of tasks from high-level human instructions. This requires robots that can understand those instructions and that have a large repertoire of actions for carrying them out in the real world.

For instruction-following tasks in navigation, previous work has mainly focused on learning from trajectories annotated with textual instructions. This can enable understanding of textual instructions, but the cost of data annotation has hindered widespread use of the technique. On the other hand, recent work has shown that self-supervised training of goal-conditioned policies can learn robust navigation. These methods train vision-based controllers on large, unlabeled datasets with post hoc relabeling. They are scalable, general, and robust, but usually require cumbersome location-based or image-based goal specification.

In a recent paper, researchers from UC Berkeley, Google, and other institutions aim to combine the advantages of both approaches: a self-supervised robot navigation system that is trained on navigation data without any user annotations, yet can execute natural language instructions by leveraging pre-trained models. The researchers use these models to build an "interface" that communicates tasks to the robot. The system relies on the generalization capabilities of pre-trained language and vision-language models to let the robot accept complex high-level instructions.

- Paper link: https://arxiv.org/pdf/2207.04429.pdf

- Code link: https://github.com/blazejosinski/lm_nav

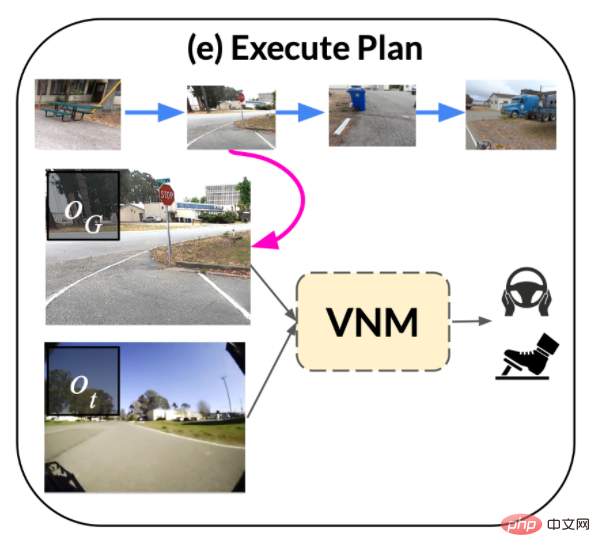

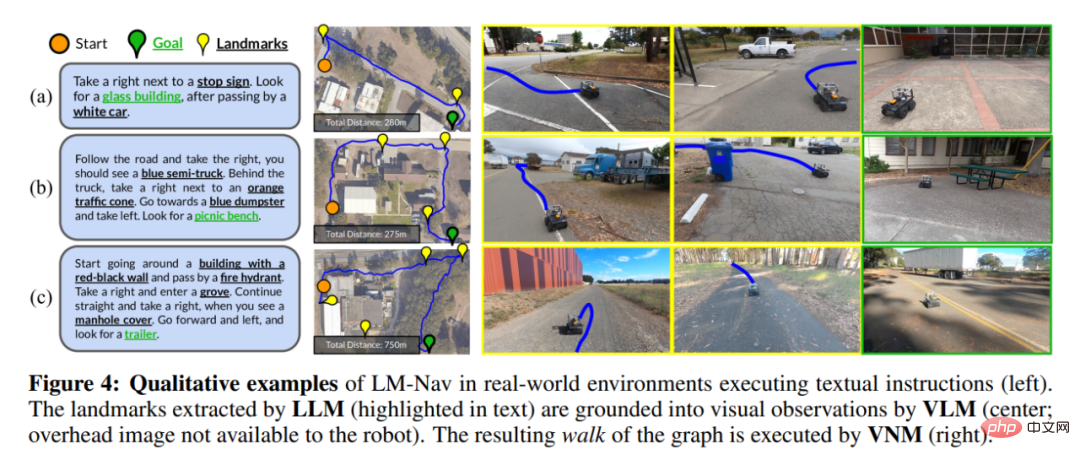

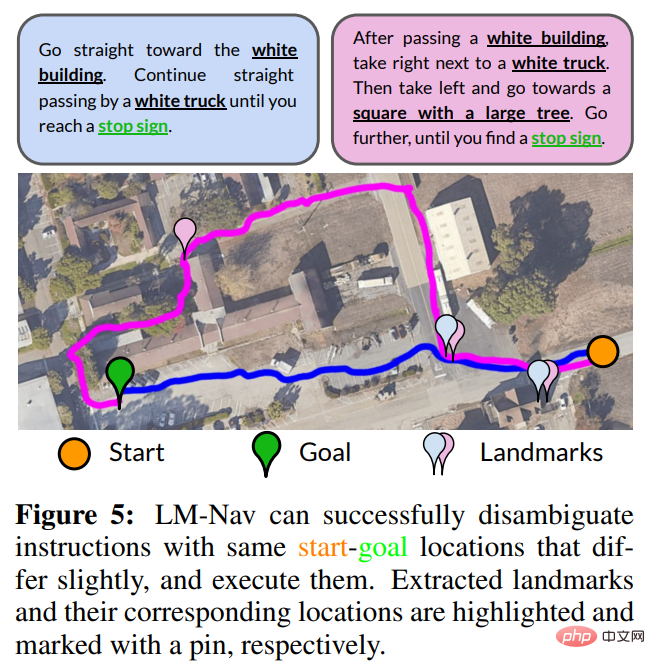

The researchers observed that off-the-shelf models pre-trained on large corpora of visual and language data (corpora that are widely available and that yield zero-shot generalization) can be used to create interfaces for embodied instruction following. To achieve this, they combined the strengths of robot-agnostic pre-trained vision and language models with a pre-trained navigation model.

Specifically, they used a visual navigation model (VNM: ViNG) to turn the robot's visual observations into a topological "mental map" of the environment. Given a free-form text instruction, a pre-trained large language model (LLM: GPT-3) decodes the instruction into a sequence of textual landmarks (called "feature points" in some translations). A vision-language model (VLM: CLIP) then grounds these textual landmarks in the topological map by inferring a joint likelihood over landmarks and nodes. Finally, a new search algorithm maximizes a probabilistic objective to find a path for the robot that matches the instruction, and the VNM executes that path.

The main contribution of the research is LM-Nav (Large Model Navigation), an embodied instruction-following system. It combines three large, independently pre-trained models to enable long-horizon instruction following in complex real-world environments:

- a self-supervised robot control model that uses visual observations and physical actions (VNM);
- a vision-language model that grounds images in text but has no embodiment (VLM);
- a large language model that parses and translates text but has no visual grounding or sense of embodiment (LLM).

According to the researchers, this is the first instantiation of the idea of combining pre-trained vision and language models with a goal-conditioned controller to derive actionable instruction paths in the target environment without any fine-tuning.

Notably, all three models are trained on large-scale datasets with self-supervised objectives and are used out of the box without fine-tuning. Training LM-Nav requires no human annotation of robot navigation data.
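To make the grounding step more concrete, here is a minimal sketch of how landmark descriptions could be matched to graph nodes by embedding similarity. It is an illustration under stated assumptions, not the authors' implementation: the short vectors stand in for CLIP text and image embeddings, the function names and the `temperature` parameter are hypothetical, and normalizing over nodes (rather than over landmarks) is a choice made for this sketch only.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def landmark_node_probs(landmark_vecs, node_vecs, temperature=0.1):
    """Return one row per landmark: a softmax over nodes of similarity / temperature.

    landmark_vecs: stand-ins for text embeddings of landmark phrases.
    node_vecs: stand-ins for image embeddings of the graph nodes.
    """
    table = []
    for lv in landmark_vecs:
        logits = [cosine(lv, nv) / temperature for nv in node_vecs]
        top = max(logits)  # subtract the max for numerical stability
        exps = [math.exp(x - top) for x in logits]
        total = sum(exps)
        table.append([e / total for e in exps])
    return table


# Toy data: two landmarks, three nodes (all values are made up).
landmark_vecs = [[1.0, 0.0], [0.0, 1.0]]
node_vecs = [[0.9, 0.1], [0.1, 0.9], [0.7, 0.7]]
probs = landmark_node_probs(landmark_vecs, node_vecs)
```

Each row of `probs` is a distribution over nodes for one landmark, which is the kind of table a planner can then combine with graph distances.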

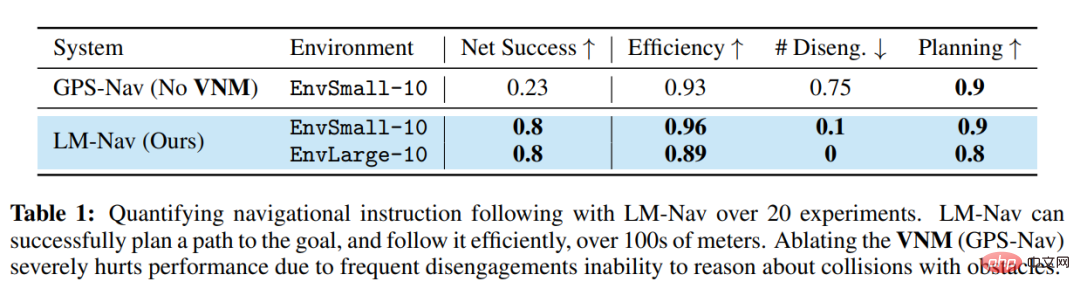

Experiments show that LM-Nav can successfully follow natural language instructions in new environments, using fine-grained commands to disambiguate paths, over hundreds of meters of complex suburban navigation.

LM-Nav model overview

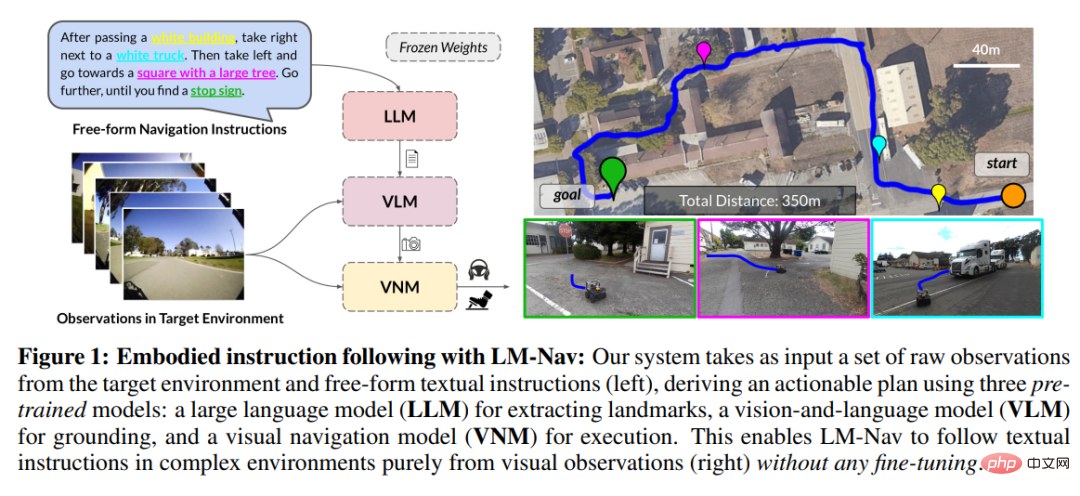

So, how do researchers use pre-trained image and language models to provide text interfaces for visual navigation models?

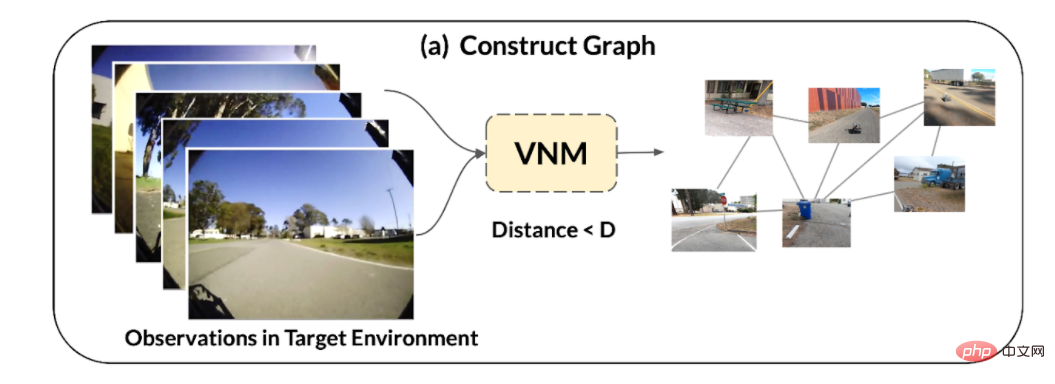

1. Given a set of observations in the target environment, use the target conditional distance function, which is the visual navigation model (VNM) part, infer the connectivity between them, and build a topological map of the connectivity in the environment.

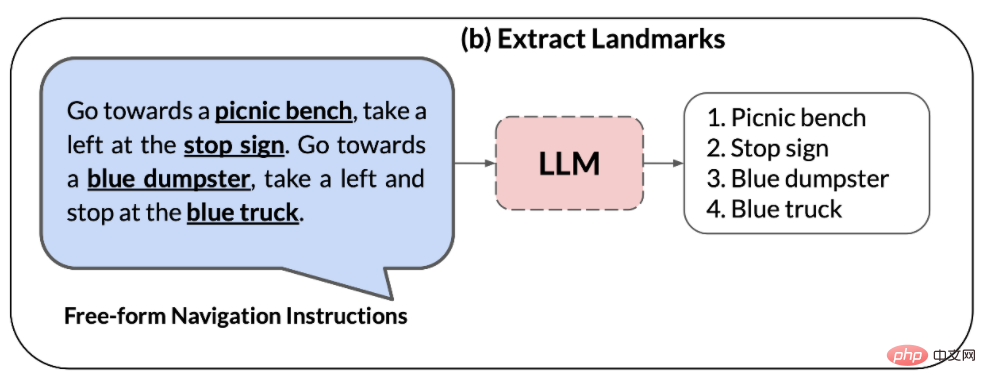

## 2. Large language model (LLM) is used to parse natural language instructions into a series of feature points, these Feature points can be used as intermediate sub-goals for navigation.

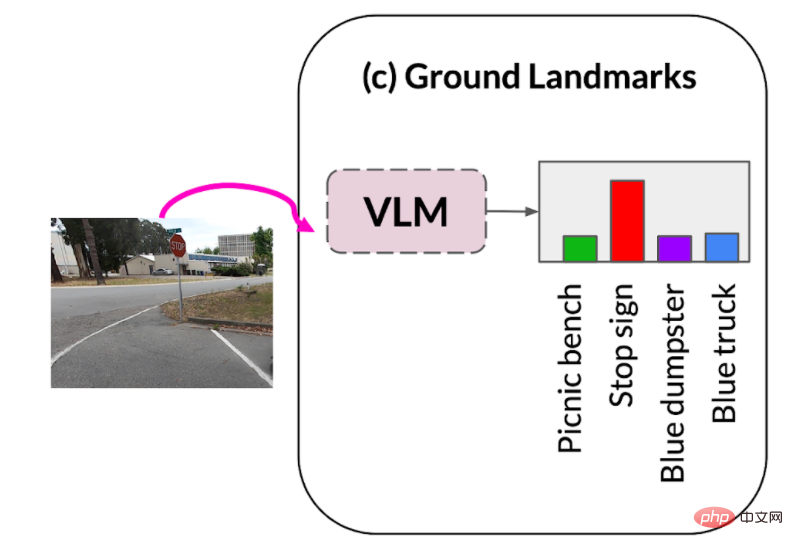

3. Visual-language model (VLM) is used to establish visual observations based on feature point phrases. The vision-language model infers a joint probability distribution over the feature point descriptions and images (forming the nodes in the graph above).

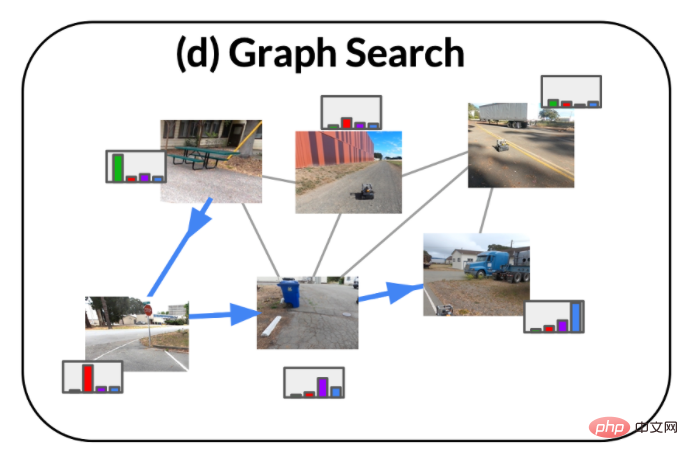

4. Using the probability distribution of VLM and the graph connectivity inferred by VNM, adopts a novel search algorithm , retrieve an optimal instruction path in the environment, which (i) satisfies the original instruction and (ii) is the shortest path in the graph that can achieve the goal.

5. Then, The instruction path is executed by the target condition policy, which is part of the VNM.
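Steps 1 and 4 can be sketched in a few lines. The code below is a simplified variant for illustration, not the search algorithm from the paper: it thresholds a matrix of predicted distances to get graph edges, computes all-pairs shortest paths with Floyd-Warshall, and then uses dynamic programming to pick one node per landmark, in order, trading off log-probability against travel distance. The names `build_graph`, `all_pairs_shortest`, `plan`, `max_edge`, and `alpha` are hypothetical, and the numbers are made up.

```python
import math

INF = float("inf")


def build_graph(pred_dist, max_edge):
    """Keep edge i -> j only when the predicted distance is at most max_edge."""
    n = len(pred_dist)
    return [[pred_dist[i][j] if i != j and pred_dist[i][j] <= max_edge else INF
             for j in range(n)] for i in range(n)]


def all_pairs_shortest(edges):
    """Floyd-Warshall shortest-path distances over the edge matrix."""
    n = len(edges)
    sp = [row[:] for row in edges]
    for i in range(n):
        sp[i][i] = 0.0
    for k in range(n):
        for i in range(n):
            for j in range(n):
                if sp[i][k] + sp[k][j] < sp[i][j]:
                    sp[i][j] = sp[i][k] + sp[k][j]
    return sp


def plan(probs, sp, start, alpha=0.1):
    """Choose one node per landmark (in order), maximizing
    sum(log prob) - alpha * total shortest-path distance. Requires alpha > 0."""
    n = len(sp)
    score = [0.0 if v == start else -INF for v in range(n)]
    back = []  # back[i][v]: best previous node when landmark i is placed at v
    for row in probs:
        new_score, arg = [], []
        for v in range(n):
            u_best = max(range(n), key=lambda u: score[u] - alpha * sp[u][v])
            gain = math.log(row[v]) if row[v] > 0 else -INF
            new_score.append(gain + score[u_best] - alpha * sp[u_best][v])
            arg.append(u_best)
        score = new_score
        back.append(arg)
    v = max(range(n), key=lambda u: score[u])
    nodes = [v]
    for arg in reversed(back[1:]):  # walk back through earlier landmarks
        v = arg[v]
        nodes.append(v)
    return nodes[::-1]


# Toy example: four nodes on a line, two landmarks (all values are made up).
pred_dist = [[0.0, 1.0, 5.0, 5.0],
             [1.0, 0.0, 1.0, 5.0],
             [5.0, 1.0, 0.0, 1.0],
             [5.0, 5.0, 1.0, 0.0]]
probs = [[0.05, 0.8, 0.1, 0.05],   # landmark 0 looks most like node 1
         [0.05, 0.05, 0.1, 0.8]]   # landmark 1 looks most like node 3
sp = all_pairs_shortest(build_graph(pred_dist, max_edge=1.5))
route = plan(probs, sp, start=0)
```

In this toy setup `route` is `[1, 3]`: the planner places the first landmark at node 1 and the second at node 3. A real system would hand consecutive nodes along the shortest paths between these choices to the goal-conditioned policy for execution.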

When evaluating the VLM's landmark retrieval on its own, the researchers found that CLIP, although it is the best off-the-shelf model for this type of task, fails to retrieve a small number of "hard" landmarks, including fire hydrants and cement mixers. In many real-world situations, however, the robot can still find a path that visits the remaining landmarks.

Quantitative Evaluation

Table 1 summarizes the quantitative performance of the system on 20 instructions. In 85% of the experiments, LM-Nav was able to follow the instructions consistently without collisions or disengagements (an average of one intervention per 6.4 kilometers of travel). Compared with the baseline without a navigation model, LM-Nav consistently performs better at executing efficient, collision-free paths to the goal. In all unsuccessful experiments, the failure can be attributed to the planning phase: the search algorithm was unable to visually locate certain "hard" landmarks in the graph, which resulted in incomplete execution of the instructions. An investigation of these failure modes showed that the most critical part of the system is the VLM's ability to detect unfamiliar landmarks, such as fire hydrants, and to handle scenes under challenging lighting conditions, such as underexposed images.

The above is the detailed content of Reinforcement learning guru Sergey Levine's new work: Three large models teach robots to recognize their way. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1386

1386

52

52

This article will take you to understand SHAP: model explanation for machine learning

Jun 01, 2024 am 10:58 AM

This article will take you to understand SHAP: model explanation for machine learning

Jun 01, 2024 am 10:58 AM

In the fields of machine learning and data science, model interpretability has always been a focus of researchers and practitioners. With the widespread application of complex models such as deep learning and ensemble methods, understanding the model's decision-making process has become particularly important. Explainable AI|XAI helps build trust and confidence in machine learning models by increasing the transparency of the model. Improving model transparency can be achieved through methods such as the widespread use of multiple complex models, as well as the decision-making processes used to explain the models. These methods include feature importance analysis, model prediction interval estimation, local interpretability algorithms, etc. Feature importance analysis can explain the decision-making process of a model by evaluating the degree of influence of the model on the input features. Model prediction interval estimate

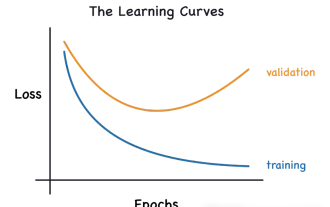

Identify overfitting and underfitting through learning curves

Apr 29, 2024 pm 06:50 PM

Identify overfitting and underfitting through learning curves

Apr 29, 2024 pm 06:50 PM

This article will introduce how to effectively identify overfitting and underfitting in machine learning models through learning curves. Underfitting and overfitting 1. Overfitting If a model is overtrained on the data so that it learns noise from it, then the model is said to be overfitting. An overfitted model learns every example so perfectly that it will misclassify an unseen/new example. For an overfitted model, we will get a perfect/near-perfect training set score and a terrible validation set/test score. Slightly modified: "Cause of overfitting: Use a complex model to solve a simple problem and extract noise from the data. Because a small data set as a training set may not represent the correct representation of all data." 2. Underfitting Heru

The evolution of artificial intelligence in space exploration and human settlement engineering

Apr 29, 2024 pm 03:25 PM

The evolution of artificial intelligence in space exploration and human settlement engineering

Apr 29, 2024 pm 03:25 PM

In the 1950s, artificial intelligence (AI) was born. That's when researchers discovered that machines could perform human-like tasks, such as thinking. Later, in the 1960s, the U.S. Department of Defense funded artificial intelligence and established laboratories for further development. Researchers are finding applications for artificial intelligence in many areas, such as space exploration and survival in extreme environments. Space exploration is the study of the universe, which covers the entire universe beyond the earth. Space is classified as an extreme environment because its conditions are different from those on Earth. To survive in space, many factors must be considered and precautions must be taken. Scientists and researchers believe that exploring space and understanding the current state of everything can help understand how the universe works and prepare for potential environmental crises

Implementing Machine Learning Algorithms in C++: Common Challenges and Solutions

Jun 03, 2024 pm 01:25 PM

Implementing Machine Learning Algorithms in C++: Common Challenges and Solutions

Jun 03, 2024 pm 01:25 PM

Common challenges faced by machine learning algorithms in C++ include memory management, multi-threading, performance optimization, and maintainability. Solutions include using smart pointers, modern threading libraries, SIMD instructions and third-party libraries, as well as following coding style guidelines and using automation tools. Practical cases show how to use the Eigen library to implement linear regression algorithms, effectively manage memory and use high-performance matrix operations.

How can AI make robots more autonomous and adaptable?

Jun 03, 2024 pm 07:18 PM

How can AI make robots more autonomous and adaptable?

Jun 03, 2024 pm 07:18 PM

In the field of industrial automation technology, there are two recent hot spots that are difficult to ignore: artificial intelligence (AI) and Nvidia. Don’t change the meaning of the original content, fine-tune the content, rewrite the content, don’t continue: “Not only that, the two are closely related, because Nvidia is expanding beyond just its original graphics processing units (GPUs). The technology extends to the field of digital twins and is closely connected to emerging AI technologies. "Recently, NVIDIA has reached cooperation with many industrial companies, including leading industrial automation companies such as Aveva, Rockwell Automation, Siemens and Schneider Electric, as well as Teradyne Robotics and its MiR and Universal Robots companies. Recently,Nvidiahascoll

Cloud Whale Xiaoyao 001 sweeping and mopping robot has a 'brain'! | Experience

Apr 26, 2024 pm 04:22 PM

Cloud Whale Xiaoyao 001 sweeping and mopping robot has a 'brain'! | Experience

Apr 26, 2024 pm 04:22 PM

Sweeping and mopping robots are one of the most popular smart home appliances among consumers in recent years. The convenience of operation it brings, or even the need for no operation, allows lazy people to free their hands, allowing consumers to "liberate" from daily housework and spend more time on the things they like. Improved quality of life in disguised form. Riding on this craze, almost all home appliance brands on the market are making their own sweeping and mopping robots, making the entire sweeping and mopping robot market very lively. However, the rapid expansion of the market will inevitably bring about a hidden danger: many manufacturers will use the tactics of sea of machines to quickly occupy more market share, resulting in many new products without any upgrade points. It is also said that they are "matryoshka" models. Not an exaggeration. However, not all sweeping and mopping robots are

Five schools of machine learning you don't know about

Jun 05, 2024 pm 08:51 PM

Five schools of machine learning you don't know about

Jun 05, 2024 pm 08:51 PM

Machine learning is an important branch of artificial intelligence that gives computers the ability to learn from data and improve their capabilities without being explicitly programmed. Machine learning has a wide range of applications in various fields, from image recognition and natural language processing to recommendation systems and fraud detection, and it is changing the way we live. There are many different methods and theories in the field of machine learning, among which the five most influential methods are called the "Five Schools of Machine Learning". The five major schools are the symbolic school, the connectionist school, the evolutionary school, the Bayesian school and the analogy school. 1. Symbolism, also known as symbolism, emphasizes the use of symbols for logical reasoning and expression of knowledge. This school of thought believes that learning is a process of reverse deduction, through existing

Explainable AI: Explaining complex AI/ML models

Jun 03, 2024 pm 10:08 PM

Explainable AI: Explaining complex AI/ML models

Jun 03, 2024 pm 10:08 PM

Translator | Reviewed by Li Rui | Chonglou Artificial intelligence (AI) and machine learning (ML) models are becoming increasingly complex today, and the output produced by these models is a black box – unable to be explained to stakeholders. Explainable AI (XAI) aims to solve this problem by enabling stakeholders to understand how these models work, ensuring they understand how these models actually make decisions, and ensuring transparency in AI systems, Trust and accountability to address this issue. This article explores various explainable artificial intelligence (XAI) techniques to illustrate their underlying principles. Several reasons why explainable AI is crucial Trust and transparency: For AI systems to be widely accepted and trusted, users need to understand how decisions are made