300 years of artificial intelligence! The father of LSTM writes a 10,000-word long article: Detailed explanation of the development history of modern AI and deep learning

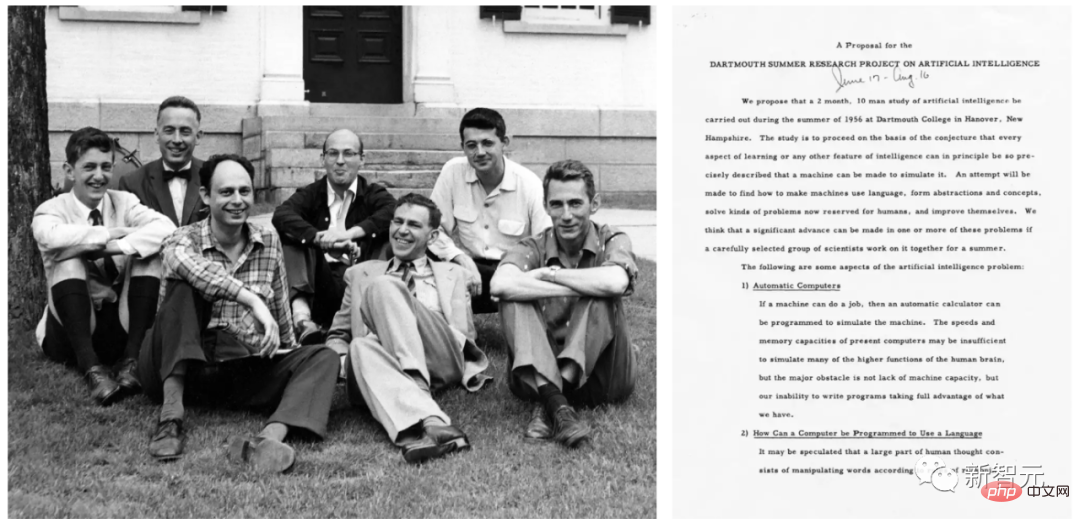

The term "artificial intelligence" was first formally proposed at the Dartmouth Conference in 1956 by John McCarthy and others.

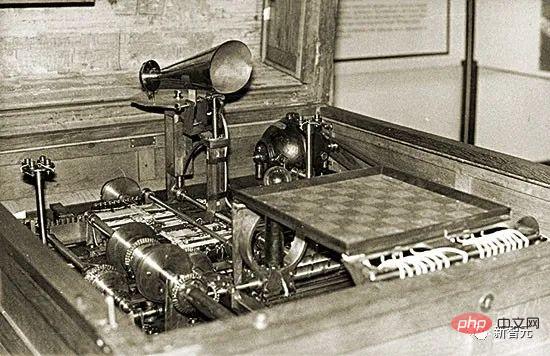

Practical AI dates back at least to 1914, when Leonardo Torres y Quevedo built the first working chess endgame player. At the time, chess was considered an activity restricted to the realm of intelligent creatures.

The theory of artificial intelligence can be traced back to 1931-34, when Kurt Gödel identified the fundamental limits of any type of computation-based artificial intelligence.

A history of AI written in the 1980s would have emphasized topics such as theorem proving, logic programming, expert systems, and heuristic search.

A history of AI written in the early 2000s would have placed greater emphasis on topics such as support vector machines and kernel methods, Bayesian reasoning and other probabilistic and statistical concepts, decision trees, ensemble methods, swarm intelligence, and evolutionary computation, techniques that drove many successful AI applications.

A history of AI written in the 2020s is more "retro": it must emphasize even older concepts such as the chain rule and deep nonlinear artificial neural networks trained by gradient descent, especially feedback-based recurrent networks.

Schmidhuber states that this article corrects previous, misleading "histories of deep learning", which in his view ignored much of the seminal work discussed here.

Schmidhuber also refutes a common misconception that neural networks "were introduced in the 1980s as a tool to help computers recognize patterns and simulate human intelligence." In fact, neural networks existed long before the 1980s.

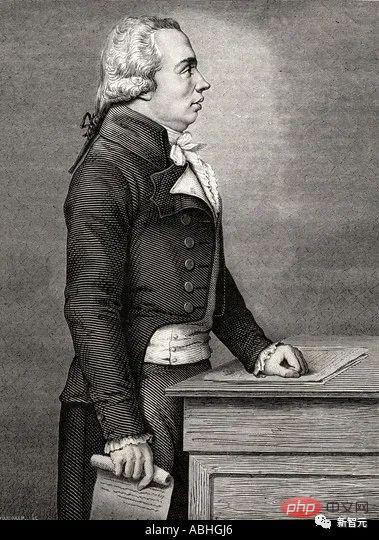

1. 1676: The chain rule for backward credit assignment

In 1676, Gottfried Wilhelm Leibniz published the chain rule of calculus in a memoir. Today, this rule is at the heart of credit assignment in deep neural networks and is the foundation of modern deep learning.

Gottfried Wilhelm Leibniz

Neural networks have nodes or neurons that compute differentiable functions of inputs from other neurons, which in turn compute differentiable functions of inputs from yet other neurons. To find out how the final output changes when the parameters or weights of earlier functions are modified, you need the chain rule.
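A minimal sketch of that idea, assuming a hypothetical two-neuron network y = tanh(w2 * tanh(w1 * x)): the derivative of the output with respect to the earlier weight w1 is the product of the local derivatives along the path, and a finite-difference estimate serves as a check. The weights and input values are illustrative.

```python
import math

# Illustrative assumption: two stacked tanh neurons, h = tanh(w1*x), y = tanh(w2*h)
def forward(w1: float, w2: float, x: float) -> float:
    h = math.tanh(w1 * x)
    return math.tanh(w2 * h)

def dy_dw1(w1: float, w2: float, x: float) -> float:
    """Chain rule: dy/dw1 = dy/dh * dh/dw1."""
    h = math.tanh(w1 * x)
    y = math.tanh(w2 * h)
    dy_dh = (1.0 - y * y) * w2   # outer neuron's derivative w.r.t. its input h
    dh_dw1 = (1.0 - h * h) * x   # inner neuron's derivative w.r.t. its weight w1
    return dy_dh * dh_dw1

w1, w2, x = 0.5, -1.2, 0.8
eps = 1e-6
numeric = (forward(w1 + eps, w2, x) - forward(w1 - eps, w2, x)) / (2 * eps)
analytic = dy_dw1(w1, w2, x)
print(analytic, numeric)  # the two estimates should agree closely
```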

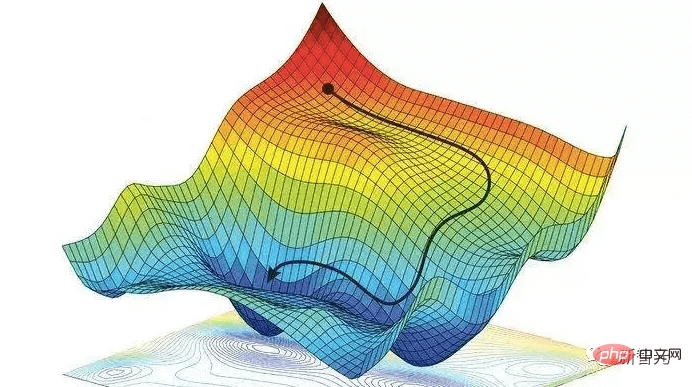

The answer is also used by the gradient descent technique. To teach a neural network to transform input patterns from the training set into the desired output patterns, all of its weights are iteratively changed a little in the direction of maximal local improvement, creating a slightly better network, and so on, gradually approaching an optimal combination of weights and biases that minimizes the loss function.
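The iterative "change the weights a little" loop can be sketched on a toy problem. The one-weight loss, the learning rate of 0.1 and the 100 steps below are illustrative assumptions, not values from the article.

```python
# Illustrative assumption: a toy loss with its minimum at w = 3
def loss(w: float) -> float:
    return (w - 3.0) ** 2

def grad(w: float) -> float:
    # Derivative of the toy loss with respect to w
    return 2.0 * (w - 3.0)

w = 0.0
learning_rate = 0.1
for step in range(100):
    w -= learning_rate * grad(w)  # small step in the direction of steepest descent
print(w, loss(w))
```

Each step shrinks the distance to the minimum by a constant factor here (0.8), which is why the weight approaches 3 geometrically.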

It is worth noting that Leibniz also co-invented calculus: he and Isaac Newton discovered it independently, and the notation Leibniz used is the one more widely used today. His notation is generally considered more comprehensive and more broadly applicable.

In addition, Leibniz has been called "the world's first computer scientist." In 1673 he designed the first machine that could perform all four arithmetic operations, laying a foundation for modern computer science.

2. Early 19th Century: Neural Networks, Linear Regression and Shallow Learning

In 1805, Adrien-Marie Legendre published what is now often called a linear neural network.

Adrien-Marie Legendre

Johann Carl Friedrich Gauss was also credited with similar, unpublished work from around 1795.

This neural network from more than two centuries ago has two layers: an input layer with several input units, and an output layer. Each input unit can hold a real-valued number and is connected to the output by a connection with a real-valued weight.

The network's output is the sum of the products of the inputs and their weights. Given a training set of input vectors and a desired target value for each vector, the weights are adjusted so that the sum of squared errors between the network's outputs and the corresponding targets is minimized.

Of course, this was not called a neural network at the time. It was called the method of least squares, also widely known as linear regression. But it is mathematically identical to today's linear neural networks: same basic algorithm, same error function, same adaptive parameters/weights.
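To make the equivalence concrete, here is a sketch that fits a linear neural network by least squares with NumPy's `numpy.linalg.lstsq`. The synthetic data, the three inputs and the extra bias weight are assumptions for illustration; the text above describes weights only.

```python
import numpy as np

rng = np.random.default_rng(0)

# Illustrative synthetic training set: 100 input vectors with 3 inputs each
X = rng.normal(size=(100, 3))
true_w = np.array([2.0, -1.0, 0.5])
true_b = 0.3
y = X @ true_w + true_b  # noise-free targets

# Append a constant input of 1 so the bias is learned as an ordinary weight
X1 = np.hstack([X, np.ones((X.shape[0], 1))])

# Least squares: minimize the sum of squared errors between outputs and targets
params, residuals, rank, singular_values = np.linalg.lstsq(X1, y, rcond=None)
w, b = params[:3], params[3]

def linear_nn(x: np.ndarray) -> np.ndarray:
    """Two-layer linear network: weighted sum of the inputs plus a bias."""
    return x @ w + b
```

Because the targets here are noise-free and the inputs have full rank, the solution recovers the generating weights; with noisy data it would return the weights minimizing the squared error instead.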

Johann Carl Friedrich Gauss

This simple neural network performs "shallow learning", as opposed to "deep learning" with many nonlinear layers. In fact, many neural network courses begin with this method and then move on to more complex and deeper networks.

Today, students of all technical disciplines are required to take mathematics classes, especially analysis, linear algebra and statistics. In all of these fields, many important results and methods are attributed to Gauss: the fundamental theorem of algebra, Gaussian elimination, the Gaussian distribution in statistics, and more.

The man known as "the greatest mathematician since antiquity" also pioneered differential geometry, number theory (his favorite subject) and non-Euclidean geometry. Without his results, modern engineering, including AI, would be unthinkable.

3. 1920-1925: The first recurrent neural network

Like the human brain, a recurrent neural network (RNN) has feedback connections: one can follow directed connections from some internal node to other nodes and eventually end up back at the starting node. This is essential for remembering past events while processing sequences.

In the 1920s, physicists Ernst Ising and Wilhelm Lenz introduced and analyzed the first non-learning RNN architecture: the Ising model. It settles into an equilibrium state in response to input conditions, and it is the basis of the first learning RNNs.

In 1972, Shun-Ichi Amari made the recurrent architecture of the Ising model adaptive: by changing its connection weights, it learned to associate input patterns with output patterns. This was the world's first learning RNN.

Today, the most popular RNN is the Long Short-Term Memory (LSTM) network proposed by Schmidhuber and his student Sepp Hochreiter. It has become the most cited neural network of the 20th century.
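The behavior of such a learning recurrent network can be sketched with a Hopfield-style associative memory. This is an illustrative assumption, not Amari's exact 1972 formulation: a Hebbian outer-product rule stores ±1 patterns in the weights, and repeated updates let the network settle into an equilibrium that recovers a stored pattern from a corrupted one.

```python
import numpy as np

# Illustrative assumption: Hopfield-style Hebbian storage and recall
def train_hebbian(patterns: np.ndarray) -> np.ndarray:
    """Store +/-1 patterns with the outer-product (Hebbian) rule."""
    n = patterns.shape[1]
    W = patterns.T @ patterns / n
    np.fill_diagonal(W, 0.0)  # no self-connections
    return W

def settle(W: np.ndarray, state: np.ndarray, max_steps: int = 20) -> np.ndarray:
    """Update all units until the state stops changing (an equilibrium)."""
    for _ in range(max_steps):
        new_state = np.where(W @ state >= 0, 1, -1)
        if np.array_equal(new_state, state):
            break
        state = new_state
    return state

patterns = np.array([
    [1, 1, 1, 1, -1, -1, -1, -1],
    [1, -1, 1, -1, 1, -1, 1, -1],
])
W = train_hebbian(patterns)
noisy = patterns[0].copy()
noisy[0] = -noisy[0]  # corrupt one unit
recalled = settle(W, noisy)
```

The two example patterns are orthogonal, which keeps the crosstalk between stored memories small enough for a one-unit error to be corrected.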

4. 1958: Multi-layer feedforward neural network

In 1958, Frank Rosenblatt combined linear neural networks with threshold functions to design deeper multilayer perceptrons (MLPs).

Frank Rosenblatt

Multilayer perceptrons are loosely modeled on the human nervous system and learn to make predictions from data. What they learn is stored in their weights, and during training an algorithm adjusts these weights to reduce the error between actual and predicted values.

Because multilayer feedforward networks are often trained with the error backpropagation algorithm, this is regarded as a standard supervised learning method in pattern recognition, and it remains a subject of research in computational neuroscience and parallel distributed processing.
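A single threshold unit trained with Rosenblatt's perceptron rule gives a feel for the "adjust weights to reduce the error" loop. This sketch covers one adaptive threshold layer only, not a full MLP; the AND task, learning rate and epoch count are illustrative assumptions.

```python
import numpy as np

def threshold(z):
    """Step activation: 1 if the weighted sum is non-negative, else 0."""
    return (z >= 0).astype(int)

def train_perceptron(X, targets, lr=1.0, epochs=100):
    # Illustrative assumption: zero initialization and a fixed epoch budget
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        for x, t in zip(X, targets):
            y = threshold(x @ w + b)
            # Perceptron rule: weights change only when the prediction is wrong
            w += lr * (t - y) * x
            b += lr * (t - y)
    return w, b

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
targets = np.array([0, 0, 0, 1])  # logical AND is linearly separable
w, b = train_perceptron(X, targets)
predictions = threshold(X @ w + b)
```

With a learning rate of 1.0 every weight stays an exact integer, which avoids floating-point ties at the threshold; the perceptron convergence theorem guarantees a solution for linearly separable data such as AND.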

5. 1965: The first deep learning

The successful learning of deep feedforward network architectures began in Ukraine in 1965, when Alexey Ivakhnenko and Valentin Lapa introduced the first general, working learning algorithm for deep MLPs with arbitrarily many hidden layers.

Alexey Ivakhnenko

Given a training set of input vectors with corresponding target output vectors, layers are incrementally grown and trained by regression analysis, then pruned with the help of a separate validation set, where regularization is used to weed out superfluous units. The number of layers and of units per layer is learned in a problem-dependent fashion.
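This layer-growing procedure is known as the Group Method of Data Handling (GMDH). The sketch below assumes its common textbook form rather than Ivakhnenko and Lapa's exact algorithm: every candidate unit is a quadratic polynomial of two inputs fitted by least squares, only the units with the lowest validation error survive (pruning), and layers stop being added once the validation error no longer improves. The data, `keep` and `max_layers` are illustrative assumptions.

```python
import itertools
import numpy as np

def quad_features(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Design matrix for y ~ c0 + c1*a + c2*b + c3*a*b + c4*a^2 + c5*b^2."""
    return np.column_stack([np.ones_like(a), a, b, a * b, a * a, b * b])

def fit_layer(train_in, train_y, val_in, val_y, keep):
    """Fit one unit per input pair by regression; keep the best on validation data."""
    candidates = []
    for i, j in itertools.combinations(range(train_in.shape[1]), 2):
        F_train = quad_features(train_in[:, i], train_in[:, j])
        coef, *_ = np.linalg.lstsq(F_train, train_y, rcond=None)
        val_out = quad_features(val_in[:, i], val_in[:, j]) @ coef
        err = np.mean((val_out - val_y) ** 2)
        candidates.append((err, F_train @ coef, val_out))
    candidates.sort(key=lambda c: c[0])
    best = candidates[:keep]  # pruning: superfluous units are discarded
    return (best[0][0],
            np.column_stack([c[1] for c in best]),
            np.column_stack([c[2] for c in best]))

def gmdh(train_x, train_y, val_x, val_y, keep=4, max_layers=5):
    """Grow layers until the validation error stops improving."""
    best_err, layers = np.inf, 0
    tr, va = train_x, val_x
    for _ in range(max_layers):
        err, tr_next, va_next = fit_layer(tr, train_y, va, val_y, keep)
        if err >= best_err:
            break  # the new layer did not help: stop growing
        best_err, layers, tr, va = err, layers + 1, tr_next, va_next
    return layers, best_err

# Illustrative data: a nonlinear target of 4 inputs, 150 training / 50 validation rows
rng = np.random.default_rng(1)
X = rng.uniform(-1, 1, size=(200, 4))
y = X[:, 0] * X[:, 1] + np.sin(X[:, 2]) - X[:, 3] ** 2
layers, val_err = gmdh(X[:150], y[:150], X[150:], y[150:])
```

The depth returned depends on the data: the validation set, not a fixed architecture, decides how many layers survive.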

Like later deep neural networks, Ivakhnenko’s network learned to create layered, distributed, internal representations of incoming data.

He did not call them deep learning neural networks, but that is what they were. In fact, the term "deep learning" was first introduced to machine learning by Dechter in 1986, and to artificial neural networks by Aizenberg et al. in 2000.

6. 1967-68: Stochastic Gradient Descent

In 1967, Shun-Ichi Amari first proposed training neural networks with stochastic gradient descent (SGD).

In computer experiments by Amari's student Saito, a five-layer MLP with two modifiable layers learned internal representations to classify non-linearly separable pattern classes.

Rumelhart, Hinton et al. did similar work in 1986 and called their method backpropagation.
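A sketch of SGD on a small MLP with two modifiable layers, learning the non-linearly separable XOR classes. The layer sizes, learning rate, epoch count and random seed are illustrative assumptions, and the gradients are derived by hand with the chain rule rather than taken from Amari's paper.

```python
import numpy as np

rng = np.random.default_rng(0)
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
T = np.array([0, 1, 1, 0], dtype=float)  # XOR targets: not linearly separable

# Illustrative assumption: 2 inputs -> 8 tanh hidden units -> 1 sigmoid output
W1 = rng.normal(size=(2, 8))
b1 = np.zeros(8)
W2 = rng.normal(size=8)
b2 = 0.0

def predict(x):
    """Return the output probability and the hidden activations."""
    h = np.tanh(x @ W1 + b1)
    return 1.0 / (1.0 + np.exp(-(h @ W2 + b2))), h

def mean_squared_error():
    p, _ = predict(X)
    return float(np.mean((p - T) ** 2))

initial_loss = mean_squared_error()
lr = 0.5
for epoch in range(2000):
    for k in rng.permutation(len(X)):  # stochastic: one random example at a time
        x, t = X[k], T[k]
        p, h = predict(x)
        # Chain rule for the squared error (the constant factor 2 is folded into lr)
        d_out = (p - t) * p * (1.0 - p)
        d_hidden = d_out * W2 * (1.0 - h ** 2)  # uses W2 before it is updated
        W2 -= lr * d_out * h
        b2 -= lr * d_out
        W1 -= lr * np.outer(x, d_hidden)
        b1 -= lr * d_hidden
final_loss = mean_squared_error()
```

Both layers receive gradient updates, which is what distinguishes this from training only an output layer on fixed features.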

7. 1970: Backpropagation algorithm

In 1970, Seppo Linnainmaa was the first to publish the backpropagation algorithm, the famous algorithm for credit assignment in networks of differentiable nodes, also known as the "reverse mode of automatic differentiation".

Seppo Linnainmaa

Linnainmaa was the first to describe efficient error backpropagation in arbitrary, discrete, possibly sparsely connected, NN-like networks. It is now the basis of widely used neural network software packages such as PyTorch and Google's TensorFlow.

Backpropagation is essentially an efficient way of implementing Leibniz's chain rule for deep networks. Gradient descent, proposed by Cauchy, then uses these gradients over many trials to gradually weaken some neural network connections and strengthen others.
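To illustrate the reverse mode of automatic differentiation, here is a tiny scalar autodiff sketch. The class `Var` and its methods are hypothetical, not PyTorch or TensorFlow APIs: the forward pass records each node's local derivatives, and one backward sweep in reverse topological order applies the chain rule to accumulate the output's gradient with respect to every node.

```python
import math

class Var:
    """Hypothetical graph node holding a value, its parents and its gradient."""
    def __init__(self, value, parents=()):
        self.value = value
        self.parents = parents  # pairs of (parent node, local derivative)
        self.grad = 0.0

    def __add__(self, other):
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        return Var(self.value * other.value, ((self, other.value), (other, self.value)))

    def tanh(self):
        t = math.tanh(self.value)
        return Var(t, ((self, 1.0 - t * t),))

    def backward(self):
        # Order nodes so that parents come before children, then sweep in reverse
        order, seen = [], set()
        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent, _ in node.parents:
                    visit(parent)
                order.append(node)
        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            for parent, local in node.parents:
                parent.grad += local * node.grad  # chain rule, accumulated per path

x, w, b = Var(0.5), Var(-2.0), Var(1.0)
y = (w * x + b).tanh()  # a single neuron
y.backward()
```

A single backward sweep yields the gradients for all inputs at once, which is why reverse mode is preferred when a scalar loss depends on many weights.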

In 1985, when compute was about 1,000 times cheaper than in 1970 and desktop computers had just become accessible in wealthier academic labs, David Rumelhart and others published an experimental analysis of the known method.

David Rumelhart

Through experiments, Rumelhart and others showed that backpropagation can yield useful internal representations in the hidden layers of neural networks. At least for supervised learning, backpropagation is generally more efficient than Amari's SGD-based deep learning described above.

Before 2010, many believed that training multilayer neural networks required unsupervised pre-training. In 2010, Schmidhuber's team, together with Dan Ciresan, showed that deep FNNs can be trained with plain backpropagation and need no unsupervised pre-training at all for important applications.

8. 1979: The first convolutional neural network

In 1979, Kunihiko Fukushima developed a neural network model for pattern recognition at STRL: the Neocognitron.

Fukushima Kunihiko

In today's terms, the Neocognitron is a convolutional neural network (CNN), one of the greatest inventions among the basic structures of deep neural networks and a core technology of current artificial intelligence.

The Neocognitron introduced by Dr. Fukushima is the first neural network to use convolution and downsampling, and is also the prototype of a convolutional neural network.
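The two operations can be sketched in a few lines of NumPy: one small kernel slid over the image with shared weights (convolution, implemented as cross-correlation as in most deep learning libraries), followed by 2x2 average downsampling. The kernel values and the averaging choice are illustrative assumptions; the Neocognitron's actual units differ in detail.

```python
import numpy as np

def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Slide one shared kernel over the image (no padding, stride 1)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def downsample_avg(fmap: np.ndarray, size: int = 2) -> np.ndarray:
    """Average non-overlapping size x size blocks (trailing rows/cols dropped)."""
    h, w = fmap.shape[0] // size, fmap.shape[1] // size
    blocks = fmap[:h * size, :w * size].reshape(h, size, w, size)
    return blocks.mean(axis=(1, 3))

image = np.zeros((7, 7))
image[:, 3] = 1.0  # a vertical line
vertical_line_detector = np.array([[-1.0, 2.0, -1.0]] * 3)  # illustrative kernel
features = downsample_avg(conv2d_valid(image, vertical_line_detector))
```

Weight sharing means the same nine kernel weights detect the line wherever it appears, and downsampling trades spatial precision for a smaller, more shift-tolerant map.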

The artificial multilayer neural network with learning capabilities designed by Kunihiko Fukushima imitates the brain's visual network. This insight has become a basis of modern artificial intelligence technology. Dr. Fukushima's work has led to a range of practical applications, from self-driving cars to facial recognition, from cancer detection to flood prediction, with more to come.

In 1987, Alex Waibel combined convolutions with weight sharing and backpropagation in the Time-Delay Neural Network (TDNN).

Since 1989, Yann LeCun's team has contributed to improving CNNs, especially for images.

Yann LeCun

In 2011, Schmidhuber's team greatly sped up the training of deep CNNs, making them far more popular in the machine learning community. The team's GPU-based CNN, DanNet, was deeper and faster than earlier CNNs, and that same year it became the first pure deep CNN to win a computer vision competition.

The residual neural network (ResNet), proposed by four researchers from Microsoft Research, won first place in the 2015 ImageNet Large Scale Visual Recognition Challenge.

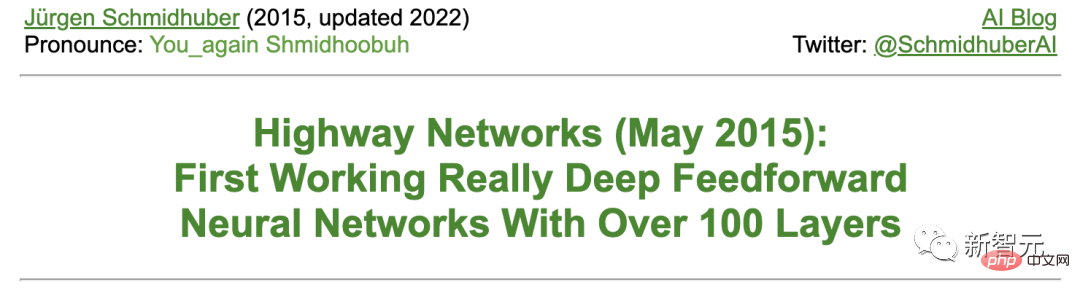

Schmidhuber says that ResNet is a version of the earlier Highway Network (Highway Net) developed by his team. Highway Net was the first truly effective deep feedforward neural network with hundreds of layers, whereas previous networks had at most a few dozen layers.

9. 1987-1990s: Graph Neural Networks and the Stochastic Delta Rule

Deep learning architectures that can manipulate structured data such as graphs were proposed by Pollack in 1987 and extended and improved by Sperduti, Goller and Küchler in the early 1990s. Today, graph neural networks are used in many applications.

Paul Werbos, R. J. Williams and others analyzed ways of implementing gradient descent in RNNs. Teuvo Kohonen's Self-Organizing Map also became popular.

Teuvo Kohonen

In 1990, Stephen Hanson introduced the stochastic delta rule, a stochastic method of training neural networks through backpropagation. Decades later, this method became popular under the moniker "dropout."
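Since the article links the stochastic delta rule to dropout, here is a sketch of the later, widely used "inverted" form of dropout rather than Hanson's rule itself. During training, each unit is zeroed with probability `p_drop` and the survivors are rescaled so the expected activation is unchanged; at test time the layer is the identity. The function name and the 0.5 rate are assumptions.

```python
import numpy as np

def dropout(activations: np.ndarray, p_drop: float, rng: np.random.Generator,
            training: bool = True) -> np.ndarray:
    """Inverted dropout (assumed modern form): randomly silence units in training only."""
    if not training or p_drop == 0.0:
        return activations
    keep = rng.random(activations.shape) >= p_drop
    return activations * keep / (1.0 - p_drop)

rng = np.random.default_rng(0)
h = np.ones(100_000)
h_train = dropout(h, p_drop=0.5, rng=rng)
h_test = dropout(h, p_drop=0.5, rng=rng, training=False)
```

Rescaling by `1 / (1 - p_drop)` during training is what allows the test-time network to be used unchanged.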

10. February 1990: Generative Adversarial Network/Curiosity

Generative adversarial networks (GANs) were first published in 1990 under the name "artificial curiosity".

Two adversarial NNs (a probabilistic generator and a predictor) try to maximize each other's loss in a minimax game, where:

- The generator (called the controller) produces probabilistic outputs (using stochastic units, as in the later StyleGAN).

- The predictor (called the world model) sees the controller's outputs and predicts how the environment will react to them. Using gradient descent, the predictor NN minimizes its error, while the generator NN tries to maximize this error - one network's loss is the other network's gain.

Four years before the 2014 GAN paper, Schmidhuber summarized the 1990 generative adversarial NNs in a well-known 2010 survey as follows: "A neural network as a predictive world model is used to maximize the controller's intrinsic reward, which is proportional to the model's prediction errors."

The GANs published later are an instance of this, in which the trials are very short and the environment simply returns 1 or 0 depending on whether the controller's (or generator's) output is in a given set.

The 1990 principles are widely used for exploration in reinforcement learning and for the synthesis of realistic images, although the latter field has recently been taken over by the Latent Diffusion of Rombach et al.

In 1991, Schmidhuber published another ML method based on two adversarial NNs, called predictability minimization, for creating disentangled representations of partially redundant data; it was applied to images in 1996.

11. April 1990: Generating subgoals / working on command

For a long time, most NNs were dedicated to simple pattern recognition rather than high-level reasoning.

In the early 1990s, however, the first exceptions emerged. This work injected concepts of traditional "symbolic" hierarchical AI into end-to-end differentiable "sub-symbolic" NNs.

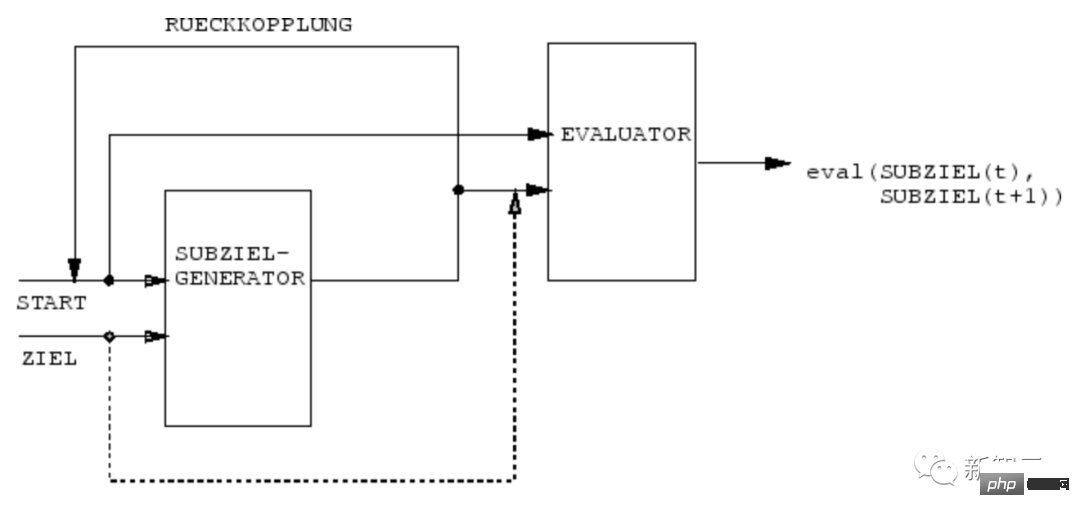

In 1990, NNs from Schmidhuber's team learned to generate hierarchical action plans for hierarchical reinforcement learning (HRL) with end-to-end differentiable NN-based subgoal generators.

An RL machine receives extra command inputs of the form (start, goal). An evaluator NN learns to predict the current reward/cost of getting from start to goal. An (R)NN-based subgoal generator also sees (start, goal) and uses (copies of) the evaluator NN to learn, by gradient descent, a sequence of cost-minimizing intermediate subgoals. The RL machine tries to use this sequence of subgoals to achieve the final goal.

The system learns action plans at multiple levels of abstraction and on multiple time scales, and in principle addresses what have recently been called "open problems".

12. March 1991: Transformer with linear self-attention

The Transformer with "linear self-attention" was first published in March 1991.

These so-called "Fast Weight Programmers" or "Fast Weight Controllers" separate storage and control like traditional computers, but in an end-to-end differentiable, adaptive, fully neural way.

In addition, today's Transformers make heavy use of unsupervised pre-training, a deep learning method Schmidhuber first published in 1990-1991.

13. April 1991: Deep learning through self-supervised pre-training
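The link to linear self-attention can be checked numerically. In the sketch below, the dimensions, the random keys/values/queries and all names are assumptions; in a real fast weight programmer a "slow" network would produce them. The fast weight matrix is programmed by adding outer products `v k^T`, and reading it with a query gives exactly the unnormalized causal linear attention output `sum_i v_i (k_i . q)`.

```python
import numpy as np

rng = np.random.default_rng(0)
d_key, d_value, steps = 4, 3, 5
# Illustrative assumption: random keys, values and queries stand in for a slow net
keys = rng.normal(size=(steps, d_key))
values = rng.normal(size=(steps, d_value))
queries = rng.normal(size=(steps, d_key))

# Fast weight programmer: each step writes an outer product into W_fast
W_fast = np.zeros((d_value, d_key))
fwp_out = []
for t in range(steps):
    W_fast += np.outer(values[t], keys[t])  # program the fast weights
    fwp_out.append(W_fast @ queries[t])     # retrieve with the current query
fwp_out = np.array(fwp_out)

# Causal, unnormalized linear self-attention over the same sequence
attn_out = np.array([
    sum(values[i] * (keys[i] @ queries[t]) for i in range(t + 1))
    for t in range(steps)
])
```

The fast-weight form needs only a fixed-size matrix per step, whereas the attention form revisits all previous keys and values.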

Today's most powerful NNs tend to be very deep, that is, they have many layers of neurons or many successive computational stages.

However, before the 1990s, gradient-based training did not work for deep NNs (only for shallow NNs).

Unlike feedforward NNs (FNNs), RNNs have feedback connections. This makes RNNs powerful, general-purpose, parallel-sequential computers that can process input sequences of arbitrary length (such as speech or video).

However, before the 1990s, RNNs failed to learn deep problems in practice.

To address this, Schmidhuber built a self-supervised RNN hierarchy, the Neural History Compressor, aiming at "universal deep learning".

April 1991: Distilling one NN into another NN

Using the NN distillation procedure Schmidhuber proposed in 1991, the hierarchical internal representations of the Neural History Compressor described above can be compressed into a single recurrent NN (RNN).

Here, the knowledge of a teacher NN is "distilled" into a student NN by training the student to imitate the teacher's behavior (while also retraining the student on previously learned skills so that they are not forgotten). The NN distillation method was republished many years later and is widely used today.
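A sketch of the distillation idea under stated assumptions: the "teacher" is a fixed, hypothetical nonlinear network producing class probabilities, and a smaller linear-softmax "student" is trained by gradient descent on unlabeled inputs to match the teacher's soft outputs, so no ground-truth labels are needed. Sizes, step size and step count are illustrative, and the retraining step that protects the student's old skills is not shown.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

rng = np.random.default_rng(0)
X = rng.normal(size=(500, 10))  # unlabeled transfer inputs

# Hypothetical teacher: a fixed nonlinear network with 3 output classes
A = rng.normal(size=(10, 32))
B = rng.normal(size=(32, 3))
teacher_probs = softmax(np.tanh(X @ A) @ B)

# Student: a smaller linear-softmax model trained to imitate the teacher
W = np.zeros((10, 3))

def kl_to_teacher(W):
    """Mean KL divergence from the teacher's to the student's output distribution."""
    student = softmax(X @ W)
    return np.mean(np.sum(teacher_probs * (np.log(teacher_probs) - np.log(student)), axis=1))

initial_kl = kl_to_teacher(W)
for step in range(500):
    student_probs = softmax(X @ W)
    # Gradient of the cross-entropy against the teacher's soft targets
    W -= 0.1 * X.T @ (student_probs - teacher_probs) / len(X)
final_kl = kl_to_teacher(W)
```

Matching the full output distribution, not just the teacher's top class, gives the student a richer training signal per example.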

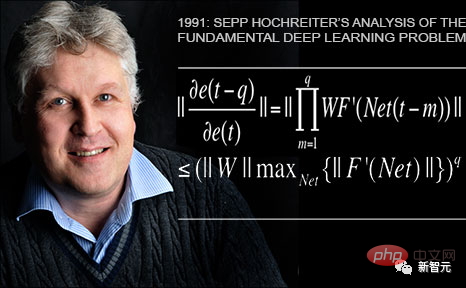

14. June 1991: The fundamental deep learning problem of vanishing gradients

Schmidhuber's first student, Sepp Hochreiter, identified and analyzed the fundamental deep learning problem in his 1991 diploma thesis.

Deep NNs suffer from the now-famous vanishing gradient problem: in typical deep or recurrent networks, the backpropagated error signal either shrinks rapidly or grows out of bounds. In both cases, learning fails.
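The effect can be reproduced with simple arithmetic. Assume (for illustration) a chain of identical sigmoid units with a shared weight `w` whose pre-activations sit at 0: the backpropagated error is multiplied at every step by `w * sigmoid'(0) = w * 0.25`. Since `sigmoid'(z) <= 0.25`, the factor is below 1 whenever `|w| < 4`, and the signal shrinks exponentially; large weights make it grow exponentially.

```python
import math

def sigmoid_prime(z: float) -> float:
    s = 1.0 / (1.0 + math.exp(-z))
    return s * (1.0 - s)

def backprop_scale(w: float, steps: int, z: float = 0.0) -> float:
    """Factor applied to an error signal after `steps` layers or time steps.

    Illustrative assumption: every unit shares weight w and pre-activation z.
    """
    factor = 1.0
    for _ in range(steps):
        factor *= w * sigmoid_prime(z)
    return factor

vanishing = backprop_scale(w=1.0, steps=50)  # equals 0.25 ** 50
exploding = backprop_scale(w=8.0, steps=50)  # equals 2.0 ** 50
```

After only 50 steps the signal has shrunk below 1e-30 in the first case and grown past 1e15 in the second, so neither can drive useful weight changes.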

15. June 1991: The basis of LSTM/Highway Net/ResNet

Long Short-Term Memory (LSTM) recurrent neural networks overcome the fundamental deep learning problem identified by Sepp Hochreiter in the 1991 thesis mentioned above.

After the peer-reviewed paper of 1997 (now the most cited NN article of the 20th century), Schmidhuber's students Felix Gers, Alex Graves and others further improved LSTM and its training procedures.

The LSTM variant published in 1999-2000, the "vanilla LSTM architecture" with forget gates, is still used in Google's TensorFlow today.

In 2005, Schmidhuber's team published the first LSTM trained with full backpropagation through time, as well as bidirectional LSTM (both widely used).

A landmark training method of 2006 was Connectionist Temporal Classification (CTC), which simultaneously aligns and recognizes sequences.

In 2007, Schmidhuber's team successfully applied CTC-trained LSTM to speech (also with hierarchical LSTM stacks), achieving superior end-to-end neural speech recognition results for the first time.
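One time step of a "vanilla" LSTM cell with input, forget and output gates can be sketched as below. The weight layout (one stacked matrix for all four blocks), the sizes and the absence of peephole connections are simplifying assumptions, not any particular library's implementation.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, W, b):
    """One LSTM time step; W maps [x, h_prev] to 4 stacked blocks (assumed layout)."""
    n = h_prev.shape[0]
    z = W @ np.concatenate([x, h_prev]) + b
    i = sigmoid(z[0:n])          # input gate
    f = sigmoid(z[n:2 * n])      # forget gate: how much old cell state to keep
    o = sigmoid(z[2 * n:3 * n])  # output gate
    g = np.tanh(z[3 * n:4 * n])  # candidate cell input
    c = f * c_prev + i * g       # additive cell update eases gradient flow
    h = o * np.tanh(c)
    return h, c

rng = np.random.default_rng(0)
n_in, n_hidden = 3, 5
W = rng.normal(scale=0.1, size=(4 * n_hidden, n_in + n_hidden))
b = np.zeros(4 * n_hidden)
h, c = np.zeros(n_hidden), np.zeros(n_hidden)
for x in rng.normal(size=(10, n_in)):  # run over a sequence of 10 inputs
    h, c = lstm_step(x, h, c, W, b)
```

The additive update of `c` is the key design choice: when the forget gate is near 1, the error signal can flow back through many steps without being repeatedly squashed.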
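CTC lets a network emit one label or a special "blank" at each time step without a pre-aligned target. The sketch below shows only the CTC collapsing rule used in greedy (best-path) decoding: merge repeated labels, then drop blanks. Training with the CTC loss, which sums over all alignments, is not shown, and the per-frame labels are made up.

```python
BLANK = "-"  # illustrative blank symbol

def ctc_collapse(frame_labels):
    """Map a per-frame label path to an output sequence (CTC's many-to-one map)."""
    output = []
    previous = None
    for label in frame_labels:
        if label != previous and label != BLANK:
            output.append(label)
        previous = label
    return "".join(output)

# Best path: the most probable label at each of 12 frames (made-up example)
frames = ["h", "h", "-", "e", "l", "l", "-", "l", "o", "o", "-", "-"]
decoded = ctc_collapse(frames)
```

The blank between the two runs of `l` is what allows a genuine double letter to survive the merging of repeats.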

In 2009, through the efforts of Alex Graves, CTC-trained LSTM became the first RNN to win international competitions, namely three ICDAR 2009 handwriting competitions (French, Persian, Arabic). This aroused great interest in industry, and LSTM was soon used for all kinds of sequential data such as speech and video.

In 2015, the CTC-LSTM combination greatly improved Google's speech recognition on Android smartphones. Until 2019, Google's on-device speech recognition was still based on LSTM.

1995: Neural Probabilistic Language Model

In 1995, Schmidhuber proposed an excellent neural probabilistic text model whose basic concepts were reused in 2003.

In 2001, Schmidhuber showed that LSTM can learn languages that traditional models such as HMM cannot learn.

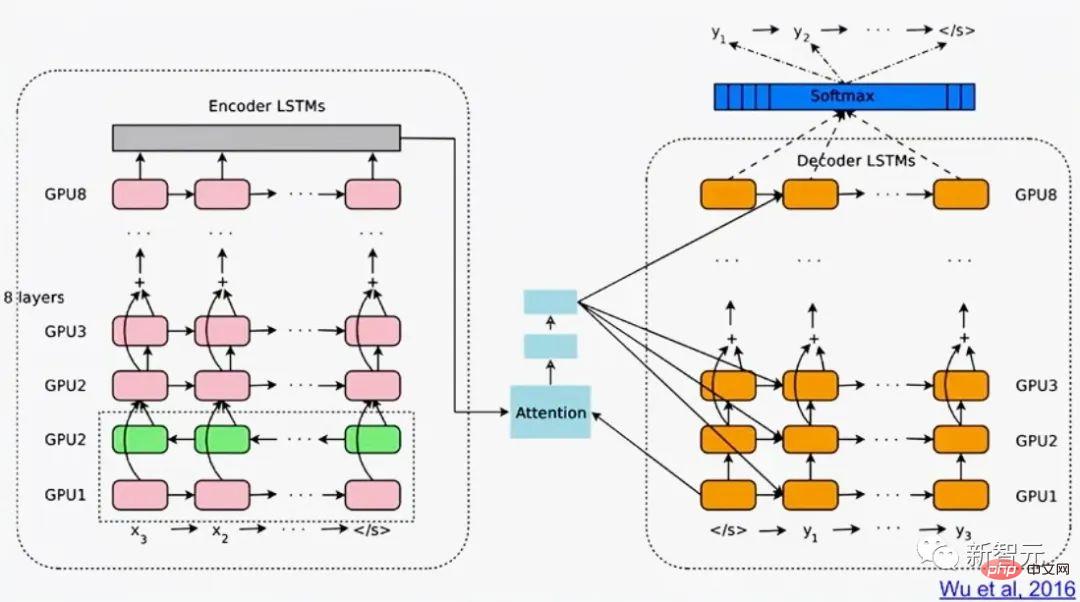

Google Translate in 2016 was based on two connected LSTMs (the white paper mentions LSTM more than 50 times), one for the incoming text and one for the outgoing translation.

That same year, more than a quarter of the enormous computing power used for inference in Google's data centers went to LSTM (and 5% to another popular deep learning technique, CNNs).

By 2017, LSTM was also powering Facebook's machine translation (more than 30 billion translations per week), Apple's QuickType on roughly 1 billion iPhones, the voice of Amazon's Alexa, Google's image caption generation, and automatic email replies.

Schmidhuber's LSTM is also widely used in healthcare and medical diagnosis: a simple Google Scholar search finds countless medical articles with "LSTM" in the title.

In May 2015, Schmidhuber's team proposed the Highway Network based on the LSTM principle, the first very deep FNN with hundreds of layers (previous NNs had at most a few dozen layers). Microsoft's ResNet (winner of the ImageNet 2015 competition) is a version of it.

The performance of early Highway Net on ImageNet was roughly the same as ResNet. Variants of Highway Net are also used for certain algorithmic tasks where pure residual layers do not perform well.
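The difference between the two layer types can be sketched as follows, with made-up sizes and weights. A highway layer mixes a transformed signal `H(x)` with the unchanged input through a learned transform gate `T(x)`; a residual layer keeps both paths always fully open, `H(x) + x`.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def highway_layer(x, W_h, b_h, W_t, b_t):
    """y = H(x) * T(x) + x * (1 - T(x)), with carry gate C = 1 - T."""
    H = np.tanh(W_h @ x + b_h)  # transformed signal
    T = sigmoid(W_t @ x + b_t)  # transform gate in (0, 1)
    return H * T + x * (1.0 - T)

def residual_layer(x, W_h, b_h):
    """y = H(x) + x: both paths always fully open."""
    return np.tanh(W_h @ x + b_h) + x

rng = np.random.default_rng(0)
d = 6  # illustrative layer width
x = rng.normal(size=d)
W_h, W_t = rng.normal(size=(d, d)), rng.normal(size=(d, d))
b_h = np.zeros(d)
# A strongly negative gate bias (illustrative) makes the layer carry x almost unchanged
b_t = np.full(d, -20.0)
y = highway_layer(x, W_h, b_h, W_t, b_t)
r = residual_layer(x, W_h, b_h)
```

Because a gated layer can default to passing its input through, stacks of hundreds of such layers can still propagate signals and gradients.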

The LSTM/Highway Net principle is the core of modern deep learning

The core of deep learning is NN depth.

In the 1990s, LSTM brought essentially unlimited depth to supervised recurrent NNs; in 2015, the LSTM-inspired Highway Net brought it to feedforward NNs.

LSTM is now the most cited NN of the 20th century, and ResNet, a version of Highway Net, is the most cited NN of the 21st century.

16. 1980 to present: NNs for learning to act without a teacher

NNs are also relevant to reinforcement learning (RL).

Some of these problems can be solved by non-neural techniques invented long before the 1980s, such as Monte Carlo (tree) search (MC), dynamic programming (DP), artificial evolution, alpha-beta pruning, control theory and system identification, stochastic gradient descent, and universal search techniques. But deep FNNs and RNNs can bring better results for certain types of RL tasks.

In general, a reinforcement learning agent must learn to interact with a dynamic, initially unknown, partially observable environment without a teacher, so as to maximize its expected cumulative reward signal. There may be arbitrary, a priori unknown delays between actions and their perceivable consequences.

When the environment has a Markovian interface, such that the RL agent's inputs convey all the information needed to determine the next optimal action, RL based on dynamic programming (DP), temporal differences (TD) or Monte Carlo tree search (MC) can be very successful.

For more complex cases without a Markovian interface, the agent must consider not only its current input but also the history of previous inputs. Here, combining RL algorithms with LSTM has become a standard solution, especially LSTM trained by policy gradients (PG).
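For the Markovian case, a tabular temporal-difference learner, TD(0), is enough. The sketch uses a hypothetical three-state chain (0 -> 1 -> terminal state 2, reward 1 on reaching the terminal state), a discount of 0.9 and a step size of 0.1; with these deterministic transitions, the values converge to V(1) = 1 and V(0) = 0.9.

```python
def td0_chain(episodes: int = 500, alpha: float = 0.1, gamma: float = 0.9) -> list:
    """Tabular TD(0) on a hypothetical 3-state chain; state 2 is terminal."""
    V = [0.0, 0.0, 0.0]  # V[2] belongs to the terminal state and stays 0
    transitions = {0: (1, 0.0), 1: (2, 1.0)}  # state -> (next state, reward)
    for _ in range(episodes):
        state = 0
        while state != 2:
            next_state, reward = transitions[state]
            # TD(0): move V(s) toward the bootstrapped target r + gamma * V(s')
            V[state] += alpha * (reward + gamma * V[next_state] - V[state])
            state = next_state
    return V

values = td0_chain()
```

The update needs only the current state, reward and next state, which is exactly why it relies on the Markovian interface described above.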

For example, in 2018, a PG-trained LSTM at the heart of OpenAI’s famous Dactyl learned to control a dexterous robotic hand without a teacher.

The same goes for video games.

In 2019, DeepMind (co-founded by a student from Schmidhuber's lab) beat professional players at the game StarCraft with AlphaStar, whose core is a deep LSTM trained by PG.

Likewise, an RL LSTM (accounting for 84% of the model's total parameters) was at the core of the famous OpenAI Five, which defeated professional human players at Dota 2.

The future of RL will be about learning, composing and planning with compact spatio-temporal abstractions of complex input streams, that is, about common-sense reasoning and learning to think.

In papers published in 1990-91, Schmidhuber proposed that a self-supervised neural history compressor can learn representations of concepts at multiple levels of abstraction and on multiple time scales, while end-to-end differentiable NN-based subgoal generators can learn hierarchical action plans through gradient descent.

More sophisticated methods for learning to think abstractly were published subsequently, in 1997 and in 2015-18.

17. It's the hardware, stupid!

Without the continuous improvement and accelerating development of computing hardware over the past millennia, deep learning algorithms could not have made major breakthroughs.

The first known geared computing device is the Antikythera mechanism from ancient Greece, over 2,000 years old. It is the oldest known complex scientific computer and the world's first analog computer.

Antikythera mechanism

The world's first practical programmable machine was built by the ancient Greek engineer Heron of Alexandria in the 1st century AD.

Machines in the 17th century became more flexible and could calculate answers based on input data.

The first mechanical calculator for simple arithmetic was invented and manufactured by Wilhelm Schickard in 1623.

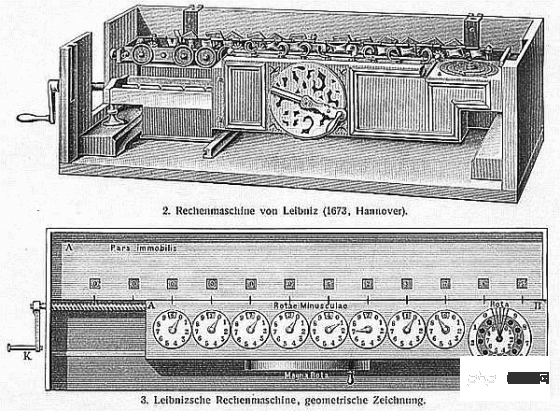

In 1673, Leibniz designed the first machine that could perform all four arithmetic operations and had a memory. He also described the principles of a binary computer controlled by a punched card and proposed the chain rule, which forms an important part of deep learning and modern artificial intelligence.

Leibniz Multiplier

Around 1800, Joseph Marie Jacquard and others built the first programmable loom in France: the Jacquard loom. This invention was instrumental in the later development of other programmable machines such as computers.

Jacquard Loom

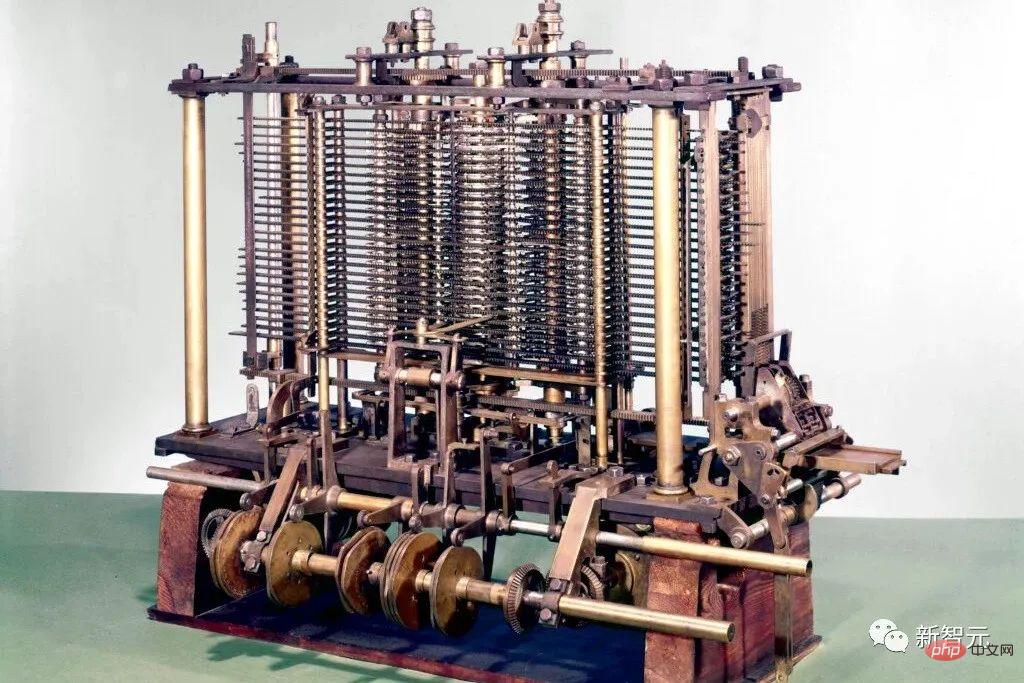

Such machines inspired Ada Lovelace and her mentor Charles Babbage, whose Difference Engine was a precursor of the modern electronic computer.

In 1843, Lovelace published the world's first computer algorithm.

Babbage’s Difference Engine

In 1914, the Spaniard Leonardo Torres y Quevedo became the first AI pioneer of the 20th century when he built the first working chess endgame player.

Between 1935 and 1941, Konrad Zuse invented the world's first operational programmable general-purpose computer: the Z3.

Konrad Zuse

Unlike Babbage's Analytical Engine, Zuse used Leibniz's principle of binary computation instead of traditional decimal computation, which greatly simplified the hardware.

In 1944, Howard Aiken led a team that built the Mark I (Harvard Mark I), the world's first large-scale automatic digital computer.

In 1948, Frederic Williams, Tom Kilburn and Geoff Tootill built the world's first electronic stored-program computer: the Small-Scale Experimental Machine (SSEM), also known as the "Manchester Baby".

"Manchester Baby" Replica

Since then, computers have become faster with the help of integrated circuits (ICs). In 1949, Werner Jacobi of Siemens filed a patent for an integrated-circuit semiconductor with several transistors on a common substrate.

In 1958, Jack Kilby demonstrated an integrated circuit with external wires. In 1959, Robert Noyce proposed the monolithic integrated circuit. Since the 1970s, graphics processing units (GPUs) have been used to accelerate computing through parallel processing. Today, a computer's GPU contains billions of transistors.

Where are the physical limits?

According to the Bremermann limit proposed by Hans Joachim Bremermann, a computer with a mass of 1 kg and a volume of 1 liter can execute at most 10^51 operations per second on at most 10^32 bits.

Hans Joachim Bremermann

However, since the mass of the solar system is only about 2x10^30 kg, the exponential growth of computing power is bound to break down within a few centuries, because the speed of light will severely limit the acquisition of additional mass in the form of other solar systems.

Therefore, the constraints of physics require that efficient future computing hardware be brain-like, with many processors packed compactly in three-dimensional space to minimize total connection cost: its basic architecture is essentially a deep, sparsely connected, three-dimensional RNN.

Schmidhuber speculates that deep learning methods for such RNNs will become more important.

18. Artificial Intelligence Theory since 1931

The core of modern artificial intelligence and deep learning is mostly based on mathematics from recent centuries: calculus, linear algebra and statistics.

In the early 1930s, Gödel founded modern theoretical computer science. He introduced a universal coding language based on integers that allows the operations of any digital computer to be formalized in axiomatic form.

Gödel also famously constructed self-referential formal statements that imply their own undecidability, given a computational theorem prover that systematically enumerates all possible theorems from an enumerable set of axioms. In this way he identified fundamental limits of algorithmic theorem proving, of computing, and of any kind of computation-based artificial intelligence.

In addition, Gödel identified what became the most famous open question in computer science, "P=NP?", in his famous 1956 letter to John von Neumann.

In 1935, Alonzo Church derived a corollary of Gödel's result by showing that Hilbert and Ackermann's decision problem (Entscheidungsproblem) has no general solution. To do so, he used his own alternative universal coding language, the untyped lambda calculus, which later formed the basis of the highly influential programming language LISP.

In 1936, Alan Turing introduced yet another universal model, the Turing machine, and rederived the above results. In the same year, Emil Post published another independent universal model of computing.
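The flavor of the untyped lambda calculus can be imitated with Python lambdas. The sketch encodes natural numbers as Church numerals (the number n means "apply f n times") and defines successor, addition and multiplication purely as functions; `to_int` is a helper added only to inspect the results.

```python
# Church numeral n: a function that applies f to x exactly n times
zero = lambda f: lambda x: x
succ = lambda n: lambda f: lambda x: f(n(f)(x))
add = lambda m: lambda n: lambda f: lambda x: m(f)(n(f)(x))
mul = lambda m: lambda n: lambda f: m(n(f))

def to_int(n) -> int:
    """Decode a Church numeral by counting applications of +1 to 0 (helper only)."""
    return n(lambda k: k + 1)(0)

one = succ(zero)
two = succ(one)
three = add(one)(two)
six = mul(two)(three)
```

Nothing here uses built-in numbers until decoding: arithmetic emerges from function application alone, which is the point of a universal coding language built from functions.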
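A Turing machine is small enough to simulate directly. The transition table below is an illustrative example, not from the article: it increments a binary number by moving the head right to the end of the input, then moving left and turning trailing 1s into 0s until it can write a 1.

```python
def run_turing_machine(tape: str, rules: dict, state: str = "right", blank: str = "_") -> str:
    """Simulate a one-tape Turing machine until it reaches the 'halt' state."""
    cells = dict(enumerate(tape))
    head = 0
    while state != "halt":
        symbol = cells.get(head, blank)
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += 1 if move == "R" else -1
    # Read back the tape in position order, trimming blanks at the ends
    return "".join(cells[i] for i in sorted(cells)).strip(blank)

# Illustrative table: (state, read symbol) -> (symbol to write, head move, next state)
increment = {
    ("right", "0"): ("0", "R", "right"),
    ("right", "1"): ("1", "R", "right"),
    ("right", "_"): ("_", "L", "carry"),
    ("carry", "1"): ("0", "L", "carry"),
    ("carry", "0"): ("1", "L", "halt"),
    ("carry", "_"): ("1", "L", "halt"),
}
result = run_turing_machine("1011", increment)
```

Using a dictionary for the tape lets the head move left of the starting cell, which the carry in `111 + 1` requires.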

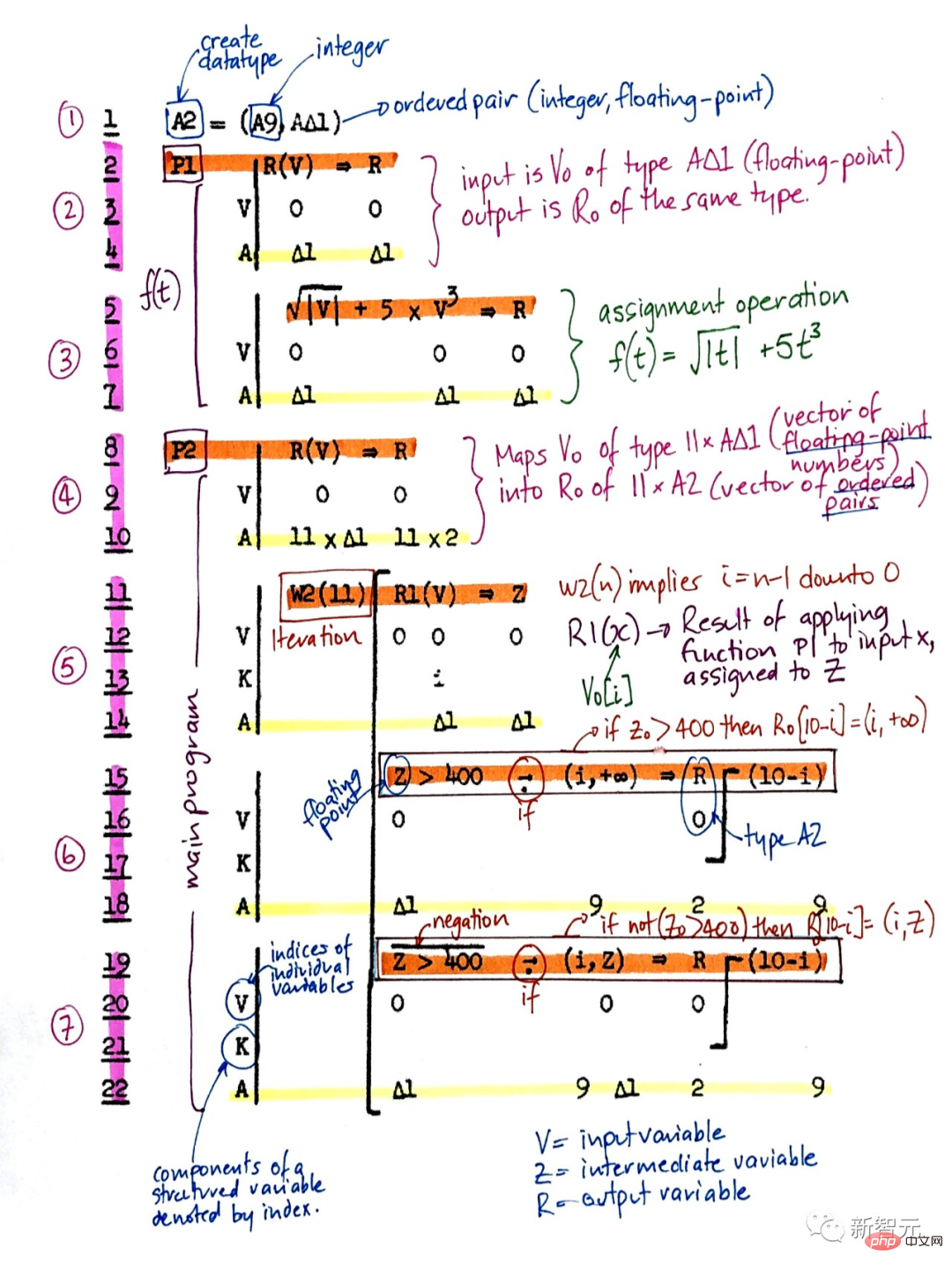

Konrad Zuse not only created the world's first usable programmable general-purpose computer, but also designed the first high-level programming language, Plankalkül. He applied it to chess in 1945 and to theorem proving in 1948.

Plankalkül

Much of early AI from the 1940s to the 1970s was actually about theorem proving and Gödel-style deduction through expert systems and logic programming.

In 1964, Ray Solomonoff combined Bayesian (actually Laplace) probabilistic reasoning and theoretical computer science to arrive at a mathematically optimal (but computationally infeasible) learning method to predict future data from past observations.

He and, independently, Andrej Kolmogorov founded the theory of Kolmogorov complexity, or Algorithmic Information Theory (AIT), which formalizes Occam's razor through the notion of the shortest program that computes given data, going beyond traditional information theory.

Kolmogorov complexity

The self-referential Gödel Machine is optimal in a more general sense that is not limited to asymptotic optimality.

Nevertheless, such mathematically optimal artificial intelligence is not yet practically feasible, for various reasons. Instead, practical modern artificial intelligence is based on suboptimal, limited, but not yet fully understood techniques, with NNs and deep learning at the center.
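Kolmogorov complexity itself is uncomputable, but any lossless compressor yields an upper bound on it, up to an additive constant for the decompressor. The sketch uses Python's `zlib` as such a stand-in, an assumption for illustration: a highly regular string has a short description, while pseudo-random bytes barely compress.

```python
import os
import zlib

def compressed_length(data: bytes) -> int:
    """Length of a zlib encoding: a crude upper-bound proxy for description length."""
    return len(zlib.compress(data, 9))

regular = b"01" * 5000           # a tiny program ("repeat '01' 5000 times") generates it
random_like = os.urandom(10000)  # no short description is expected
print(compressed_length(regular), compressed_length(random_like))
```

Both inputs are 10,000 bytes long, yet their compressed lengths differ by orders of magnitude, mirroring the gap between their shortest descriptions.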

But who knows what the history of artificial intelligence will be like in 20 years?

The above is the detailed content of 300 years of artificial intelligence! The father of LSTM writes a 10,000-word long article: Detailed explanation of the development history of modern AI and deep learning. For more information, please follow other related articles on the PHP Chinese website!
