The 3D reconstruction of Jimmy Lin's face can be achieved with two A100s and a 2D CNN!

Three-dimensional reconstruction (3D Reconstruction) technology has always been a key research area in the field of computer graphics and computer vision.

Simply put, 3D reconstruction recovers the 3D structure of a scene from 2D images.

It is said that after Jimmy Lin was in a car accident, his facial reconstruction plan used three-dimensional reconstruction.

Different technical routes for three-dimensional reconstruction are expected to be integrated

In fact, 3D reconstruction technology is already widely used in games, movies, surveying, positioning, navigation, autonomous driving, VR/AR, industrial manufacturing, and consumer goods.

With advances in GPUs and distributed computing, and with the gradual maturing of depth-camera hardware such as Microsoft's Kinect, Asus' XTion and Intel's RealSense, the cost of 3D reconstruction is trending downward.

Operationally speaking, the 3D reconstruction process can be roughly divided into five steps.

The first step is to obtain the image.

Since 3D reconstruction is the inverse of the camera's imaging process, the first step is to capture 2D images of the 3D object with a camera.

This step cannot be ignored, because lighting conditions, camera geometric characteristics, etc. have a great impact on subsequent image processing.

The second step is camera calibration.

The goal of this step is to relate the images captured by the camera to the objects in 3D space.

It is usually assumed that there is a linear relationship between a point in the captured image and the corresponding point on the object in 3D space. Solving for the parameters of this linear relationship is called camera calibration.
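As a rough illustration of that relationship, the standard pinhole model writes the mapping in homogeneous coordinates as $s\,[u, v, 1]^\top = K\,[R \mid t]\,[X, Y, Z, 1]^\top$, where calibration estimates the intrinsics $K$ and the pose $[R \mid t]$. The sketch below covers only the intrinsic part and assumes the point is already expressed in the camera frame; the function name and the numeric intrinsics are hypothetical, chosen purely for illustration.

```python
def project_point(point_3d, fx, fy, cx, cy):
    """Project a 3D point (camera coordinates) to pixel coordinates.

    fx, fy are focal lengths in pixels; cx, cy is the principal point.
    These are the intrinsic parameters that calibration would estimate.
    """
    x, y, z = point_3d
    if z <= 0:
        raise ValueError("point must lie in front of the camera (z > 0)")
    u = fx * x / z + cx
    v = fy * y / z + cy
    return u, v


# Hypothetical intrinsics for a 640x480 camera (assumed values, not from the article).
u, v = project_point((0.5, -0.25, 2.0), fx=500.0, fy=500.0, cx=320.0, cy=240.0)
print(u, v)
```

In practice the parameters are fitted from many observations of a known target rather than assumed, and lens distortion is usually modelled as well.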

The third step is feature extraction.

Features mainly include feature points, feature lines and regions.

In most cases, feature points are used as matching primitives. The form in which feature points are extracted is closely related to the matching strategy used.

Therefore, when extracting feature points, you need to first determine which matching method to use.

The fourth step is stereo matching.

Stereo matching establishes correspondences between an image pair based on the extracted features. That is, the image points that the same physical point produces in two different images are put into one-to-one correspondence.
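One simple way to obtain such one-to-one correspondences from feature descriptors is a mutual nearest-neighbour check: a pair is kept only if each descriptor is the other's closest match. The sketch below is a minimal illustration under that assumption; the helper names and the toy two-dimensional descriptors are hypothetical, and real systems use much higher-dimensional descriptors and additional geometric checks.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two descriptors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(query, candidates):
    """Index of the candidate descriptor closest to the query."""
    return min(range(len(candidates)),
               key=lambda j: squared_distance(query, candidates[j]))


def mutual_matches(desc_left, desc_right):
    """Return (i, j) index pairs that are each other's nearest neighbour."""
    matches = []
    for i, descriptor in enumerate(desc_left):
        j = nearest(descriptor, desc_right)
        if nearest(desc_right[j], desc_left) == i:
            matches.append((i, j))
    return matches


# Toy descriptors (assumed values): the third left feature has no true partner.
left = [(1.0, 0.0), (0.0, 1.0), (5.0, 4.0)]
right = [(0.1, 0.9), (0.9, 0.1)]
print(mutual_matches(left, right))
```

The third left descriptor is rejected because its nearest right descriptor prefers a different left feature, which is how the check enforces one-to-one matching.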

The fifth step is three-dimensional reconstruction.

With relatively accurate matching results, combined with the internal and external parameters of the camera calibration, the three-dimensional scene information can be restored.
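For the special case of a rectified stereo pair (two identical cameras displaced horizontally by a known baseline), this recovery step reduces to the well-known relation $Z = fB/d$, where $d$ is the horizontal disparity between matched pixels. The sketch below assumes that setup; the function name and all numeric values are hypothetical.

```python
def triangulate_rectified(u_left, v_left, u_right, focal_px, baseline_m, cx, cy):
    """Recover a 3D point (left-camera frame) from a rectified stereo match.

    focal_px, cx, cy are intrinsics in pixels; baseline_m is the distance
    between the two camera centres in metres (the extrinsic part).
    """
    disparity = u_left - u_right
    if disparity <= 0:
        raise ValueError("a valid match needs positive disparity")
    z = focal_px * baseline_m / disparity   # depth from disparity
    x = (u_left - cx) * z / focal_px        # back-project through the pinhole
    y = (v_left - cy) * z / focal_px
    return x, y, z


# Assumed example values: a 25-pixel disparity with a 10 cm baseline.
point = triangulate_rectified(445.0, 177.5, 420.0,
                              focal_px=500.0, baseline_m=0.1,
                              cx=320.0, cy=240.0)
print(point)
```

Because depth is inversely proportional to disparity, a one-pixel matching error matters far more for distant points than for near ones, which is one reason the matching step has to be accurate.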

These five steps are interlocking. Only when each link is done with high precision and small errors can a relatively accurate stereoscopic vision system be designed.

In terms of algorithms, 3D reconstruction can be roughly divided into two categories. One is the 3D reconstruction algorithm based on traditional multi-view geometry.

The other is a three-dimensional reconstruction algorithm based on deep learning.

Currently, due to the huge advantages of CNN in image feature matching, more and more researchers are beginning to turn their attention to three-dimensional reconstruction based on deep learning.

However, this method is mostly a supervised learning method and is highly dependent on the data set.

The collection and labeling of data sets have always been a source of problems for supervised learning. Therefore, three-dimensional reconstruction based on deep learning is mostly studied in the direction of reconstruction of smaller objects.

In addition, the three-dimensional reconstruction based on deep learning has high fidelity and has better performance in terms of accuracy.

But training the model takes a lot of time, and the 3D convolutional layers used for 3D reconstruction are very expensive.

Therefore, some researchers began to re-examine the traditional three-dimensional reconstruction method.

Although the traditional three-dimensional reconstruction method has shortcomings in performance, the technology is relatively mature.

Combining the two approaches in some way may therefore produce better results.

3D reconstruction without 3D convolutional layers

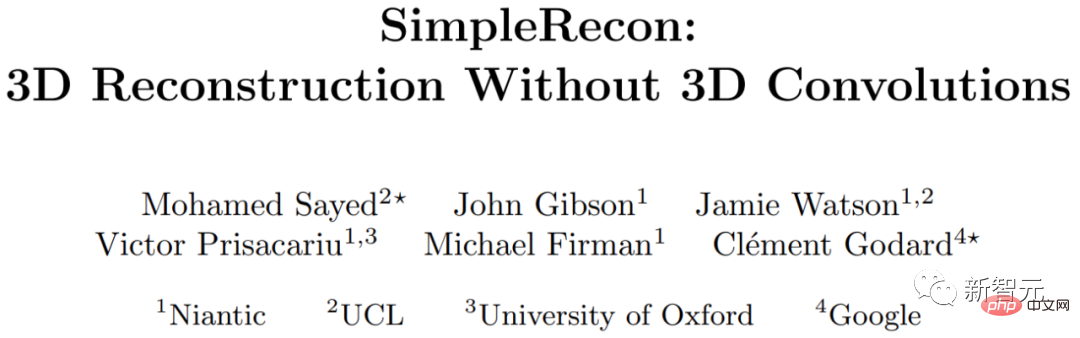

Researchers from institutions including the University of London, the University of Oxford, Google, and Niantic (an AR-focused unicorn company spun out of Google) have explored a 3D reconstruction method that does not require 3D convolution.

They propose a simple state-of-the-art multi-view depth estimator.

This multi-view depth estimator has two breakthroughs.

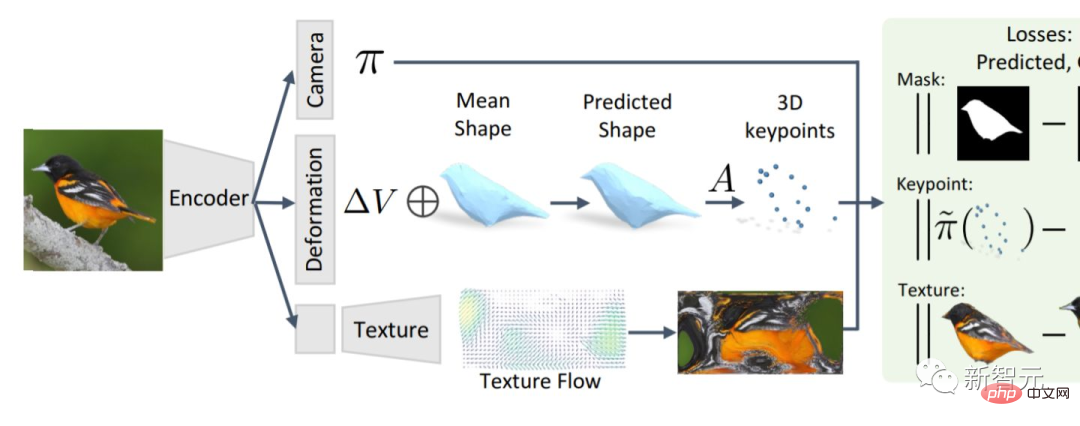

The first is a carefully designed 2D CNN that exploits strong image priors, together with a plane-sweep feature volume and geometric losses;

The second is the ability to integrate keyframes and geometric metadata into cost volumes, enabling informed depth plane scoring.

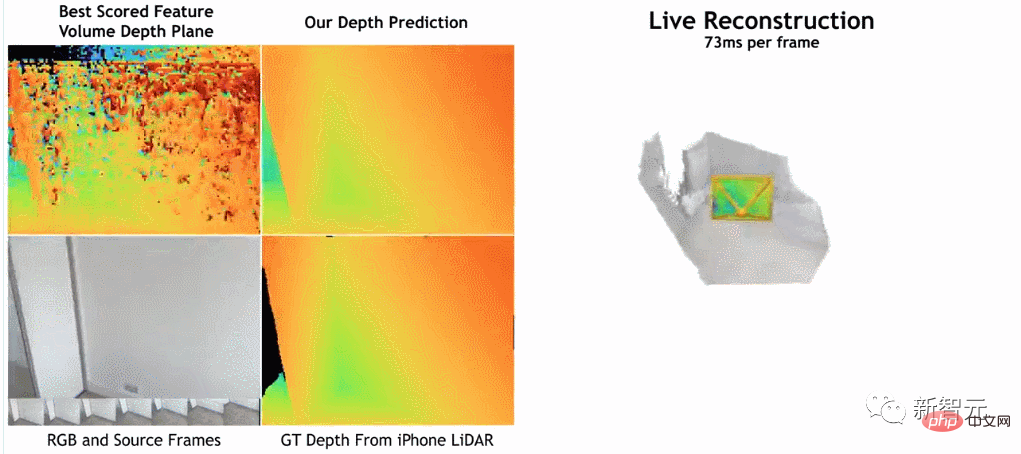

According to the researchers, their method has a clear lead over current state-of-the-art methods in depth estimation, and is close to or better than them for 3D reconstruction on ScanNet and 7-Scenes, while still allowing online, real-time, low-memory reconstruction.

Moreover, the reconstruction speed is very fast, only taking about 73ms per frame.

The researchers believe this makes accurate reconstruction possible through fast depth fusion.

According to the researchers, their method uses an image encoder to extract matching features from a reference image and the source images. These features are fed into the cost volume, and a 2D convolutional encoder/decoder network then processes the cost volume's output.
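To give a feel for what a cost volume holds, here is a heavily simplified sketch, not the paper's implementation. It assumes a single rectified image pair with the reference as the left camera, works on one scanline, and scores each depth hypothesis by the dot product of per-pixel features. The paper instead uses general camera poses, keyframe and geometric metadata, and a learned 2D network in place of the final argmax. All names and values below are hypothetical.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def plane_sweep_cost_volume(ref_feats, src_feats, depths, focal_px, baseline_m):
    """Score every depth hypothesis for every pixel on one rectified scanline.

    volume[k][u] is the similarity between reference pixel u and the source
    pixel it would map to at depth depths[k] (0.0 if that falls off the image).
    """
    width = len(ref_feats)
    volume = []
    for depth in depths:
        shift = round(focal_px * baseline_m / depth)  # disparity in whole pixels
        row = []
        for u in range(width):
            u_src = u - shift
            inside = 0 <= u_src < width
            row.append(dot(ref_feats[u], src_feats[u_src]) if inside else 0.0)
        volume.append(row)
    return volume


def best_depth_per_pixel(volume, depths):
    """Pick the highest-scoring depth hypothesis for each pixel (plain argmax)."""
    width = len(volume[0])
    return [depths[max(range(len(depths)), key=lambda k: volume[k][u])]
            for u in range(width)]


def one_hot(i, n):
    return [1.0 if j == i else 0.0 for j in range(n)]


# Toy features (assumed): the reference row is the source row shifted by 2 pixels.
width = 6
src = [one_hot(u, width) for u in range(width)]
ref = [[0.0] * width, [0.0] * width] + src[:width - 2]
depths = [50.0, 25.0, 12.5]  # correspond to disparities of 1, 2 and 4 pixels here
volume = plane_sweep_cost_volume(ref, src, depths, focal_px=100.0, baseline_m=0.5)
depth_row = best_depth_per_pixel(volume, depths)
print(depth_row)
```

In this toy case every pixel with a valid match selects the 25.0 hypothesis, the one whose disparity equals the 2-pixel shift. The first two pixels have no matching evidence at all, so their argmax is arbitrary, which hints at why a learned network with image priors is useful for interpreting the volume.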

The work was implemented in PyTorch, with ResNet18 used for matching-feature extraction. It ran on two 40GB A100 GPUs, and the entire job was completed in 36 hours.

In addition, although the model does not use 3D convolutional layers, it outperforms the baseline models on depth prediction metrics.

This shows that a carefully designed and trained 2D network is sufficient for high-quality depth estimation.

Interested readers can read the original text of the paper:

https://nianticlabs.github.io/simplerecon/resources/SimpleRecon.pdf

Be warned, however, that the paper assumes specialist background, and some details are easy to miss.

So it is worth looking at what readers abroad noticed in the paper.

A netizen named "stickshiftplease" said, "Although the inference time on the A100 is about 70 milliseconds, this can be shortened through various techniques, and the memory requirement does not have to be 40GB: the smallest model runs in 2.6GB of memory."

Another netizen named "IrreverentHippie" pointed out, "Please note that this research is still based on sampling from a LiDAR depth sensor. That is why the method achieves such good quality and accuracy."

Another netizen named "nickthorpie" made a longer comment. He said, "The advantages and disadvantages of ToF cameras are well documented. ToF solves various problems that plague raw image processing. Its two major issues are scalability and detail. ToF always has difficulty identifying small details such as table edges or thin poles, which is crucial for autonomous or semi-autonomous applications.

In addition, since ToF is an active sensor, picture quality degrades quickly when multiple sensors are used together, such as at a crowded intersection or in a self-built warehouse.

Obviously, the more data you collect on a scene, the more accurate the description you can create. Many researchers prefer to study raw image data because it is more flexible."

The above is the detailed content of The 3D reconstruction of Jimmy Lin's face can be achieved with two A100s and a 2D CNN!. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1386

1386

52

52

Why is Gaussian Splatting so popular in autonomous driving that NeRF is starting to be abandoned?

Jan 17, 2024 pm 02:57 PM

Why is Gaussian Splatting so popular in autonomous driving that NeRF is starting to be abandoned?

Jan 17, 2024 pm 02:57 PM

Written above & the author’s personal understanding Three-dimensional Gaussiansplatting (3DGS) is a transformative technology that has emerged in the fields of explicit radiation fields and computer graphics in recent years. This innovative method is characterized by the use of millions of 3D Gaussians, which is very different from the neural radiation field (NeRF) method, which mainly uses an implicit coordinate-based model to map spatial coordinates to pixel values. With its explicit scene representation and differentiable rendering algorithms, 3DGS not only guarantees real-time rendering capabilities, but also introduces an unprecedented level of control and scene editing. This positions 3DGS as a potential game-changer for next-generation 3D reconstruction and representation. To this end, we provide a systematic overview of the latest developments and concerns in the field of 3DGS for the first time.

Choose camera or lidar? A recent review on achieving robust 3D object detection

Jan 26, 2024 am 11:18 AM

Choose camera or lidar? A recent review on achieving robust 3D object detection

Jan 26, 2024 am 11:18 AM

0.Written in front&& Personal understanding that autonomous driving systems rely on advanced perception, decision-making and control technologies, by using various sensors (such as cameras, lidar, radar, etc.) to perceive the surrounding environment, and using algorithms and models for real-time analysis and decision-making. This enables vehicles to recognize road signs, detect and track other vehicles, predict pedestrian behavior, etc., thereby safely operating and adapting to complex traffic environments. This technology is currently attracting widespread attention and is considered an important development area in the future of transportation. one. But what makes autonomous driving difficult is figuring out how to make the car understand what's going on around it. This requires that the three-dimensional object detection algorithm in the autonomous driving system can accurately perceive and describe objects in the surrounding environment, including their locations,

The facial features are flying around, opening the mouth, staring, and raising eyebrows, AI can imitate them perfectly, making it impossible to prevent video scams

Dec 14, 2023 pm 11:30 PM

The facial features are flying around, opening the mouth, staring, and raising eyebrows, AI can imitate them perfectly, making it impossible to prevent video scams

Dec 14, 2023 pm 11:30 PM

With such a powerful AI imitation ability, it is really impossible to prevent it. It is completely impossible to prevent it. Has the development of AI reached this level now? Your front foot makes your facial features fly, and on your back foot, the exact same expression is reproduced. Staring, raising eyebrows, pouting, no matter how exaggerated the expression is, it is all imitated perfectly. Increase the difficulty, raise the eyebrows higher, open the eyes wider, and even the mouth shape is crooked, and the virtual character avatar can perfectly reproduce the expression. When you adjust the parameters on the left, the virtual avatar on the right will also change its movements accordingly to give a close-up of the mouth and eyes. The imitation cannot be said to be exactly the same, but the expression is exactly the same (far right). The research comes from institutions such as the Technical University of Munich, which proposes GaussianAvatars, which

CLIP-BEVFormer: Explicitly supervise the BEVFormer structure to improve long-tail detection performance

Mar 26, 2024 pm 12:41 PM

CLIP-BEVFormer: Explicitly supervise the BEVFormer structure to improve long-tail detection performance

Mar 26, 2024 pm 12:41 PM

Written above & the author’s personal understanding: At present, in the entire autonomous driving system, the perception module plays a vital role. The autonomous vehicle driving on the road can only obtain accurate perception results through the perception module. The downstream regulation and control module in the autonomous driving system makes timely and correct judgments and behavioral decisions. Currently, cars with autonomous driving functions are usually equipped with a variety of data information sensors including surround-view camera sensors, lidar sensors, and millimeter-wave radar sensors to collect information in different modalities to achieve accurate perception tasks. The BEV perception algorithm based on pure vision is favored by the industry because of its low hardware cost and easy deployment, and its output results can be easily applied to various downstream tasks.

The latest from Oxford University! Mickey: 2D image matching in 3D SOTA! (CVPR\'24)

Apr 23, 2024 pm 01:20 PM

The latest from Oxford University! Mickey: 2D image matching in 3D SOTA! (CVPR\'24)

Apr 23, 2024 pm 01:20 PM

Project link written in front: https://nianticlabs.github.io/mickey/ Given two pictures, the camera pose between them can be estimated by establishing the correspondence between the pictures. Typically, these correspondences are 2D to 2D, and our estimated poses are scale-indeterminate. Some applications, such as instant augmented reality anytime, anywhere, require pose estimation of scale metrics, so they rely on external depth estimators to recover scale. This paper proposes MicKey, a keypoint matching process capable of predicting metric correspondences in 3D camera space. By learning 3D coordinate matching across images, we are able to infer metric relative

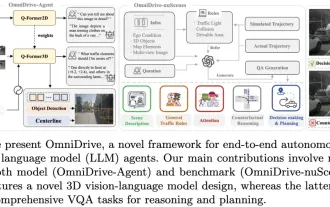

LLM is all done! OmniDrive: Integrating 3D perception and reasoning planning (NVIDIA's latest)

May 09, 2024 pm 04:55 PM

LLM is all done! OmniDrive: Integrating 3D perception and reasoning planning (NVIDIA's latest)

May 09, 2024 pm 04:55 PM

Written above & the author’s personal understanding: This paper is dedicated to solving the key challenges of current multi-modal large language models (MLLMs) in autonomous driving applications, that is, the problem of extending MLLMs from 2D understanding to 3D space. This expansion is particularly important as autonomous vehicles (AVs) need to make accurate decisions about 3D environments. 3D spatial understanding is critical for AVs because it directly impacts the vehicle’s ability to make informed decisions, predict future states, and interact safely with the environment. Current multi-modal large language models (such as LLaVA-1.5) can often only handle lower resolution image inputs (e.g.) due to resolution limitations of the visual encoder, limitations of LLM sequence length. However, autonomous driving applications require

MotionLM: Language modeling technology for multi-agent motion prediction

Oct 13, 2023 pm 12:09 PM

MotionLM: Language modeling technology for multi-agent motion prediction

Oct 13, 2023 pm 12:09 PM

This article is reprinted with permission from the Autonomous Driving Heart public account. Please contact the source for reprinting. Original title: MotionLM: Multi-Agent Motion Forecasting as Language Modeling Paper link: https://arxiv.org/pdf/2309.16534.pdf Author affiliation: Waymo Conference: ICCV2023 Paper idea: For autonomous vehicle safety planning, reliably predict the future behavior of road agents is crucial. This study represents continuous trajectories as sequences of discrete motion tokens and treats multi-agent motion prediction as a language modeling task. The model we propose, MotionLM, has the following advantages: First

Learning cross-modal occupancy knowledge: RadOcc using rendering-assisted distillation technology

Jan 25, 2024 am 11:36 AM

Learning cross-modal occupancy knowledge: RadOcc using rendering-assisted distillation technology

Jan 25, 2024 am 11:36 AM

Original title: Radocc: LearningCross-ModalityOccupancyKnowledgethroughRenderingAssistedDistillation Paper link: https://arxiv.org/pdf/2312.11829.pdf Author unit: FNii, CUHK-ShenzhenSSE, CUHK-Shenzhen Huawei Noah's Ark Laboratory Conference: AAAI2024 Paper Idea: 3D Occupancy Prediction is an emerging task that aims to estimate the occupancy state and semantics of 3D scenes using multi-view images. However, due to the lack of geometric priors, image-based scenarios