PyTorch 2.0 official version released! One line of code speeds up 2 times, 100% backwards compatible


The official version of PyTorch 2.0 is finally here!

Last December, the PyTorch Foundation released the first preview version of PyTorch 2.0 at the PyTorch Conference 2022.

Compared with the earlier 1.x releases, 2.0 brings disruptive changes. The biggest improvement in PyTorch 2.0 is torch.compile.

The new compiler generates code on the fly that runs much faster than the default "eager mode" of PyTorch 1.x, further improving PyTorch performance.

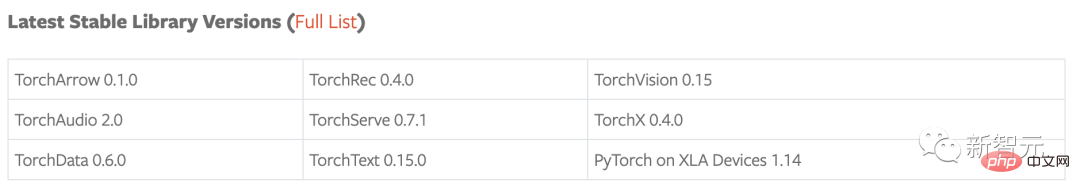

In addition to 2.0, a series of beta updates for the PyTorch domain libraries have been released, including the in-tree libraries as well as standalone libraries such as TorchAudio, TorchVision and TorchText. An update to TorchX is also being released as it moves to community-supported mode.

- torch.compile is the main API of PyTorch 2.0. It wraps a model and returns a compiled model. torch.compile is a fully additive (and optional) feature, so version 2.0 is 100% backwards compatible.

- As the underlying technology of torch.compile, TorchInductor relies on the OpenAI Triton deep learning compiler on Nvidia and AMD GPUs to generate high-performance code and hide low-level hardware details. The performance of kernel implementations generated by OpenAI Triton is comparable to handwritten kernels and specialized CUDA libraries such as cuBLAS.

- Accelerated Transformers introduces high-performance support for training and inference, using a custom kernel architecture to implement scaled dot product attention (SDPA). The API is integrated with torch.compile(), and model developers can also use the scaled dot product attention kernels directly by calling the new scaled_dot_product_attention() operator.

- The Metal Performance Shaders (MPS) backend provides GPU-accelerated PyTorch training on the Mac platform and adds support for the top 60 most commonly used operations, covering more than 300 operators.

- Amazon AWS optimizes PyTorch CPU inference on C7g instances based on AWS Graviton3. PyTorch 2.0 improves inference performance on Graviton compared to previous versions, including improvements for ResNet-50 and BERT.

- New prototype features and technologies across TensorParallel, DTensor, 2D parallel, TorchDynamo, AOTAutograd, PrimTorch and TorchInductor.

- TorchDynamo

- AOTAutograd

- PrimTorch

- TorchInductor

TorchInductor is a deep learning compiler that can generate fast code for multiple accelerators and backends. For NVIDIA GPUs, it uses OpenAI Triton as a key building block.

The PyTorch Foundation said that the launch of 2.0 will promote "the return from C to Python", adding that this is a substantial new direction for PyTorch.

"We knew the performance limits of "eager execution" from day one. In July 2017, we started our first research project, developing a compiler for PyTorch. The compiler needs to make PyTorch programs run quickly, but not at the expense of the PyTorch experience, while retaining flexibility and ease of use, so that researchers can use dynamic models and programs at different stages of exploration."

Of course, the non-compiled "eager mode", which executes operations immediately as the Python code runs, is still available in 2.0. Developers can use torch.compile to switch to compiled mode quickly by adding just one line of code.
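
The "one line" mentioned above can be sketched as follows. This is a minimal illustration, not code from the PyTorch announcement: `TinyMLP` and `compile_model` are hypothetical names introduced here, and the `backend` argument is exposed only so the same helper can be tried with the `"eager"` debug backend on machines without a compiler toolchain.

```python
import torch
import torch.nn as nn


class TinyMLP(nn.Module):
    """Hypothetical stand-in model; any nn.Module is wrapped the same way."""

    def __init__(self, in_features: int = 16, hidden: int = 32, out_features: int = 4):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(in_features, hidden),
            nn.ReLU(),
            nn.Linear(hidden, out_features),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.net(x)


def compile_model(model: nn.Module, backend: str = "inductor") -> nn.Module:
    """Return a compiled wrapper; the original model remains usable in eager mode."""
    # The single added line: torch.compile wraps the module and returns a compiled one.
    return torch.compile(model, backend=backend)
```

Calling `compiled = compile_model(TinyMLP())` and then `compiled(torch.randn(8, 16))` triggers compilation on the first call; later calls with the same input shapes reuse the generated code. Because the wrapper is optional, existing eager-mode code keeps working unchanged.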

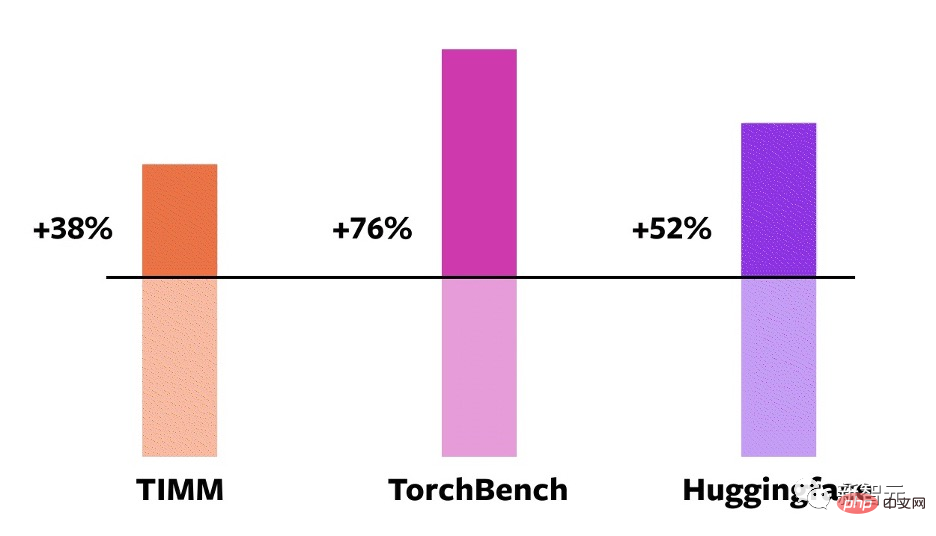

Users can see that models compiled with 2.0 train 43% faster on average than in 1.x eager mode.

This data comes from the PyTorch Foundation's benchmark of 163 open source models using PyTorch 2.0 on an Nvidia A100 GPU, covering image classification, object detection, image generation and other tasks, as well as various NLP tasks.

These benchmarks are divided into three categories: HuggingFace Transformers, TIMM and TorchBench.

Speedup of torch.compile over eager mode on an NVIDIA A100 GPU for different models

According to the PyTorch Foundation, the new compiler runs 21% faster when using Float32 precision mode and 51% faster when using automatic mixed precision (AMP) mode.

Among these 163 models, torch.compile can run normally on 93% of the models.
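
To make figures such as "21% faster" concrete, the sketch below shows one way to time a callable and express the result as a percentage. It assumes the percentage is derived from the ratio of eager time to compiled time; the source does not state how the Foundation computed its numbers, and `mean_seconds` and `percent_faster` are hypothetical helpers introduced here.

```python
import time
from typing import Callable

import torch


def mean_seconds(fn: Callable[[torch.Tensor], torch.Tensor], x: torch.Tensor,
                 warmup: int = 3, iters: int = 10) -> float:
    """Average wall-clock seconds per call of fn(x), measured after warm-up calls."""
    for _ in range(warmup):
        fn(x)  # warm-up absorbs one-time costs such as compilation
    if x.is_cuda:
        torch.cuda.synchronize()  # GPU kernels run asynchronously
    start = time.perf_counter()
    for _ in range(iters):
        fn(x)
    if x.is_cuda:
        torch.cuda.synchronize()
    return (time.perf_counter() - start) / iters


def percent_faster(eager_seconds: float, compiled_seconds: float) -> float:
    """Assumed definition: a 1.43x speedup is reported as 43 (percent faster)."""
    return (eager_seconds / compiled_seconds - 1.0) * 100.0
```

Timing the same model once in eager mode and once after `torch.compile`, then passing both averages to `percent_faster`, gives a number comparable in form to those quoted above, although results on a small model or a CPU will differ from the A100 benchmarks.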

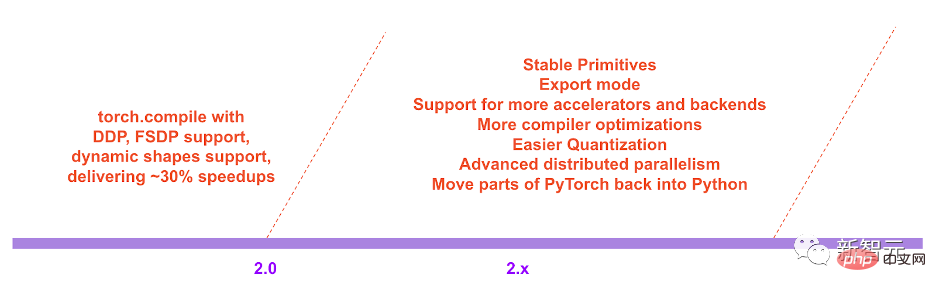

"In the PyTorch 2.x roadmap, we hope to take the compilation model further and further in terms of performance and scalability. Some of this work has not started yet, and some could not be completed because of insufficient bandwidth."

In addition, performance is another major focus of PyTorch 2.0, and one that its developers have been eager to promote.

In fact, one of the highlights of the new release is Accelerated Transformers, previously known as Better Transformer.

In addition, the official version of PyTorch 2.0 includes a new high-performance implementation of the PyTorch Transformer API.

One of the goals of the PyTorch project is to make training and deployment of state-of-the-art transformer models easier and faster.

Transformers are the basic technology that helps realize the modern era of generative artificial intelligence, including OpenAI models such as GPT-3 and GPT-4.

Accelerated Transformers in PyTorch 2.0 use a custom kernel architecture for scaled dot product attention (SDPA) to provide high-performance support for training and inference. Since many types of hardware can run Transformers, PyTorch 2.0 supports multiple SDPA custom kernels. Going a step further, PyTorch integrates custom kernel selection logic that picks the highest-performing kernel for a given model and hardware type.

The impact of the acceleration is significant, as it lets developers train models faster than in previous iterations of PyTorch.

The new version extends the inference "fastpath" architecture. As with fastpath, the custom kernels are fully integrated into the PyTorch Transformer API. Using the native Transformer and MultiHeadAttention APIs therefore lets users:

- See significant speed improvements;
- Support more use cases, including models using cross-attention, Transformer decoders, and model training;
- Continue to use fastpath inference for fixed and variable sequence length Transformer encoder and self-attention use cases.

In addition to the existing Transformer API, developers can also use the scaled dot product attention kernels directly by calling the new scaled_dot_product_attention() operator.

Accelerated PyTorch 2 Transformers are integrated with torch.compile(). To get the additional acceleration of PT2 compilation (for inference or training), preprocess the model with model = torch.compile(model).

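
As a rough illustration of calling the operator directly, the sketch below compares `torch.nn.functional.scaled_dot_product_attention` with a plain softmax(QKᵀ/√d)V computation. The helper names `reference_attention` and `fused_attention` and the tensor shapes are assumptions made for this example; which kernel PyTorch actually selects depends on the hardware and inputs.

```python
import math

import torch
import torch.nn.functional as F


def reference_attention(q: torch.Tensor, k: torch.Tensor, v: torch.Tensor) -> torch.Tensor:
    """Unfused attention: softmax(Q K^T / sqrt(d)) V, used here only for comparison."""
    scores = q @ k.transpose(-2, -1) / math.sqrt(q.size(-1))
    return torch.softmax(scores, dim=-1) @ v


def fused_attention(q: torch.Tensor, k: torch.Tensor, v: torch.Tensor,
                    causal: bool = False) -> torch.Tensor:
    """Same computation through the PyTorch 2.0 operator, which picks a kernel internally."""
    return F.scaled_dot_product_attention(q, k, v, is_causal=causal)
```

With inputs shaped `(batch, heads, sequence, head_dim)`, for example `torch.randn(2, 4, 8, 16)` for each of `q`, `k` and `v`, both functions should agree up to floating-point tolerance; the fused version is the one that can benefit from the custom kernels.
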
Currently, the combination of custom kernels and torch.compile() delivers substantial acceleration when training Transformer models, especially large language models that use Accelerated PyTorch 2 Transformers.

Sylvain Gugger, the main maintainer of HuggingFace Transformers, wrote in a statement released by the PyTorch project: "With just one line of code, PyTorch 2.0 can provide a 1.5x to 2.0x speedup when training Transformers models. This is the most exciting thing since the introduction of mixed precision training!"

PyTorch and Google's TensorFlow are the two most popular deep learning frameworks. Thousands of institutions around the world are using PyTorch to develop deep learning applications, and its usage is growing.

The launch of PyTorch 2.0 will help accelerate the development of deep learning and artificial intelligence applications. Luca Antiga, Chief Technology Officer of Lightning AI and one of the main maintainers of PyTorch Lightning, said:

"PyTorch 2.0 embodies the future of deep learning frameworks. The possibility of capturing a PyTorch program with no user intervention and getting huge on-device acceleration and program generation out of the box opens up a whole new dimension for AI developers."
