Zero-shot information extraction by chatting with GPT

General-purpose large models are gradually replacing proprietary models customized for specific tasks, a trend that has significantly reduced the marginal cost of applying AI models. This raises a question: is it feasible to perform zero-shot information extraction without any training?

Information extraction technology is an important part of building knowledge graphs. If it can be done without any training, it will greatly lower the barrier to data analysis and help automate knowledge base construction.

Using prompt engineering on GPT-3.5, we built a general zero-shot IE system, GPT4IE (GPT for Information Extraction), and found that GPT-3.5 can automatically extract structured information from raw sentences. The tool supports both Chinese and English, and its code is open source.

Tool URL: https://cocacola-lab.github.io/GPT4IE/

Code: https://github.com/cocacola-lab/GPT4IE

## 1 Background

The goal of information extraction (IE) is to extract structured information from unstructured text. IE covers entity-relation triple extraction (RE), named entity recognition (NER), and event extraction (EE) [1][2][3][4][5]. Many studies have begun to pursue zero-shot/few-shot IE, for example clinical IE [6].

Recently, large pre-trained language models (LLMs) have performed extremely well on many downstream tasks, even when guided by only a few examples and without any fine-tuning. This raises a question: can zero-shot IE be achieved through prompting alone? We used prompting to build GPT4IE (GPT for Information Extraction), a general zero-shot IE system on top of GPT-3.5. By combining GPT-3.5 with prompts, it can automatically extract structured information from raw sentences.

## 2 Technical Framework

We design a task-specific prompt template and fill its slots with the user's input to form a prompt, which is then fed to GPT-3.5 to perform IE. Three tasks are supported, RE, NER, and EE, and all three work in both Chinese and English. The user enters a sentence and specifies a list of extraction types (a relation type list, head entity type list, tail entity type list, entity type list, or event type list). The details are as follows:

The RE task extracts triples from text, such as "(China, capital, Beijing)" and "(Ruyi's Royal Love in the Palace, starring, Zhou Xun)". The input format is as follows (items marked with "*" are optional; we set default values for them, but for flexibility users may also specify their own lists. The same applies below):

- Input Sentence: Input text

- Relation type list (rtl)*: ['Relation type 1', 'Relation type 2', ...]

- Subject type list (stl)*: ['Head entity type 1', 'Head entity type 2', ...]

- Object type list (otl)*: ['Tail entity type 1', 'Tail entity type 2', ...]

- OpenAI API key: your OpenAI API key (some usable keys are provided on GitHub for trying out the examples)
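The template-filling step described above, plus parsing of the model's reply, can be sketched in Python. This is a minimal illustration under stated assumptions, not GPT4IE's own code: the template wording, the names `build_re_prompt` and `parse_triples`, and the assumption that the model answers with one `(head, relation, tail)` triple per line are all hypothetical. The OpenAI API call is left out; the `reply` string is a made-up stand-in for a model response, not real output.

```python
import re
from string import Template

# Hypothetical template: the wording GPT4IE actually uses may differ.
# The $-placeholders are the slots filled from the user's input.
RE_TEMPLATE = Template(
    'Given the sentence: "$sentence"\n'
    "Relation types: $rtl\n"
    "Head entity types: $stl\n"
    "Tail entity types: $otl\n"
    "Extract all triples that hold in the sentence, "
    "one per line in the form (head, relation, tail)."
)

# "(head, relation, tail)" with no commas or parentheses inside a field.
TRIPLE_RE = re.compile(r"\(\s*([^,()]+?)\s*,\s*([^,()]+?)\s*,\s*([^,()]+?)\s*\)")


def build_re_prompt(sentence, rtl, stl, otl):
    """Fill the template slots; substitute() renders each list with str()."""
    return RE_TEMPLATE.substitute(sentence=sentence, rtl=rtl, stl=stl, otl=otl)


def parse_triples(reply, rtl):
    """Extract triples from the reply, keeping only relations listed in rtl."""
    allowed = set(rtl)
    return [t for t in TRIPLE_RE.findall(reply) if t[1] in allowed]


if __name__ == "__main__":
    rtl = ["person-company", "country-capital"]
    prompt = build_re_prompt(
        "Bob worked for Google in Beijing, the capital of China.",
        rtl, ["person", "country"], ["organization", "city"],
    )
    print(prompt)
    # Made-up reply standing in for a GPT-3.5 response.
    reply = "(Bob, person-company, Google)\n(China, country-capital, Beijing)\n(Bob, likes, Beijing)"
    print(parse_triples(reply, rtl))
    # [('Bob', 'person-company', 'Google'), ('China', 'country-capital', 'Beijing')]
```

Filtering against `rtl` is one simple way to discard relations the model invents outside the requested list; whether GPT4IE does this is not stated in the source.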

The NER task extracts entities from text, such as "(LOC, Beijing)" and "(Person, Zhou Enlai)". For NER, the input format is as follows:

- Input Sentence: Input text

- Entity type list (etl)*: ['Entity type 1', 'Entity type 2', ...]

- OpenAI API key: OpenAI API key
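For NER the same pattern applies with a single type list. The sketch below is again hypothetical: the template wording, `build_ner_prompt`, `parse_entities`, and the `(type, entity)` reply format are assumptions, not GPT4IE's code. It also drops entities whose type is outside `etl` and removes duplicates, since a model may repeat a mention.

```python
import re

# Hypothetical prompt wording; GPT4IE's real template may differ.
NER_TEMPLATE = (
    'Given the sentence: "{sentence}"\n'
    "Entity types: {etl}\n"
    "List every entity in the sentence whose type is in the list above, "
    "one per line in the form (type, entity)."
)

# "(type, entity)": the type has no commas; the entity may contain commas
# but not parentheses.
PAIR_RE = re.compile(r"\(\s*([^,()]+?)\s*,\s*([^()]+?)\s*\)")


def build_ner_prompt(sentence, etl):
    """Fill the sentence and entity type list into the template."""
    return NER_TEMPLATE.format(sentence=sentence, etl=etl)


def parse_entities(reply, etl):
    """Return (type, entity) pairs with a type from etl, in order, without duplicates."""
    allowed = set(etl)
    seen = set()
    entities = []
    for etype, text in PAIR_RE.findall(reply):
        pair = (etype, text)
        if etype in allowed and pair not in seen:
            seen.add(pair)
            entities.append(pair)
    return entities


if __name__ == "__main__":
    etl = ["LOC", "MISC", "ORG", "PER"]
    print(build_ner_prompt("Bob worked for Google in Beijing, the capital of China.", etl))
    # Made-up reply standing in for a GPT-3.5 response.
    reply = "(PER, Bob)\n(ORG, Google)\n(LOC, Beijing)\n(LOC, Beijing)\n(DATE, today)"
    print(parse_entities(reply, etl))
    # [('PER', 'Bob'), ('ORG', 'Google'), ('LOC', 'Beijing')]
```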

The EE task extracts events from plain text, such as "{Life-Divorce: {Person: Bob, Time: today, Place: America}}" and "{Competition Behavior-Promotion: {Time: None, Promotion Party: Northwest Wolves, Promotion Event: battle for the top spot in the Chinese Premier League}}". The input format is as follows:

- Input Sentence: Input text

- Event type list (etl)*: {'Event type 1': ['Argument role 1', 'Argument role 2', ...], ...}

- OpenAI API key: OpenAI API key
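For EE, the event list is a schema mapping event types to argument roles, so one convenient option is to ask the model for JSON and then align the reply with that schema. In this hypothetical sketch (`build_ee_prompt`, `parse_events`, and the JSON reply format are assumptions, not part of GPT4IE), event types and roles outside `etl` are dropped and unmentioned roles are set to `None`, mirroring the `Time: None` in the example above.

```python
import json

# Hypothetical EE prompt that asks for JSON, which is easier to parse than
# the brace notation shown above. GPT4IE's actual template may differ.
EE_TEMPLATE = (
    'Given the sentence: "{sentence}"\n'
    "Event schema (event type -> argument roles): {schema}\n"
    "Extract the events in the sentence that match the schema. Reply with a JSON "
    "object mapping each event type to an object of role -> argument. "
    "Use null for roles that are not mentioned."
)


def build_ee_prompt(sentence, etl):
    # ensure_ascii=False keeps Chinese event types and roles readable.
    return EE_TEMPLATE.format(
        sentence=sentence, schema=json.dumps(etl, ensure_ascii=False)
    )


def parse_events(reply, etl):
    """Parse the JSON reply and align it with the schema: unknown event types
    and roles are dropped, missing roles are filled with None."""
    try:
        raw = json.loads(reply)
    except json.JSONDecodeError:
        return {}
    if not isinstance(raw, dict):
        return {}
    events = {}
    for event_type, args in raw.items():
        if event_type not in etl or not isinstance(args, dict):
            continue
        events[event_type] = {role: args.get(role) for role in etl[event_type]}
    return events


if __name__ == "__main__":
    etl = {"Life:Divorce": ["Person", "Time", "Place"]}
    print(build_ee_prompt("Yesterday Bob and his wife got divorced in Guangzhou.", etl))
    # Made-up reply standing in for a GPT-3.5 response; "Reason" is not in the schema.
    reply = '{"Life:Divorce": {"Person": "Bob", "Time": "Yesterday", "Place": "Guangzhou", "Reason": null}}'
    print(parse_events(reply, etl))
    # {'Life:Divorce': {'Person': 'Bob', 'Time': 'Yesterday', 'Place': 'Guangzhou'}}
```

Returning an empty result on malformed JSON is a design choice for the sketch; a real system might instead retry the request.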

## 3 Tool Usage Examples

## 3.1 RE Example 1

Input:

Input Sentence: Bob worked for Google in Beijing, the capital of China.

rtl: ['location-located_in', 'administrative_division-country', 'person-place_lived', 'person-company', 'person-nationality', 'company-founders', 'country-administrative_divisions', 'person-children', 'country-capital', 'deceased_person-place_of_death', 'neighborhood-neighborhood_of', 'person-place_of_birth']

stl: ['organization', 'person', 'location', 'country']

otl: ['person', 'location', 'country', 'organization', 'city']

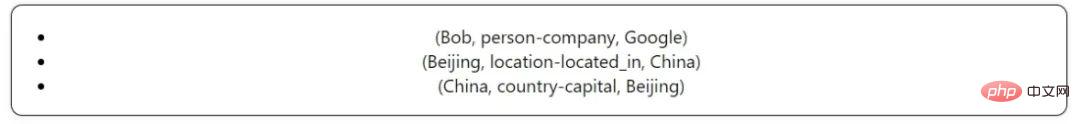

Output:

## 3.2 RE Example 2

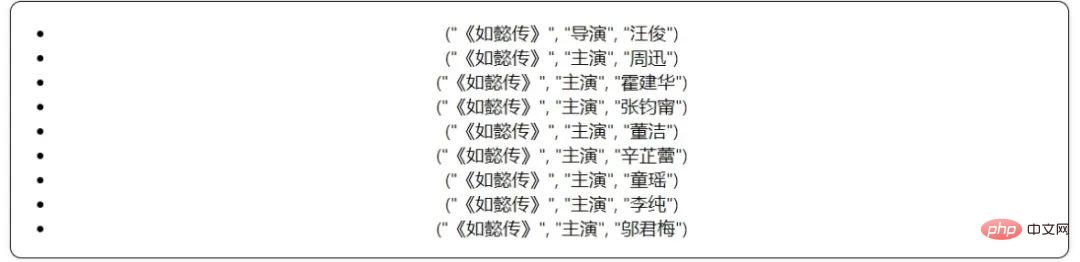

Input Sentence: "Ruyi's Royal Love in the Palace" is a period palace romance TV series directed by Wang Jun and starring Zhou Xun, Huo Jianhua, Zhang Junning, Dong Jie, Xin Zhilei, Tong Yao, Li Chun, Wu Junmei, and others.

rtl: ['Album', 'Date of establishment', 'Altitude', 'Official language', 'Area', 'Father', 'Singer', 'Producer', 'Director', 'Capital', 'Starring', 'Chairman', 'Ancestral home', 'Wife', 'Mother', 'Climate', 'Area', 'Protagonist', 'Postal code', 'Abbreviation', 'Production company', 'Registered capital', 'Screenwriter', 'Founder', 'Graduation school', 'Nationality', 'Professional code', 'Dynasty', 'Author', 'Lyrics', 'City', 'Guest', 'Headquarters location', 'Population', 'Spokesperson', 'Adapted from', 'Principal', 'Husband', 'Host', 'Theme song', 'Years of study', 'Composition', 'Number', 'Release time', 'Box office', 'Acting', 'Dubbing', 'Award-winning']

stl: ['Country', 'Administrative Region', 'Literary Works', 'Characters', 'Film and Television Works', 'School', 'Book Works', 'Place', 'Historical Figures', 'Attractions', 'Song', 'Subject Major', 'Enterprise', 'TV Variety Show', 'Institution', 'Enterprise/Brand', 'Entertainment Figure']

otl: ['Country', 'Person', 'Text', 'Date', 'Place', 'Climate', 'City', 'Song', 'Enterprise', 'Number', 'Music Album', 'School', 'Work', 'Language']

Output:

## 3.3 NER Example 1

Input:

Input Sentence: Bob worked for Google in Beijing, the capital of China.

etl: ['LOC', 'MISC', 'ORG', 'PER']

Output:

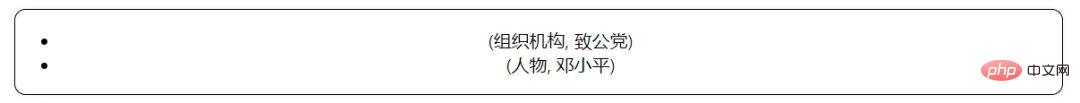

## 3.4 NER Example 2

Input:

Input Sentence: In the past five years, under the guidance of Deng Xiaoping Theory, the Zhigong Party has adhered to the basic line for the primary stage of socialism and worked hard to accomplish the basic tasks set at its 10th National Congress: performing its functions as a participating party and strengthening its own development.

etl: ['Organization', 'Location', 'People']

Output:

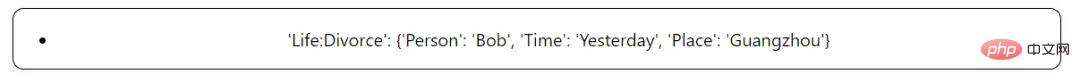

## 3.5 EE Example 1

Input:

Input Sentence: Yesterday Bob and his wife got divorced in Guangzhou.

etl: {'Personnel:Elect': ['Person', 'Entity', 'Position', 'Time', 'Place'], 'Business:Declare-Bankruptcy': ['Org', 'Time', 'Place'], 'Justice:Arrest-Jail': ['Person', 'Agent', 'Crime', 'Time', 'Place'], 'Life:Divorce': ['Person', 'Time', 'Place'], 'Life:Injure': ['Agent', 'Victim', 'Instrument', 'Time', 'Place']}

Output:

## 3.6 EE Example 2

Input:

Input Sentence: In the 2022 Qatar World Cup final, Argentina narrowly defeated France in a penalty shootout.

etl: {'Organizational Behavior-Strike': ['Time', 'Affiliation', 'Number of Strikers', 'Strike Personnel'], 'Competition Behavior-Promotion': ['Time', 'Promotion Party', 'Promotion Event'], 'Finance/Trading-Limited Stock': ['Time', 'Limited Stock'], 'Organizational Relations-Dismissal': ['Time', 'Firing Party', 'Fired Person']}

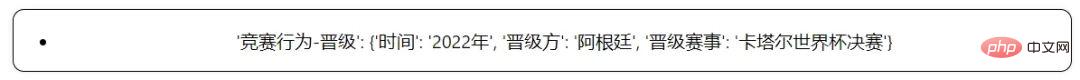

Output:

## 3.7 EE Example 3 (an interesting failure case)

Input:

Input Sentence: I divorced him today

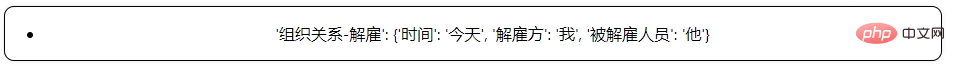

etl: {'Organizational Behavior-Strike': ['Time', 'Organization', 'Number of strikes', 'Strike personnel'], 'Competition Behavior-Promotion': ['Time', 'Promotion Party', 'Promotion Event'], 'Finance/Trading-Limit': ['Time', 'Limit Stock'], 'Organizational Relations-Dismissal': ['Time', 'Dismissal Party', 'Dismissed Personnel']}

Output:

The above is the detailed content of Zero-sample information extraction by talking to GPT. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1385

1385

52

52

How to remove author and last modified information in Microsoft Word

Apr 15, 2023 am 11:43 AM

How to remove author and last modified information in Microsoft Word

Apr 15, 2023 am 11:43 AM

Microsoft Word documents contain some metadata when saved. These details are used for identification on the document, such as when it was created, who the author was, date modified, etc. It also has other information such as number of characters, number of words, number of paragraphs, and more. If you might want to remove the author or last modified information or any other information so that other people don't know the values, then there is a way. In this article, let’s see how to remove a document’s author and last modified information. Remove author and last modified information from Microsoft Word document Step 1 – Go to

The ultimate weapon for Kubernetes debugging: K8sGPT

Feb 26, 2024 am 11:40 AM

The ultimate weapon for Kubernetes debugging: K8sGPT

Feb 26, 2024 am 11:40 AM

As artificial intelligence and machine learning technologies continue to develop, companies and organizations have begun to actively explore innovative strategies to leverage these technologies to enhance competitiveness. K8sGPT[2] is one of the most powerful tools in this field. It is a GPT model based on k8s, which combines the advantages of k8s orchestration with the excellent natural language processing capabilities of the GPT model. What is K8sGPT? Let’s look at an example first: According to the K8sGPT official website: K8sgpt is a tool designed for scanning, diagnosing and classifying kubernetes cluster problems. It integrates SRE experience into its analysis engine to provide the most relevant information. Through the application of artificial intelligence technology, K8sgpt continues to enrich its content and help users understand more quickly and accurately.

Should I choose MBR or GPT as the hard disk format for win7?

Jan 03, 2024 pm 08:09 PM

Should I choose MBR or GPT as the hard disk format for win7?

Jan 03, 2024 pm 08:09 PM

When we use the win7 operating system, sometimes we may encounter situations where we need to reinstall the system and partition the hard disk. Regarding the issue of whether win7 hard disk format requires mbr or gpt, the editor thinks that you still have to make a choice based on the details of your own system and hardware configuration. In terms of compatibility, it is best to choose the mbr format. For more details, let’s take a look at how the editor did it~ Win7 hard disk format requires mbr or gpt1. If the system is installed with Win7, it is recommended to use MBR, which has good compatibility. 2. If it exceeds 3T or install win8, you can use GPT. 3. Although GPT is indeed more advanced than MBR, MBR is definitely invincible in terms of compatibility. GPT and MBR areas

How to get the GPU in Windows 11 and check the graphics card details

Nov 07, 2023 am 11:21 AM

How to get the GPU in Windows 11 and check the graphics card details

Nov 07, 2023 am 11:21 AM

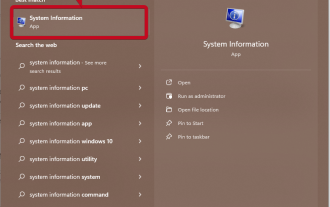

Using System Information Click Start and enter System Information. Just click on the program as shown in the image below. Here you can find most of the system information, and one thing you can find is graphics card information. In the System Information program, expand Components, and then click Show. Let the program gather all the necessary information and once it's ready, you can find the graphics card-specific name and other information on your system. Even if you have multiple graphics cards, you can find most content related to dedicated and integrated graphics cards connected to your computer from here. Using the Device Manager Windows 11 Just like most other versions of Windows, you can also find the graphics card on your computer from the Device Manager. Click Start and then

In-depth understanding of Win10 partition format: GPT and MBR comparison

Dec 22, 2023 am 11:58 AM

In-depth understanding of Win10 partition format: GPT and MBR comparison

Dec 22, 2023 am 11:58 AM

When partitioning their own systems, due to the different hard drives used by users, many users do not know whether the win10 partition format is gpt or mbr. For this reason, we have brought you a detailed introduction to help you understand the difference between the two. Win10 partition format gpt or mbr: Answer: If you are using a hard drive exceeding 3 TB, you can use gpt. gpt is more advanced than mbr, but mbr is still better in terms of compatibility. Of course, this can also be chosen according to the user's preferences. The difference between gpt and mbr: 1. Number of supported partitions: 1. MBR supports up to 4 primary partitions. 2. GPT is not limited by the number of partitions. 2. Supported hard drive size: 1. MBR only supports up to 2TB

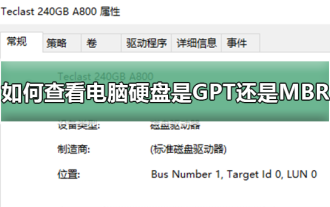

How to determine whether the computer hard drive uses GPT or MBR partitioning method

Dec 25, 2023 pm 10:57 PM

How to determine whether the computer hard drive uses GPT or MBR partitioning method

Dec 25, 2023 pm 10:57 PM

How to check whether a computer hard disk is a GPT partition or an MBR partition? When we use a computer hard disk, we need to distinguish between GPT and MBR. In fact, this checking method is very simple. Let's take a look with me. How to check whether the computer hard disk is GPT or MBR 1. Right-click 'Computer' on the desktop and click "Manage" 2. Find "Disk Management" in "Management" 3. Enter Disk Management to see the general status of our hard disk, then How to check the partition mode of my hard disk, right-click "Disk 0" and select "Properties" 4. Switch to the "Volume" tab in "Properties", then we can see the "Disk Partition Form" and you can see it as Problems related to MBR partition win10 disk How to convert MBR partition to GPT partition >

How to share contact details with NameDrop: How-to guide for iOS 17

Sep 16, 2023 pm 06:09 PM

How to share contact details with NameDrop: How-to guide for iOS 17

Sep 16, 2023 pm 06:09 PM

In iOS 17, there's a new AirDrop feature that lets you exchange contact information with someone by touching two iPhones. It's called NameDrop, and here's how it works. Instead of entering a new person's number to call or text them, NameDrop allows you to simply place your iPhone near their iPhone to exchange contact details so they have your number. Putting the two devices together will automatically pop up the contact sharing interface. Clicking on the pop-up will display a person's contact information and their contact poster (you can customize and edit your own photos, also a new feature of iOS17). This screen also includes the option to "Receive Only" or share your own contact information in response.

The single-view NeRF algorithm S^3-NeRF uses multi-illumination information to restore scene geometry and material information.

Apr 13, 2023 am 10:58 AM

The single-view NeRF algorithm S^3-NeRF uses multi-illumination information to restore scene geometry and material information.

Apr 13, 2023 am 10:58 AM

Current image 3D reconstruction work usually uses a multi-view stereo reconstruction method (Multi-view Stereo) that captures the target scene from multiple viewpoints (multi-view) under constant natural lighting conditions. However, these methods usually assume Lambertian surfaces and have difficulty recovering high-frequency details. Another approach to scene reconstruction is to utilize images captured from a fixed viewpoint but with different point lights. Photometric Stereo methods, for example, take this setup and use its shading information to reconstruct the surface details of non-Lambertian objects. However, existing single-view methods usually use normal map or depth map to represent the visible