How far has Transformer developed in reinforcement learning? Tsinghua University, Peking University and others jointly released a review of TransformRL

Reinforcement learning (RL) provides a mathematical formalism for sequential decision-making, and deep reinforcement learning (DRL) has made great progress in recent years. However, sample-efficiency issues hinder the widespread application of DRL methods in the real world. An effective way to address this problem is to introduce inductive biases into the DRL framework.

In DRL, function approximators play a crucial role. However, compared with architecture design in supervised learning (SL), architecture design in DRL is still rarely studied. Most existing work on RL architectures has been driven by the supervised/semi-supervised learning community. For example, a common approach to handling high-dimensional image inputs in DRL is to introduce convolutional neural networks (CNNs) [LeCun et al., 1998; Mnih et al., 2015], and a common approach to dealing with partial observability is to introduce recurrent neural networks (RNNs) [Hochreiter and Schmidhuber, 1997; Hausknecht and Stone, 2015].

In recent years, the Transformer architecture [Vaswani et al., 2017] has outperformed CNNs and RNNs on many tasks and has become a standard paradigm in a growing number of SL tasks [Devlin et al., 2018; Dosovitskiy et al., 2020; Dong et al., 2018]. The Transformer supports modeling long-range dependencies and has excellent scalability [Khan et al., 2022]. Inspired by its success in SL, researchers have shown strong interest in applying Transformers to RL, hoping to bring these advantages to the field.

The use of Transformers in RL can be traced back to Zambaldi et al. (2018), who used the self-attention mechanism for relational reasoning over structured state representations. Subsequently, many researchers applied self-attention to representation learning, extracting relations between entities to improve policy learning [Vinyals et al., 2019; Baker et al., 2019].

Beyond representation learning, earlier work also used Transformers to capture multi-step temporal dependencies in order to deal with partial observability [Parisotto et al., 2020; Parisotto and Salakhutdinov, 2021]. Offline RL [Levine et al., 2020] has attracted attention for its ability to leverage large-scale offline datasets. Inspired by offline RL, recent research shows that the Transformer can be used directly as a model for sequential decision-making [Chen et al., 2021; Janner et al., 2021] and can generalize to multiple tasks and domains [Lee et al., 2022; Carroll et al., 2022].

However, using Transformers as function approximators in RL poses some unique challenges, including:

- The training data of an RL agent is usually a function of its current policy, which introduces non-stationarity when training the Transformer;

- Existing RL algorithms are usually highly sensitive to design choices made during training, including model architecture and model capacity [Henderson et al., 2018];

- Transformer-based architectures often suffer from high computational and memory costs, which make both training and inference in RL expensive.

For example, in AI for video games, the efficiency of sample generation (which largely determines training performance) depends on the computational cost of the RL policy network and value network [Ye et al., 2020a; Berner et al., 2019].

To further advance the field, researchers from Tsinghua University, Peking University, the Beijing Academy of Artificial Intelligence (BAAI) and Tencent jointly published a review of Transformers in reinforcement learning (TransformRL). The review summarizes existing methods and challenges and discusses future research directions. The authors believe that TransformRL will play an important role in unlocking the potential of reinforcement learning.

Paper address: https://arxiv.org/pdf/2301.03044.pdf

The overall structure of the paper is as follows:

- Chapter 2 introduces background on RL and Transformers and briefly describes how the two are combined;

- Chapter 3 describes the evolution of network architectures in RL and the challenges that have long hindered broad exploration of the Transformer architecture in RL;

- Chapter 4 classifies the uses of Transformers in RL and summarizes current representative methods;

- Chapter 5 concludes and points out potential future research directions.

The core content starts from Chapter 3 of the paper. Let’s take a look at the main content of the paper.

Network architecture in RL

Before introducing its taxonomy of TransformRL, the paper reviews early progress in network architecture design for RL and summarizes the existing challenges. The authors believe that the Transformer is an advanced neural network architecture that will contribute to the development of DRL.

The architecture of the function approximator

Since the seminal work on Deep Q-Network [Mnih et al., 2015], many efforts have been made on the network architecture of DRL agents. Improvements to network architectures in RL fall mainly into two categories.

The first category designs new structures that incorporate RL inductive biases to make policies or value functions easier to train. For example, [Wang et al. 2016] proposed the dueling network architecture, in which one stream estimates the state value function and the other estimates the state-dependent action advantage function. This design builds into the architecture the inductive bias that Q-values decompose into a state value plus an action advantage.
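The two dueling streams must be recombined into Q-values. A minimal sketch of the mean-subtracted aggregation described by Wang et al. (2016), Q(s, a) = V(s) + A(s, a) - mean over a' of A(s, a'), is shown below in plain Python. The hard-coded numbers stand in for the outputs of the two network heads and are hypothetical.

```python
from typing import List


def dueling_q_values(state_value: float, advantages: List[float]) -> List[float]:
    """Combine a value stream V(s) and an advantage stream A(s, .) into Q(s, .).

    Subtracting the mean advantage keeps the split identifiable: the
    resulting Q-values average to V(s) (up to floating-point error).
    """
    mean_adv = sum(advantages) / len(advantages)
    return [state_value + a - mean_adv for a in advantages]


# Hypothetical head outputs for one state with three actions.
print(dueling_q_values(2.0, [1.0, 0.0, -1.0]))  # [3.0, 2.0, 1.0]
```

Without the mean subtraction, any constant could be shifted between V and A without changing Q, so the value stream would not be forced to learn a meaningful state value.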

The second category studies whether commonly used neural network techniques (such as regularization, skip connections and batch normalization) can be applied to RL. For example, [Ota et al. 2020] found that increasing the input dimensionality by using an online feature extractor to enhance the state representation can help improve the performance and sample efficiency of DRL algorithms. [Sinha et al. 2020] proposed a deep dense architecture for DRL agents that uses residual connections for efficient learning and an inductive bias to alleviate the data processing inequality problem. [Ota et al. 2021] used DenseNet [Huang et al., 2017] together with decoupled representation learning to improve the flow of information and gradients in large networks. Recently, owing to the Transformer's superior performance, researchers have tried to apply the Transformer architecture to policy optimization algorithms, but found that vanilla Transformer designs fail to achieve satisfactory performance in RL tasks [Parisotto et al., 2020].

Challenges

Although Transformer-based architectures have made great progress in SL over the past few years, applying Transformers to RL is not straightforward. In fact, there are several unique challenges.

From an RL perspective, many studies point out that existing RL algorithms are very sensitive to the architecture of deep neural networks [Henderson et al., 2018; Engstrom et al., 2019; Andrychowicz et al., 2020]. First, RL alternates between data collection and policy optimization, which makes training unstable. Second, RL algorithms are often highly sensitive to design choices made during training. [Emmons et al. 2021] showed that careful choice of model architecture and regularization is critical to the performance of DRL agents.

From a Transformer perspective, Transformer-based architectures suffer from large memory footprints and high latency, which hinder their efficient deployment and inference. Much recent research has aimed to improve the computational and memory efficiency of the original Transformer architecture, but most of this work has focused on the SL domain.

In RL, Parisotto and Salakhutdinov proposed distilling a large-capacity Transformer-based learner model into a small-capacity actor model to avoid the Transformer's high inference latency. However, this approach is still expensive in terms of memory and computation. Efficient or lightweight Transformers have not yet been fully explored by the RL community.
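One common way to realize learner-to-actor distillation is to train the small actor to match the large learner's action distribution. The sketch below assumes a discrete action space and uses KL(pi_learner || pi_actor) as the distillation loss. It illustrates policy distillation in general and does not reproduce every loss term of Parisotto and Salakhutdinov's method; the logits are hypothetical.

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_kl(learner_logits: List[float], actor_logits: List[float]) -> float:
    """KL(pi_learner || pi_actor) for one state with discrete actions.

    The learner (large Transformer) acts as the teacher; the actor (small,
    fast model used for data collection) is the student trained to minimize
    this quantity.
    """
    p = softmax(learner_logits)
    q = softmax(actor_logits)
    return sum(pi * (math.log(pi) - math.log(qi)) for pi, qi in zip(p, q) if pi > 0)


# Identical policies give zero loss; a mismatched actor gives a positive loss.
print(distillation_kl([2.0, 0.5, -1.0], [2.0, 0.5, -1.0]))  # 0.0
print(distillation_kl([2.0, 0.5, -1.0], [0.0, 0.0, 0.0]))
```

Only the cheap actor needs to run at every environment step, which is how distillation sidesteps the learner's inference latency; the learner is still trained, so its memory and compute costs remain.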

Transformer in Reinforcement Learning

Although the Transformer has become the foundation model for most supervised learning research, it was not widely used in the RL community for a long time because of the challenges described above. In fact, most early TransformRL attempts used the Transformer for state representation learning or to provide memory, while still relying on standard RL algorithms, such as temporal-difference learning and policy optimization, for agent learning.

Therefore, despite adopting the Transformer as a function approximator, these methods remain subject to the challenges of the traditional RL framework. More recently, offline RL has made it possible to learn optimal policies from large-scale offline data. Inspired by offline RL, recent work treats the RL problem as a conditional sequence modeling problem over fixed experience. This bypasses the bootstrapping-error challenge of traditional RL and lets the Transformer architecture bring its strong sequence modeling capabilities to bear.

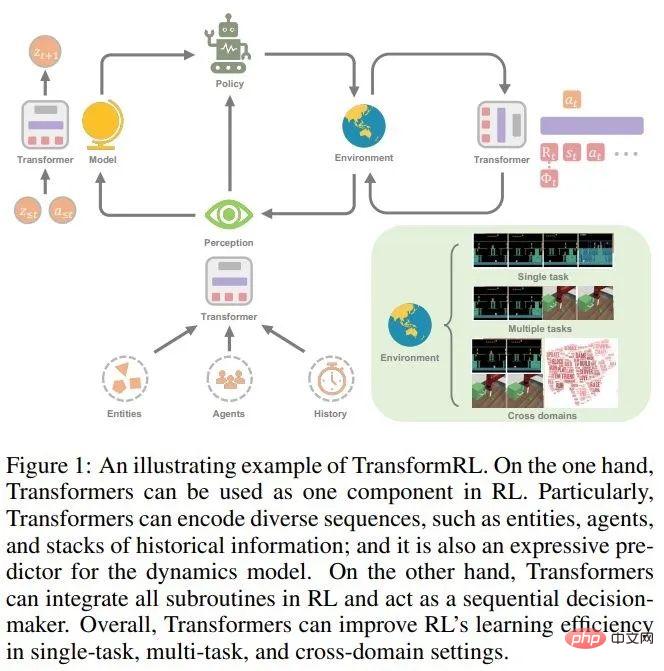

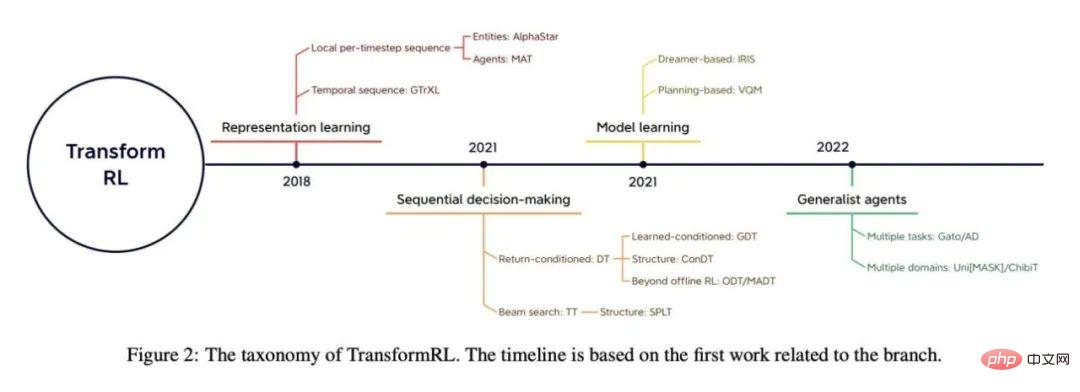

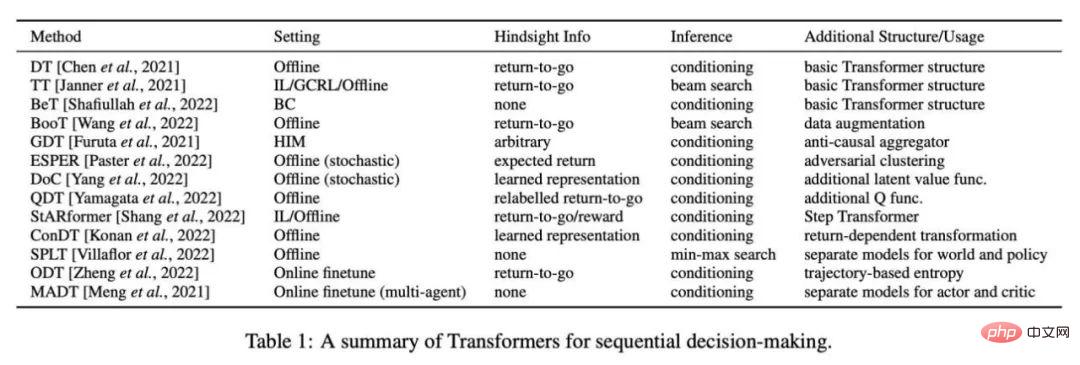

The paper reviews the progress of TransformRL and organizes existing methods into four categories: representation learning, model learning, sequential decision-making, and general-purpose agents. Figure 2 shows an overview of this taxonomy.

Transformer for representation learning

Given the sequential nature of RL tasks, it is natural to use the Transformer encoder module. RL tasks involve processing various kinds of sequences, such as local per-timestep sequences (multi-entity sequences [Vinyals et al., 2019; Baker et al., 2019] and multi-agent sequences [Wen et al., 2022]) and temporal sequences [Parisotto et al., 2020; Banino et al., 2021].

Local per-timestep sequence encoder

An early notable success of this approach was the use of Transformers to process complex information about a variable number of entities observed by the agent. [Zambaldi et al., 2018a] first proposed using multi-head dot-product attention for relational reasoning over structured observations, and AlphaStar [Vinyals et al., 2019] later used it to handle multi-entity observations in the challenging multi-agent environment of StarCraft II. In this mechanism, called the entity Transformer, observations are encoded in the following form:

$$\mathrm{Emb} = \mathrm{Transformer}(e_1, \dots, e_i, \dots)$$

where $e_i$ represents the agent's observation of entity $i$, either sliced directly from the full observation or produced by an entity tokenizer.
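To make the entity Transformer concrete, the sketch below encodes a variable-length list of entity vectors e_1, ..., e_n with single-head scaled dot-product self-attention in plain Python, then mean-pools the result into a fixed-size embedding. Identity query/key/value projections and mean pooling are simplifying assumptions for illustration; a real model (including AlphaStar's) learns its projections and uses multiple heads, MLPs and normalization.

```python
import math
from typing import List

Vector = List[float]


def softmax(xs: Vector) -> Vector:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def self_attention(entities: List[Vector]) -> List[Vector]:
    """Scaled dot-product self-attention with identity Q/K/V projections."""
    d = len(entities[0])
    out = []
    for q in entities:
        weights = softmax([dot(q, k) / math.sqrt(d) for k in entities])
        out.append([sum(w * v[j] for w, v in zip(weights, entities)) for j in range(d)])
    return out


def entity_embedding(entities: List[Vector]) -> Vector:
    """Emb = pool(attention(e_1, ..., e_n)); works for any number of entities n."""
    attended = self_attention(entities)
    n, d = len(attended), len(attended[0])
    return [sum(row[j] for row in attended) / n for j in range(d)]


# Observations with different entity counts map to same-sized embeddings.
print(len(entity_embedding([[1.0, 0.0], [0.0, 1.0]])))              # 2
print(len(entity_embedding([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])))  # 2
```

Because self-attention treats its inputs as a set and the pooling is a mean, the embedding also does not depend on the order in which entities are listed.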

Some follow-up work enriches the entity Transformer mechanism. [Hu et al. 2020] proposed a compatible decoupling strategy to explicitly associate actions with various entities and leverage an attention mechanism for policy interpretation. To achieve challenging one-shot visual imitation, Dasari and Gupta [2021] use Transformer to learn representations that focus on task-specific elements.

Similar to entities scattered across an observation, some studies use Transformers to process other local per-timestep sequences. Tang and Ha [2021] use the Transformer's attention mechanism to process sequences of sensory inputs and build a policy that is invariant to permutations of its inputs. In incompatible multi-task RL, [Kurin et al., 2020] proposed using Transformers to extract morphological domain knowledge.
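The permutation-invariance property can be illustrated with cross-attention from a fixed set of queries to an unordered set of inputs. The sketch below is a generic illustration of that property, not Tang and Ha's architecture; the query vectors (learned parameters in a real model) and the sensor readings are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def cross_attention_pool(queries: List[Vector], inputs: List[Vector]) -> List[Vector]:
    """Attend from a fixed set of queries to an unordered set of inputs.

    Each query takes a softmax-weighted sum over *all* inputs, so the result
    does not depend on the order of the inputs, and its size depends only on
    the number of queries, not on the number of inputs.
    """
    d = len(queries[0])
    result = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, x)) / math.sqrt(d) for x in inputs]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        result.append([sum(e * x[j] for e, x in zip(exps, inputs)) / total for j in range(d)])
    return result


queries = [[1.0, 0.0], [0.0, 1.0]]
sensors = [[0.2, 0.9], [0.7, 0.1], [0.5, 0.5]]
a = cross_attention_pool(queries, sensors)
b = cross_attention_pool(queries, list(reversed(sensors)))
print(all(abs(x - y) < 1e-12 for ra, rb in zip(a, b) for x, y in zip(ra, rb)))  # True
```

A policy head placed after this pooling therefore sees the same input no matter how the sensory channels are shuffled.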

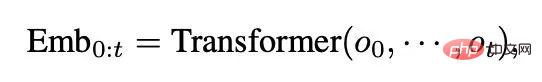

Temporal encoder

It is also natural to use Transformers to process temporal sequences. A temporal encoder serves as a memory architecture:

$$\mathrm{Emb}_{0:t} = \mathrm{Transformer}(o_0, \dots, o_t)$$

where $o_t$ represents the agent's observation at time $t$, and $\mathrm{Emb}_{0:t}$ represents the embedding of the historical observations from the initial observation to the current one.
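A temporal encoder that produces an embedding at step t from observations o_0, ..., o_t needs a causal mask so that step t never attends to the future. The plain-Python sketch below shows that masking with a single attention layer; identity query/key/value projections and the toy observations are assumptions for brevity.

```python
import math
from typing import List

Vector = List[float]


def causal_attention(observations: List[Vector]) -> List[Vector]:
    """Return one embedding per step; step t attends only to o_0, ..., o_t.

    Identity Q/K/V projections are used for brevity (an assumption; a real
    temporal encoder learns them and stacks several layers).
    """
    d = len(observations[0])
    outputs = []
    for t, query in enumerate(observations):
        history = observations[: t + 1]  # causal mask: no access to the future
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in history]
        m = max(scores)
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        outputs.append([sum(w * h[j] for w, h in zip(weights, history)) / total for j in range(d)])
    return outputs


traj = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
altered = traj[:2] + [[-5.0, 3.0]]  # same history, different last observation
# Embeddings for t = 0 and t = 1 are unaffected by the change at t = 2.
print(causal_attention(traj)[:2] == causal_attention(altered)[:2])  # True
```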

In earlier work, [Mishra et al. 2018] found that a vanilla Transformer could not process temporal sequences effectively, performing even worse than a random policy on some tasks. Gated Transformer-XL (GTrXL) [Parisotto et al., 2020] was the first effective solution that uses the Transformer as a memory architecture to process trajectories. GTrXL modifies the Transformer-XL architecture [Dai et al., 2019] through identity map reordering, which provides a "skip" path from the sequential input to the Transformer output and may help stabilize training from the start. [Loynd et al. 2020] proposed a shortcut mechanism with memory vectors for long-term dependencies, and [Irie et al. 2021] combined the linear Transformer with Fast Weight Programmers for better performance. [Melo 2022] proposed using the self-attention mechanism to mimic memory reinstatement for memory-based meta-RL.
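Besides identity map reordering, GTrXL replaces the residual connections with gating layers, and its GRU-style gate is the variant reported to work best. The sketch below implements that gate in plain Python: r = sigmoid(W_r y + U_r x), z = sigmoid(W_z y + U_z x - b_g), h = tanh(W_g y + U_g (r * x)), output = (1 - z) * x + z * h, where x is the stream entering the block and y is the sub-layer output. The weight values are hypothetical; the point is that a positive bias b_g keeps the block close to an identity map at initialization.

```python
import math
from typing import Dict, List

Vector = List[float]
Matrix = List[Vector]


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def gru_gate(x: Vector, y: Vector, W: Dict[str, Matrix], bias_g: float) -> Vector:
    """GRU-style gating g(x, y) in place of the residual connection x + y.

    x: the stream entering the block (the skip path)
    y: the output of the attention or MLP sub-layer
    W: weight matrices keyed 'Wr', 'Ur', 'Wz', 'Uz', 'Wg', 'Ug'
    bias_g: positive bias pushing the update gate z towards 0, so the
            block starts out close to an identity map.
    """
    r = [sigmoid(a + b) for a, b in zip(matvec(W["Wr"], y), matvec(W["Ur"], x))]
    z = [sigmoid(a + b - bias_g) for a, b in zip(matvec(W["Wz"], y), matvec(W["Uz"], x))]
    rx = [ri * xi for ri, xi in zip(r, x)]
    h = [math.tanh(a + b) for a, b in zip(matvec(W["Wg"], y), matvec(W["Ug"], rx))]
    return [(1 - zi) * xi + zi * hi for zi, xi, hi in zip(z, x, h)]


# Hypothetical small weights: with a large bias_g the output stays near x.
small = [[0.1, 0.0], [0.0, 0.1]]
weights = {k: small for k in ("Wr", "Ur", "Wz", "Uz", "Wg", "Ug")}
x, y = [1.0, -1.0], [0.5, 2.0]
print(gru_gate(x, y, weights, bias_g=10.0))
```

With bias_g = 0 the same call mixes in much more of the sub-layer output, which is the behavior the bias is meant to delay until training has stabilized.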

Although the Transformer outperforms LSTM/RNN as the memory size and the number of parameters grow, it is not data-efficient in RL. Subsequent work uses auxiliary self-supervised tasks to facilitate learning [Banino et al., 2021] or uses pre-trained Transformer architectures as temporal encoders [Li et al., 2022; Fan et al., 2022].

Transformer for model learning

Besides serving as an encoder for sequence embeddings, the Transformer architecture also serves as the backbone of the environment model in model-based algorithms. Unlike models whose predictions are conditioned on a single-step observation and action, Transformers let the environment model predict transitions conditioned on a window of historical information.
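The difference between single-step and history-conditioned environment models shows up already in how training examples are built. The sketch below pairs each next observation o_{t+1} with up to `context` preceding (o_k, a_k) pairs; the function name and the string placeholders for observations and actions are hypothetical.

```python
from typing import List, Sequence, Tuple, TypeVar

Obs = TypeVar("Obs")
Act = TypeVar("Act")


def history_windows(
    observations: Sequence[Obs], actions: Sequence[Act], context: int
) -> List[Tuple[List[Tuple[Obs, Act]], Obs]]:
    """Build (history, next observation) pairs for a history-conditioned model.

    observations has length T + 1 and actions has length T. Each input holds
    up to `context` most recent (o_k, a_k) pairs ending at step t, and the
    target is o_{t+1}. context=1 recovers the usual single-step model.
    """
    pairs = []
    for t in range(len(actions)):
        start = max(0, t - context + 1)
        history = [(observations[k], actions[k]) for k in range(start, t + 1)]
        pairs.append((history, observations[t + 1]))
    return pairs


obs = ["o0", "o1", "o2", "o3"]
acts = ["a0", "a1", "a2"]
for history, target in history_windows(obs, acts, context=2):
    print(history, "->", target)
```

A Transformer world model consumes each history as a token sequence, so increasing `context` lets it exploit information that a single-step model never sees.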

In fact, the success of Dreamer and its successors [Hafner et al., 2020, 2021; Seo et al., 2022] has demonstrated the advantages of world models conditioned on historical information in partially observable environments and in tasks that require memory. A history-conditioned world model consists of an observation encoder that captures abstract information and a transition model that learns transitions in the latent space.

Several studies have used the Transformer architecture instead of RNNs to build history-based world models. [Chen et al. 2022] replaced the RNN-based Recurrent State-Space Model (RSSM) in Dreamer with a Transformer-based model, TSSM (Transformer State-Space Model). IRIS (Imagination with auto-Regression over an Inner Speech) [Micheli et al., 2022] learns a Transformer-based world model by autoregressive learning on rollout experience, without the KL balancing used in Dreamer, and achieves good results on Atari [Bellemare et al., 2013].

In addition, some studies use Transformer-based world models for planning. [Ozair et al. 2021] verified the effectiveness of planning with a Transformer transition model on stochastic tasks. [Sun et al. 2022] proposed a goal-conditioned Transformer transition model that is effective for vision-based planning of procedural tasks.

Both RNNs and Transformers are suitable for learning history-based world models. However, [Micheli et al. 2022] found that the Transformer architecture yields a more data-efficient world model than Dreamer, and experimental results on TSSM show that the Transformer architecture performs well on tasks requiring long-term memory.

Transformer for sequential decision-making

Besides being integrated into traditional RL algorithms as a high-performance architecture, the Transformer can also be used directly as a sequential decision-making model. This is because RL can be viewed as a conditional sequence modeling problem: generating sequences of actions that yield high rewards.
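In the conditional-sequence-modeling view popularized by Decision Transformer (Chen et al., 2021), each step is conditioned on the undiscounted return-to-go R_t = r_t + r_{t+1} + ... + r_T, and a trajectory is flattened into interleaved (return-to-go, state, action) tokens. The sketch below shows only that data preparation; the tuple-based token format is a hypothetical stand-in for learned embeddings.

```python
from typing import List, Sequence, Tuple


def returns_to_go(rewards: Sequence[float]) -> List[float]:
    """R_t = r_t + r_{t+1} + ... + r_T (undiscounted, computed right to left)."""
    rtg, running = [0.0] * len(rewards), 0.0
    for t in range(len(rewards) - 1, -1, -1):
        running += rewards[t]
        rtg[t] = running
    return rtg


def interleave(states: Sequence, actions: Sequence, rewards: Sequence[float]) -> List[Tuple[str, object]]:
    """Flatten a trajectory into (R_1, s_1, a_1, R_2, s_2, a_2, ...).

    A model trained on such sequences predicts each action from the tokens
    before it; at test time the first return-to-go is set to the return one
    wants the agent to achieve.
    """
    tokens: List[Tuple[str, object]] = []
    for g, s, a in zip(returns_to_go(rewards), states, actions):
        tokens += [("rtg", g), ("state", s), ("action", a)]
    return tokens


print(returns_to_go([1.0, 0.0, 2.0]))  # [3.0, 2.0, 2.0]
print(interleave(["s1", "s2"], ["a1", "a2"], [1.0, 1.0])[:3])  # [('rtg', 2.0), ('state', 's1'), ('action', 'a1')]
```

Conditioning on a high target return is what turns plain sequence prediction into "generate actions that yield high rewards", without bootstrapped value targets.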

Given the Transformer's excellent accuracy in sequence prediction, Bootstrapped Transformer (BooT) [Wang et al., 2022] proposes using the Transformer to bootstrap-generate data while simultaneously using that data to train the model for sequential decision-making. Bootstrapping the Transformer for data augmentation can expand the size and coverage of offline datasets and thereby improve performance. Specifically, BooT compares different data-generation and bootstrapping schemes to analyze how bootstrapping facilitates policy learning. The results show that it can generate data consistent with the underlying MDP without additional constraints.

Transformer for general-purpose agents

Decision Transformer (DT) has had a large impact across a variety of tasks on offline data. Some researchers have begun to ask whether, as in CV and NLP, the Transformer can enable general-purpose agents that solve multiple different tasks or problems.

Generalize to multiple tasks

Drawing on the CV and NLP practice of pre-training on large-scale datasets, some researchers try to abstract general policies from large-scale multi-task datasets. Multi-Game Decision Transformer (MGDT) [Lee et al., 2022] is a variant of DT trained on a diverse dataset of expert and non-expert data; with a single set of parameters, it achieves near-human performance on Atari games. To obtain expert-level performance from non-expert data, MGDT introduces an expert action inference mechanism: using Bayes' rule and a predefined probability of expert-level return-to-go, it computes a posterior distribution over expert-level returns-to-go from the return-to-go prior distribution.
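The Bayesian step can be sketched over a discretized set of return-to-go values: posterior(R) is proportional to prior(R) * P(expert | R). The sketch assumes an exponential expert likelihood, P(expert | R) proportional to exp(kappa * (R - R_min) / (R_max - R_min)); this form, the bins and kappa are illustrative assumptions, so consult the MGDT paper for its exact definition.

```python
import math
from typing import List, Sequence


def expert_rtg_posterior(
    returns: Sequence[float], prior: Sequence[float], kappa: float
) -> List[float]:
    """Reweight a discrete return-to-go prior towards expert-level returns.

    posterior(R) is proportional to prior(R) * exp(kappa * normalized(R)),
    where normalized(R) maps the return range to [0, 1]. The exponential
    likelihood and kappa are illustrative assumptions.
    """
    lo, hi = min(returns), max(returns)
    span = (hi - lo) or 1.0  # avoid division by zero for a single return bin
    unnorm = [p * math.exp(kappa * (r - lo) / span) for r, p in zip(returns, prior)]
    total = sum(unnorm)
    return [u / total for u in unnorm]


bins = [0.0, 5.0, 10.0]   # hypothetical discretized returns-to-go
prior = [0.6, 0.3, 0.1]   # hypothetical model prior (mostly non-expert data)
post = expert_rtg_posterior(bins, prior, kappa=3.0)
print([round(p, 3) for p in post])
```

Sampling the return-to-go from this posterior, rather than the prior, conditions action prediction on returns the model believes are expert-level yet still plausible under the data; kappa = 0 leaves the prior unchanged.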

Similarly, Switch Trajectory Transformer (SwitchTT) [Lin et al., 2022] is a multi-task extension of Trajectory Transformer (TT). It uses a sparsely activated model, replacing the FFN layer with a mixture-of-experts layer, to achieve efficient multi-task offline learning. In addition, SwitchTT employs a distributional trajectory value estimator to model the uncertainty of value estimates. With these two enhancements, SwitchTT substantially outperforms TT in both performance and training speed. MGDT and SwitchTT leverage experience collected from multiple tasks and from policies of varying performance levels to learn a general policy. However, building large-scale multi-task datasets is not trivial.
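The sparse activation behind a mixture-of-experts layer can be shown with top-1 ("switch") routing for a single token. The sketch below is generic: the router weights and the two toy expert functions are hypothetical stand-ins for FFN experts, and details of SwitchTT's layer such as its sizes or any auxiliary load-balancing loss are not modeled.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]


def switch_layer(
    x: Vector,
    router_weights: Sequence[Vector],
    experts: Sequence[Callable[[Vector], Vector]],
) -> Vector:
    """Top-1 ("switch") mixture-of-experts routing for a single token x.

    The router scores every expert, but only the highest-scoring expert is
    evaluated (sparse activation); its output is scaled by the router
    probability so that, in a trainable model, the router receives gradients.
    """
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in router_weights]
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    best = max(range(len(experts)), key=lambda i: probs[i])
    return [probs[best] * y for y in experts[best](x)]


# Hypothetical router weights and two toy "FFN" experts.
router = [[1.0, 0.0], [0.0, 1.0]]
experts = [lambda v: [2.0 * t for t in v], lambda v: [-t for t in v]]
print(switch_layer([3.0, 0.0], router, experts))  # routed to expert 0
print(switch_layer([0.0, 3.0], router, experts))  # routed to expert 1
```

Because each token runs through only one expert, adding experts (for example, one specializing per task family) grows capacity without growing the per-token compute.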

Unlike large-scale CV and NLP datasets, which typically use massive Internet data with simple manual labeling, sequential decision-making data in RL often lacks action information and is not easy to label. Therefore, [Baker et al. 2022] proposed a semi-supervised scheme that learns a Transformer-based inverse dynamics model (IDM) to exploit large-scale online data without action information. The IDM uses both past and future observations to predict actions; trained on a small dataset with manually labeled actions, it is accurate enough to label large amounts of online video data.
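The key difference from a policy is that the IDM may look at future frames when labeling an action. The sketch below shows only the non-causal windowing used to pseudo-label unlabeled frames; the `toy_idm` function is a hypothetical stand-in for a trained Transformer IDM, and frames are reduced to 1-D positions for illustration.

```python
from typing import Callable, List, Sequence


def pseudo_label(
    frames: Sequence[float],
    idm: Callable[[Sequence[float], int], str],
    radius: int,
) -> List[str]:
    """Label action a_t (taken between frames t and t+1) for every t.

    Unlike a policy, the inverse dynamics model may see frames both before
    and after t: it receives frames[t - radius : t + radius + 1] (clipped at
    the ends) together with the position of frame t inside that window.
    Requires radius >= 1 so that frame t+1 is always in the window.
    """
    labels = []
    for t in range(len(frames) - 1):
        start = max(0, t - radius)
        window = frames[start : t + radius + 1]
        labels.append(idm(window, t - start))
    return labels


def toy_idm(window: Sequence[float], center: int) -> str:
    """Hypothetical stand-in for a trained IDM: infers motion from the next frame."""
    return "right" if window[center + 1] > window[center] else "left"


print(pseudo_label([0.0, 1.0, 2.0, 1.0], toy_idm, radius=1))  # ['right', 'right', 'left']
```

The resulting labels can then be used to train a causal policy by behavior cloning on the large unlabeled corpus.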

Many works in NLP have demonstrated the effectiveness of prompts for adapting to new tasks, and some work applies prompting to DT-based methods to achieve rapid adaptation. Prompt-based Decision Transformer (Prompt-DT) [Xu et al., 2022] samples a sequence of transitions from a few-shot demonstration dataset as a prompt and generalizes the few-shot policy to offline meta-RL tasks. [Reed et al. 2022] further leverage a prompt-based architecture to learn a generalist agent (Gato) through autoregressive sequence modeling on very large-scale datasets covering natural language, images, sequential decision-making and multi-modal data. Gato can perform a range of tasks from different domains, including text generation and decision-making.
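Prompting a DT-style model amounts to prepending a short trajectory segment from the target task to the agent's recent context. The sketch below assumes the interleaved (return-to-go, state, action) token layout with three tokens per step and a uniformly sampled segment; the function name and token values are hypothetical, and the demonstration must contain at least `prompt_steps` steps.

```python
import random
from typing import List, Sequence, Tuple

Token = Tuple[str, object]


def build_prompted_input(
    demos: Sequence[Sequence[Token]],
    context: Sequence[Token],
    prompt_steps: int,
    rng: random.Random,
) -> List[Token]:
    """Prepend a short segment of a few-shot demonstration to the current context.

    Each demonstration is a flat token list with three tokens per step
    (return-to-go, state, action). The sampled segment serves as the prompt
    that carries task-specific information to the model.
    """
    demo = rng.choice(demos)
    n_steps = len(demo) // 3
    start = rng.randrange(n_steps - prompt_steps + 1)
    prompt = list(demo[3 * start : 3 * (start + prompt_steps)])
    return prompt + list(context)


# Hypothetical demonstration from the target task and the agent's recent history.
demo = [("rtg", 5.0), ("state", "d0"), ("action", "x"),
        ("rtg", 4.0), ("state", "d1"), ("action", "y"),
        ("rtg", 2.0), ("state", "d2"), ("action", "x")]
context = [("rtg", 3.0), ("state", "s0"), ("action", "z")]
tokens = build_prompted_input([demo], context, prompt_steps=2, rng=random.Random(0))
print(len(tokens))  # 9: two prompt steps (6 tokens) followed by 3 context tokens
```

Since only the prompt changes between tasks, adapting to a new task needs a few demonstrations rather than gradient updates.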

[Laskin et al. 2022] proposed Algorithm Distillation (AD), which trains a Transformer on across-episode sequences recorded from the learning process of a single-task RL algorithm. As a result, even on new tasks, the Transformer can learn to gradually improve its policy during autoregressive generation.
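What distinguishes this setup from ordinary offline RL is how training sequences are cut: episodes are kept in the order the source algorithm produced them and windows span episode boundaries. The sketch below shows only that data preparation; the step format and the toy learning history are hypothetical.

```python
from typing import List, Sequence, Tuple

Step = Tuple[str, str, float]  # (observation, action, reward)


def across_episode_windows(
    learning_history: Sequence[Sequence[Step]], context: int
) -> List[List[Step]]:
    """Turn a source RL algorithm's learning history into training windows.

    Episodes are concatenated in the order they were generated (not
    shuffled), so a window longer than one episode shows the policy
    improving over time; that improvement is what the Transformer is meant
    to imitate in context.
    """
    stream = [step for episode in learning_history for step in episode]
    return [stream[i : i + context] for i in range(len(stream) - context + 1)]


# Hypothetical learning history: rewards rise as the source agent improves.
history = [
    [("o", "a", 0.0), ("o", "a", 0.0)],
    [("o", "a", 1.0), ("o", "a", 0.0)],
    [("o", "a", 1.0), ("o", "a", 1.0)],
]
windows = across_episode_windows(history, context=4)
print(len(windows))  # 3
```

Shuffling episodes or cutting windows at episode boundaries would remove the learning-progress signal and reduce this to ordinary behavior cloning.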

Generalize to wider domains

Beyond generalizing to multiple tasks, the Transformer is also a powerful "universal" model that can be applied across a range of domains related to sequential decision-making. Inspired by masked language modeling [Devlin et al., 2018] in NLP, [Carroll et al. 2022] proposed Uni[MASK], which unifies a variety of commonly studied settings as masked inference problems, including behavior cloning, offline RL, goal-conditioned RL (GCRL), past/future inference, and dynamics prediction. Uni[MASK] compares different masking schemes, including task-specific masks, random masks, and fine-tuned variants. The results show that a single Transformer trained with random masks can solve arbitrary inference tasks.
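The masking view can be illustrated with a simplified token layout in which each timestep holds a state token and an action token. In the hypothetical encoding below (it omits return and goal tokens, and the function names are illustrative, not Uni[MASK]'s code), each inference task becomes a different pattern of visible and hidden tokens over the same sequence, and random masks sample such patterns during training.

```python
import random
from typing import List

# Each timestep holds two tokens, [state, action]; 1 = visible, 0 = to be predicted.
Mask = List[List[int]]


def behavior_cloning_mask(num_steps: int, t: int) -> Mask:
    """Predict a_t from s_0..s_t and a_0..a_{t-1}; everything later is hidden."""
    return [[int(k <= t), int(k < t)] for k in range(num_steps)]


def forward_dynamics_mask(num_steps: int, t: int) -> Mask:
    """Predict s_{t+1} from the history up to and including (s_t, a_t)."""
    return [[int(k <= t), int(k <= t)] for k in range(num_steps)]


def random_mask(num_steps: int, p_hidden: float, rng: random.Random) -> Mask:
    """Hide each token independently with probability p_hidden (training-time masks)."""
    return [[int(rng.random() >= p_hidden) for _ in range(2)] for _ in range(num_steps)]


print(behavior_cloning_mask(3, 1))  # [[1, 1], [1, 0], [0, 0]]
print(forward_dynamics_mask(3, 1))  # [[1, 1], [1, 1], [0, 0]]
```

A model trained on random masks sees many such patterns, which is why task-specific masks like the two above can be answered by the same network at inference time.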

Furthermore, [Reid et al. 2022] found that fine-tuning DT from a Transformer pre-trained on language data, or on multi-modal data that includes language, is beneficial. This suggests that knowledge from non-RL domains can be transferred to RL training through Transformers.

Interested readers can read the original text of the paper to learn more about the research details.
