Technology peripherals

Technology peripherals

AI

AI

The single-view NeRF algorithm S^3-NeRF uses multi-illumination information to restore scene geometry and material information.

The single-view NeRF algorithm S^3-NeRF uses multi-illumination information to restore scene geometry and material information.

The single-view NeRF algorithm S^3-NeRF uses multi-illumination information to restore scene geometry and material information.

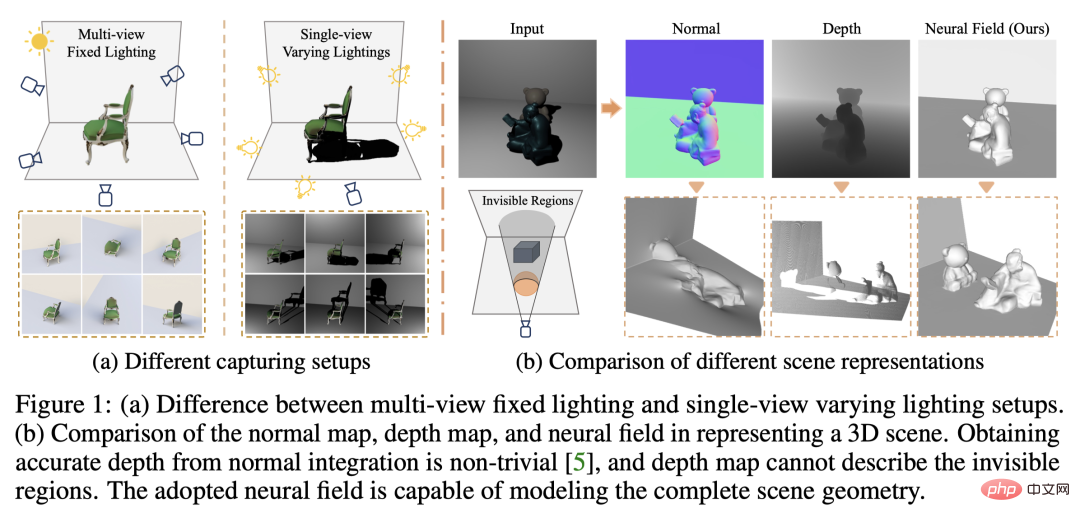

Currently, 3D image reconstruction work usually uses a multi-view stereo reconstruction method (Multi-view Stereo) that captures the target scene from multiple viewpoints (multi-view) under constant natural lighting conditions. However, these methods usually assume Lambertian surfaces and have difficulty recovering high-frequency details.

Another method of scene reconstruction is to utilize images captured from a fixed viewpoint but different point lights. Photometric Stereo methods, for example, take this setup and use its shading information to reconstruct the surface details of non-Lambertian objects. However, existing single-view methods usually use normal maps or depth maps to represent the visible surface, which makes them unable to describe the back side of objects and occluded areas, and can only reconstruct 2.5D scene geometry. Additionally, normal maps cannot handle depth discontinuities.

In a recent study, researchers from the University of Hong Kong, the Chinese University of Hong Kong (Shenzhen), Nanyang Technological University, and MIT-IBM Watson AI Lab proposed a method to use a single View multi-light (single-view, multi-lights) images to reconstruct complete 3D scenes.

- ## Paper link: https://arxiv.org/abs/2210.08936

- Paper homepage: https://ywq.github.io/s3nerf/

- Code link: https://github.com/ywq/s3nerf

Unlike existing single-view approaches based on normal maps or depth maps, S 3-NeRF is based on neural scene representation and uses the shading and shadow information in the scene to reconstruct the entire 3D scene (including visible/invisible areas). Neural scene representation methods use multilayer perceptrons (MLPs) to model continuous 3D space, mapping 3D points to scene attributes such as density, color, etc. Although neural scene representation has made significant progress in multi-view reconstruction and new view synthesis, it has been less explored in single-view scene modeling. Unlike existing neural scene representation-based methods that rely on multi-view photo consistency, S3-NeRF mainly optimizes the neural field by utilizing shading and shadow information under a single view.

We found that simply introducing light source position information directly into NeRF as input cannot reconstruct the geometry and appearance of the scene. To make better use of the captured photometric stereo images, we explicitly model the surface geometry and BRDF using a reflection field, and employ physically based rendering to calculate the color of the scene's 3D points, which is obtained via stereo rendering. The color of the two-dimensional pixel corresponding to the ray. At the same time, we perform differentiable modeling of the visibility of the scene and calculate the visibility of the point by tracing the rays between the 3D point and the light source. However, considering the visibility of all sample points on a ray is computationally expensive, so we optimize shadow modeling by calculating the visibility of surface points obtained by ray tracing.

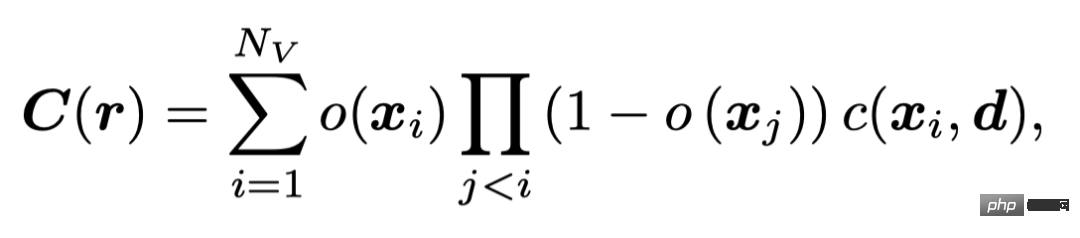

We use an occupancy field similar to UNISURF to represent scene geometry. UNISURF maps the 3D point coordinates and line of sight direction to the occupancy value and color of the point through MLP, and obtains the color of the pixel through stereo rendering,

Nv# is the number of sampling points on each ray.

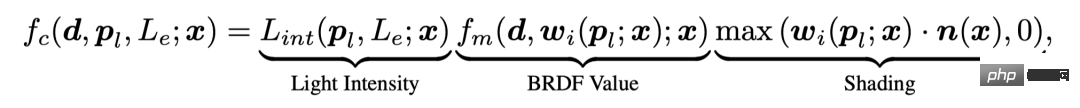

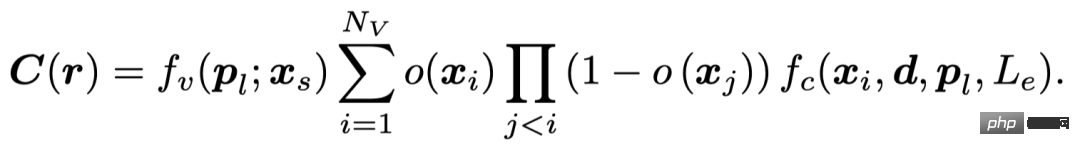

In order to effectively utilize the shading information in photometric stereo images, S3-NeRF explicitly models the BRDF of the scene and uses physically based Render the color of the 3D point. At the same time, we model the light visibility of 3D points in the scene to take advantage of the rich shadow cues in the image, and obtain the final pixel value through the following equation.

Physically Based Rendering Model

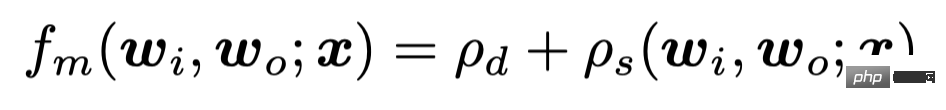

Our approach considers non-Lambertian surfaces and spatially varying BRDFs. The value of point x observed from the line of sight direction d under the near-field point light source (pl, Le) can be expressed as

where, we consider For the light attenuation problem of point light sources, the intensity of light incident on the point is calculated through the distance between the light source and the point. We use a BRDF model that considers diffuse reflection and specular reflection

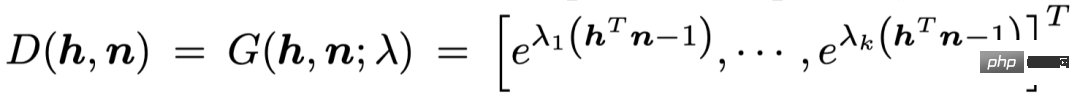

to represent the specular reflectance through a weighted combination of Sphere Gaussian basis

Shadow modeling

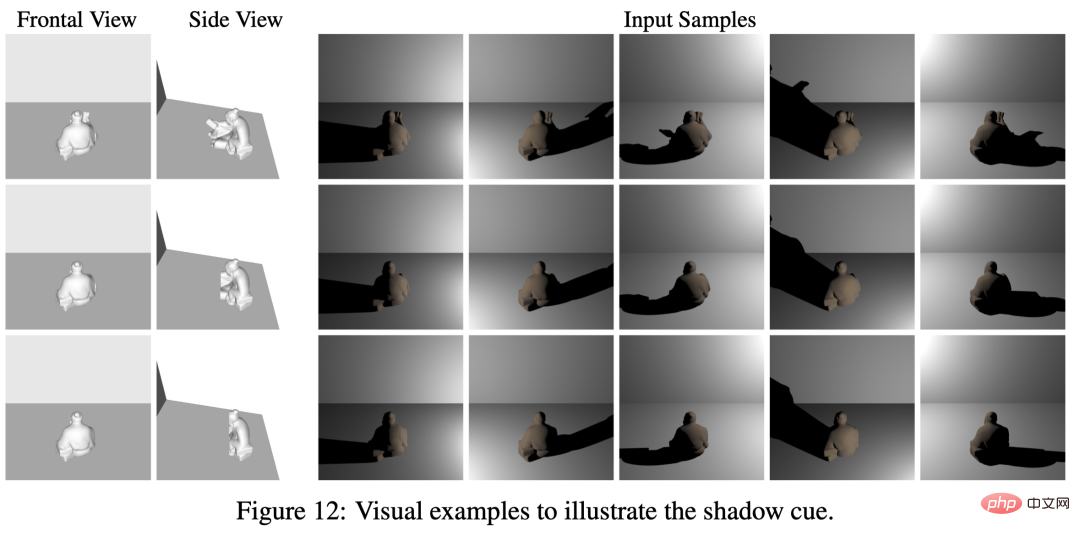

Shadows are one of the crucial clues in scene geometry reconstruction. The three objects in the picture have the same shape and appearance in front view, but have different shapes on the back. Through the shadows produced under different lighting, we can observe that the shapes of the shadows are different, which reflects the geometric information of the invisible areas in the front view. The light creates certain constraints on the back contour of the object through the shadows reflected in the background.

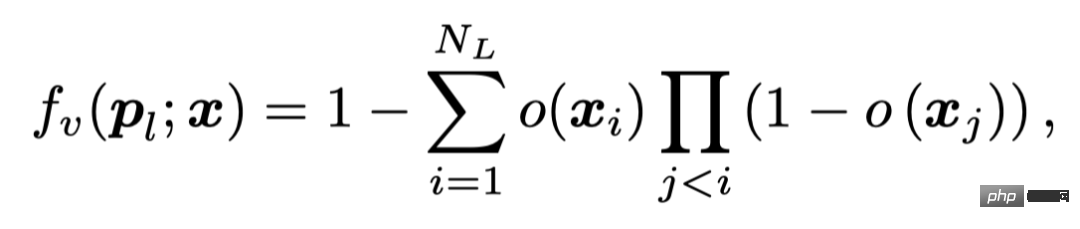

We reflect the light visibility of the point by calculating the occupancy value between the 3D point and the light source

Among them, NL is the number of points sampled on the point-light source line segment.

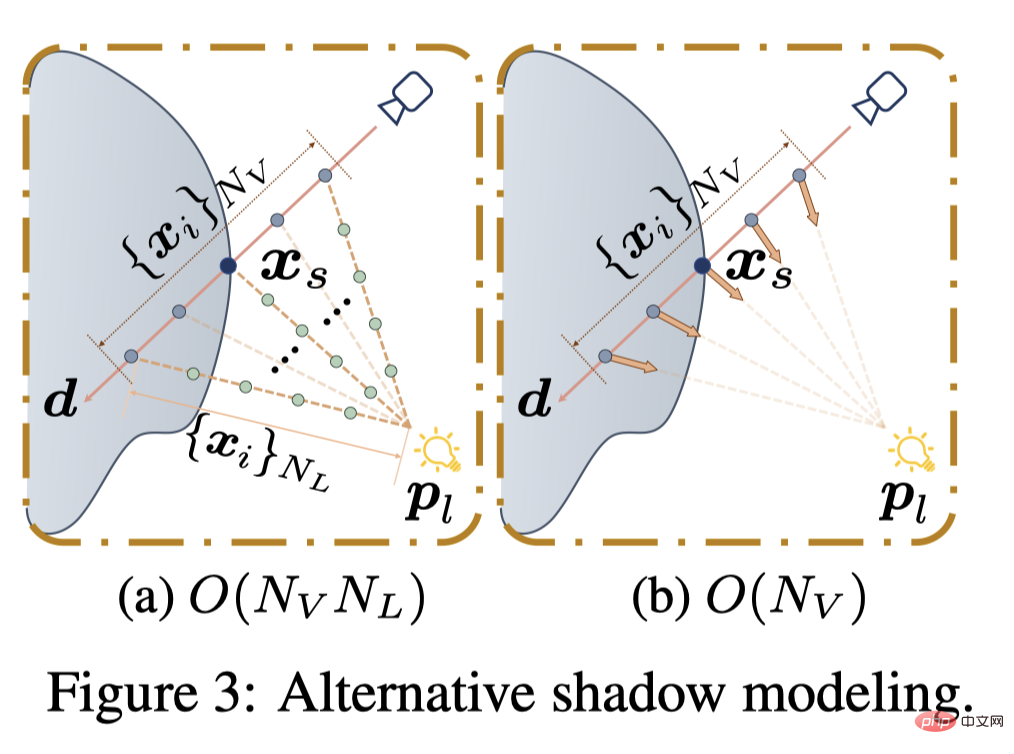

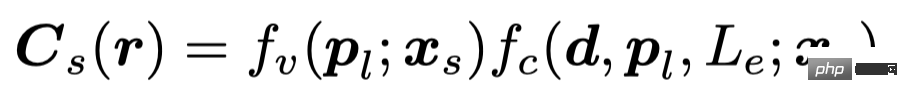

Due to the large cost of calculating the visibility of all Nv points sampled by pixel points along the ray (O (NvNL)), some existing methods use MLP to directly return the visibility of the point (O (Nv )), or pre-extract surface points after obtaining the scene geometry (O (NL)). S3-NeRF calculates the light visibility of the pixel online through the surface points located by root-finding, and expresses the pixel value through the following formula.

Scene Optimization

Our method does not require supervision of shadows; Rely on image reconstruction loss for optimization. Considering that there are no additional constraints brought by other perspectives in a single perspective, if a sampling strategy like UNISURF is adopted to gradually reduce the sampling range, it will cause the model to begin to degrade after the sampling interval is reduced. Therefore, we adopt a strategy of joint stereo rendering and surface rendering, using root-finding to locate the surface points to render the color and calculate L1 loss.

Experimental results

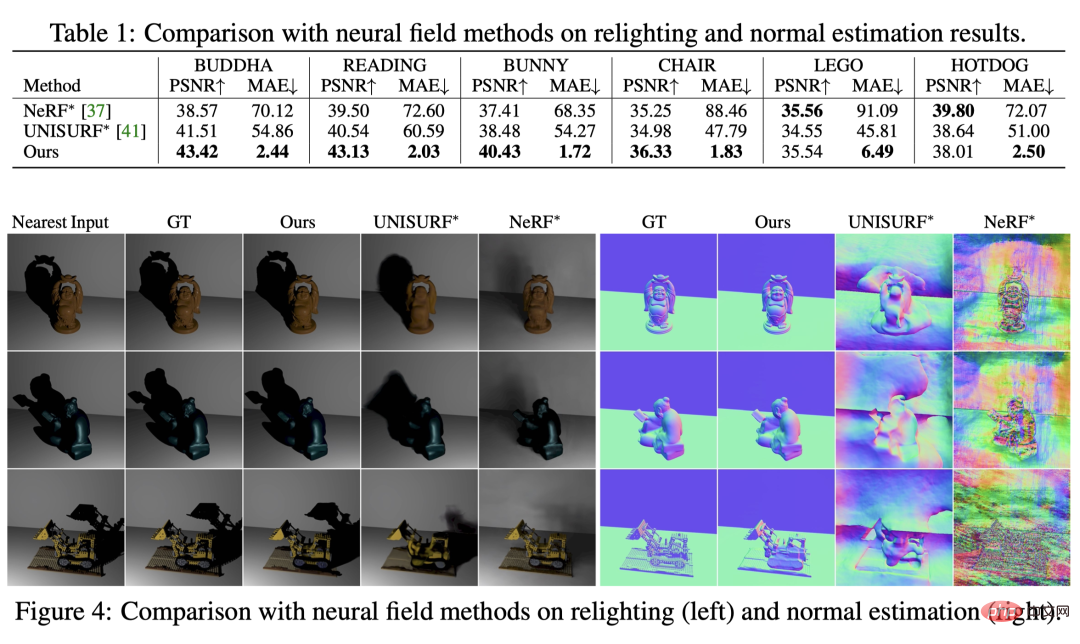

Comparison with neural radiation field method

We first compare with two baseline methods based on neural radiation fields (due to different tasks, we introduce light source information in their color MLP). You can see that they are unable to reconstruct scene geometry or accurately generate shadows under new lighting.

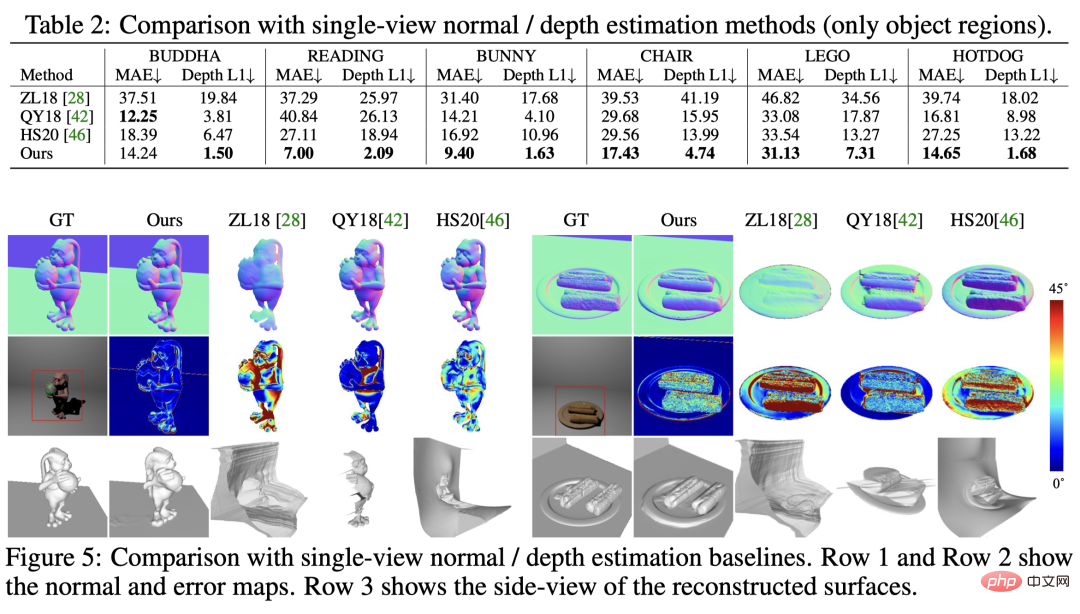

Comparison with single-view shape estimation method

Now and now From the comparison of single-view normal/depth estimation methods, we can see that our method achieves the best results in both normal estimation and depth estimation, and can simultaneously reconstruct visible and invisible areas in the scene.

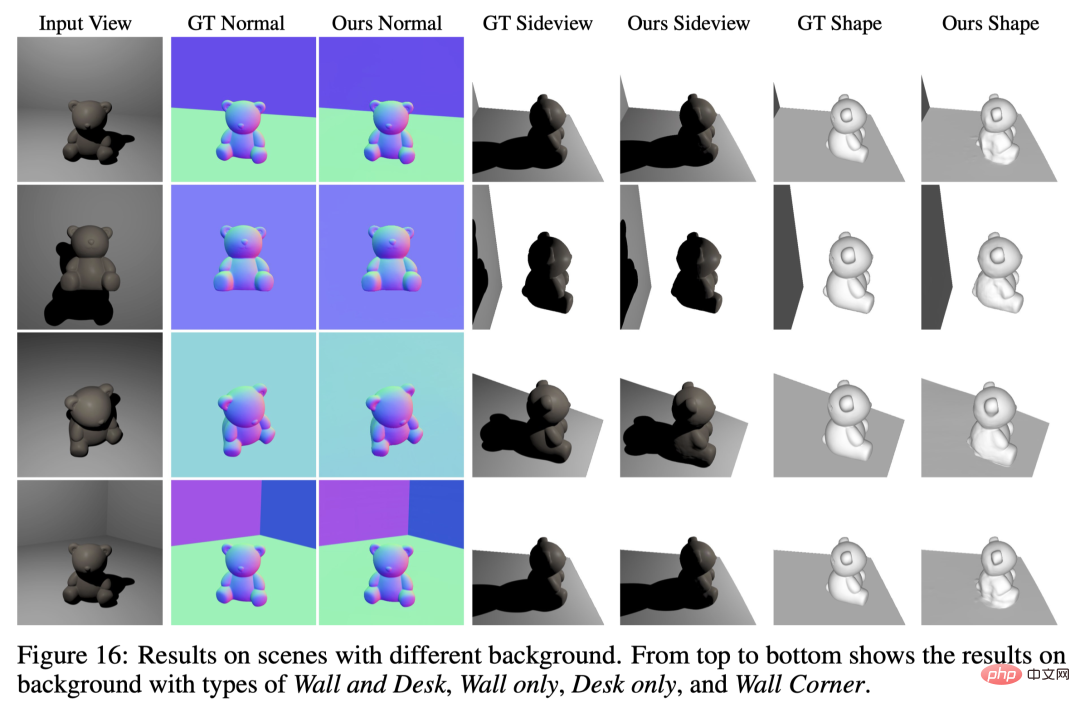

Scene reconstruction for different backgrounds

Our method is applicable to various scenes with different background conditions.

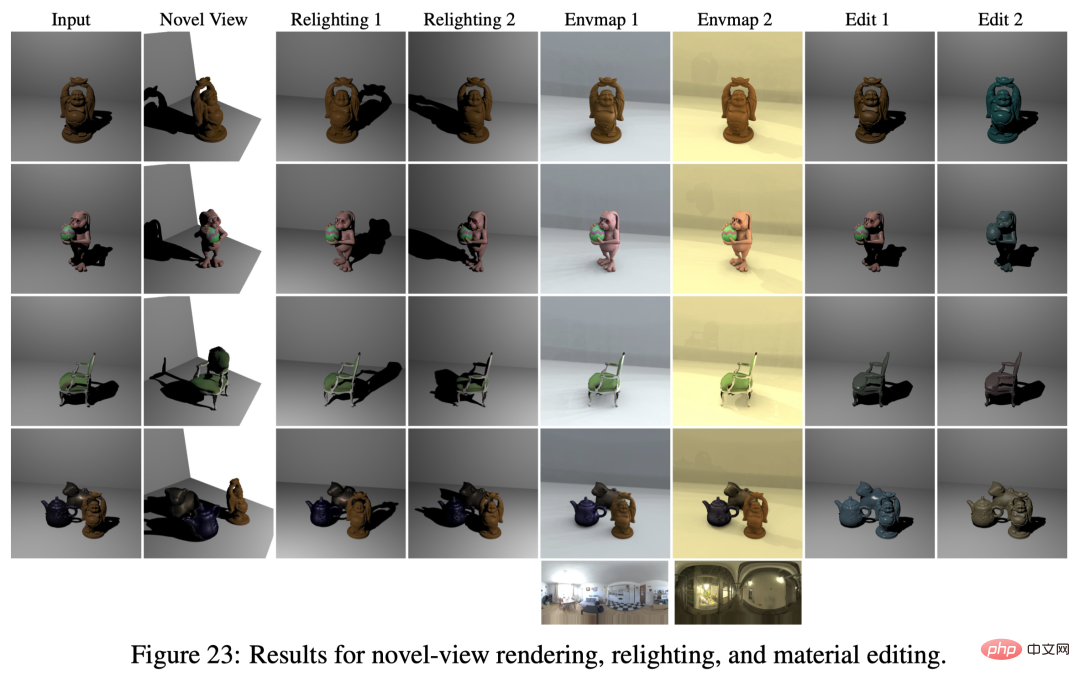

New view rendering, changing lighting and material editing

Neural based For the scene modeling of the reflection field, we have successfully decoupled the geometry/material/lighting of the scene, etc., so it can be applied to applications such as new view rendering, changing scene lighting, and material editing.

Reconstruction of real shooting scenes

We shot three real scenarios to explore its practicality. We fixed the camera position, used the mobile phone's flashlight as a point light source (the ambient light source was turned off), and moved the handheld flashlight randomly to capture images under different light sources. This setup does not require light source calibration, we apply SDPS‑Net to get a rough estimate of the light source direction, and initialize the light source position by roughly estimating the camera-object and light source-object relative distances. Light source positions are jointly optimized with the scene's geometry and BRDF during training. It can be seen that even with a more casual data capture setting (without calibration of the light source), our method can still reconstruct the 3D scene geometry well.

Summary

- S3-NeRF images captured under multiple point light sources using a single view to optimize the neural reflection field to reconstruct 3D scene geometry and material information.

- By utilizing shading and shadow clues, S3-NeRF can effectively restore the geometry of visible/invisible areas in the scene, achieving Reconstruction of complete scene geometry/BRDF from monocular perspective.

- Various experiments show that our method can reconstruct scenes of various complex geometries/materials, and can cope with backgrounds of various geometries/materials and different light quantities/light source distributions.

The above is the detailed content of The single-view NeRF algorithm S^3-NeRF uses multi-illumination information to restore scene geometry and material information.. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

Why is Gaussian Splatting so popular in autonomous driving that NeRF is starting to be abandoned?

Jan 17, 2024 pm 02:57 PM

Why is Gaussian Splatting so popular in autonomous driving that NeRF is starting to be abandoned?

Jan 17, 2024 pm 02:57 PM

Written above & the author’s personal understanding Three-dimensional Gaussiansplatting (3DGS) is a transformative technology that has emerged in the fields of explicit radiation fields and computer graphics in recent years. This innovative method is characterized by the use of millions of 3D Gaussians, which is very different from the neural radiation field (NeRF) method, which mainly uses an implicit coordinate-based model to map spatial coordinates to pixel values. With its explicit scene representation and differentiable rendering algorithms, 3DGS not only guarantees real-time rendering capabilities, but also introduces an unprecedented level of control and scene editing. This positions 3DGS as a potential game-changer for next-generation 3D reconstruction and representation. To this end, we provide a systematic overview of the latest developments and concerns in the field of 3DGS for the first time.

How to remove author and last modified information in Microsoft Word

Apr 15, 2023 am 11:43 AM

How to remove author and last modified information in Microsoft Word

Apr 15, 2023 am 11:43 AM

Microsoft Word documents contain some metadata when saved. These details are used for identification on the document, such as when it was created, who the author was, date modified, etc. It also has other information such as number of characters, number of words, number of paragraphs, and more. If you might want to remove the author or last modified information or any other information so that other people don't know the values, then there is a way. In this article, let’s see how to remove a document’s author and last modified information. Remove author and last modified information from Microsoft Word document Step 1 – Go to

Learn about 3D Fluent emojis in Microsoft Teams

Apr 24, 2023 pm 10:28 PM

Learn about 3D Fluent emojis in Microsoft Teams

Apr 24, 2023 pm 10:28 PM

You must remember, especially if you are a Teams user, that Microsoft added a new batch of 3DFluent emojis to its work-focused video conferencing app. After Microsoft announced 3D emojis for Teams and Windows last year, the process has actually seen more than 1,800 existing emojis updated for the platform. This big idea and the launch of the 3DFluent emoji update for Teams was first promoted via an official blog post. Latest Teams update brings FluentEmojis to the app Microsoft says the updated 1,800 emojis will be available to us every day

CLIP-BEVFormer: Explicitly supervise the BEVFormer structure to improve long-tail detection performance

Mar 26, 2024 pm 12:41 PM

CLIP-BEVFormer: Explicitly supervise the BEVFormer structure to improve long-tail detection performance

Mar 26, 2024 pm 12:41 PM

Written above & the author’s personal understanding: At present, in the entire autonomous driving system, the perception module plays a vital role. The autonomous vehicle driving on the road can only obtain accurate perception results through the perception module. The downstream regulation and control module in the autonomous driving system makes timely and correct judgments and behavioral decisions. Currently, cars with autonomous driving functions are usually equipped with a variety of data information sensors including surround-view camera sensors, lidar sensors, and millimeter-wave radar sensors to collect information in different modalities to achieve accurate perception tasks. The BEV perception algorithm based on pure vision is favored by the industry because of its low hardware cost and easy deployment, and its output results can be easily applied to various downstream tasks.

Choose camera or lidar? A recent review on achieving robust 3D object detection

Jan 26, 2024 am 11:18 AM

Choose camera or lidar? A recent review on achieving robust 3D object detection

Jan 26, 2024 am 11:18 AM

0.Written in front&& Personal understanding that autonomous driving systems rely on advanced perception, decision-making and control technologies, by using various sensors (such as cameras, lidar, radar, etc.) to perceive the surrounding environment, and using algorithms and models for real-time analysis and decision-making. This enables vehicles to recognize road signs, detect and track other vehicles, predict pedestrian behavior, etc., thereby safely operating and adapting to complex traffic environments. This technology is currently attracting widespread attention and is considered an important development area in the future of transportation. one. But what makes autonomous driving difficult is figuring out how to make the car understand what's going on around it. This requires that the three-dimensional object detection algorithm in the autonomous driving system can accurately perceive and describe objects in the surrounding environment, including their locations,

Paint 3D in Windows 11: Download, Installation, and Usage Guide

Apr 26, 2023 am 11:28 AM

Paint 3D in Windows 11: Download, Installation, and Usage Guide

Apr 26, 2023 am 11:28 AM

When the gossip started spreading that the new Windows 11 was in development, every Microsoft user was curious about how the new operating system would look like and what it would bring. After speculation, Windows 11 is here. The operating system comes with new design and functional changes. In addition to some additions, it comes with feature deprecations and removals. One of the features that doesn't exist in Windows 11 is Paint3D. While it still offers classic Paint, which is good for drawers, doodlers, and doodlers, it abandons Paint3D, which offers extra features ideal for 3D creators. If you are looking for some extra features, we recommend Autodesk Maya as the best 3D design software. like

Get a virtual 3D wife in 30 seconds with a single card! Text to 3D generates a high-precision digital human with clear pore details, seamlessly connecting with Maya, Unity and other production tools

May 23, 2023 pm 02:34 PM

Get a virtual 3D wife in 30 seconds with a single card! Text to 3D generates a high-precision digital human with clear pore details, seamlessly connecting with Maya, Unity and other production tools

May 23, 2023 pm 02:34 PM

ChatGPT has injected a dose of chicken blood into the AI industry, and everything that was once unthinkable has become basic practice today. Text-to-3D, which continues to advance, is regarded as the next hotspot in the AIGC field after Diffusion (images) and GPT (text), and has received unprecedented attention. No, a product called ChatAvatar has been put into low-key public beta, quickly garnering over 700,000 views and attention, and was featured on Spacesoftheweek. △ChatAvatar will also support Imageto3D technology that generates 3D stylized characters from AI-generated single-perspective/multi-perspective original paintings. The 3D model generated by the current beta version has received widespread attention.

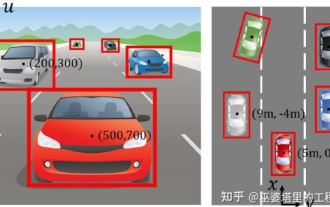

An in-depth interpretation of the 3D visual perception algorithm for autonomous driving

Jun 02, 2023 pm 03:42 PM

An in-depth interpretation of the 3D visual perception algorithm for autonomous driving

Jun 02, 2023 pm 03:42 PM

For autonomous driving applications, it is ultimately necessary to perceive 3D scenes. The reason is simple. A vehicle cannot drive based on the perception results obtained from an image. Even a human driver cannot drive based on an image. Because the distance of objects and the depth information of the scene cannot be reflected in the 2D perception results, this information is the key for the autonomous driving system to make correct judgments on the surrounding environment. Generally speaking, the visual sensors (such as cameras) of autonomous vehicles are installed above the vehicle body or on the rearview mirror inside the vehicle. No matter where it is, what the camera gets is the projection of the real world in the perspective view (PerspectiveView) (world coordinate system to image coordinate system). This view is very similar to the human visual system,