Google and OpenAI scholars talk about AI: Language models are working hard to 'conquer' mathematics

If you ask what computers are good at, mathematics is bound to be on the list. Yet after years of study, leading researchers have found some surprising results about how well computers actually handle mathematics.

Researchers at the University of California, Berkeley, OpenAI and Google have made great strides with language models, developing systems such as GPT-3 and DALL·E 2. Yet until recently, language models could not reliably solve simple math problems stated in words, such as: "Alice has five more balls than Bob, who has two balls after he gives four to Charlie. How many balls does Alice have?" For a language model, giving the correct answer can be surprisingly "difficult".
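Worked step by step, the ball problem hinges on one inference the question leaves implicit. The sketch below assumes that "five more balls than Bob" refers to Bob's count before he gave four away (if it referred to his remaining two balls, the answer would be 7 instead). The function and parameter names are hypothetical, for illustration only.

```python
def alice_balls(bob_after: int = 2, given_to_charlie: int = 4, alice_extra: int = 5) -> int:
    """Solve the ball problem step by step (hypothetical helper for illustration)."""
    # Step 1: undo the gift to recover Bob's count before he gave balls to Charlie.
    bob_before = bob_after + given_to_charlie
    # Step 2 (assumed reading): Alice has `alice_extra` more than Bob's original count.
    return bob_before + alice_extra


print(alice_balls())  # 11
```

Each line of the function is one small reasoning step; getting either step wrong changes the final answer.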

“When we say computers are very good at math, we mean that they are very good at very specific things,” said Guy Gur-Ari, a machine learning expert at Google. Computers are indeed good at arithmetic, but outside those narrow patterns they are helpless and cannot answer even simple questions posed in words.

Google researcher Ethan Dyer has pointed to a common assumption: that people who do mathematics research have a rigid reasoning system, with a clear line between what they know and what they don't.

Word problems, and quantitative reasoning problems in general, are tricky because, unlike many other tasks, they demand both robustness and rigor: a mistake at any step of the process leads to a wrong answer. DALL·E is impressive at drawing, even though its images are sometimes strange, with missing fingers or odd-looking eyes. We can accept that, but when a model makes mistakes in math, our tolerance is very small. Vineet Kosaraju, a machine learning expert at OpenAI, has expressed the same idea: "Our tolerance for mathematical errors made by language models (such as reading 10 as the digits 1 and 0 instead of the number 10) is still relatively small."
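A rough way to see why step-by-step rigor matters: assume, purely for illustration (this model is not from the article), that each of the k steps in a solution is correct independently with probability p. The chance that every step is correct then shrinks geometrically with the number of steps:

```latex
P(\text{all } k \text{ steps correct}) = p^{k},
\qquad \text{e.g. } p = 0.95,\; k = 10 \;\Rightarrow\; 0.95^{10} \approx 0.60
```

Under this assumption, even a model that gets 95% of individual steps right would finish a ten-step solution without any error only about 60% of the time.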

"We study mathematics simply because we find it self-contained and very interesting," said Karl Cobbe, a machine learning expert at OpenAI.

As machine learning models are trained on larger data samples, they become more robust and make fewer errors. But for quantitative reasoning, scaling up appears to go only so far. The researchers realized that the mistakes language models make in math seemed to call for a more targeted approach.

Last year, two research teams, one at the University of California, Berkeley and one at OpenAI, released the MATH and GSM8K datasets, respectively. Together they contain thousands of math problems covering geometry, algebra, elementary mathematics and more. “We wanted to see if this was a problem with the dataset,” said Steven Basart, a researcher at the Center for AI Safety who works on mathematics. Language models were known to be poor at word problems, but how poorly did they actually perform? And could the problem be solved with larger, better-formatted datasets?
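Scoring a model on datasets like these requires pulling a single final answer out of a worked solution. A minimal sketch, assuming the conventions of the public releases: GSM8K solutions end with a line of the form `#### <answer>`, and MATH solutions put the final answer in `\boxed{...}`. The helper names are hypothetical, and real graders also normalize equivalent forms (for example `0.5` vs `\frac{1}{2}`), which this sketch does not attempt.

```python
import re
from typing import Optional


def extract_gsm8k_answer(solution: str) -> Optional[str]:
    """Return the final answer written after the last '####' marker, or None."""
    matches = re.findall(r"####\s*(.+)", solution)
    if not matches:
        return None
    # Drop thousands separators so "1,200" and "1200" compare equal.
    return matches[-1].strip().replace(",", "")


def extract_boxed_answer(solution: str) -> Optional[str]:
    """Return the contents of the last \\boxed{...}, allowing nested braces."""
    start = solution.rfind("\\boxed{")
    if start == -1:
        return None
    i = start + len("\\boxed{")
    depth = 1
    for j in range(i, len(solution)):
        if solution[j] == "{":
            depth += 1
        elif solution[j] == "}":
            depth -= 1
            if depth == 0:
                return solution[i:j]
    return None  # unbalanced braces


print(extract_gsm8k_answer("Bob had 2 + 4 = 6 balls, so Alice has 6 + 5 = 11.\n#### 11"))  # 11
print(extract_boxed_answer(r"The answer is $\boxed{\frac{1}{2}}$."))  # \frac{1}{2}
```

Brace counting is used for `\boxed{}` because a simple regex would stop at the first closing brace inside answers such as `\frac{1}{2}`.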

On the MATH dataset, the best language model reached 7% accuracy, compared with 40% for human graduate students and 90% for math olympiad champions. On GSM8K (grade-school-level problems), the model reached 20% accuracy. In its experiments, OpenAI used two techniques, fine-tuning and verification. With verification, the model gets to see many examples of its own mistakes, which proved to be a valuable finding.
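The verification idea can be sketched as "sample several candidate solutions, score each one, keep the best". In OpenAI's work the scorer is a trained model; here a hand-written rule (`toy_verifier`, hypothetical) stands in for it by checking whether simple claimed steps like `2 + 4 = 6` are arithmetically correct. The sample solutions are made up for illustration.

```python
import re
from typing import Callable, List, Tuple

# Matches simple claimed steps such as "2 + 4 = 6".
_STEP = re.compile(r"(\d+)\s*([+\-*])\s*(\d+)\s*=\s*(\d+)")
_OPS = {"+": lambda a, b: a + b, "-": lambda a, b: a - b, "*": lambda a, b: a * b}


def toy_verifier(solution: str) -> float:
    """Hypothetical stand-in for a trained verifier: the fraction of
    'a op b = c' steps in the solution that are arithmetically correct."""
    steps = _STEP.findall(solution)
    if not steps:
        return 0.0
    correct = sum(_OPS[op](int(a), int(b)) == int(c) for a, op, b, c in steps)
    return correct / len(steps)


def best_of_n(candidates: List[str], verifier: Callable[[str], float]) -> Tuple[str, float]:
    """Score every sampled solution and keep the highest-scoring one."""
    if not candidates:
        raise ValueError("need at least one candidate solution")
    scored = [(c, verifier(c)) for c in candidates]
    return max(scored, key=lambda pair: pair[1])  # ties go to the earliest sample


samples = [
    "Bob had 2 + 4 = 6 balls, so Alice has 6 + 5 = 11.",
    "Bob had 2 + 4 = 7 balls, so Alice has 7 + 5 = 12.",
    "Alice has 2 + 5 = 8.",
]
print(best_of_n(samples, toy_verifier))  # picks the first sample, score 1.0
```

A rule this simple cannot catch a misreading of the question, only arithmetic slips; a learned verifier is meant to judge the whole solution.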

At the time, OpenAI’s model would have needed 100 times more training data to reach 80% accuracy on GSM8K. But in June of this year, Google released Minerva, which reached 78% accuracy, a result that exceeded expectations and arrived sooner than the researchers had anticipated.

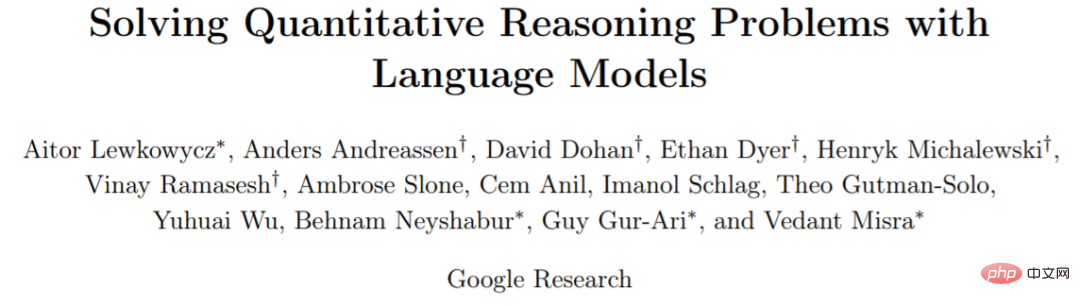

Paper: https://arxiv.org/pdf/2206.14858.pdf

Minerva is built on Google’s own Pathways Language Model (PaLM) and is further trained on additional mathematical data, such as arXiv papers and other text written in LaTeX and similar mathematical formats. Minerva also uses chain-of-thought prompting, in which it breaks a larger problem into smaller steps. In addition, it uses majority voting: instead of asking the model for one answer, it asks for 100 answers and then picks the most common one.
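Both strategies are easy to sketch. Below, a one-shot chain-of-thought prompt shows the model a worked, step-by-step solution before the new problem, and majority voting keeps the most common final answer across samples. The exemplar text and function names are hypothetical; Minerva's actual prompts are not reproduced here, and the model call itself is left out.

```python
from collections import Counter
from typing import List, Optional

# Hypothetical one-shot exemplar; not Minerva's actual prompt.
COT_EXEMPLAR = (
    "Problem: Alice has five more balls than Bob, who has two balls after he "
    "gives four to Charlie. How many balls does Alice have?\n"
    "Solution: Before the gift, Bob had 2 + 4 = 6 balls. "
    "Alice has 6 + 5 = 11 balls. Final answer: 11\n\n"
)


def build_cot_prompt(problem: str) -> str:
    """Prepend a worked, step-by-step exemplar so the model imitates it."""
    return f"{COT_EXEMPLAR}Problem: {problem}\nSolution:"


def majority_vote(final_answers: List[Optional[str]]) -> Optional[str]:
    """Return the most common final answer among sampled solutions.

    Samples whose answer could not be extracted (None or blank) are skipped;
    ties go to the answer seen first.
    """
    votes = Counter(a.strip() for a in final_answers if a and a.strip())
    if not votes:
        return None
    answer, _count = votes.most_common(1)[0]
    return answer


# In practice these answers would be parsed from many sampled completions.
print(majority_vote(["11", "7", "11", None, " 11 ", "12"]))  # 11
```

Voting only over final answers means two samples with different reasoning but the same result count as agreement, which is what lets occasional faulty chains be outvoted.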

The gains from these strategies are large: Minerva reached about 50% accuracy on MATH and close to 80% on GSM8K and on the STEM questions of MMLU (a more general benchmark that includes chemistry and biology). When Minerva was asked to solve slightly modified versions of problems, it performed just as well, showing that its ability does not come from memorization alone.
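One simple way to build such tweaked problems is to keep a problem's wording but swap in new numbers and recompute the answer, so a memorized answer no longer helps. The sketch below does this for the ball problem quoted earlier; it is a hypothetical illustration, not the procedure the Minerva authors used, and it assumes "more balls than Bob" refers to Bob's count before the gift.

```python
import random
from typing import Tuple

# Hypothetical template based on the ball problem quoted earlier.
TEMPLATE = (
    "Alice has {extra} more balls than Bob, who has {after} balls after he "
    "gives {given} to Charlie. How many balls does Alice have?"
)


def make_variant(rng: random.Random) -> Tuple[str, int]:
    """Return a copy of the problem with new numbers and its recomputed answer."""
    extra, after, given = (rng.randint(1, 9) for _ in range(3))
    # Assumed reading: "more balls than Bob" means Bob's count before the gift.
    answer = after + given + extra
    return TEMPLATE.format(extra=extra, after=after, given=given), answer


rng = random.Random(0)  # fixed seed so the variants are reproducible
for _ in range(3):
    question, answer = make_variant(rng)
    print(answer, "<-", question)
```

Comparing a model's accuracy on the original problems with its accuracy on such variants gives a rough signal of whether it is reasoning or recalling.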

Minerva can produce strange, muddled reasoning and still arrive at the right answer. Even when models like Minerva reach the same answers as humans, the process they follow may be very different.

Ethan Dyer, a machine learning expert at Google, said, "I think there is this idea that people who do mathematics have some rigorous reasoning system, that there is a clear difference between knowing something and not knowing something." But people, too, give inconsistent answers, make mistakes, and fail to apply core concepts. At this frontier of machine learning, the boundaries are blurry.
