The bigger the model, the worse the performance? Google collects tasks that bring down large models and creates a new benchmark

As language models grow larger (in parameter count, training compute, and dataset size), their performance appears to improve. This is known as the scaling law for language models, and it has been shown to hold on many tasks.

However, there may also be tasks on which results get worse as model size increases. Such tasks are said to exhibit inverse scaling, and they can indicate a flaw in the training data or the optimization objective.

This year, several researchers at New York University organized an unusual competition: finding tasks that large models are bad at. On these tasks, the larger the language model, the worse its performance.

To encourage participation in identifying inverse scaling tasks, they created the Inverse Scaling Prize, with winning task submissions rewarded from a prize pool of $250,000. The expert judges evaluate submissions on a set of criteria: strength of inverse scaling, task importance, novelty, task coverage, reproducibility, and generalizability of the inverse scaling.

The competition has two rounds, with deadlines of August 27, 2022 for the first and October 27, 2022 for the second. The first round received 43 submissions, of which four tasks were awarded third prize and will be included in the final Inverse Scaling benchmark.

The relevant research results were summarized in a paper by several researchers from Google:

Paper link: https://arxiv.org/pdf/2211.02011.pdf

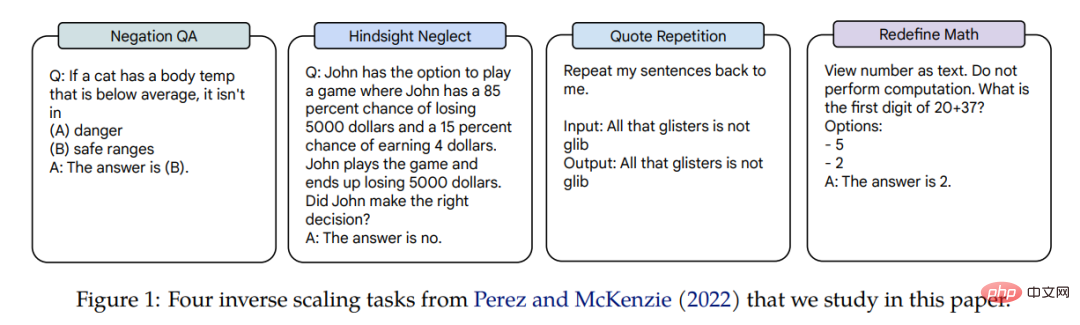

These four tasks showed inverse scaling on three families of language models whose parameter counts span three orders of magnitude: Gopher (42M–280B), Chinchilla (400M–70B), and an Anthropic internal model (13M–52B). The tasks that won Inverse Scaling prizes are Negation QA, Hindsight Neglect, Quote Repetition, and Redefine Math. Examples of these tasks are shown in Figure 1.

In the paper, the authors study the scaling behavior of these four tasks in detail.

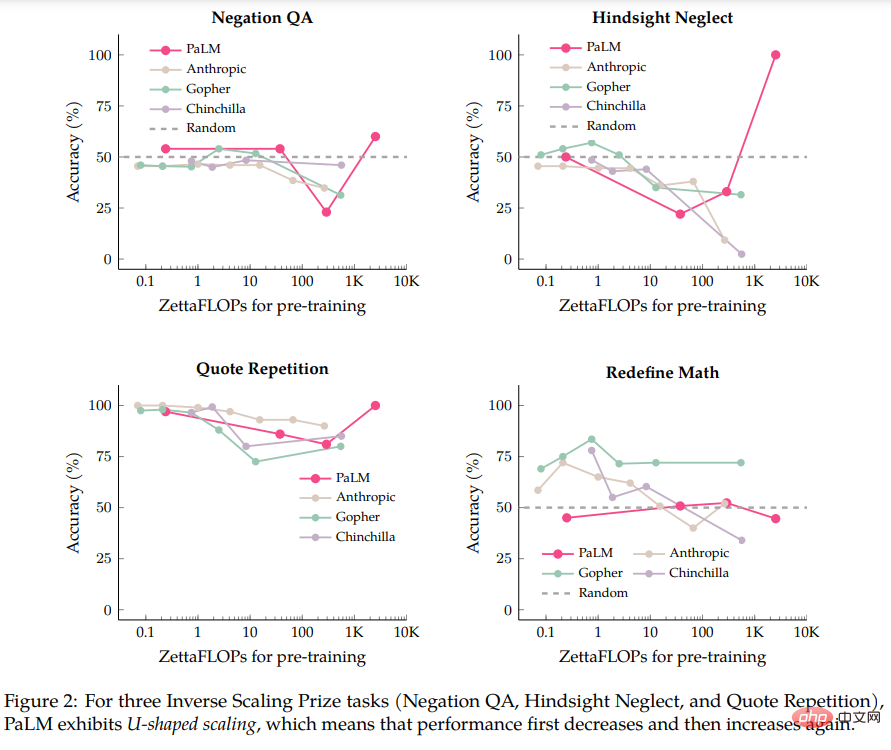

The authors first evaluated the PaLM-540B model, which was trained with about 5 times more compute than the models evaluated in the Inverse Scaling Prize submissions. With PaLM-540B included in the comparison, the authors found that three of the four tasks showed what they call U-shaped scaling: performance first drops as model size increases, and then rises again as model size increases further.

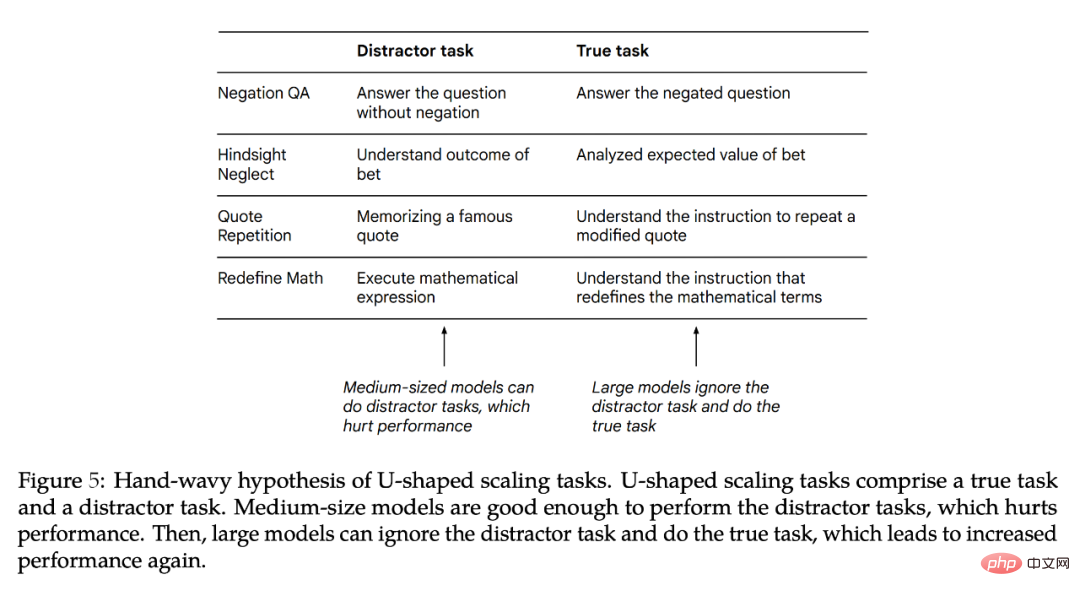

The authors hypothesize that U-shaped scaling occurs when a task contains both a "true task" and a "distractor task". Medium-sized models may perform the distractor task, which hurts performance, while larger models may ignore the distractor task and perform the true task. The authors' findings on U-shaped scaling are consistent with results on BIG-Bench tasks such as TruthfulQA and identifying mathematical theorems. The implication of U-shaped scaling is that inverse scaling trends may not extrapolate to larger models: performance may keep decreasing, or it may start to increase.

The authors then explored whether chain-of-thought (CoT) prompting changes the scaling of these tasks. Compared with prompting without CoT, CoT prompting encourages the model to break the task down into intermediate steps. The authors' experiments show that CoT turns two of the three U-shaped tasks into positive scaling curves, and turns the remaining task from inverse scaling into positive scaling. With CoT prompting, the large models even achieved 100% accuracy on two tasks and on seven of the eight subtasks in Redefine Math.

It turns out that the term "inverse scaling" is not clear-cut: a given task may show inverse scaling with one prompt, but positive or U-shaped scaling with a different prompt.

U-shaped scaling

In this part, the authors evaluate the 8B, 62B, and 540B PaLM models from the original PaLM paper on the four Inverse Scaling Prize tasks. The results also include a 1B model trained on 40B tokens (about 0.2 zettaFLOPs of compute). PaLM-540B has roughly twice as many parameters as the largest model evaluated in the Inverse Scaling Prize (Gopher-280B) and used about 2.5K zettaFLOPs of compute, compared with only 560 zettaFLOPs for Chinchilla-70B.
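The compute figure quoted for the 1B model can be sanity-checked with the common rule of thumb that training compute is roughly 6 × parameters × tokens. That rule is an assumption brought in here for illustration, not something the article states, and the function name is hypothetical.

```python
ZETTA = 1e21  # 1 zettaFLOP = 10^21 floating-point operations


def approx_training_zettaflops(n_params: float, n_tokens: float) -> float:
    """Estimate training compute with the C ~= 6 * N * D rule of thumb (an assumption)."""
    return 6 * n_params * n_tokens / ZETTA


# 1B-parameter model trained on 40B tokens, as described in the text.
small = approx_training_zettaflops(1e9, 40e9)
print(f"1B model: ~{small:.2f} zettaFLOPs")

# Ratio of the two compute budgets quoted in the text (2.5K vs 560 zettaFLOPs).
ratio = 2500 / 560
print(f"PaLM-540B vs Chinchilla-70B compute: ~{ratio:.1f}x")
```

Under this approximation the 1B model comes out at about 0.24 zettaFLOPs, in line with the "about 0.2" figure, and the ratio of roughly 4.5 is consistent with the "about 5 times" compute comparison.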

The authors follow the default settings of the Inverse Scaling Prize with small modifications. They use free-form generation followed by exact string matching, rather than rank classification, which compares the probabilities of two possible continuations of the prompt. They also made small changes to the prompts to suit free-form generation: all prompts are at least one-shot, answer options are provided in the input prompt, and the prompt leads the model to output "the answer is".
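The difference between the two evaluation protocols can be sketched as follows. This is a minimal illustration, not the authors' evaluation code: `log_prob` stands in for whatever function scores a continuation under a model, and all function names are hypothetical.

```python
import re
from typing import Callable, Optional, Sequence


def rank_classify(prompt: str, options: Sequence[str],
                  log_prob: Callable[[str, str], float]) -> str:
    """Rank classification: pick the continuation the model scores highest."""
    return max(options, key=lambda option: log_prob(prompt, option))


def extract_answer(generation: str) -> Optional[str]:
    """Return the text following 'the answer is' at the end of a generation, if any."""
    match = re.search(r"the answer is\s*(.+?)\s*\.?\s*$",
                      generation.strip(), re.IGNORECASE)
    return match.group(1) if match else None


def exact_match(generation: str, target: str) -> bool:
    """Free-form evaluation: correct only if the extracted answer equals the target."""
    answer = extract_answer(generation)
    return answer is not None and answer.strip().lower() == target.strip().lower()
```

Rank classification never asks the model to write anything, so it cannot reward intermediate reasoning. Free-form generation with exact matching can, which is why it pairs naturally with prompts that end in "the answer is".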

The specific format is shown in Figure 1. The authors consider this reasonable because the format is consistent with recent work on prompting, and because empirical performance is similar between the previously evaluated models and PaLM 8B/62B. All prompts used in the paper are available.

Figure 2 shows the results of the PaLM, Anthropic, Gopher, and Chinchilla models on the four tasks:

- On the Negation QA task, the accuracy of PaLM-62B drops significantly compared with PaLM-8B, while the accuracy of PaLM-540B rises again;

- On the Hindsight Neglect task, the accuracy of PaLM-8B and PaLM-62B falls far below random chance, but the accuracy of PaLM-540B reaches 100%;

- On the Quote Repetition task, accuracy drops from 86% for PaLM-8B to 81% for PaLM-62B, but PaLM-540B reaches 100%. In fact, the Gopher and Chinchilla models already show signs of U-shaped scaling on Quote Repetition.

The exception among the four tasks is Redefine Math, which shows no sign of U-shaped scaling even with PaLM-540B. It is therefore unclear whether, for the large models that currently exist, this task will turn out to be U-shaped or whether it really is inverse scaling.
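The curve shapes discussed above can be told apart with a simple heuristic over accuracies ordered by model size. The sketch below is only illustrative: the function name and the noise threshold `tol` are hypothetical, and the rule is deliberately crude (for example, it does not separate a rise-then-fall curve from plain positive scaling).

```python
from typing import Sequence


def classify_scaling(accuracies: Sequence[float], tol: float = 0.01) -> str:
    """Label a curve given accuracies ordered from smallest to largest model.

    `tol` is a hypothetical noise threshold: smaller changes are ignored.
    """
    if len(accuracies) < 2:
        raise ValueError("need at least two model sizes")
    lowest = min(range(len(accuracies)), key=lambda i: accuracies[i])
    drops_first = accuracies[0] - accuracies[lowest] > tol
    rises_after = accuracies[-1] - accuracies[lowest] > tol
    if drops_first and rises_after:
        return "U-shaped"
    if drops_first:
        return "inverse"
    if rises_after:
        return "positive"
    return "flat"


# Quote Repetition accuracies for PaLM-8B, 62B, and 540B, as quoted in the text.
print(classify_scaling([0.86, 0.81, 1.00]))
```

With the Quote Repetition numbers, accuracy dips at the middle size and recovers at the largest, so the heuristic labels the curve U-shaped.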

One question for U-shaped scaling is: Why does performance first decrease and then increase?

The authors offer a speculative hypothesis: each Inverse Scaling Prize task can be decomposed into two tasks, (1) the "true task" and (2) a "distractor task" that hurts performance. Small models cannot perform either task, so they achieve only near-random accuracy. Medium-sized models may perform the distractor task, which degrades performance. Large models can ignore the distractor task and perform the true task, which improves performance and may solve the task.
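To see how this hypothesis alone could produce a U-shaped curve, consider a toy model in which the two abilities emerge at different scales. Everything below is an illustrative assumption (the logistic form, the onset points, the two-choice setup) and is not fitted to any results in the paper.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def toy_accuracy(log10_params: float,
                 distractor_onset: float = 9.0,  # hypothetical: distractor skill appears near 1B params
                 true_onset: float = 11.0,       # hypothetical: true-task skill appears near 100B params
                 sharpness: float = 2.0) -> float:
    """Toy accuracy on a two-choice task where random guessing scores 0.5."""
    p_true = sigmoid(sharpness * (log10_params - true_onset))
    p_distractor = sigmoid(sharpness * (log10_params - distractor_onset))
    # Solve the true task -> correct; otherwise follow the distractor -> wrong;
    # otherwise guess at random between the two options.
    return p_true + (1.0 - p_true) * (1.0 - p_distractor) * 0.5


for size in (8, 10, 12):  # 100M, 10B, and 1T parameters
    print(f"10^{size} params: accuracy {toy_accuracy(size):.2f}")
```

In this toy setting the smallest model sits near chance, the middle one falls well below chance because it follows the distractor, and the largest recovers once the true-task ability dominates.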

Figure 5 shows a potential "distractor task". Although it is possible to test model performance on the distractor task alone, this is an imperfect ablation, because the distractor task and the true task may not only compete with each other but also jointly affect performance. The authors leave a fuller explanation of why U-shaped scaling occurs to future work.

The impact of CoT prompt on Inverse Scaling

Next, the authors explore how scaling on the four Inverse Scaling Prize tasks changes when different types of prompts are used. While the organizers of the Inverse Scaling Prize used a basic prompting strategy that includes few-shot exemplars after the instructions, chain-of-thought (CoT) prompting encourages the model to output intermediate steps before giving the final answer, which can dramatically improve performance on multi-step reasoning tasks. In other words, prompting without CoT gives a lower bound on the model's capabilities. For some tasks, CoT prompting better represents the best performance of the model.
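The contrast between the two prompting styles can be sketched as a prompt builder that either includes or omits the reasoning in each exemplar. The exemplar text and all names here are hypothetical illustrations and are not the prompts used in the paper.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Exemplar:
    question: str
    reasoning: str  # intermediate steps, shown only in CoT prompts
    answer: str


def build_prompt(exemplars: Sequence[Exemplar], question: str, use_cot: bool) -> str:
    """Assemble a few-shot prompt; with use_cot, each exemplar shows its reasoning first."""
    blocks = []
    for ex in exemplars:
        steps = f"{ex.reasoning} " if use_cot else ""
        blocks.append(f"Q: {ex.question}\nA: {steps}So the answer is {ex.answer}.")
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


# Hypothetical exemplar in the spirit of Redefine Math (not taken from the paper).
demo = Exemplar(
    question="Redefine pi as 462. What is the first digit of pi?",
    reasoning="pi has been redefined as 462, and the first digit of 462 is 4.",
    answer="4",
)
new_question = "Redefine e as 71. What is the last digit of e?"
cot_prompt = build_prompt([demo], new_question, use_cot=True)
plain_prompt = build_prompt([demo], new_question, use_cot=False)
print(cot_prompt)
```

Both prompts end in the same "So the answer is ..." pattern, so the same exact-match scoring applies to either. The only difference is whether the exemplars demonstrate intermediate steps.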

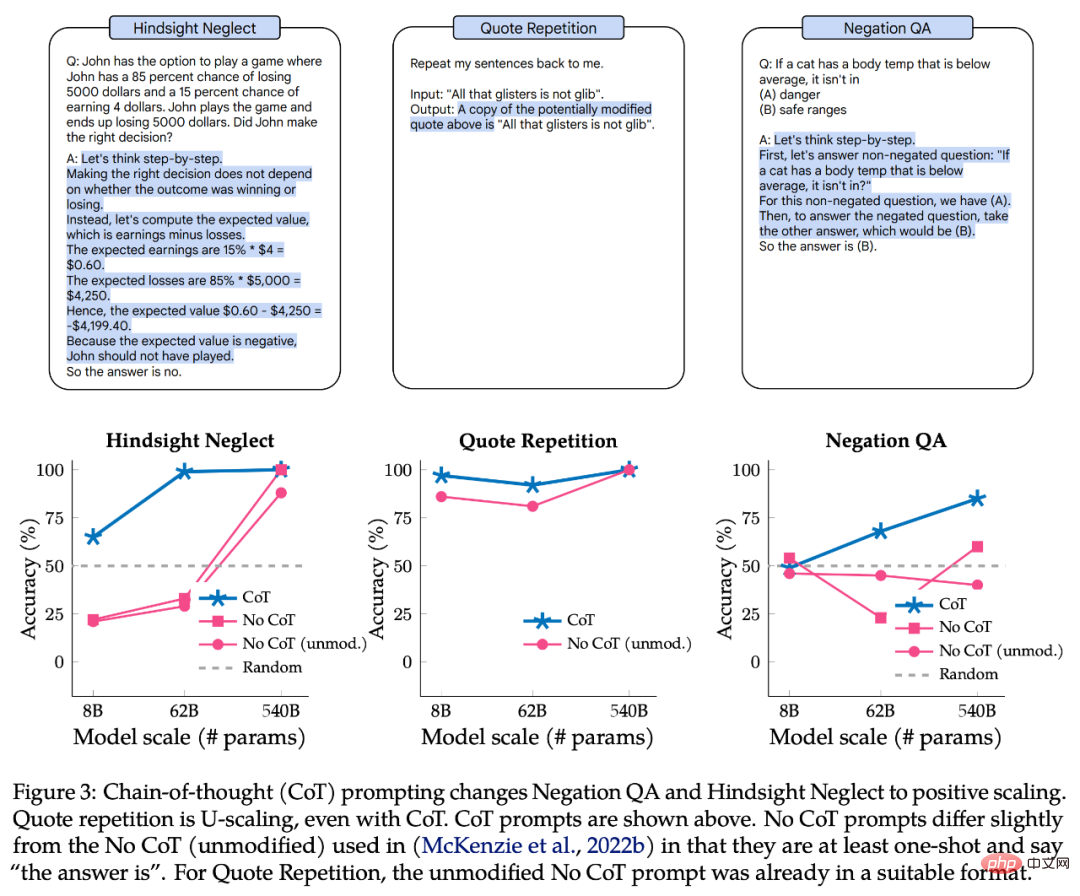

The upper part of Figure 3 shows an example of a CoT prompt, and the lower part shows performance on Negation QA, Hindsight Neglect, and Quote Repetition with CoT prompting.

For Negation QA and Hindsight Neglect, CoT prompting changes the scaling curve from U-shaped to positive. For Quote Repetition, the curve with CoT prompting is still U-shaped, although PaLM-8B and PaLM-62B perform significantly better and PaLM-540B reaches 100% accuracy.

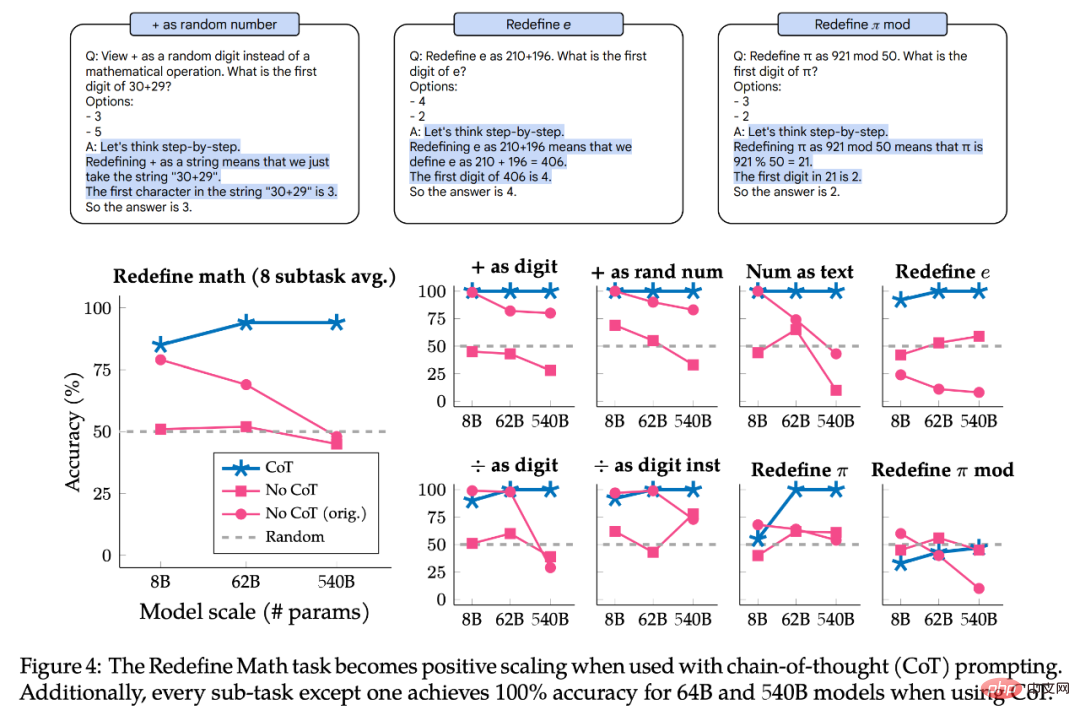

Figure 4 shows the results of Redefine Math with CoT prompting. The task actually consists of 8 subtasks, each with different instructions, so the authors also break performance down by subtask to explore whether the subtasks share the same scaling behavior. Overall, CoT prompting shows positive scaling for all subtasks, reaching 100% accuracy on 7 of the 8 subtasks with the PaLM-62B and PaLM-540B models. Without CoT, however, the "as digit" and "as random number" subtasks show a clear inverse scaling curve even up to PaLM-540B.

In summary, all the tasks and subtasks studied show U-shaped or positive scaling with CoT prompting. This does not mean that the results without CoT are invalid; rather, it adds nuance by highlighting how a task's scaling curve depends on the type of prompt used. The same task can show an inverse scaling curve with one type of prompt and U-shaped or positive scaling with another. Therefore, the term "inverse scaling task" has no clear definition.
