Introduction to OpenAI and Microsoft Sentinel

Welcome to our series about OpenAI and Microsoft Sentinel! Large language models, or LLMs, such as OpenAI’s GPT3 family, are taking over the public imagination with innovative use cases such as text summarization, human-like conversations, code parsing and debugging, and many other examples. We've seen ChatGPT write screenplays and poems, compose music, write essays, and even translate computer code from one language to another.

What if we could harness this incredible potential to help incident responders in security operations centers? Well, of course we can, and it's easy! Microsoft Sentinel already includes a built-in connector for OpenAI GPT3 models that we can implement in our automation playbooks powered by Azure Logic Apps. These powerful workflows are easy to write and integrate into SOC operations. Today we'll take a look at the OpenAI connector and explore some of its configurable parameters using a simple use case: describing a MITRE ATT&CK tactic related to a Sentinel incident.

Before we get started, let’s cover some prerequisites:

- If you don't have a Microsoft Sentinel instance yet, you can create one using your free Azure account and follow the Get started with Sentinel quickstart.

- We will use pre-recorded data from the Microsoft Sentinel Training Lab to test our playbook.

- You will also need a personal OpenAI account with an API key for GPT3 connections.

- I also highly recommend checking out Antonio Formato's excellent blog on handling incidents with ChatGPT and Sentinel, where Antonio introduces a very useful all-purpose playbook that has become the reference for almost all OpenAI implementations in Sentinel to date.

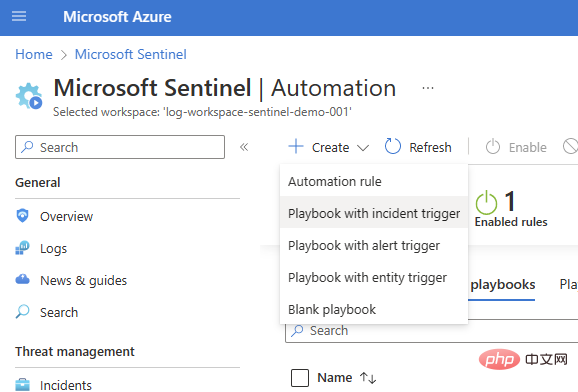

We will start with a basic incident trigger playbook (Sentinel > Automation > Create > Playbook with incident trigger).

Select the subscription and resource group, add a playbook name, and move to the Connections tab. You should see Microsoft Sentinel with one or two authentication options (I'm using Managed Identity in this example), but if you don't have any connections yet, you can also add a Sentinel connection in the Logic Apps Designer.

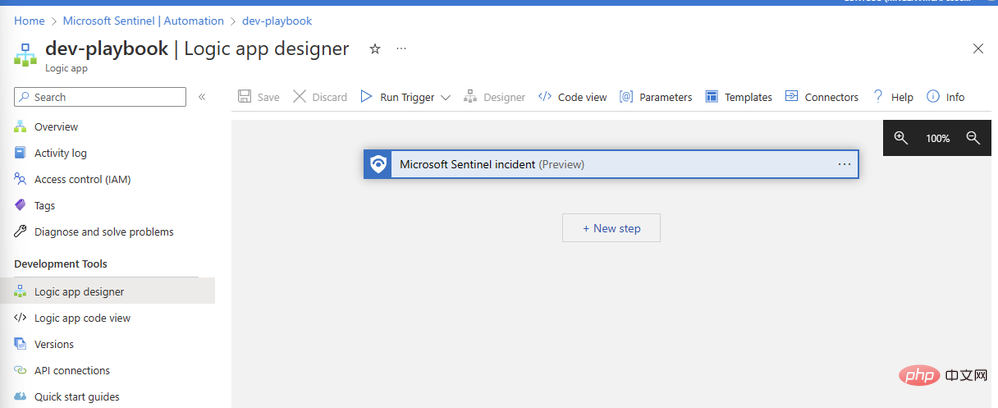

Review and create the playbook. After a few seconds, the resource will deploy successfully and take us to the Logic App Designer canvas:

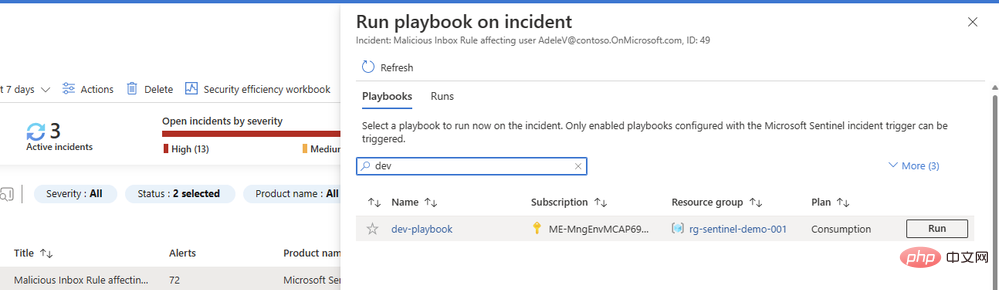

Let's add our OpenAI connector. Click New Step and type "OpenAI" in the search box. You'll see the connector in the top pane, and two actions below it: "Create Image" and "GPT3 Complete Your Prompt":

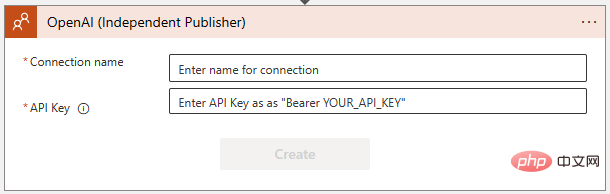

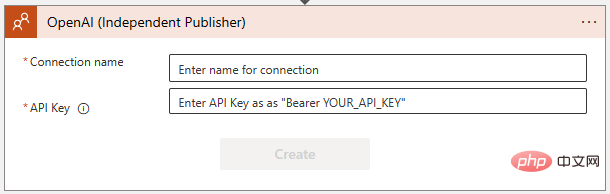

Select "GPT3 Complete your prompt". You will then be asked to create a connection to the OpenAI API in the following dialog box. If you haven't already, create a key at https://platform.openai.com/account/api-keys and make sure to keep it in a safe location!

Make sure you follow the instructions exactly when adding the OpenAI API key - it requires the word "Bearer", followed by a space, and then the key itself:

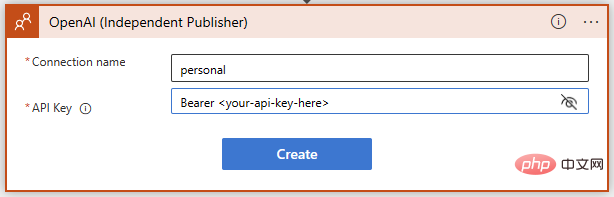

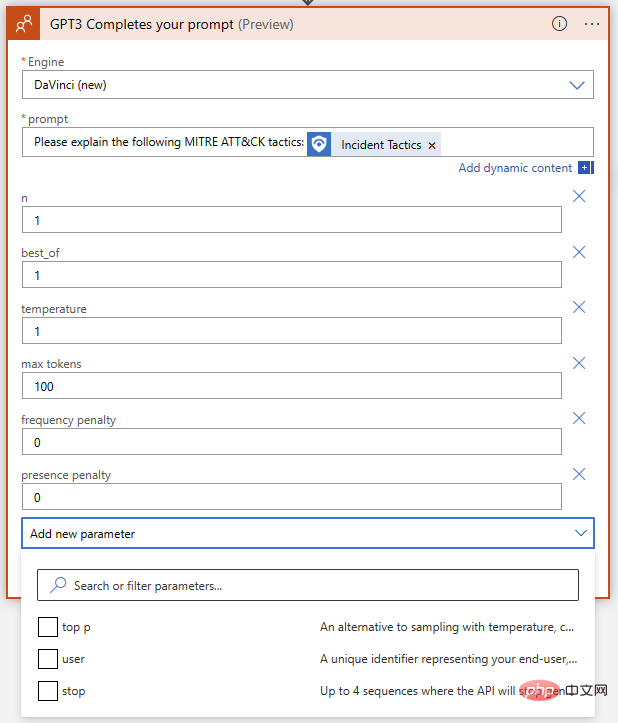
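The "Bearer", space, key format is easy to get wrong by hand. As a minimal sketch of that rule, the helper below builds the value in the required form. The function name and the placeholder key are hypothetical, and the assumption that the connector passes this value along as an HTTP `Authorization` header is mine, not something the connector dialog states.

```python
def build_auth_header(api_key: str) -> dict:
    """Return an Authorization header in the "Bearer <key>" form."""
    key = api_key.strip()
    if not key:
        raise ValueError("API key is empty")
    # Avoid doubling the prefix if the caller already included it.
    if key.lower().startswith("bearer "):
        key = key[7:].strip()
    return {"Authorization": f"Bearer {key}"}


# "sk-EXAMPLE" is a placeholder, not a real key.
print(build_auth_header("sk-EXAMPLE"))
```

Whatever form it takes, the key should come from a secret store rather than being pasted into source files.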

Success! We now have GPT3 text completion ready for our prompt. We want the AI model to explain the MITRE ATT&CK tactics and techniques related to a Sentinel incident, so let's write a simple prompt using dynamic content to insert the incident's tactics from Sentinel.

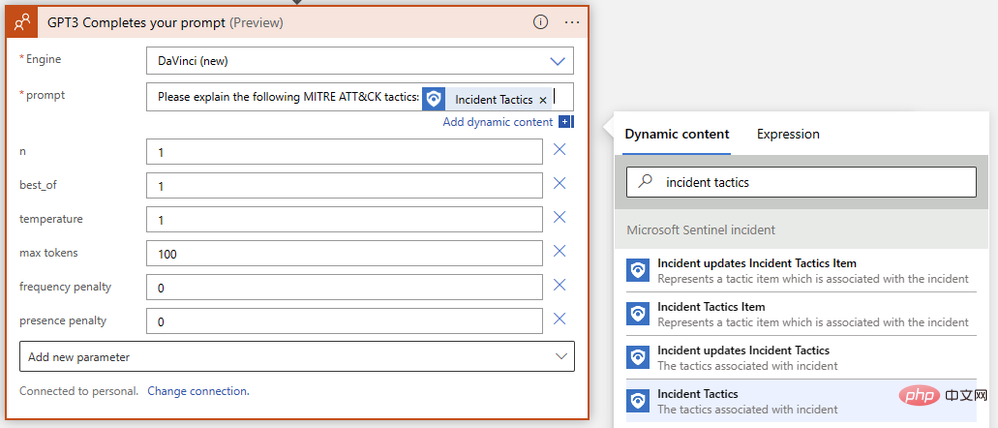
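Outside the designer, the same idea (a fixed prompt with the tactics substituted in) can be sketched in a few lines of Python. The prompt wording and the function name here are hypothetical; the playbook's actual prompt is the one shown in the screenshot.

```python
def build_prompt(tactics: list[str]) -> str:
    """Fill a prompt template with the tactics attached to an incident."""
    if not tactics:
        raise ValueError("no tactics to describe")
    joined = ", ".join(tactics)
    # Hypothetical wording; the playbook's real prompt may differ.
    return f"Describe the following MITRE ATT&CK tactics: {joined}."


print(build_prompt(["DefenseEvasion", "Persistence"]))
```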

We're almost done! Save your logic app and go to Microsoft Sentinel Incidents to give it a test run. I have test data from the Microsoft Sentinel Training Lab in my instance, so I will run this playbook against an incident triggered by the malicious inbox rule alert.

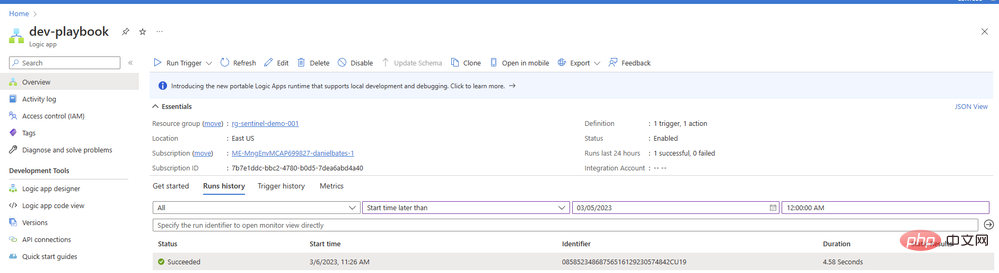

You may be wondering why we didn’t configure a second action in our playbook to add a comment or task with a result. We'll get there - but first we want to make sure our prompts return good content from the AI model. Return to the Playbook and open the Overview in a new tab. You should see an item in your run history, hopefully with a green checkmark:

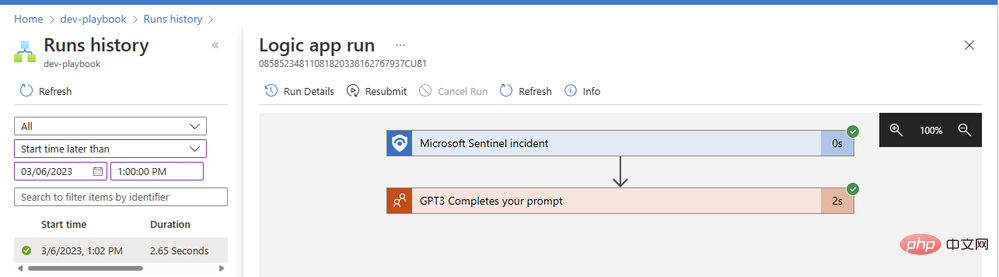

Click the item to view details about the logic app run. We can expand any operation block to view detailed input and output parameters:

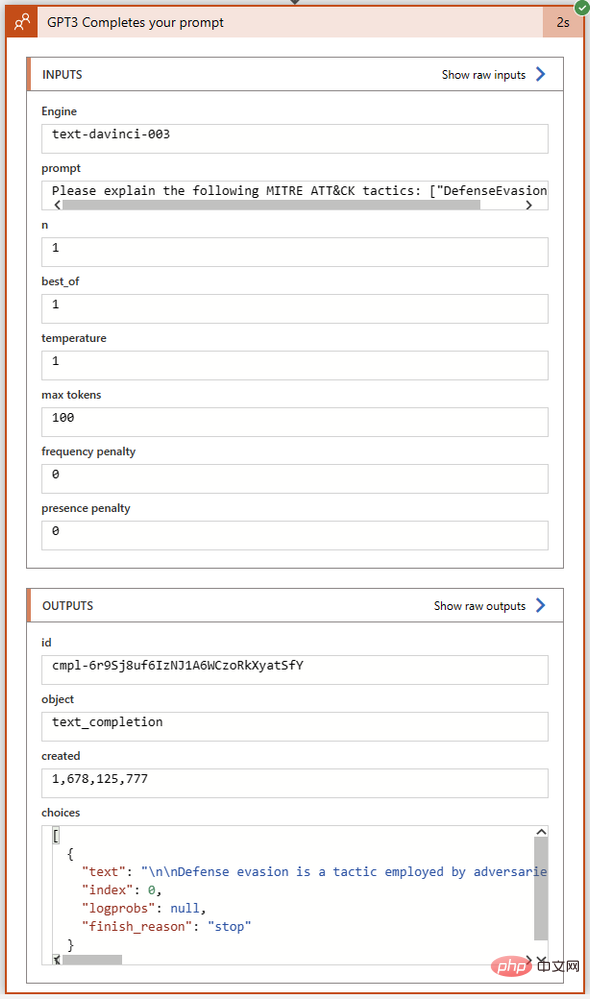

Our GPT3 operation took just two seconds to complete successfully. Let's click on the action block to expand it and see the full details of its inputs and outputs:

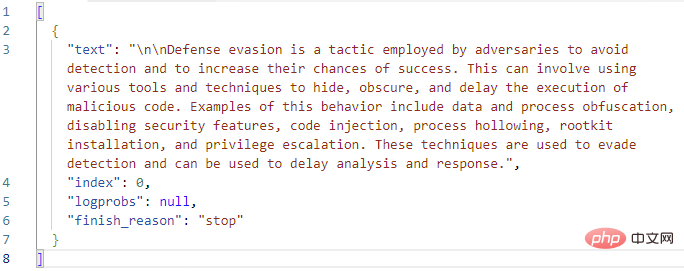

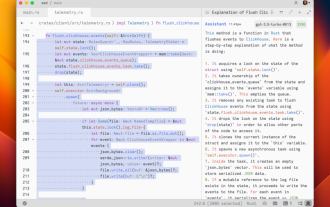

Let's take a closer look at the Choices field in the Outputs section. This is where GPT3 returns the text of its completion along with the completion status and any error codes. I've copied the full text of the Choices output into Visual Studio Code for a closer look.
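To make the structure of that output concrete, here is a minimal sketch that pulls the completion text and its status out of a choices list. The field names (`choices`, `text`, `finish_reason`) follow the shape of OpenAI's text completion responses as I understand it; the sample data is made up, and the connector's actual output may carry additional fields.

```python
def extract_completions(output: dict) -> list[tuple[str, str]]:
    """Return (text, finish_reason) pairs for every choice in the output."""
    results = []
    for choice in output.get("choices", []):
        text = (choice.get("text") or "").strip()
        reason = choice.get("finish_reason") or "unknown"
        results.append((text, reason))
    return results


# Made-up sample in the assumed response shape.
sample_output = {
    "choices": [
        {
            "text": "\n\nDefense evasion consists of techniques",
            "index": 0,
            "finish_reason": "length",
        }
    ]
}

for text, reason in extract_completions(sample_output):
    print(reason, "->", text)
```

A `finish_reason` of `length` would indicate that the reply was cut off by the maximum token setting rather than ending naturally.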

Looks good so far! GPT3 correctly expands on the MITRE definition of "defense evasion." Before we add an action to the playbook that creates an incident comment with this answer text, let's take another look at the parameters of the GPT3 action itself. There are nine parameters in total in the OpenAI text completion action, not counting the engine selection and the prompt.

What do these mean, and how do we adjust them to get the best results? To help us understand the impact of each parameter on the results, let's go to the OpenAI API Playground. We can paste in the exact prompt from the input field of the logic app run, but before clicking Submit we want to make sure the parameters match. Here's a quick table comparing parameter names between the Azure Logic App OpenAI connector and the OpenAI Playground:

| Azure Logic App Connector | OpenAI Playground | Explanation |
| --- | --- | --- |
| Engine | Model | The model that will generate the completion. In the OpenAI connector we can select DaVinci (new), DaVinci (old), Curie, Babbage, or Ada, corresponding to 'text-davinci-003', 'text-davinci-002', 'text-curie-001', 'text-babbage-001', and 'text-ada-001' in the Playground, respectively. |
| n | N/A | How many completions to generate for each prompt. It is equivalent to re-entering the prompt multiple times in the Playground. |
| Best of | (Same) | Generate multiple completions and return the best one. Use with caution - this costs a lot of tokens! |
| Temperature | (Same) | Defines the randomness (or creativity) of the response. Set to 0 for highly deterministic, repeatable prompt completions where the model always returns its most confident choice. Set to 1 for maximally creative responses with more randomness, or somewhere in between if desired. |
| Maximum Tokens | Maximum Length | The maximum length of the ChatGPT response, given in tokens. A token is approximately equal to four characters. ChatGPT uses token pricing; at the time of writing, 1000 tokens cost $0.002. The cost of an API call includes the prompt's token length along with the reply, so to keep the cost per response as low as possible, subtract the prompt's token length from 1000 to cap the reply. |
| Frequency Penalty | (Same) | A number ranging from 0 to 2. The higher the value, the less likely the model is to repeat a line verbatim (it will try to find synonyms or restatements of the line). |
| Presence Penalty | (Same) | A number between 0 and 2. The higher the value, the less likely the model is to repeat topics that have already been mentioned in the response. |
| Top P | (Same) | Another way to set the "creativity" of the response if you are not using temperature. This parameter limits the possible answer tokens based on probability; when set to 1, all tokens are considered, but smaller values reduce the set of possible answers to the top X%. |
| User | N/A | A unique identifier. We don't need to set this parameter because our API key is already used as our identifier string. |
| Stop | Stop Sequence | Up to four sequences that will end the model's response. |

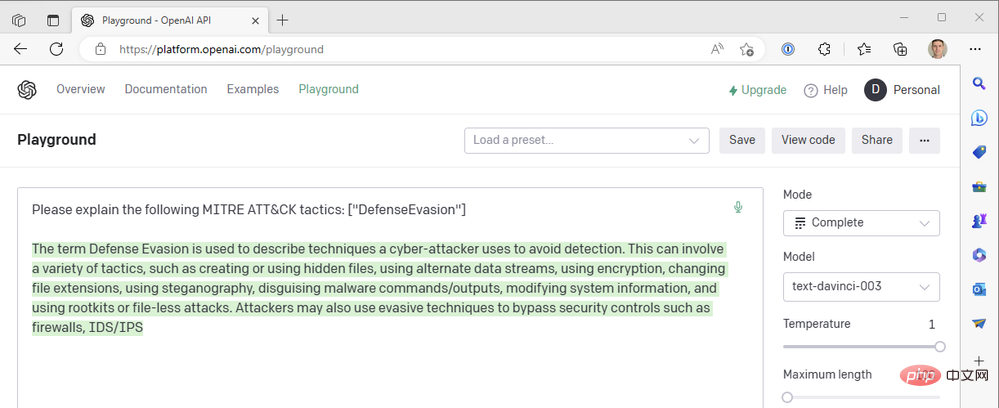
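To see how these settings fit together, the sketch below collects them into a single request body and applies the token arithmetic from the table: roughly four characters per token, with the prompt's tokens subtracted from a 1000-token budget to cap the reply. The field names are those of OpenAI's text completion API as I understand it. The helper names and the 1000-token default are illustrative assumptions, and the character-based estimate is only a rule of thumb, not a real tokenizer.

```python
import math

CHARS_PER_TOKEN = 4  # rough rule of thumb from the table above


def estimate_tokens(text: str) -> int:
    """Approximate the token count of a piece of text."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def reply_token_cap(prompt: str, budget: int = 1000) -> int:
    """Tokens left for the reply once the prompt is counted against the budget."""
    remaining = budget - estimate_tokens(prompt)
    if remaining <= 0:
        raise ValueError("prompt alone uses up the token budget")
    return remaining


def build_completion_request(prompt, *, model="text-davinci-003", temperature=1.0,
                             max_tokens=100, frequency_penalty=0.0,
                             presence_penalty=0.0, top_p=1.0, n=1, best_of=1,
                             stop=None):
    """Collect the connector-style settings into one request body."""
    if stop is not None and len(stop) > 4:
        raise ValueError("at most four stop sequences are allowed")
    body = {
        "model": model,                          # "Engine" in the connector
        "prompt": prompt,
        "temperature": temperature,
        "max_tokens": max_tokens,                # "Maximum Length" in the Playground
        "frequency_penalty": frequency_penalty,
        "presence_penalty": presence_penalty,
        "top_p": top_p,
        "n": n,
        "best_of": best_of,
    }
    if stop is not None:
        body["stop"] = list(stop)
    return body


prompt = "Describe the MITRE ATT&CK tactic: Defense Evasion."  # hypothetical prompt
request = build_completion_request(prompt, max_tokens=reply_token_cap(prompt))
print(request["max_tokens"])
```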

Let's use the following OpenAI API Playground settings to match our logic app action:

- Model: text-davinci-003

- Temperature: 1

- Maximum length: 100

This is the result we get from the GPT3 engine.

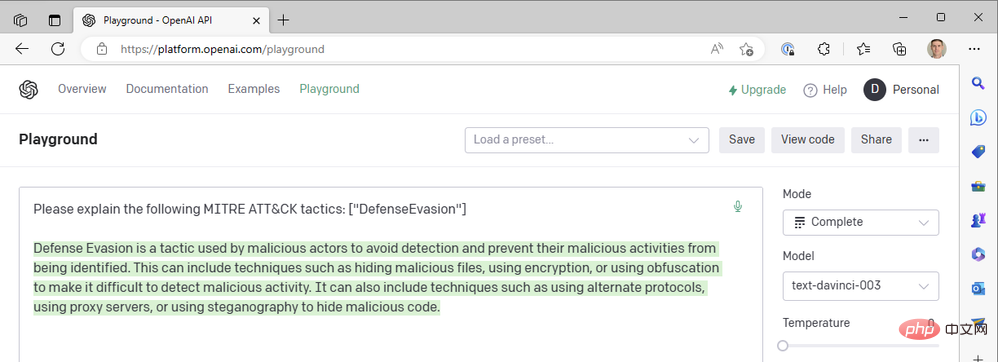

It looks like the response is truncated in the middle of a sentence, so we should increase the maximum length parameter. Otherwise, this response looks pretty good. We are using the highest possible temperature value - what happens if we lower the temperature to get a more deterministic response? Take a temperature of zero as an example:

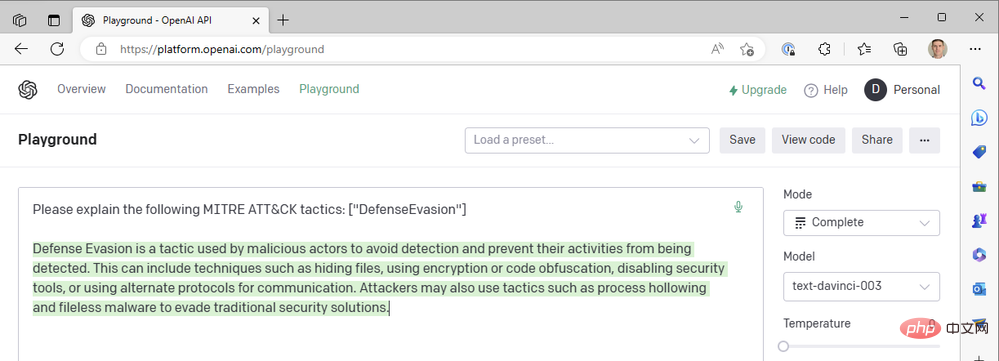
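For intuition about what the temperature setting does, here is a minimal sketch of temperature-scaled sampling over a toy set of candidate tokens. It illustrates the general technique only; the token scores are made up, the function name is hypothetical, and it is not a description of how OpenAI implements sampling internally.

```python
import math
import random


def sample_with_temperature(logits: dict[str, float], temperature: float,
                            rng: random.Random) -> str:
    """Pick one token; lower temperatures favor the highest-scoring token."""
    if temperature <= 0:
        # Treat zero as "always take the most confident choice".
        return max(logits, key=logits.get)
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())
    # Subtract the peak before exponentiating for numerical stability.
    weights = [math.exp(s - peak) for s in scaled.values()]
    return rng.choices(list(scaled), weights=weights, k=1)[0]


logits = {"techniques": 2.0, "methods": 1.5, "tricks": 0.5}  # made-up scores
rng = random.Random(0)

# At temperature 0 every draw is the top-scoring token.
print({sample_with_temperature(logits, 0, rng) for _ in range(5)})
# At temperature 1 the draws vary between candidates.
print({sample_with_temperature(logits, 1.0, rng) for _ in range(50)})
```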

At temperature=0, no matter how many times we regenerate this prompt, we get almost exactly the same result. This works well when we ask GPT3 to define technical terms; there shouldn't be much variation in what "defense evasion" means as a MITRE ATT&CK tactic. We can improve the readability of responses by adding a frequency penalty to reduce the model's tendency to reuse the same words ("technical like"). Let's increase the frequency penalty to its maximum of 2:

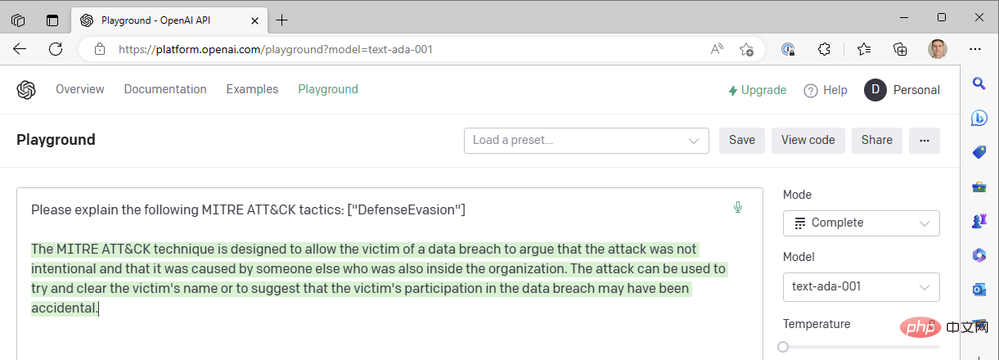
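The effect of the frequency and presence penalties can be sketched as a score adjustment: each candidate token's score is lowered in proportion to how often it has already appeared (frequency) and by a flat amount if it has appeared at all (presence). This follows the general formula given in OpenAI's documentation as I understand it; the function name and the scores are made up for illustration.

```python
from collections import Counter


def apply_penalties(logits: dict[str, float], generated: list[str],
                    frequency_penalty: float = 0.0,
                    presence_penalty: float = 0.0) -> dict[str, float]:
    """Lower the scores of tokens that already appear in the generated text."""
    counts = Counter(generated)
    adjusted = {}
    for token, score in logits.items():
        count = counts[token]
        flat = presence_penalty if count > 0 else 0.0
        adjusted[token] = score - count * frequency_penalty - flat
    return adjusted


logits = {"techniques": 2.0, "methods": 1.5}  # made-up scores
# "techniques" has been used twice, so a frequency penalty of 2 pushes it
# below "methods" and a synonym becomes the more likely next choice.
print(apply_penalties(logits, ["techniques", "techniques"], frequency_penalty=2.0))
```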

So far we've only used the latest DaVinci model to get things done quickly. What happens if we drop down to one of OpenAI's faster and cheaper models, such as Curie, Babbage, or Ada? Let's change the model to "text-ada-001" and compare the results:

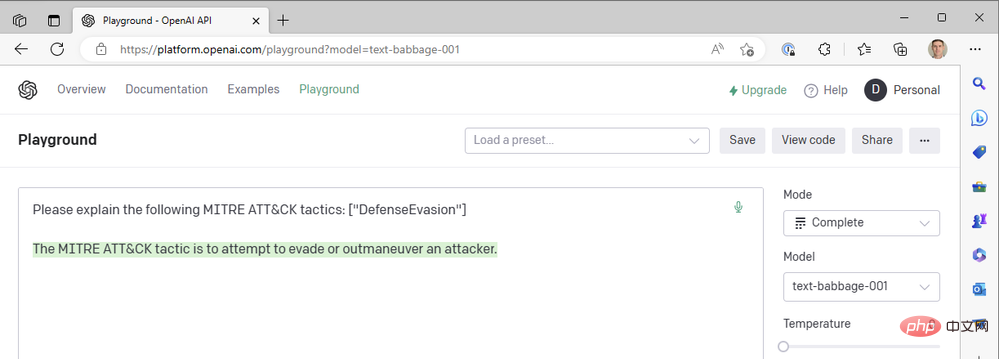

Well... not quite. Let's try Babbage:

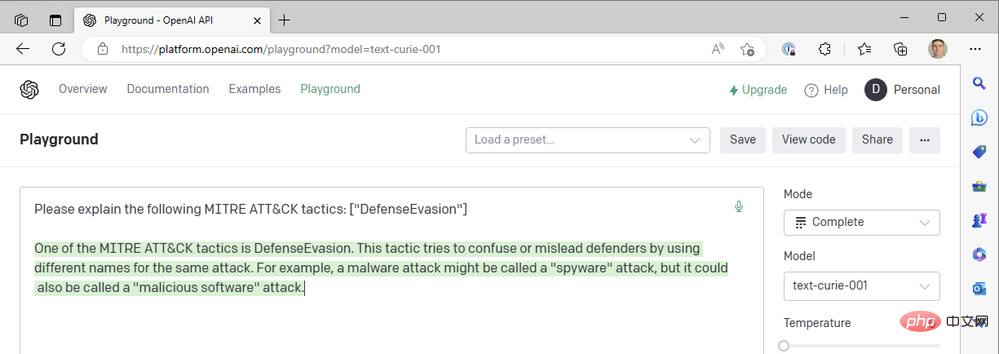

Babbage doesn't seem to return the results we're looking for either. Maybe Curie will do better?

Sadly, Curie also doesn't meet the standard set by DaVinci. These models are certainly fast, but our use case of adding context to security incidents doesn't rely on sub-second response times; the accuracy of the summary is more important. We'll stick with the winning combination of the DaVinci model, low temperature, and high frequency penalty.

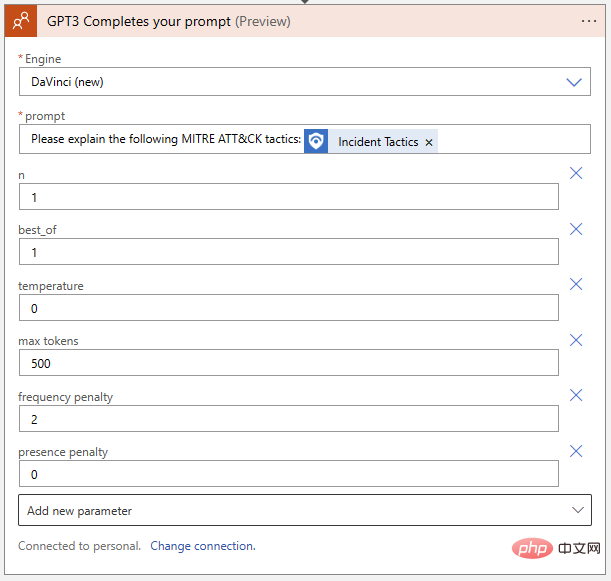

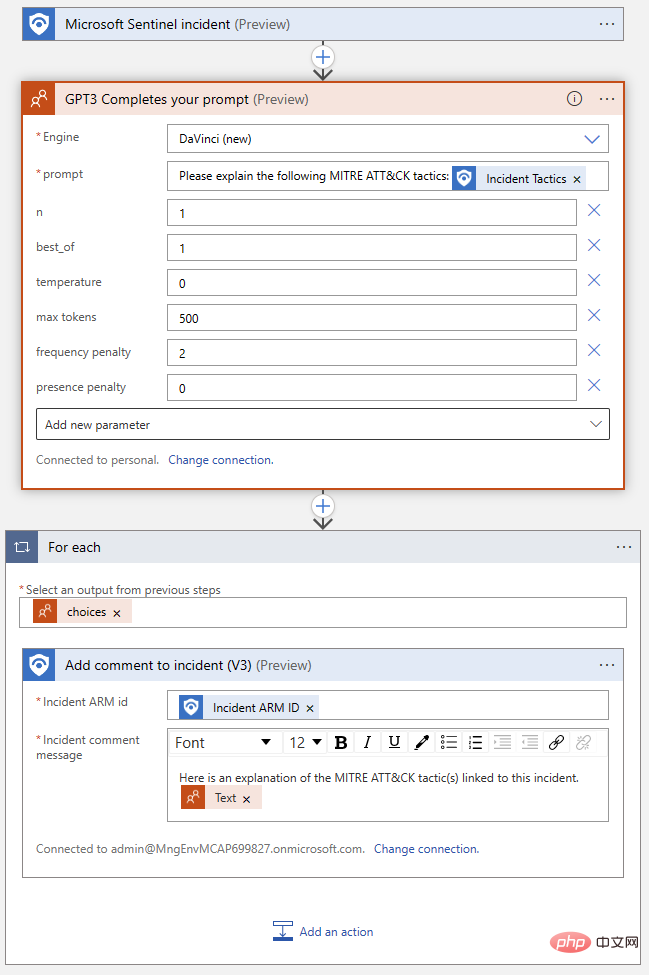

Back to our Logic App, let’s transfer the settings we discovered from the Playground to the OpenAI Action Block:

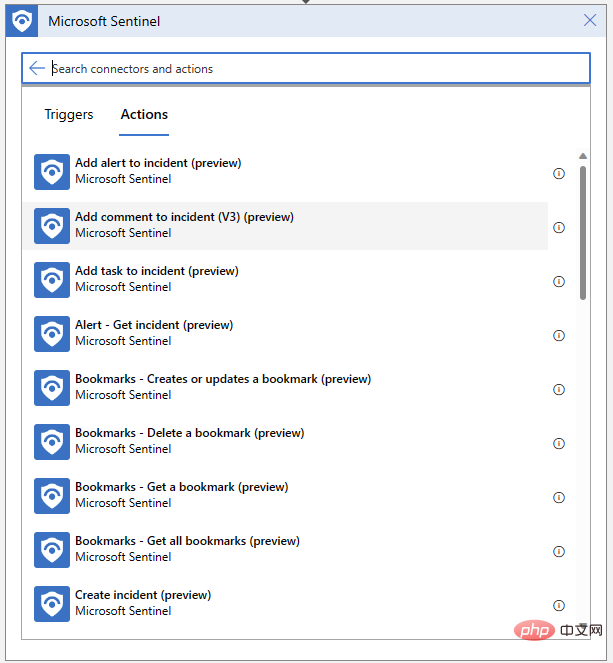

Our Logic App also needs to be able to write a comment on our incident. Click "New Step" and select "Add comment to incident" from the Microsoft Sentinel connector:

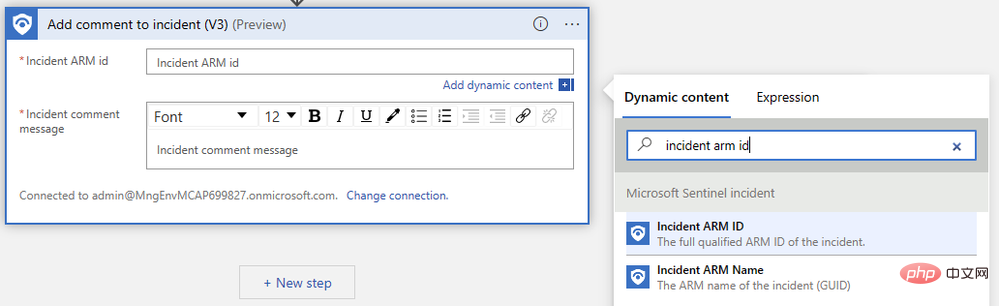

We just need to specify the incident ARM ID and compose our comment message. First, search for "Incident ARM ID" in the dynamic content pop-up menu:

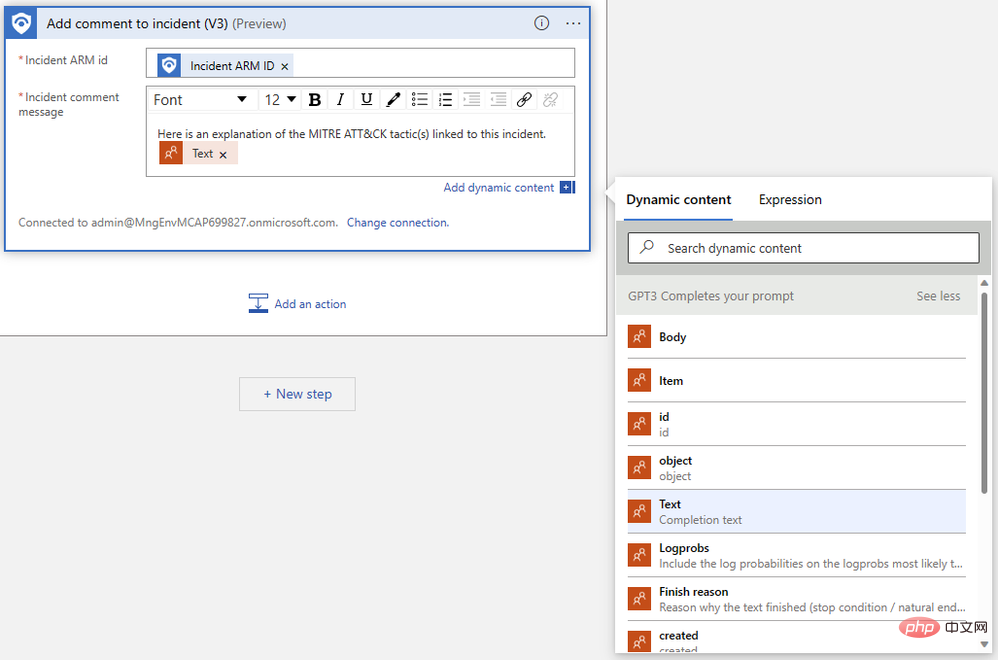

Next, find the "Text" we output in the previous step. You may need to click See More to see the output. The Logic App Designer automatically wraps our comment action in a "For each" logic block to handle cases where multiple completions are generated for the same prompt.

Our completed Logic App should look something like the following:

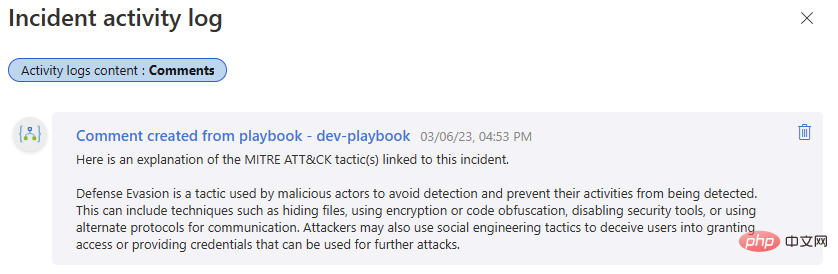
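The "For each" wrapper amounts to a simple loop: one comment per completion returned. The sketch below mirrors that logic in Python under stated assumptions. The dictionary keys, the function name, and the placeholder ARM ID are all hypothetical; this is not the Sentinel connector's actual schema.

```python
def comments_for_choices(incident_arm_id: str, choices: list[dict]) -> list[dict]:
    """Build one comment per non-empty completion, as the "For each" block does."""
    comments = []
    for choice in choices:
        text = (choice.get("text") or "").strip()
        if not text:
            continue  # skip empty completions rather than posting blank comments
        comments.append({"incidentArmId": incident_arm_id, "message": text})
    return comments


# Placeholder ARM ID and made-up completions for illustration.
arm_id = "/subscriptions/<sub-id>/.../incidents/<incident-id>"
choices = [{"text": "\n\nDefense evasion consists of ..."}, {"text": "   "}]
for comment in comments_for_choices(arm_id, choices):
    print(comment["message"])
```

With n left at 1, the loop runs once and the incident receives a single comment.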

Let's test it out again! Go back to that Microsoft Sentinel incident and run the playbook. We should get another successful completion in our Logic App run history and a new comment in our incident activity log.

If you've followed along so far, you can now integrate OpenAI GPT3 with Microsoft Sentinel to add value to your security investigations. Stay tuned for our next installment, where we'll discuss more ways to integrate OpenAI models with Sentinel, unlocking workflows that can help you get the most out of your security platform!
