One way to check whether a machine learning model generalizes to unseen environments is to split the data. There are many ways to do this, from 3-way (train, test, evaluation) splits to k-fold cross-validation. The basic principle is that by training a model on one subset of the data and evaluating it on data it has not seen, you can better reason about whether the model underfit or overfit during training.

For most tasks, a simple 3-way split is sufficient. In real production settings, however, more complex splitting methods are often needed to address generalization issues. These splits are more complex because they are derived from the actual data rather than from the generic data structures that ordinary splitting methods rely on. This article explains some unconventional ways of splitting data in machine learning development, and the reasoning behind them.

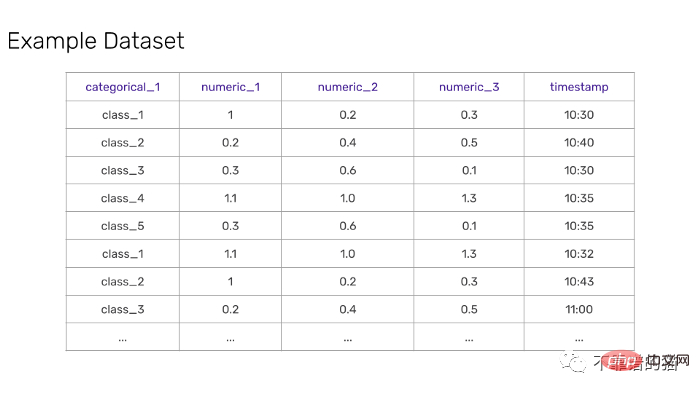

For simplicity, let’s use a tabular format to represent a simple multivariate time series dataset. The data consists of 3 numeric features, 1 categorical feature and 1 timestamp feature. Here’s the visualization:

This type of dataset is common across many machine learning use cases and industries. A specific example is time-streamed data transmitted from multiple sensors on a factory floor. The categorical variable is the ID of the machine, the numeric features are the quantities the sensors record (e.g. pressure, temperature, etc.), and the timestamp is the time the data was transferred and recorded in the database.
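To make the running example concrete, here is a minimal sketch that builds such a dataset in plain Python (standard library only, to keep it dependency-free). The column names `machine_id` and `vibration`, the value ranges, and the helper `make_toy_dataset` are assumptions made for illustration; only pressure and temperature are named in the text.

```python
import random
from datetime import datetime, timedelta

def make_toy_dataset(n_machines=5, n_steps=6, seed=0):
    """Build rows with 1 timestamp, 1 categorical and 3 numeric features."""
    rng = random.Random(seed)
    start = datetime(2023, 1, 1)
    rows = []
    for step in range(n_steps):
        # Rows from different machines are interleaved in time,
        # as they would be when streamed into a shared database.
        for m in range(1, n_machines + 1):
            rows.append({
                "timestamp": start + timedelta(minutes=step),
                "machine_id": f"class_{m}",                     # categorical feature
                "pressure": round(rng.uniform(1.0, 2.0), 3),    # numeric feature
                "temperature": round(rng.uniform(60.0, 80.0), 3),
                "vibration": round(rng.uniform(0.0, 1.0), 3),   # hypothetical third feature
            })
    return rows

rows = make_toy_dataset()
print(len(rows), "rows;", "first row:", rows[0])
```

Each row is a plain dictionary, so the same structure maps directly onto a CSV file or a dataframe if you prefer a tabular library.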

Suppose you received this dataset as a CSV file from the data engineering department and were tasked with writing a classification or regression model. In this case, the label can be any of the features or an extra column. The first thing to do is to split the data into meaningful subsets.

For convenience, you could simply split it into a training set and a test set. A problem arises immediately: a naive split of the rows will not work here, because the data is composed of multiple sensor data streams indexed by time. So how do you split the data so that the ordering is preserved and the resulting machine learning model generalizes well?

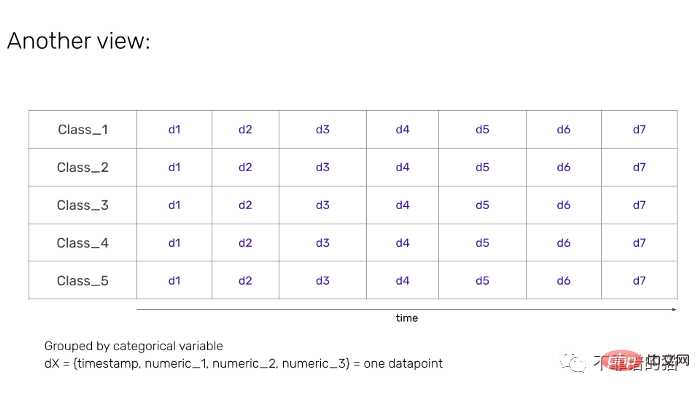

The most straightforward transformation we can apply is to represent the data separately for each category (in our running example, visualizing the data for each machine). This produces the following result:

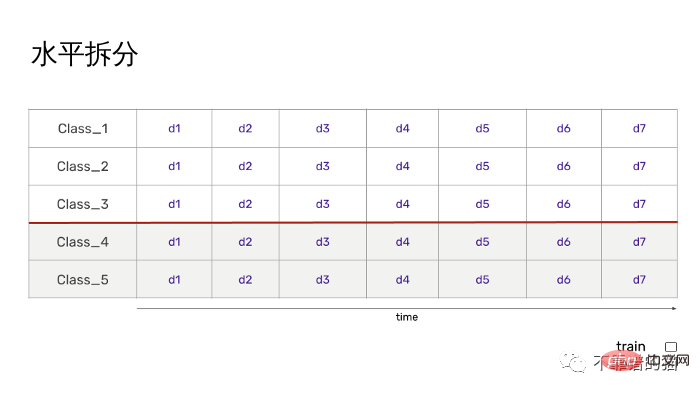
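The grouping step can be sketched as follows. This is a minimal illustration, assuming rows are dictionaries with a hypothetical `machine_id` column for the category and integer timestamps for brevity; the helper name `group_by_machine` is not from any library.

```python
from collections import defaultdict

def group_by_machine(rows, key="machine_id", time_key="timestamp"):
    """Return {category: rows sorted by time}, one time stream per category."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[key]].append(row)
    for stream in groups.values():
        stream.sort(key=lambda r: r[time_key])  # restore time order inside each stream
    return dict(groups)

# Interleaved, unordered rows as they might arrive from several machines.
rows = [
    {"timestamp": 2, "machine_id": "class_1", "pressure": 1.2},
    {"timestamp": 1, "machine_id": "class_2", "pressure": 1.5},
    {"timestamp": 1, "machine_id": "class_1", "pressure": 1.1},
    {"timestamp": 2, "machine_id": "class_2", "pressure": 1.6},
]
streams = group_by_machine(rows)
for name, stream in streams.items():
    print(name, [r["timestamp"] for r in stream])
```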

Horizontal Split

Grouping makes the splitting problem a little easier, and how you proceed depends largely on your assumptions. You may ask: how does a machine learning model trained on one set of groups generalize to other groups? That is, if it is trained on the class_1, class_2, and class_3 time streams, how will the model perform on the class_4 and class_5 time streams? The following is a visualization of this split:

The above split method is what I call horizontal splitting. In most machine learning libraries, this split is easily achieved by grouping by the categorical feature and partitioning along the categories. If a model trained on this split also performs well on the held-out groups, that is evidence it has learned information that generalizes to unseen groups.
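A horizontal split can be sketched in a few lines of plain Python. This is a minimal illustration under assumptions: the column name `machine_id`, the helper `horizontal_split`, and the choice of which groups to hold out are all hypothetical.

```python
def horizontal_split(rows, eval_groups, key="machine_id"):
    """Send whole groups to either train or eval; no group appears in both."""
    eval_groups = set(eval_groups)
    train = [r for r in rows if r[key] not in eval_groups]
    evaluation = [r for r in rows if r[key] in eval_groups]
    return train, evaluation

# Five machines with four time steps each.
rows = [
    {"timestamp": t, "machine_id": f"class_{m}", "pressure": 1.0 + 0.1 * m + 0.01 * t}
    for t in range(4)
    for m in range(1, 6)
]
train, evaluation = horizontal_split(rows, eval_groups={"class_4", "class_5"})
print(sorted({r["machine_id"] for r in train}))
print(sorted({r["machine_id"] for r in evaluation}))
```

Holding out entire groups, rather than random rows, is what makes the evaluation measure generalization to machines the model has never seen.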

It is worth noting that this split does not use time as the basis for splitting. However, you would presumably still keep each time stream in time order to preserve that relationship in the data. This brings us to the next split.

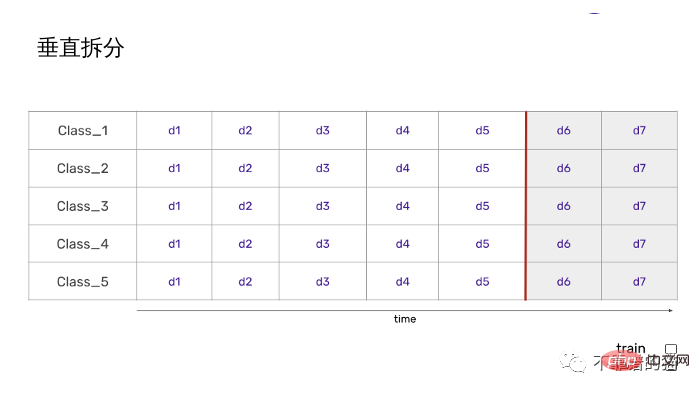

But what if you want to split along time itself? For most time series modeling, the common way to split the data is into past and future. That is, the training set holds the historical data and the evaluation set holds the later data. The question in this case is: how does a machine learning model trained on each group's historical data generalize to each group's future data? This question can be answered by what I call vertical splitting:

Successful training of this split will show that the model is able to extract patterns in the time stream it has seen and make accurate predictions about future behavior. However, this by itself does not indicate that the model generalizes well to other temporal streams from different groups.

Of course, the multiple time streams must now be sorted individually, so we still need to group. But this time, instead of splitting across groups, we take the past samples of each group and put them into train, and put the future samples of each group into eval. In this idealized example, all time streams have the same length, i.e. each time stream has exactly the same number of data points. In the real world this may not be the case, so you need a way to index each group separately when splitting.
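One way to handle streams of unequal length is to compute the cut point per group rather than from a single global timestamp. The sketch below assumes a hypothetical `machine_id` column, integer timestamps, and a fixed 75% training fraction; the helper name `vertical_split` is illustrative.

```python
from collections import defaultdict

def vertical_split(rows, train_fraction=0.75, key="machine_id", time_key="timestamp"):
    """Within each group, the earliest rows go to train and the latest to eval."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[key]].append(row)

    train, evaluation = [], []
    for stream in groups.values():
        stream.sort(key=lambda r: r[time_key])
        cut = int(len(stream) * train_fraction)  # per-group index, so lengths may differ
        train.extend(stream[:cut])
        evaluation.extend(stream[cut:])
    return train, evaluation

# Two streams of unequal length.
lengths = {"class_1": 8, "class_2": 4}
rows = [
    {"timestamp": t, "machine_id": g, "pressure": 1.0 + 0.1 * t}
    for g, n in lengths.items()
    for t in range(n)
]
train, evaluation = vertical_split(rows)
print(len(train), "train rows,", len(evaluation), "eval rows")
```

A fraction-based cut keeps the train/eval proportion the same in every group. If all groups must share the same calendar cutoff instead, you would compare each row's timestamp against a fixed date.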

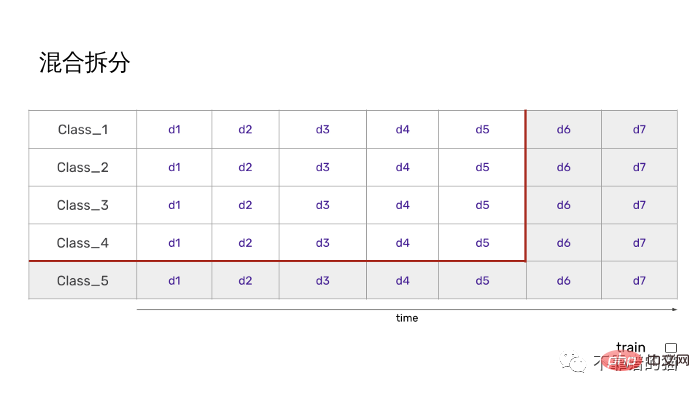

You may be wondering: can you build a model that generalizes well under the constraints of both horizontal and vertical splitting? In this case, the question would be: how does a machine learning model trained on the historical data of some groups generalize to the future data of those groups and to all data of the other groups? A visualization of this hybrid split would look like this:

If training on this split is successful, the model is likely to be more robust in the real world than one validated on only one of the previous splits. It demonstrates not only that the model has learned the patterns of the groups it has seen, but also that it has acquired information that generalizes across groups. This could be useful if we were to add more similar machines to the factory in the future.
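The hybrid split combines the two previous ideas: some groups are held out entirely, and the remaining groups are cut in time. The following is a minimal sketch; the column name `machine_id`, the helper `hybrid_split`, and the 75% fraction are assumptions for illustration.

```python
from collections import defaultdict

def hybrid_split(rows, eval_groups, train_fraction=0.75,
                 key="machine_id", time_key="timestamp"):
    """Train on the past of seen groups; eval on their future plus unseen groups."""
    eval_groups = set(eval_groups)
    groups = defaultdict(list)
    for row in rows:
        groups[row[key]].append(row)

    train, evaluation = [], []
    for name, stream in groups.items():
        stream.sort(key=lambda r: r[time_key])
        if name in eval_groups:
            evaluation.extend(stream)            # horizontal part: whole group held out
        else:
            cut = int(len(stream) * train_fraction)
            train.extend(stream[:cut])           # vertical part: past goes to train
            evaluation.extend(stream[cut:])      # and future goes to eval
    return train, evaluation

# Three machines with four time steps each; class_3 is never seen in training.
rows = [
    {"timestamp": t, "machine_id": f"class_{m}", "pressure": 1.0 + 0.1 * t}
    for m in range(1, 4)
    for t in range(4)
]
train, evaluation = hybrid_split(rows, eval_groups={"class_3"})
print(len(train), "train rows,", len(evaluation), "eval rows")
```

In practice it can be helpful to report evaluation metrics separately for the future rows of seen groups and for the unseen groups, since they answer different questions.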

The concepts of horizontal and vertical splitting can be generalized to many dimensions. For example, you may want to group based on two categorical features instead of one to further isolate subgroups in your data and sort them by subgroups. There may also be complex logic in the middle to filter groups with small sample sizes, as well as other business-level logic related to the domain.
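As an illustration of this generalization, the sketch below groups on two categorical features and drops subgroups that are too small to split. The second categorical column `sensor_type`, the `min_size` threshold, and the helper `subgroup_streams` are hypothetical and stand in for whatever domain logic applies.

```python
from collections import defaultdict

def subgroup_streams(rows, keys=("machine_id", "sensor_type"),
                     time_key="timestamp", min_size=3):
    """Group by several categorical keys, sort by time, drop small subgroups."""
    groups = defaultdict(list)
    for row in rows:
        groups[tuple(row[k] for k in keys)].append(row)

    kept = {}
    for name, stream in groups.items():
        if len(stream) >= min_size:  # filter out subgroups with too few samples
            kept[name] = sorted(stream, key=lambda r: r[time_key])
    return kept

# Subgroup sizes: 4 rows, 2 rows (dropped), 3 rows.
sizes = {("class_1", "pressure"): 4, ("class_1", "temperature"): 2, ("class_2", "pressure"): 3}
rows = [
    {"timestamp": t, "machine_id": m, "sensor_type": s, "value": 1.0 + 0.1 * t}
    for (m, s), n in sizes.items()
    for t in range(n)
]
kept = subgroup_streams(rows)
print(sorted(kept))
```

The resulting subgroup streams can then be passed to a horizontal, vertical, or hybrid split in the same way as single-key groups.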

This hypothetical example illustrates how many different machine learning splits can be constructed. Just as it is important to ensure fairness in machine learning when evaluating models, it is equally important to spend enough time on how you partition your dataset and on how that choice can bias downstream models.
