Quickly gaining 2,500 stars, Andrej Karpathy rewrote a minGPT library


As a representative work of "brute-force aesthetics" in artificial intelligence, GPT has stolen the limelight. From the 117 million parameters of the original GPT, it has grown all the way to the 175 billion parameters of GPT-3. With the release of GPT-3, OpenAI opened its commercial API to the community and encouraged everyone to run more experiments with GPT-3. However, using the API requires an application, and that application is likely to come to nothing.

To let researchers with limited resources experience the fun of playing with large models, former Tesla AI director Andrej Karpathy wrote a small GPT training library in PyTorch, using only about 300 lines of code, and named it minGPT. minGPT can perform addition and character-level language modeling, and its accuracy is not bad.

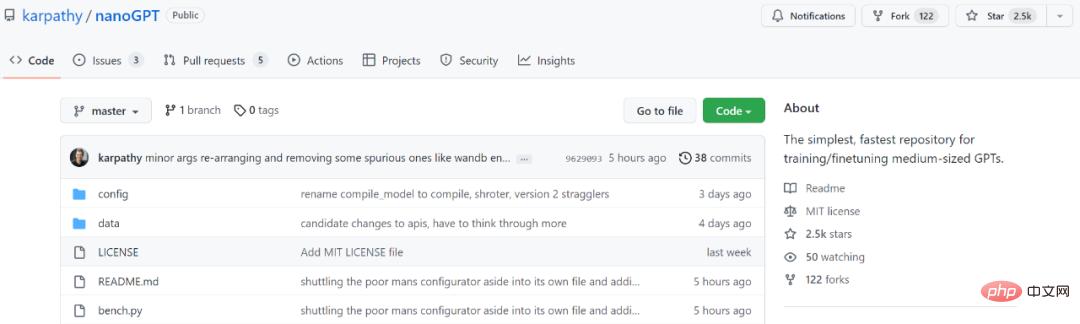

Two years later, minGPT has a successor: Karpathy has launched a new version named nanoGPT, a library for training and fine-tuning medium-sized GPTs. In just a few days since its launch, it has collected 2.5K stars.

## Project address: https://github.com/karpathy/nanoGPT

In the project introduction, Karpathy wrote: "nanoGPT is the simplest and fastest library for training and fine-tuning medium-sized GPTs. It is a rewrite of minGPT, because minGPT became so complex that I don't want to use it anymore. nanoGPT is still under development and is currently focused on reproducing GPT-2 on the OpenWebText dataset.

The design goal of the nanoGPT code is to be simple and easy to read: train.py is about 300 lines of training code, and model.py is a GPT model definition of about 300 lines that can optionally load the GPT-2 weights from OpenAI."

To prepare the dataset, the user first needs to tokenize some documents into a simple 1D array of token indices.

$ cd data/openwebtext

$ python prepare.py

This generates two files, train.bin and val.bin, each containing a raw sequence of uint16 values that represent GPT-2 BPE token ids. The training script then attempts to replicate the smallest version of GPT-2 provided by OpenAI, the 124M version.
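To make the file format concrete, here is a minimal sketch of writing and reading a headerless uint16 token file using only the Python standard library. The token ids and the temporary file path are made up for illustration, and this is not the project's prepare.py; a real pipeline would get the ids from a GPT-2 BPE tokenizer and would more likely use an array library for the I/O.

```python
import os
import tempfile
from array import array

# Hypothetical token ids standing in for the output of a GPT-2 BPE tokenizer.
# The GPT-2 vocabulary has 50,257 entries, so every id fits in 16 bits.
token_ids = [15496, 11, 995, 0, 50256]

ids = array("H", token_ids)   # "H" = unsigned short
assert ids.itemsize == 2      # 2 bytes per token on common platforms

# Write the raw values with no header, like a .bin token file.
path = os.path.join(tempfile.mkdtemp(), "train.bin")
with open(path, "wb") as f:
    ids.tofile(f)

# Read back: the file size alone determines the token count.
n_tokens = os.path.getsize(path) // 2
loaded = array("H")
with open(path, "rb") as f:
    loaded.fromfile(f, n_tokens)

print(n_tokens, list(loaded) == token_ids)  # prints: 5 True
```

Because each token takes exactly two bytes, a training loop can jump to any offset in the file and read a fixed-length window of tokens without parsing anything.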

$ python train.py

If you want to use PyTorch distributed data parallelism (DDP) for training, please use torchrun to run the script.

$ torchrun --standalone --nproc_per_node=4 train.py
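When a script is launched through torchrun, each worker process receives its position in the job through the environment variables RANK, LOCAL_RANK, and WORLD_SIZE. The sketch below shows one way a script might read them and fall back to single-process defaults; the helper name `ddp_info` and the fake environment are illustrative assumptions, not code from nanoGPT.

```python
import os

def ddp_info(env=os.environ):
    """Read the per-process variables that torchrun exports.

    Falls back to single-process values when the script is started
    with plain `python train.py` and the variables are absent.
    """
    rank = int(env.get("RANK", 0))
    return {
        "is_ddp": "RANK" in env,          # launched via torchrun?
        "rank": rank,                     # global process index
        "local_rank": int(env.get("LOCAL_RANK", 0)),  # index on this node
        "world_size": int(env.get("WORLD_SIZE", 1)),  # total processes
        "is_master": rank == 0,           # e.g. the only process that logs
    }

# Simulate the third of four workers started with --nproc_per_node=4.
fake_env = {"RANK": "2", "LOCAL_RANK": "2", "WORLD_SIZE": "4"}
worker = ddp_info(fake_env)
single = ddp_info({})

print(worker["rank"], worker["world_size"], worker["is_master"])  # prints: 2 4 False
print(single["is_ddp"], single["world_size"])                     # prints: False 1
```

A typical use of these values is to pick the GPU from `local_rank` and to restrict logging and checkpointing to the master process.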

Users can also sample from the trained model:

$ python sample.py

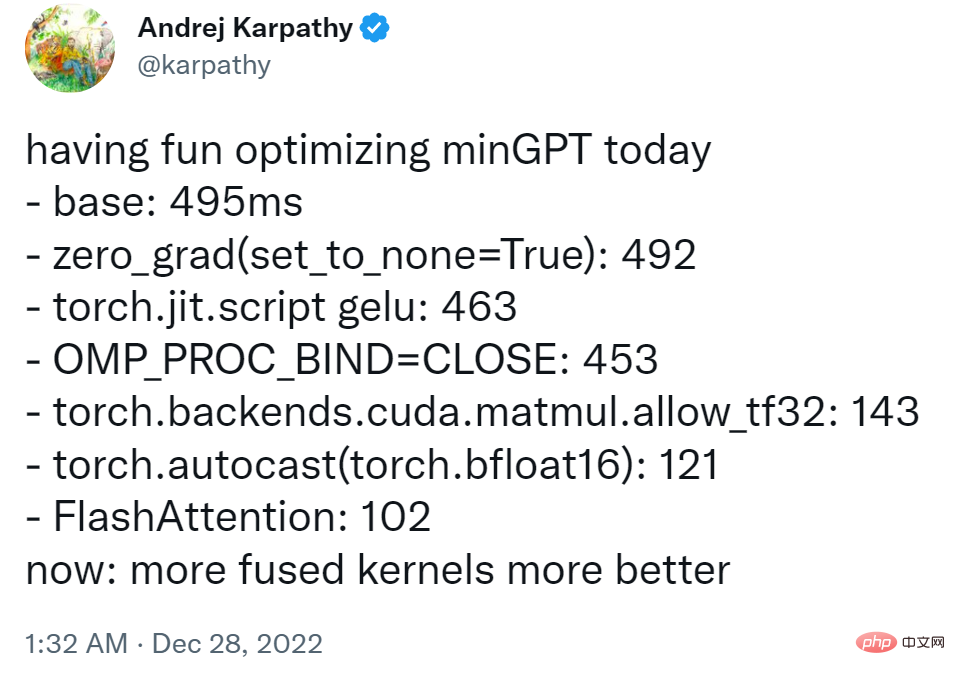

Karpathy said that, for now, one night of training on 1 A100 40GB GPU reaches a training loss of about 3.74, and training on 4 GPUs reaches about 3.60. Training for 400,000 iterations (~1 day) on an 8 x A100 40GB node currently brings the loss down to 3.1.
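To put these numbers in context, the short calculation below assumes the reported loss is the usual cross-entropy in nats per token over the 50,257-entry GPT-2 vocabulary. Under that assumption, a model that guesses uniformly at random would score about 10.82, and exponentiating a loss gives the corresponding perplexity.

```python
import math

vocab_size = 50257  # size of the GPT-2 BPE vocabulary

# Cross-entropy of a uniform guess over the whole vocabulary.
uniform_loss = -math.log(1.0 / vocab_size)
print(f"uniform-guess loss: {uniform_loss:.2f}")  # prints 10.82

# Perplexity = exp(loss): the effective number of equally likely
# next tokens the model is choosing between.
for loss in (3.74, 3.60, 3.1):
    print(f"loss {loss:.2f} -> perplexity {math.exp(loss):.1f}")
```

A loss of 3.1 therefore corresponds to narrowing roughly 50,000 candidate tokens down to about 22 effective choices per position.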

As for fine-tuning GPT on new text, users can go to data/shakespeare and look at prepare.py. Unlike the OpenWebText preparation, this runs in seconds. Fine-tuning itself also takes very little time, e.g. just a few minutes on a single GPU. The following is an example of running fine-tuning:

$ python train.py config/finetune_shakespeare.py
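The command above passes a Python file as the configuration. One simple way a training script could support this is to execute the file and let its assignments override a dictionary of defaults. The sketch below illustrates that idea only; the helper `load_config`, the parameter names, and the values are hypothetical and are not taken from the nanoGPT source.

```python
import os
import tempfile

# Hypothetical defaults; a real training script would define many more.
defaults = {"init_from": "scratch", "learning_rate": 6e-4, "max_iters": 600000}

def load_config(path, defaults):
    """Execute a Python config file and merge its assignments over defaults."""
    overrides = {}
    with open(path) as f:
        exec(f.read(), {}, overrides)  # top-level assignments land in `overrides`
    unknown = set(overrides) - set(defaults)
    if unknown:
        # Reject typos instead of silently ignoring them.
        raise KeyError(f"unknown config keys: {sorted(unknown)}")
    return {**defaults, **overrides}

# A hypothetical fine-tuning config: start from pretrained weights,
# use a smaller learning rate, and train for only a few iterations.
config_text = 'init_from = "gpt2"\nlearning_rate = 3e-5\nmax_iters = 20\n'
path = os.path.join(tempfile.mkdtemp(), "finetune_example.py")
with open(path, "w") as f:
    f.write(config_text)

config = load_config(path, defaults)
print(config["init_from"], config["learning_rate"], config["max_iters"])
```

Keeping the config as plain Python means it needs no extra parser, at the cost of executing arbitrary code, so this pattern only suits config files you trust.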

As soon as the project went online, people had already started trying it.

Readers who want to try it can refer to the original project.
