Machine learning model classifies organic reaction mechanisms with outstanding accuracy

The pace of chemical reaction discovery depends not only on how quickly experimental data can be obtained, but also on how easily chemists can interpret that data. Uncovering the mechanistic basis of new catalytic reactions is a particularly complex problem that often requires expertise in computational and physical organic chemistry. Yet studying catalytic reactions matters, because catalysis underpins the most efficient chemical processes.

Recently, Burés and Larrosa from the Department of Chemistry at the University of Manchester (UoM), UK, reported a machine learning model showing that a deep neural network can be trained to analyze routine kinetic data and automatically elucidate the corresponding mechanistic class without any additional user input. The model identifies a wide range of mechanism types with excellent accuracy.

The findings show that AI-guided mechanism classification is a powerful new tool for simplifying and automating mechanism elucidation. The work is expected to further advance the fully automated discovery and development of organic reactions.

The research, titled "Organic reaction mechanism classification using machine learning", was published in Nature on January 25, 2023.

Paper link: https://www.nature.com/articles/s41586-022-05639-4

Traditional approaches to elucidating reaction mechanisms

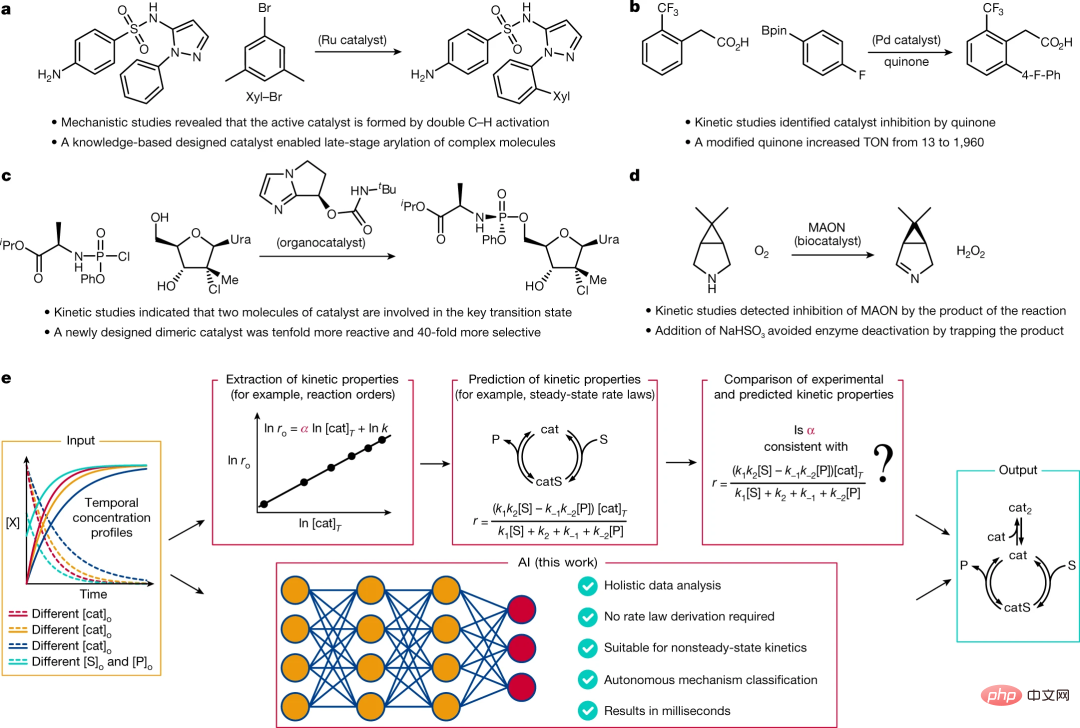

Determining the exact sequence of elementary steps by which a substrate is converted into a product is critical for rationally improving synthetic methods, designing new catalysts and safely scaling up industrial processes. Elucidating a reaction mechanism requires collecting multiple kinetic profiles, which human experts must then analyze. Although reaction-monitoring technology has improved substantially over the past few decades, to the point where kinetic data collection can be fully automated, the theoretical framework underpinning mechanism elucidation has not kept pace.

The current kinetic analysis pipeline consists of three main steps: extracting kinetic properties from the experimental data, predicting the kinetic properties of every plausible mechanism, and comparing the experimentally extracted properties with the predicted ones.
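As a toy illustration of these three steps, the sketch below uses one kinetic property, the apparent order in catalyst. It extracts that order from two initial rates, looks up the order that each candidate mechanism predicts, and keeps the candidates that match. The mechanism labels, predicted orders, rates and tolerance are all hypothetical. Real analyses compare many properties at once.

```python
import math

# Step 2 (prediction): hypothetical candidate mechanisms and the order in
# catalyst each one predicts. Labels and values are illustrative only.
PREDICTED_CATALYST_ORDER = {
    "single catalyst molecule in the rate-determining step": 1.0,
    "catalyst resting mostly as an off-cycle dimer": 0.5,
    "two catalyst molecules in the rate-determining step": 2.0,
}


def extract_order(rate_1, rate_2, cat_1, cat_2):
    """Step 1 (extraction): apparent order n from initial rates measured at
    two catalyst loadings, assuming rate is proportional to [cat]**n."""
    return math.log(rate_2 / rate_1) / math.log(cat_2 / cat_1)


def compare(observed, predictions, tolerance=0.15):
    """Step 3 (comparison): mechanisms whose predicted order lies within
    `tolerance` of the observed order."""
    return [name for name, order in predictions.items()
            if abs(order - observed) <= tolerance]


# Hypothetical data: doubling [cat] raises the initial rate about 1.41-fold.
observed = extract_order(1.00e-4, 1.41e-4, 0.010, 0.020)
print(round(observed, 2))  # prints 0.5
print(compare(observed, PREDICTED_CATALYST_ORDER))
```

Every step here depends on a human choosing which property to extract and which mechanisms to enumerate. Those are the manual steps that the machine learning approach aims to remove.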

For more than a century, chemists have extracted mechanistic information from reaction rates. One method still in use today evaluates the initial rate of a reaction, focusing on the consumption of the first few percent of the starting material. The method is popular because, in most cases, reactant concentration changes approximately linearly with time at the start of the reaction and is therefore simple to analyze. Although insightful, the technique ignores the changes in rate and concentration that occur over most of the reaction's time course.

Over the past few decades, more advanced methods have been developed to evaluate the concentrations of reaction components throughout the entire course of the reaction. These methods are complemented by mathematical treatments that extract, from kinetic plots, the number of molecules of a given component involved in a reaction step (known as the reaction order in that component). Such techniques will certainly continue to provide insight into chemical reactivity. However, they are limited to determining orders in individual components and do not provide a comprehensive mechanistic hypothesis describing the kinetic behavior of the whole catalytic system.
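One such graphical treatment is variable time normalization analysis (VTNA). Profiles from runs at different catalyst loadings are replotted against a normalized time axis, t·[cat]^γ. The candidate γ that makes the curves overlay best is taken as the order in catalyst. The sketch below assumes that catalyst concentration stays constant during each run and that the synthetic data are first order in substrate. It scores overlay with a simple squared-difference measure; all of these choices are illustrative.

```python
import math


def make_runs(k, gamma, loadings, times, s0=1.0):
    """Hypothetical runs, first order in substrate: rate = k [cat]^gamma [S],
    so [P](t) = s0 * (1 - exp(-k [cat]^gamma t)) with [cat] held constant."""
    return [(times,
             [s0 * (1.0 - math.exp(-k * cat ** gamma * t)) for t in times],
             cat)
            for cat in loadings]


def interp(x, xs, ys):
    """Linear interpolation of ys(xs) at x; xs must be strictly increasing."""
    for i in range(1, len(xs)):
        if x <= xs[i]:
            x0, x1, y0, y1 = xs[i - 1], xs[i], ys[i - 1], ys[i]
            return y0 + (y1 - y0) * (x - x0) / (x1 - x0)
    return ys[-1]


def overlay_error(runs, gamma):
    """Squared mismatch between run 0 and each other run once time is
    rescaled to t * [cat]^gamma (valid only if [cat] stays constant)."""
    ref_t, ref_p, ref_cat = runs[0]
    ref_tau = [t * ref_cat ** gamma for t in ref_t]
    error = 0.0
    for times, product, cat in runs[1:]:
        tau = [t * cat ** gamma for t in times]
        upper = min(ref_tau[-1], tau[-1])  # compare only the shared range
        for x, p in zip(ref_tau, ref_p):
            if x <= upper:
                error += (p - interp(x, tau, product)) ** 2
    return error


def vtna_order(runs, candidates):
    """Candidate order in catalyst that makes the profiles overlay best."""
    return min(candidates, key=lambda g: overlay_error(runs, g))


times = [float(t) for t in range(0, 121, 5)]
runs = make_runs(k=1.0, gamma=1.0, loadings=(0.01, 0.02), times=times)
candidates = [round(0.05 * i, 2) for i in range(41)]  # 0.0 to 2.0, step 0.05
print(vtna_order(runs, candidates))  # prints 1.0
```

The output is a single number for a single component. That limitation motivates the machine learning approach described next.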

Figure 1: Relevance and state-of-the-art techniques for kinetic analysis. (Source: paper)

AI changes the field of kinetic analysis

Machine learning is revolutionizing the way chemists solve problems, from designing molecules and the routes to synthesize them to understanding reaction mechanisms. Burés and Larrosa now bring this revolution to kinetic analysis, using machine learning models to classify reactions on the basis of simulated kinetic profiles.

Here, the researchers show that a deep learning model trained on simulated kinetic data can correctly elucidate a variety of mechanisms from time-concentration profiles. The model simplifies kinetic analysis by removing the need to derive rate laws and to extract and predict kinetic properties. This should make mechanism elucidation far easier in any synthetic laboratory.

Because the method analyzes all available kinetic data holistically, it improves the ability to interrogate reaction profiles and eliminates potential human error in kinetic analysis. It also extends the scope of kinetic analysis to non-steady-state conditions (including activation and deactivation processes) and to reversible reactions. The approach complements currently available kinetic analysis methods and should be particularly useful in the most challenging cases.

Study details

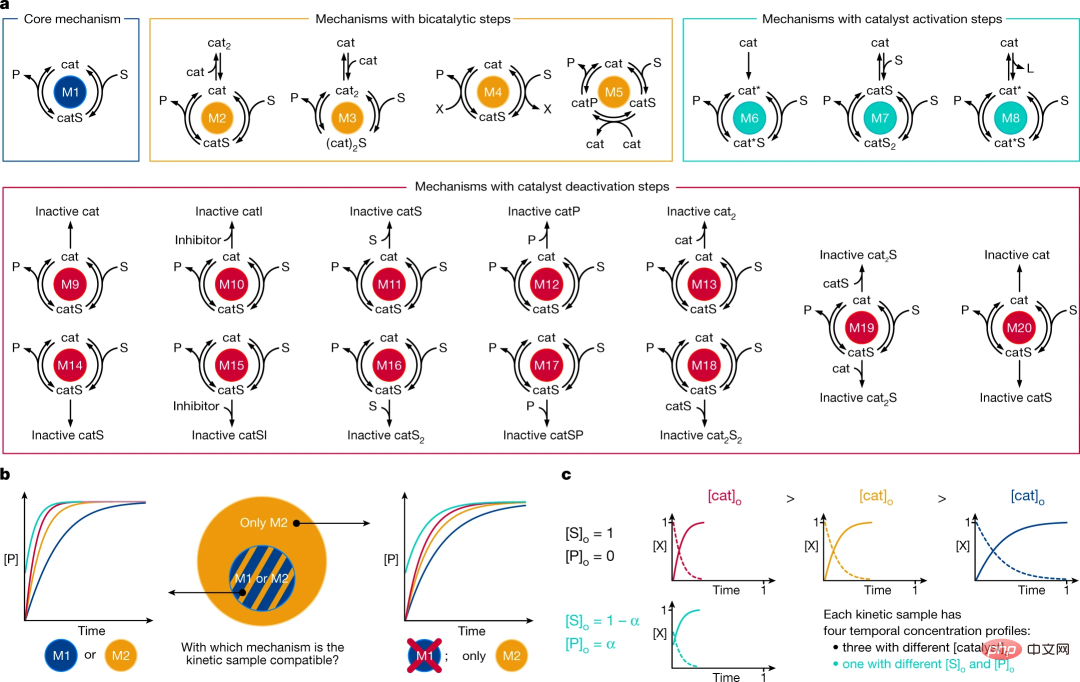

The researchers defined 20 classes of reaction mechanism and formulated rate laws for each class. Each mechanism is described mathematically by a set of ordinary differential equations (ODEs) that relate the concentrations of the chemical species to a set of kinetic constants (k1, …, kn). Solving these equations produced millions of simulations describing the decay of reactants and the formation of products. The simulated kinetic data were then used to train a learning algorithm to recognize the characteristic signature of each mechanistic class. The resulting classification model takes kinetic profiles as input, including initial concentrations and time-concentration data, and outputs the mechanistic class of the reaction.
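To show what "describing a mechanism with ODEs" means in practice, the sketch below integrates one textbook catalytic cycle: cat + S ⇌ catS (k1, k-1), then catS → cat + P (k2). It uses a hand-written fixed-step fourth-order Runge-Kutta solver. The mechanism, rate constants and concentrations are hypothetical, and the sketch does not reproduce the paper's 20 classes or its solver.

```python
def catalytic_cycle_rhs(c, k1, km1, k2):
    """dc/dt for cat + S <=> catS (k1, k-1) and catS -> cat + P (k2)."""
    cat, s, cat_s, _ = c
    v_bind = k1 * cat * s - km1 * cat_s  # net reversible binding rate
    v_turn = k2 * cat_s                  # product-forming (turnover) rate
    return [-v_bind + v_turn, -v_bind, v_bind - v_turn, v_turn]


def rk4(f, c0, t_end, n_steps, params):
    """Classical fixed-step fourth-order Runge-Kutta integration of
    dc/dt = f(c, *params); returns a list of (t, concentrations)."""
    dt = t_end / n_steps
    c = list(c0)
    trajectory = [(0.0, list(c))]
    for i in range(n_steps):
        s1 = f(c, *params)
        s2 = f([x + 0.5 * dt * d for x, d in zip(c, s1)], *params)
        s3 = f([x + 0.5 * dt * d for x, d in zip(c, s2)], *params)
        s4 = f([x + dt * d for x, d in zip(c, s3)], *params)
        c = [x + dt / 6.0 * (a + 2.0 * b + 2.0 * e + g)
             for x, a, b, e, g in zip(c, s1, s2, s3, s4)]
        trajectory.append(((i + 1) * dt, list(c)))
    return trajectory


c0 = [0.01, 1.0, 0.0, 0.0]  # [cat]0, [S]0, [catS]0, [P]0 in M (hypothetical)
trajectory = rk4(catalytic_cycle_rhs, c0, t_end=400.0, n_steps=8000,
                 params=(10.0, 1.0, 0.5))  # hypothetical k1, k-1, k2
for t, (cat, s, cat_s, p) in trajectory[::1600]:
    print(f"t = {t:6.1f} s  [S] = {s:.3f} M  [P] = {p:.3f} M")
```

Each distinct mechanism class would change only the right-hand-side function. Solving it for many sets of kinetic constants yields the labeled profiles used for training.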

Figure 2: Mechanistic scope and data composition. (Source: paper)

Training deep learning models often requires large amounts of data, which can be a considerable challenge when the data must be collected experimentally.

Burés and Larrosa's approach to training the algorithm sidesteps the bottleneck of generating large amounts of experimental kinetic data. The researchers numerically solved the sets of ODEs to generate 5 million kinetic samples for model training and validation, without resorting to steady-state approximations.
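The sketch below shows how such a synthetic, labeled dataset could be assembled at small scale. Kinetic constants are drawn log-uniformly and each mechanism is integrated in full, with the catS intermediate tracked explicitly rather than set to steady state. The resulting [P] profile is recorded at six evenly spaced time points. The two class labels, the parameter ranges and the fixed-step RK4 solver are assumptions for illustration. At the paper's scale of millions of samples, and with stiffer parameter ranges, an adaptive or implicit solver would be more appropriate.

```python
import math
import random


def rhs(c, k1, km1, k2, kd):
    """dc/dt for cat + S <=> catS -> cat + P plus first-order deactivation of
    free catalyst (kd = 0 switches it off). catS is integrated explicitly,
    so no steady-state approximation is made."""
    cat, s, cat_s, _ = c
    v_bind = k1 * cat * s - km1 * cat_s  # net reversible binding
    v_turn = k2 * cat_s                  # product-forming step
    v_dead = kd * cat                    # irreversible catalyst deactivation
    return [-v_bind + v_turn - v_dead, -v_bind, v_bind - v_turn, v_turn]


def simulate(params, c0, t_end=200.0, n_steps=4000, n_samples=6):
    """Fixed-step RK4; returns [P] at n_samples evenly spaced times
    (n_steps must be divisible by n_samples - 1)."""
    dt = t_end / n_steps
    every = n_steps // (n_samples - 1)
    c = list(c0)
    samples = [c[3]]
    for step in range(1, n_steps + 1):
        s1 = rhs(c, *params)
        s2 = rhs([x + 0.5 * dt * d for x, d in zip(c, s1)], *params)
        s3 = rhs([x + 0.5 * dt * d for x, d in zip(c, s2)], *params)
        s4 = rhs([x + dt * d for x, d in zip(c, s3)], *params)
        c = [x + dt / 6.0 * (a + 2.0 * b + 2.0 * e + g)
             for x, a, b, e, g in zip(c, s1, s2, s3, s4)]
        if step % every == 0:
            samples.append(c[3])
    return samples


def log_uniform(rng, lo, hi):
    """Sample a rate constant uniformly on a log scale between lo and hi."""
    return math.exp(rng.uniform(math.log(lo), math.log(hi)))


def make_dataset(n_per_class, seed=0):
    """Return (features, label) pairs for two hypothetical mechanism classes."""
    rng = random.Random(seed)
    data = []
    for label in ("no_deactivation", "deactivation"):
        for _ in range(n_per_class):
            k1 = log_uniform(rng, 1.0, 10.0)
            km1 = log_uniform(rng, 0.1, 1.0)
            k2 = log_uniform(rng, 0.1, 1.0)
            kd = log_uniform(rng, 0.01, 0.1) if label == "deactivation" else 0.0
            c0 = [0.01, 1.0, 0.0, 0.0]  # [cat]0, [S]0, [catS]0, [P]0 in M
            data.append((simulate((k1, km1, k2, kd), c0), label))
    return data


dataset = make_dataset(n_per_class=5)
print(len(dataset), dataset[0][1], [round(x, 3) for x in dataset[0][0]])
```

Because the labels come from the simulation itself, the dataset can be made as large as compute allows. This is the bottleneck that the simulated-data strategy avoids.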

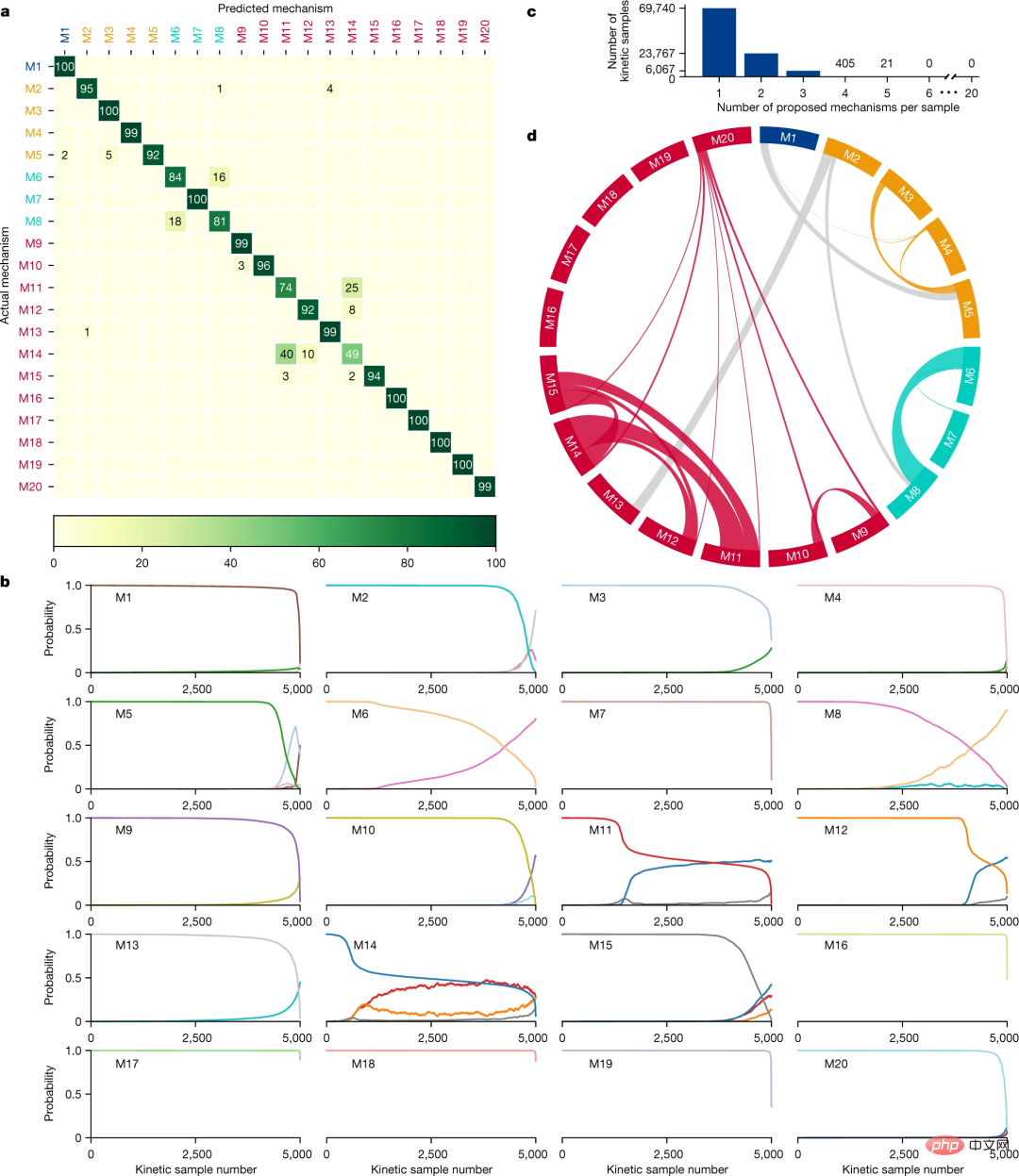

The model contains 576,000 trainable parameters and combines two types of neural network: (1) a long short-term memory (LSTM) network, which processes temporal data sequences (i.e., the time-concentration data); and (2) a fully connected network, which processes non-temporal data (i.e., the initial catalyst concentration in each kinetic run, together with the features extracted by the LSTM). The model outputs a probability for each mechanism class, and these probabilities sum to 1.
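To make the two-branch design concrete, here is a minimal, untrained forward pass in plain Python. This is not the authors' implementation. The layer sizes, the single dense output layer, the random weights and the input layout (([S], [P]) at each time point, plus the initial catalyst concentration as the non-temporal feature) are all assumptions. Its probabilities therefore carry no chemical meaning, but they are valid: positive and summing to 1.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(w, v):
    return [sum(wi * vi for wi, vi in zip(row, v)) for row in w]


def rand_matrix(rng, rows, cols, scale=0.1):
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]


class TwoBranchClassifier:
    """Untrained sketch: an LSTM reads the time-concentration sequence, then a
    fully connected layer combines its final hidden state with non-temporal
    features and a softmax turns the scores into class probabilities."""

    def __init__(self, n_inputs, n_hidden, n_static, n_classes, seed=0):
        rng = random.Random(seed)
        self.n_hidden = n_hidden
        # One (W, U, b) triple per LSTM gate: input, forget, candidate, output.
        self.gates = {
            name: (rand_matrix(rng, n_hidden, n_inputs),
                   rand_matrix(rng, n_hidden, n_hidden),
                   [0.0] * n_hidden)
            for name in ("i", "f", "g", "o")
        }
        self.w_out = rand_matrix(rng, n_classes, n_hidden + n_static)
        self.b_out = [0.0] * n_classes

    def lstm(self, sequence):
        """Run the LSTM over the sequence and return the final hidden state."""
        h = [0.0] * self.n_hidden
        c = [0.0] * self.n_hidden
        for x in sequence:
            pre = {name: [a + b + bias for a, b, bias in
                          zip(matvec(w, x), matvec(u, h), bvec)]
                   for name, (w, u, bvec) in self.gates.items()}
            i = [sigmoid(v) for v in pre["i"]]
            f = [sigmoid(v) for v in pre["f"]]
            g = [math.tanh(v) for v in pre["g"]]
            o = [sigmoid(v) for v in pre["o"]]
            c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
            h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
        return h

    def predict_proba(self, sequence, static):
        features = self.lstm(sequence) + list(static)
        logits = [z + b for z, b in zip(matvec(self.w_out, features), self.b_out)]
        m = max(logits)  # subtract the max for numerical stability
        exps = [math.exp(z - m) for z in logits]
        total = sum(exps)
        return [e / total for e in exps]


model = TwoBranchClassifier(n_inputs=2, n_hidden=8, n_static=1, n_classes=20)
sequence = [[1.0, 0.0], [0.8, 0.2], [0.6, 0.4],
            [0.45, 0.55], [0.3, 0.7], [0.2, 0.8]]  # hypothetical ([S], [P])
probs = model.predict_proba(sequence, static=[0.01])  # hypothetical [cat]0
print(len(probs), round(sum(probs), 6))  # 20 probabilities summing to 1
```

In a real model these weights would be learned from the simulated dataset, typically in a deep learning framework rather than in plain Python.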

The researchers evaluated the trained model on a test set of simulated kinetic profiles and found that it assigned them to the correct mechanistic class with 92.6% accuracy.

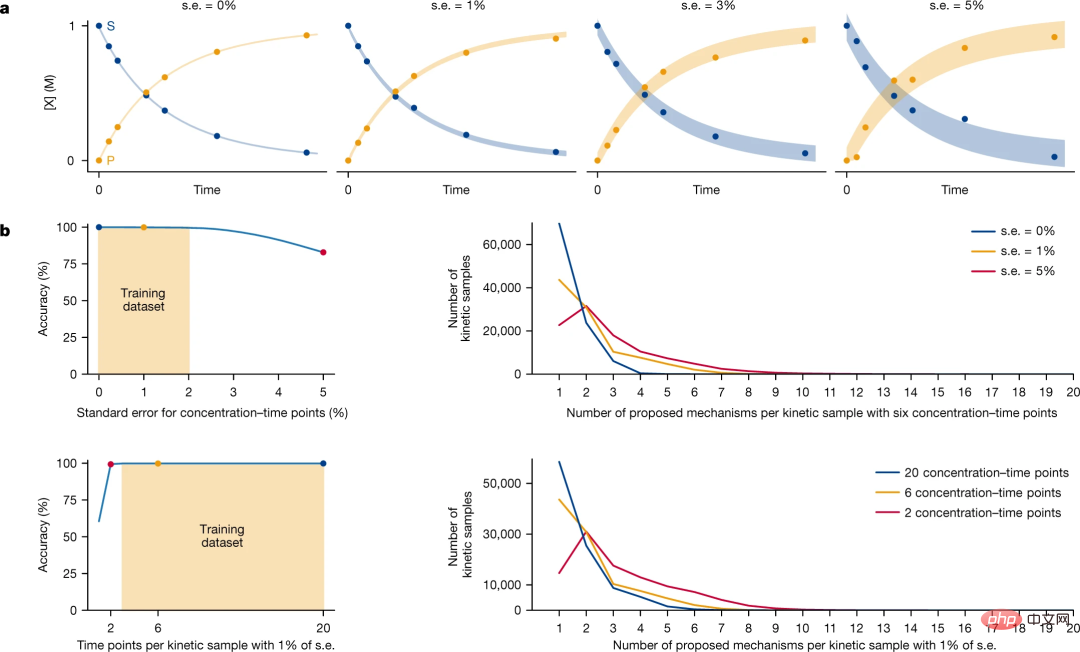

Figure 3: Performance of the machine learning model on the test set, with six time points per kinetic profile. (Source: paper)

The model also performs well when "noisy" data are deliberately introduced, which indicates that it can be used to classify experimental data.
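Robustness of this kind is usually probed by perturbing clean simulated profiles before classifying them, and by varying how many time points are kept. The sketch below assumes relative Gaussian error and evenly spaced subsampling. The helpers `add_noise` and `subsample` are hypothetical, and the paper's exact error model and sampling scheme may differ.

```python
import random


def add_noise(profile, rel_sigma, rng):
    """Add Gaussian noise with standard deviation rel_sigma * value to each
    concentration; negative results are clipped to zero."""
    return [max(0.0, c + rng.gauss(0.0, rel_sigma * c)) for c in profile]


def subsample(times, profile, n_points):
    """Keep n_points roughly evenly spaced samples, including both ends."""
    if n_points < 2 or n_points > len(times):
        raise ValueError("n_points must be between 2 and len(times)")
    idx = [round(i * (len(times) - 1) / (n_points - 1)) for i in range(n_points)]
    return [times[i] for i in idx], [profile[i] for i in idx]


rng = random.Random(42)
times = [10.0 * i for i in range(21)]
clean = [1.0 - 0.9 ** i for i in range(21)]  # hypothetical [P] profile
noisy = add_noise(clean, rel_sigma=0.05, rng=rng)
t6, p6 = subsample(times, noisy, 6)
print(t6)  # [0.0, 40.0, 80.0, 120.0, 160.0, 200.0]
```

Sweeping `rel_sigma` and `n_points` and re-measuring classification accuracy gives the kind of sensitivity study summarized in Figure 4.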

Figure 4: The impact of error and number of data points on machine learning model performance. (Source: paper)

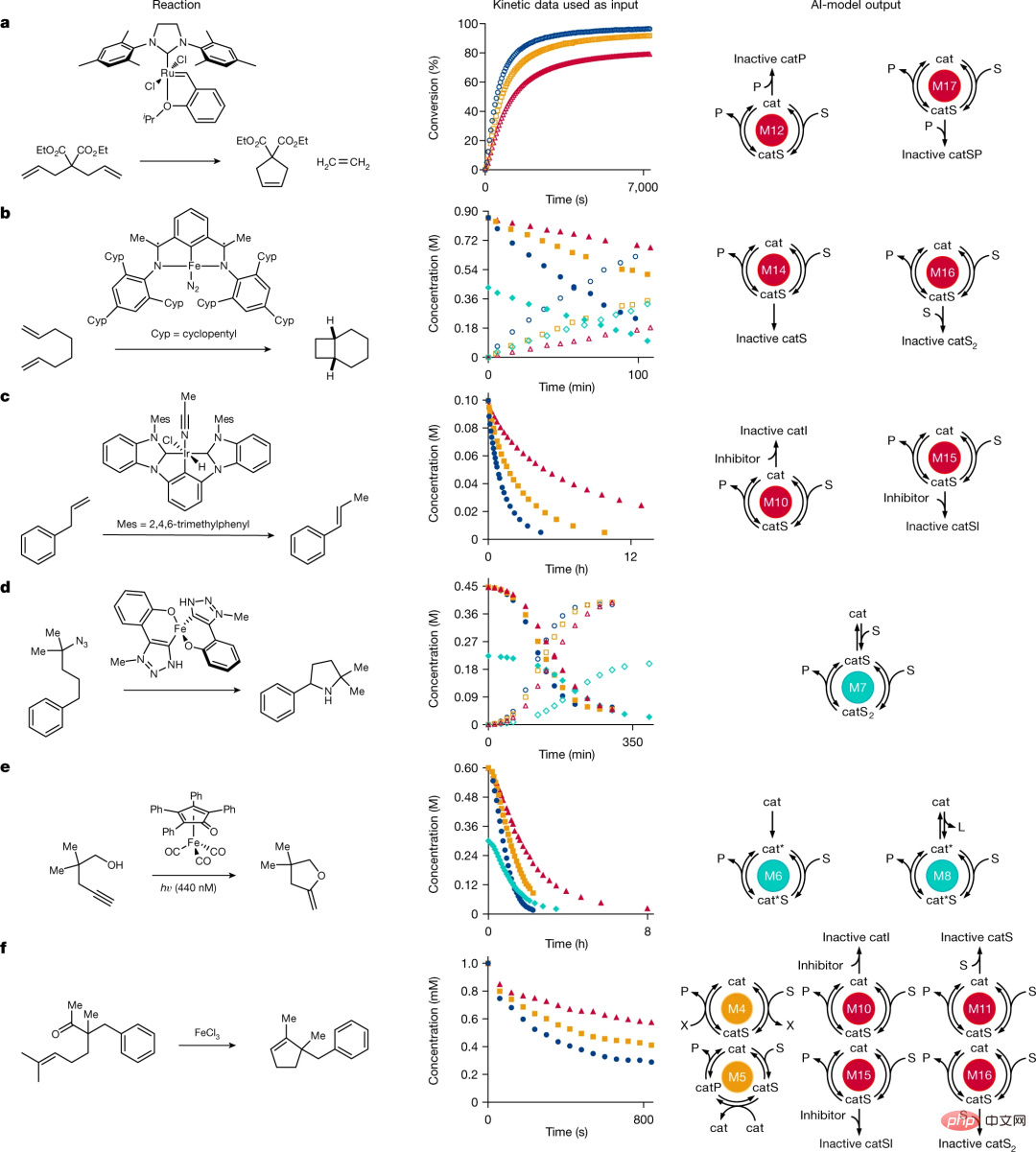

Finally, the researchers benchmarked their model on several previously reported experimental kinetic profiles. The predicted mechanisms agree well with the conclusions of the earlier kinetic studies, and in some cases the model identified mechanistic details that the original work had missed. For one challenging reaction, the model proposed three closely related mechanistic classes. The authors rightly point out that this is a feature of the model rather than a bug, because it signals that further targeted experiments are needed to pin down the mechanism.
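A shortlist like the three near-equal classes described above can be derived from the model's probability vector. The rule below keeps every class within a fixed margin of the top probability. That rule and its margin value of 0.1 are assumptions, and the labels and probabilities are placeholders rather than results from the paper.

```python
def rank_mechanisms(probs, labels, margin=0.1):
    """Sort classes by predicted probability and return the top class plus
    every class within `margin` of it (a hypothetical ambiguity rule)."""
    ranked = sorted(zip(labels, probs), key=lambda lp: lp[1], reverse=True)
    best = ranked[0][1]
    return [(lbl, p) for lbl, p in ranked if best - p <= margin]


labels = ["M1", "M2", "M3", "M4"]  # placeholder class labels
probs = [0.31, 0.05, 0.29, 0.35]   # placeholder model output
shortlist = rank_mechanisms(probs, labels)
print(shortlist)  # [('M4', 0.35), ('M1', 0.31), ('M3', 0.29)]
if len(shortlist) > 1:
    print("ambiguous: design experiments to discriminate",
          [lbl for lbl, _ in shortlist])
```

Reporting a shortlist instead of a single answer turns an uncertain classification into a concrete list of hypotheses to test.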

Figure 5: Case study with experimental kinetic data. (Source: paper)

In summary, Burés and Larrosa have developed a method that not only automates the lengthy process of deriving mechanistic hypotheses from kinetic studies, but also enables kinetic analysis of challenging reaction mechanisms. As with any technological advance in data analysis, the resulting mechanistic classifications should be treated as hypotheses that require further experimental support. Kinetic data can always be misinterpreted. Even so, the algorithm's ability to identify the correct reaction pathway with high accuracy from a small number of experiments could persuade more researchers to try kinetic analysis.

This approach could therefore popularize kinetic analysis and ease its incorporation into reaction development, especially as chemists become more familiar with machine learning algorithms.
