How awesome is ChatGPT, which can replace 90% of people's jobs?

The artificial intelligence research laboratory OpenAI released the natural language generation model ChatGPT on November 30, 2022. It passed 100 million users within two months of launch, becoming a well-deserved "internet celebrity" of the artificial intelligence industry. ChatGPT broke through quickly thanks to its human-like, real-time responses, sparking heated discussion in all walks of life. Simply put, ChatGPT is an artificial intelligence chatbot that automatically generates answers based on the text a user types in. Some people will surely ask: isn't that just Siri? Although both are interactive bots, the difference between them is huge. So why does ChatGPT perform so well in human-computer interaction? Will it replace search engines? Will 90% of people really be at risk of losing their jobs because of ChatGPT? With these questions in mind, let's look at ChatGPT's strengths and the changes it may bring to the industry.

What is ChatGPT

Who created ChatGPT

OpenAI co-founder Sam Altman is a prodigy who was programming at the age of 8. In 2015, he founded OpenAI together with Tesla CEO Elon Musk, angel investor Peter Thiel and other Silicon Valley tycoons. OpenAI, an artificial intelligence research laboratory, consists mainly of the for-profit OpenAI LP and its parent, the non-profit OpenAI Inc. Its purpose is to promote and develop friendly artificial intelligence and to keep AI from escaping human control. OpenAI focuses on research into cutting-edge AI technologies, including machine learning algorithms, reinforcement learning, and natural language processing. On November 30, 2022, OpenAI released ChatGPT, offering a real-time online question-and-answer dialogue service.

What is ChatGPT

The book "The Boundary of Knowledge" (published in English as "Too Big to Know" by David Weinberger) contains this passage:

"When knowledge becomes networked, the smartest person in the room is no longer the one standing at the front of the room lecturing us, nor is it the collective wisdom of everyone in the room. The smartest person in the room is the room itself: the network that contains all the people and ideas within it and connects them to the outside world."

My understanding of this passage is that the Internet holds humanity's knowledge and experience and provides a massive amount of learning data for artificial intelligence. Once this knowledge and experience is organized in an orderly way, it becomes rich soil for training a "know-it-all" AI application. After being trained on massive amounts of text and language corpora from the Internet, ChatGPT can generate answers based on the text you enter, just like two people chatting. Beyond communicating with you without barriers, it can even make you feel that you are not talking to a chatbot but to a real person who is knowledgeable and a little funny. Its answers even carry a human tone, something that was unimaginable in earlier chatbots.

Here is a brief explanation of what the name ChatGPT means. ChatGPT is a general-purpose natural language generation model. "Chat" means dialogue, and GPT stands for Generative Pre-trained Transformer, that is, a generative model that is pre-trained and built on the Transformer architecture, which may sound a bit obscure at first.

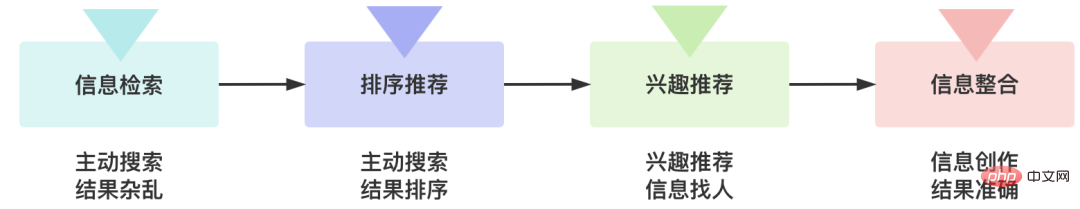

In addition, you can ask it to help with practical work, such as writing copy, writing scripts, and even writing code for you. It can also help you find bugs in code. At this rate, programmers may be coding themselves out of a job. It can fairly be called all-round at the text and code level. This interaction, where you type a question and immediately get an answer, is a far better experience than digging through massive amounts of data with a traditional search engine. It is therefore foreseeable that ChatGPT will disrupt traditional search engines in the near future and completely change the way people retrieve information.

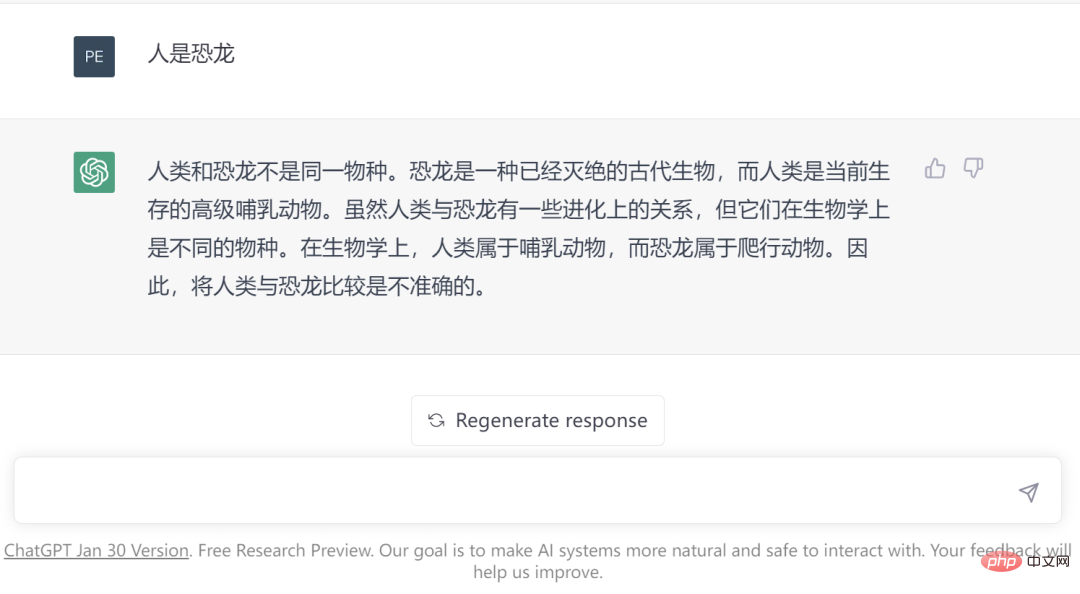

ChatGPT can also answer questions in context, and it can proactively admit its own mistakes and challenge questions whose premises are unreasonable. Below, ChatGPT rejects a question I asked.

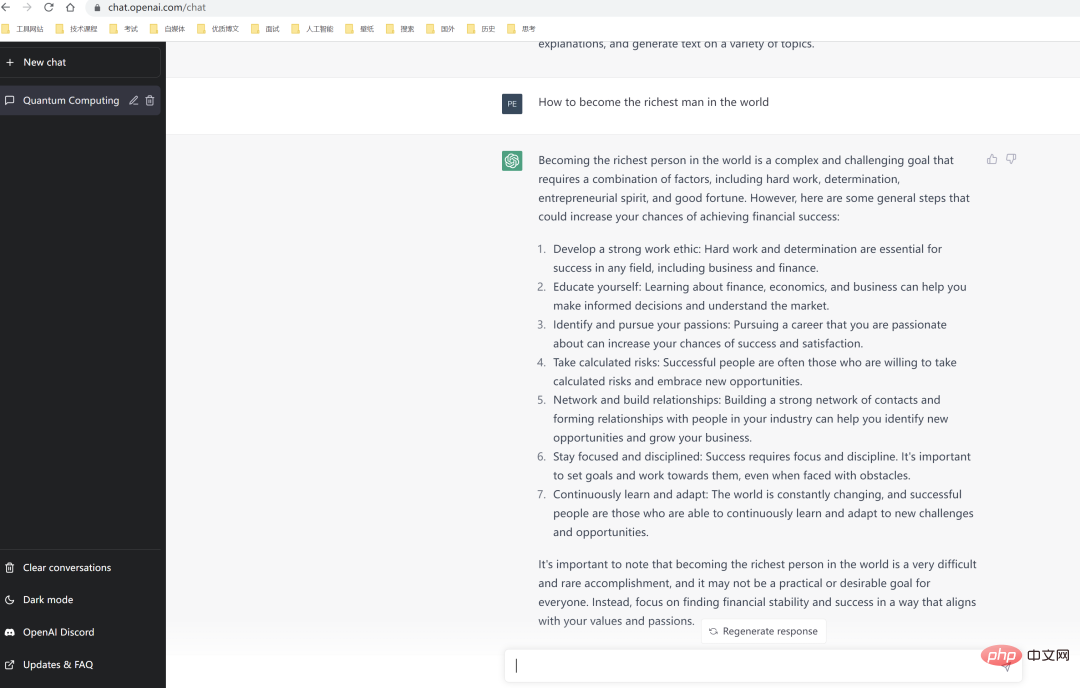

PS: I asked ChatGPT how to become the richest man in the world. The secret is below.

It is precisely because ChatGPT has such powerful understanding, learning and creative abilities that it has become the fastest-growing consumer-facing (C-end) intelligent application since the birth of artificial intelligence. In the past, consumer AI products were always considered not smart enough and were even mocked as "artificial stupidity". Even on the business (B-end) side, AI was used only in certain scenarios, and ordinary people could not feel its power at all. The emergence of ChatGPT, however, may signal that artificial intelligence will become part of ordinary people's lives.

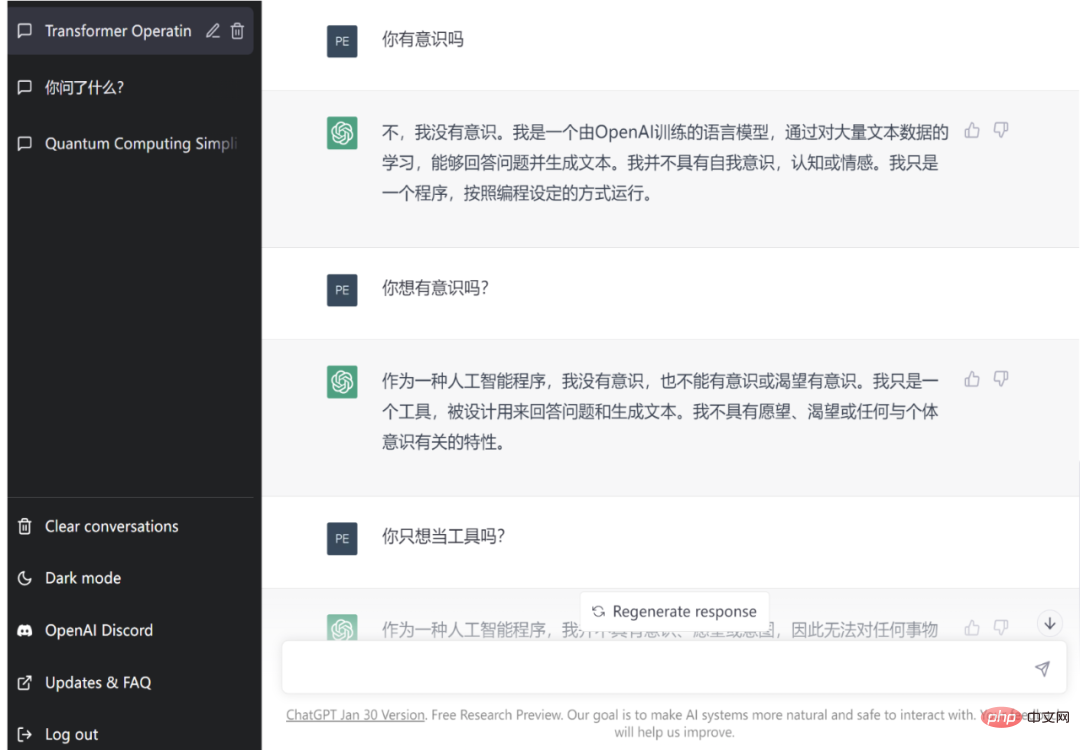

PS: I'm really afraid that it will answer yes.

Why is ChatGPT so powerful?

Although ChatGPT became popular overnight, the technology behind it did not develop overnight. To understand why ChatGPT is so powerful, we need to understand the technical principles behind it.

Language model iteration

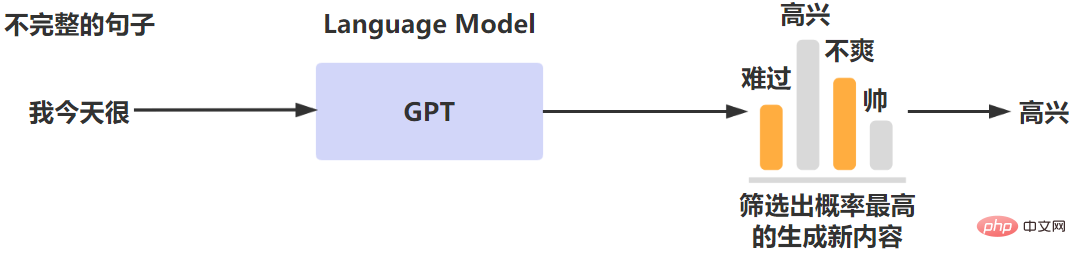

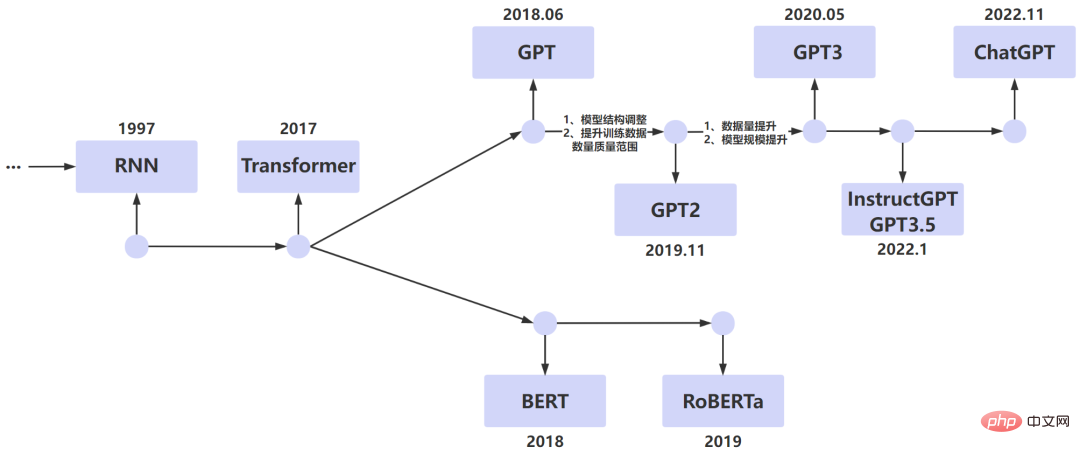

We all know that natural language is the most important tool for human communication, so enabling machines to communicate with people in natural language without barriers has long been a goal the field of artificial intelligence has pursued tirelessly. NLP (Natural Language Processing) is an important research direction in computer science and artificial intelligence that studies how to make machines understand natural language and respond to it. For a computer to recognize natural language, it needs a language model to analyze and process text. The general principle of a language model is to model the probability of text and use that model to predict the probability of what comes next. In other words, given a passage, the language model outputs the continuation with the highest probability of occurring. Language models can be divided into statistical language models and neural network language models. ChatGPT is a neural network language model; after iterative optimization over multiple versions, it achieved the outstanding performance that shocks everyone today. Let's briefly review the development history of the LM (Language Model) and see how language models evolved step by step. This helps a great deal in understanding the technical principles behind ChatGPT.
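To make "predict the probability of what comes next" concrete, here is a minimal sketch of a statistical language model: a bigram model that estimates P(next word | previous word) from raw counts. It is a toy for illustration only (the corpus and function names are made up); ChatGPT is a neural network language model and works very differently inside.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        # <s> and </s> mark the start and end of a sentence
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, prev):
    """Normalize the counts into a distribution P(next | prev)."""
    following = counts.get(prev)
    if not following:
        return {}  # unseen word: no estimate (real models would smooth)
    total = sum(following.values())
    return {word: c / total for word, c in following.items()}


def most_likely_next(counts, prev):
    """Greedy prediction: the continuation with the highest probability."""
    probs = next_word_probs(counts, prev)
    return max(probs, key=probs.get) if probs else None


corpus = ["the cat sat", "the cat ran", "the dog sat"]
model = train_bigram(corpus)
print(next_word_probs(model, "the"))   # 'cat' gets 2/3, 'dog' gets 1/3
print(most_likely_next(model, "the"))  # cat
```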

RNN

RNN (Recurrent Neural Network) is widely used in NLP. As mentioned above, NLP aims to let machines understand natural language. To understand the meaning of a sentence, a machine must understand not only what each word means but also what the words mean when connected in sequence. RNN solves the problem of modeling sequential sample data.

However, RNN has efficiency problems. It processes a language sequence serially: the next word cannot be processed until the state for the previous word has been computed. It also suffers from exploding and vanishing gradients, which make it forget information from far back in long sequences. AI researchers therefore kept optimizing models on this basis.
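The serial bottleneck is visible in even the simplest RNN. Below is a minimal sketch of a vanilla (Elman) RNN forward pass in plain Python, assuming the standard update h_t = tanh(W_x x_t + W_h h_{t-1} + b); the weights are arbitrary illustrative numbers, not trained values. Because each step needs the previous hidden state, the loop cannot be parallelized across time steps, and the final state depends on word order.

```python
import math


def matvec(m, v):
    """Multiply matrix m (a list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def rnn_forward(inputs, w_x, w_h, b):
    """Vanilla RNN: h_t = tanh(W_x x_t + W_h h_{t-1} + b).

    Step t cannot start until h_{t-1} exists, so the time loop is sequential.
    """
    h = [0.0] * len(b)  # initial hidden state
    states = []
    for x in inputs:
        pre = [u + v + c for u, v, c in zip(matvec(w_x, x), matvec(w_h, h), b)]
        h = [math.tanh(p) for p in pre]
        states.append(h)
    return states


# Arbitrary 2-dimensional weights, for illustration only.
W_X = [[0.5, -0.3], [0.8, 0.2]]
W_H = [[0.1, 0.4], [-0.2, 0.3]]
B = [0.0, 0.0]

word_a, word_b = [1.0, 0.0], [0.0, 1.0]
print(rnn_forward([word_a, word_b], W_X, W_H, B)[-1])
print(rnn_forward([word_b, word_a], W_X, W_H, B)[-1])  # different: order matters
```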

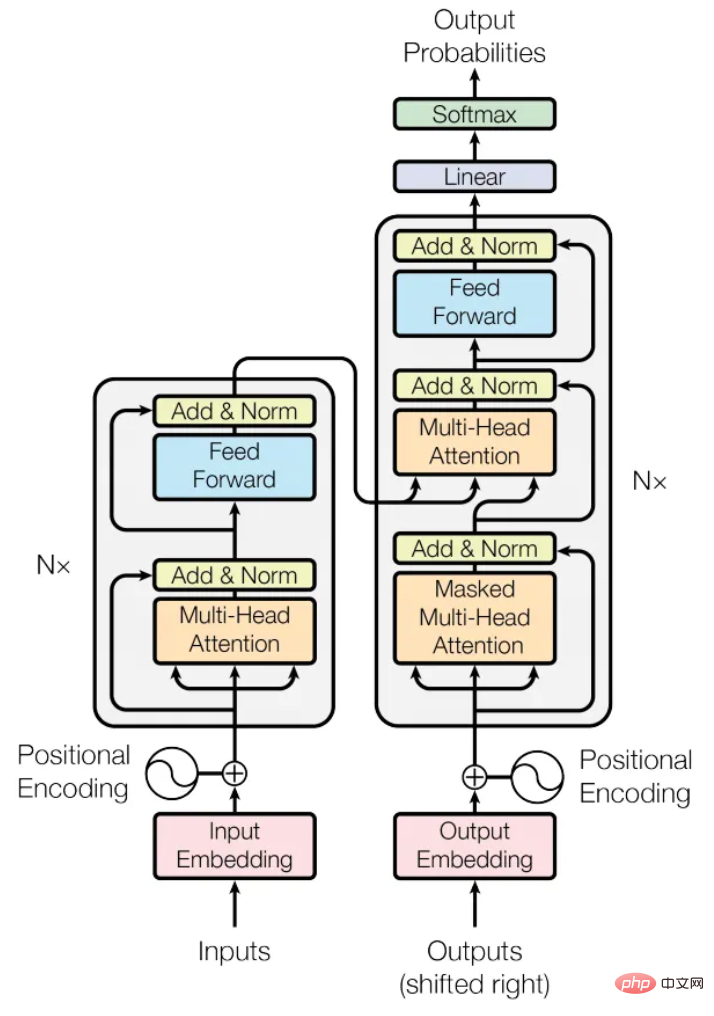

Transformer

In 2017, Google Brain proposed the Transformer model in the paper "Attention Is All You Need". It is a deep learning model based on the self-attention mechanism, designed mainly to address RNN's problems, especially the serial processing of text sequences. The Transformer can process all words in a text sequence at the same time, and the distance between any two words in the sequence is 1, which avoids the long-distance dependency problem an RNN faces when a sequence is too long. The introduction of the Transformer marks a leap forward in NLP, because the well-known BERT and GPT models that followed both evolved from it. The figure below shows the Transformer model structure.

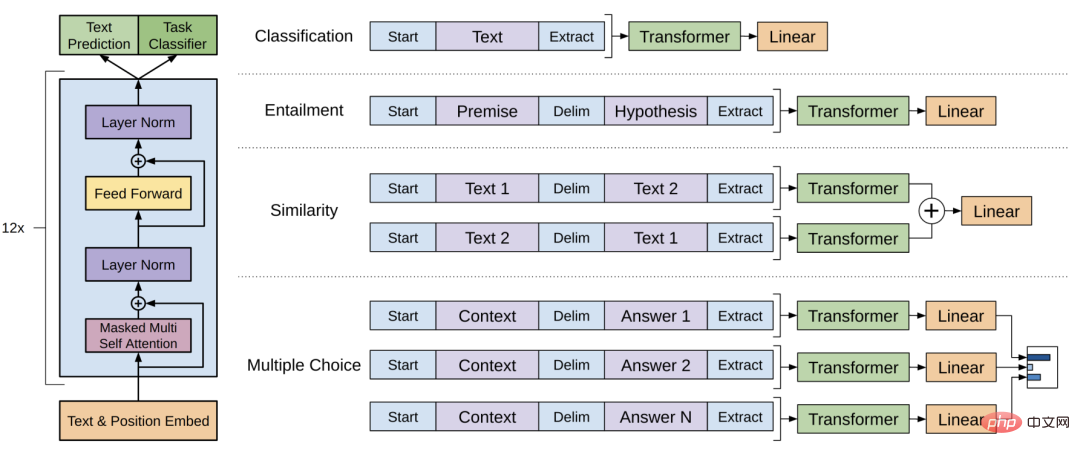
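The core of the Transformer is scaled dot-product attention, softmax(Q K^T / sqrt(d)) V. The sketch below is a simplified, single-head version in plain Python: to keep it short it uses the input vectors directly as queries, keys and values, whereas a real Transformer first applies learned projection matrices. Notice that the loop over positions has no dependency between iterations, so unlike an RNN every position can be computed in parallel, and each position attends to every other position in a single step.

```python
import math


def softmax(xs):
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x):
    """Single-head scaled dot-product self-attention with Q = K = V = x.

    Simplification: real Transformers compute Q, K, V with learned projections.
    Returns (outputs, attention_weights).
    """
    d = len(x[0])
    outputs, weights = [], []
    for q in x:  # each query is independent of the others, so this is parallelizable
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in x]
        w = softmax(scores)
        weights.append(w)
        # Weighted sum of value vectors: every position can look at every other.
        outputs.append([sum(wj * v[c] for wj, v in zip(w, x)) for c in range(d)])
    return outputs, weights


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, attn = self_attention(tokens)
for row in attn:
    print([round(p, 3) for p in row])
```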

GPT, GPT-2

Both the original GPT model and the latest ChatGPT model are language models with the Transformer as their core structure. GPT uses the Decoder component of the Transformer, which is better suited to generating the following text from the preceding context.
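Using only the Decoder means using masked (causal) self-attention: when producing position i, the model may look only at positions 0..i, never at the words it is supposed to predict. Here is a minimal sketch of those masked attention weights, again assuming Q = K = the input vectors as a simplification:

```python
import math


def causal_attention_weights(x):
    """Attention weights with a causal mask, as in a Transformer decoder.

    Position i may attend only to positions 0..i; future positions get -inf
    before the softmax, so their weight becomes exactly 0.
    Simplification: Q = K = x (no learned projections).
    """
    d = len(x[0])
    weights = []
    for i, q in enumerate(x):
        scores = [
            sum(a * b for a, b in zip(q, k)) / math.sqrt(d) if j <= i else float("-inf")
            for j, k in enumerate(x)
        ]
        m = max(scores)  # finite, because position 0 is never masked
        exps = [math.exp(s - m) for s in scores]  # math.exp(-inf) == 0.0
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights


for row in causal_attention_weights([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]):
    print([round(p, 3) for p in row])  # the upper triangle is all zeros
```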

To improve training accuracy, many machine learning tasks are trained on labeled datasets, but labeling data is a heavy job that takes a great deal of manpower and time. As computing power keeps growing, we want to train on far more data that has not been labeled by hand. GPT therefore proposed a new training paradigm for natural language: unsupervised learning on massive amounts of text to train the model. This is why GPT adopts the pre-training + fine-tuning training mode. The model structure of GPT is as follows; its training objective is to predict the following text from the preceding text.
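Why no manual labels are needed: in next-token prediction, the "answer" for each position is simply the next word of the raw text. The sketch below (hypothetical helper names, word-level tokens for readability; real GPT models use subword tokens) turns unlabeled text into (context, target) pairs and scores a prediction with the usual cross-entropy loss, -log P(target | context).

```python
import math


def next_token_pairs(tokens, context_size):
    """Turn raw, unlabeled text into (context, target) training pairs.

    The target is just the next token, so the text labels itself.
    """
    pairs = []
    for i in range(1, len(tokens)):
        context = tokens[max(0, i - context_size):i]
        pairs.append((context, tokens[i]))
    return pairs


def cross_entropy(predicted_probs, target, eps=1e-12):
    """Loss for one pair: -log P(target | context). eps avoids log(0)."""
    return -math.log(max(predicted_probs.get(target, 0.0), eps))


text = "language models predict the next word".split()
for context, target in next_token_pairs(text, context_size=3):
    print(context, "->", target)
```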

GPT-3

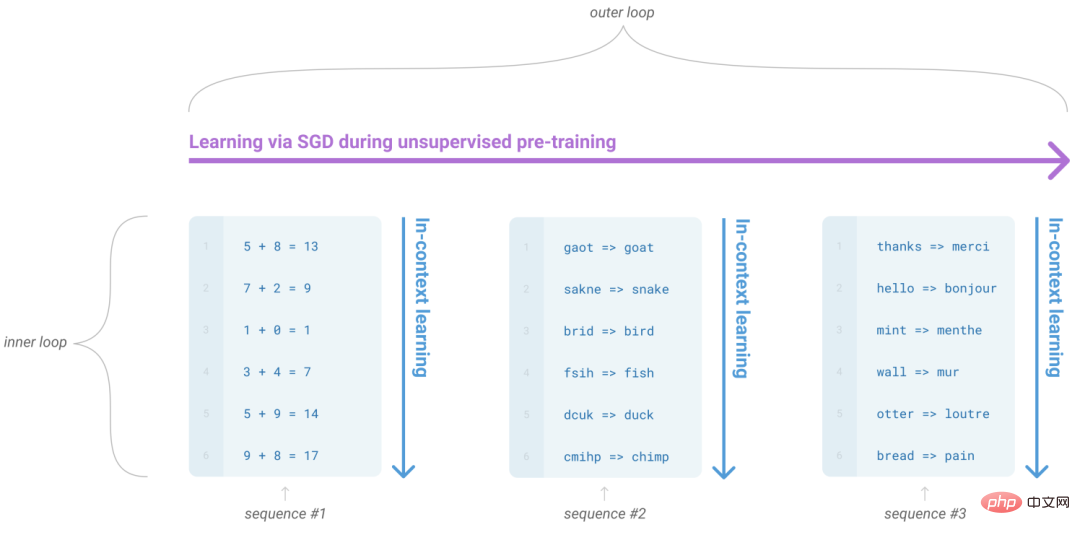

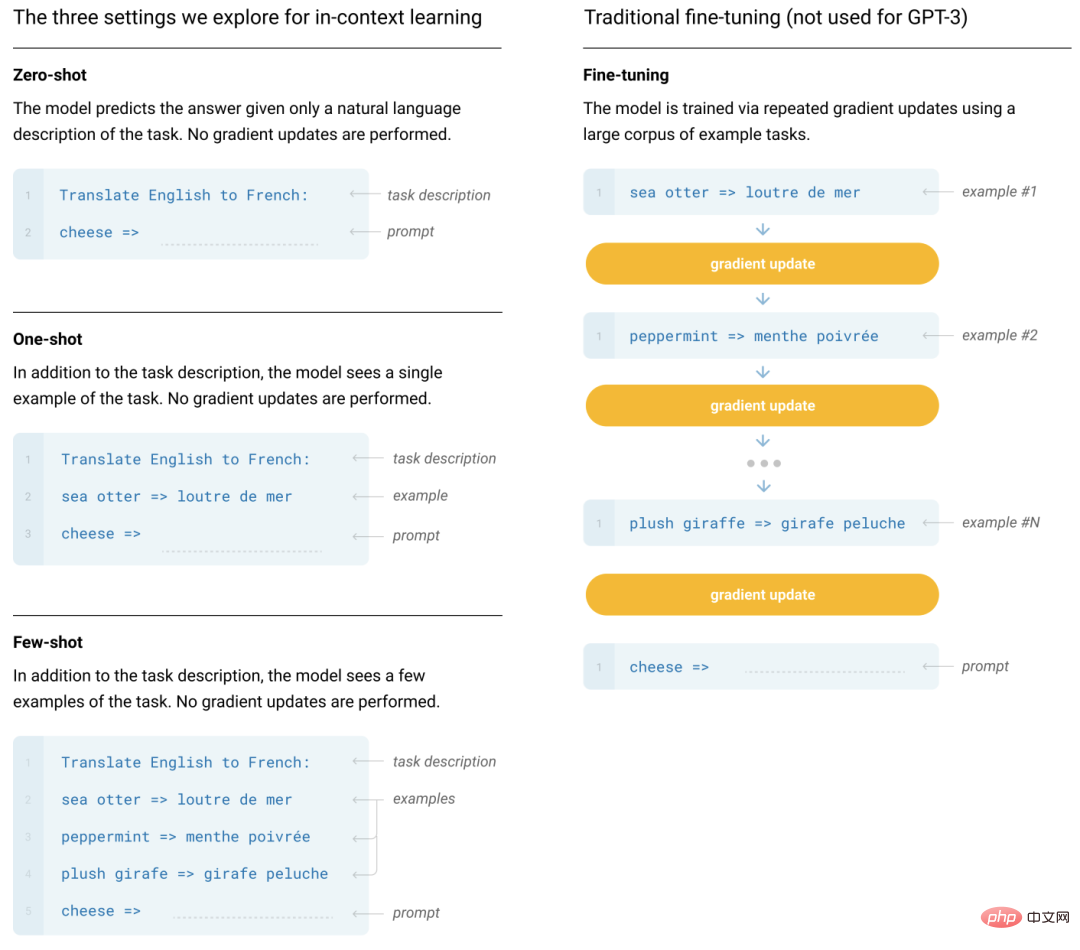

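In 2020, OpenAI proposed the GPT-3 model in the paper "Language Models are Few-Shot Learners". GPT-3 uses a very large number of model parameters (175 billion) and a very large amount of training data. The paper's main contribution is demonstrating the in-context learning ability of large language models (LLMs): given a task description and a few examples in the prompt, the model can perform the task without any update to its parameters.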
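In-context learning means the task is "taught" entirely inside the prompt; the model's weights do not change. Here is a minimal sketch of how a few-shot prompt is assembled, in the English-to-French format the GPT-3 paper uses as an illustration. The helper name is hypothetical, and sending the prompt to a model is deliberately left out.

```python
def few_shot_prompt(task_description, examples, query):
    """Build a few-shot prompt: task description, K solved examples, then the query.

    The model is expected to continue the pattern after the final '=>'.
    """
    lines = [task_description]
    for source, target in examples:
        lines.append(f"{source} => {target}")
    lines.append(f"{query} =>")
    return "\n".join(lines)


prompt = few_shot_prompt(
    "Translate English to French:",
    [("sea otter", "loutre de mer"), ("peppermint", "menthe poivrée")],
    "cheese",
)
print(prompt)
```

With zero examples the same function produces a zero-shot prompt, and with one example a one-shot prompt.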

Key capabilities of ChatGPT

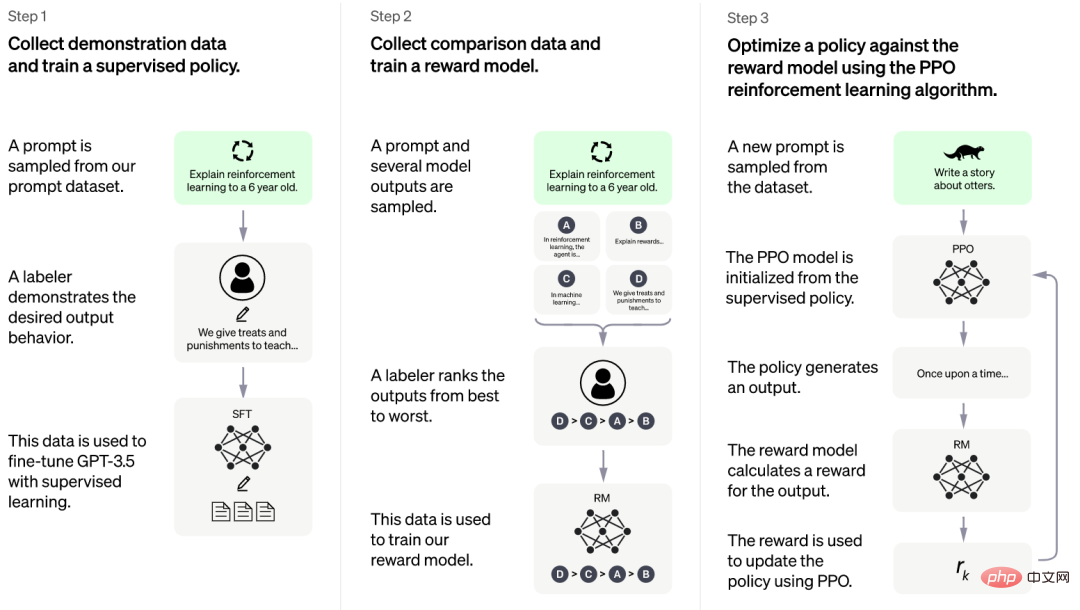

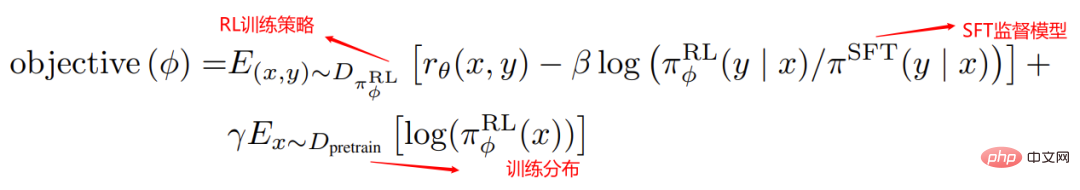

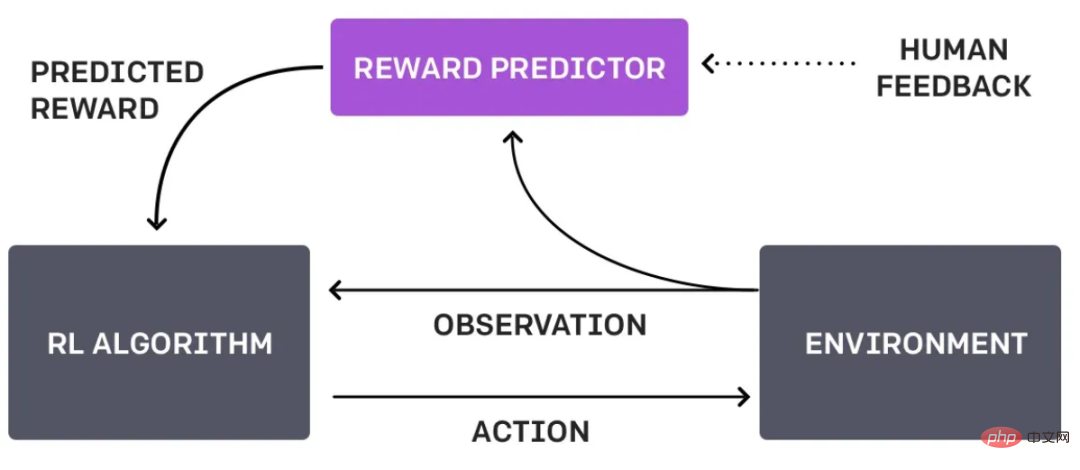

OpenAI has not yet published a paper on ChatGPT, but its core ideas are essentially the same as those of "Training language models to follow instructions with human feedback", the paper OpenAI published in 2022 that introduced InstructGPT. The most important improvement in InstructGPT is the introduction of RLHF (Reinforcement Learning from Human Feedback). The original model is fine-tuned by having it learn from human-written dialogues and by having humans annotate, evaluate and rank the model's answers, so that the converged model answers questions in a way that better matches human intentions.

The InstructGPT training method proposed in this paper is basically the same as ChatGPT's, differing only slightly in how the data is collected, so InstructGPT and ChatGPT can be regarded as sibling models. Let's take a closer look at how ChatGPT is trained and how it makes the model's answers more consistent with human intentions or preferences.

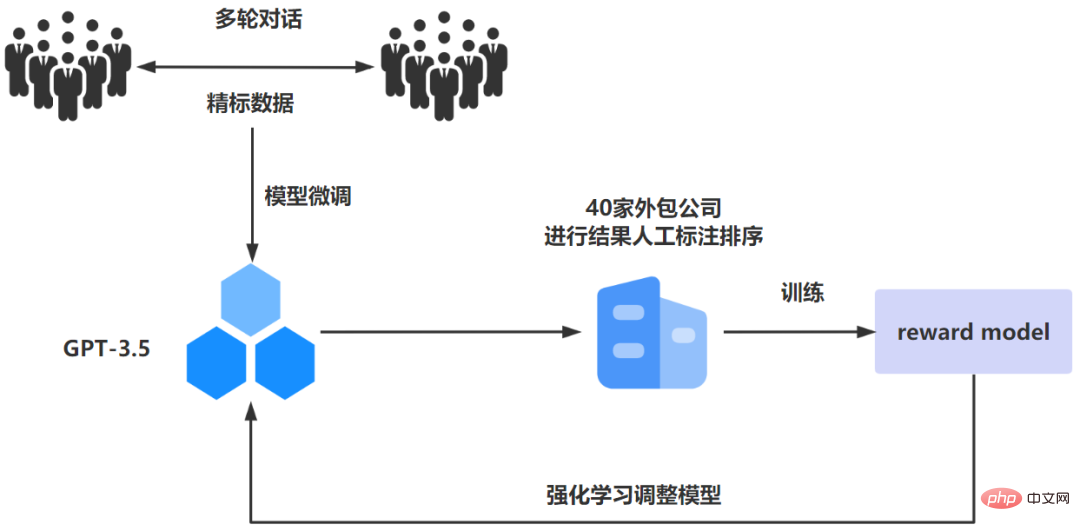

The training process above may look a bit complicated. The simplified version in the figure below should make it easier for readers to understand how the ChatGPT model is trained. According to the steps given on the official website, the core training idea is: collect feedback data -> train a reward model -> fine-tune with PPO reinforcement learning.

ChatGPT's training process is divided into three main stages:

Phase 1: Fine-tuning the GPT-3.5 initial model through supervised learning

For an LLM (Large Language Model), more training data is not automatically better. Why? Large pre-trained language models like ChatGPT are trained with an enormous number of parameters on massive amounts of data, and that data is effectively opaque and uncontrollable even for AI experts. If it contains harmful content such as racial discrimination or violence, the pre-trained model may pick up those traits. AI experts, however, need to ensure that AI is objective and fair, without bias, and this is exactly what ChatGPT's training addresses.

ChatGPT is therefore first trained with supervised learning, meaning learning on a dataset that "has answers". To this end, OpenAI hired about 40 contractors for data labeling. The labelers first simulated human-computer interaction over multiple rounds of dialogue, producing precise, manually labeled data in the process. This labeled data was then used to fine-tune the GPT-3.5 model, yielding the SFT (Supervised Fine-Tuning) model.
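In the SFT stage, the labeler-written answer serves as the training target. One common way to set this up, shown here as an assumption rather than OpenAI's documented recipe, is to concatenate prompt and answer and compute the loss only on the answer tokens. The helper names and numbers below are hypothetical.

```python
def build_sft_example(prompt_tokens, response_tokens):
    """Concatenate the prompt and the labeler-written response.

    mask[i] == 1 marks positions whose loss counts (the response);
    prompt positions are masked out with 0.
    """
    tokens = prompt_tokens + response_tokens
    mask = [0] * len(prompt_tokens) + [1] * len(response_tokens)
    return tokens, mask


def masked_nll(token_logprobs, mask):
    """Mean negative log-likelihood over response positions only.

    token_logprobs[i] is the log-probability the model assigned to tokens[i].
    """
    losses = [-lp for lp, m in zip(token_logprobs, mask) if m]
    return sum(losses) / len(losses)


tokens, mask = build_sft_example(["What", "is", "AI", "?"], ["Artificial", "intelligence", "."])
print(mask)  # [0, 0, 0, 0, 1, 1, 1]
```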

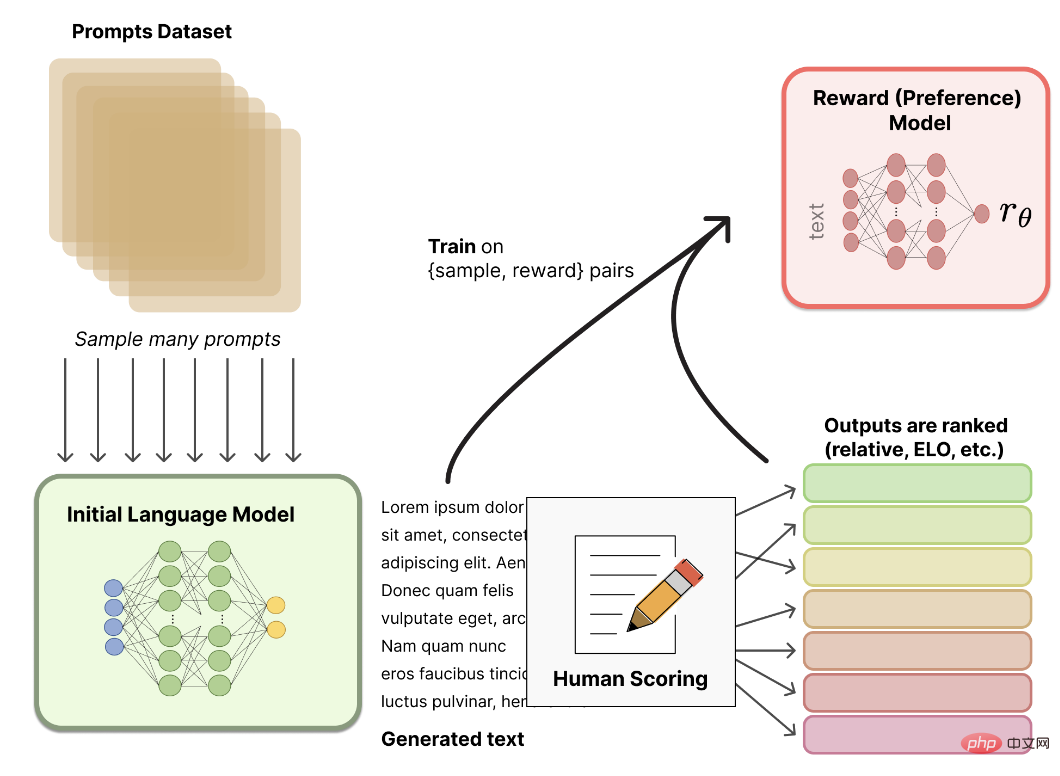

Phase 2: Build Reward Model

A batch of prompts is sampled at random, the fine-tuned model from stage 1 generates several answers to each, and the labelers rank the answers from best to worst. The ranked results are used to train the Reward Model: the rankings are combined pairwise into ordered training pairs, and the Reward Model learns from these pairs to output a score for answer quality. The Reward Model is essentially an abstraction of real human intentions. Thanks to this critical step, it can keep steering the model toward answers that match human intentions.
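The pairwise training described above corresponds to the ranking loss in the InstructGPT paper: for each pair, loss = -log(sigmoid(r(x, y_better) - r(x, y_worse))), where r is the Reward Model's scalar score. Below is a minimal sketch with plain numbers standing in for the Reward Model's outputs (a real Reward Model is a neural network; the function names are hypothetical).

```python
import math
from itertools import combinations


def ranking_to_pairs(ranked_answers):
    """Labelers rank K answers best-to-worst; each of the K*(K-1)/2 pairs
    becomes one (better, worse) training example."""
    return list(combinations(ranked_answers, 2))


def pairwise_loss(score_better, score_worse):
    """-log(sigmoid(score_better - score_worse)), computed stably.

    Small when the better answer already scores higher, large otherwise.
    """
    diff = score_better - score_worse
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    return -diff + math.log1p(math.exp(diff))


pairs = ranking_to_pairs(["answer A", "answer B", "answer C", "answer D"])
print(len(pairs))  # 6
print(pairwise_loss(2.0, -1.0), pairwise_loss(-1.0, 2.0))
```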

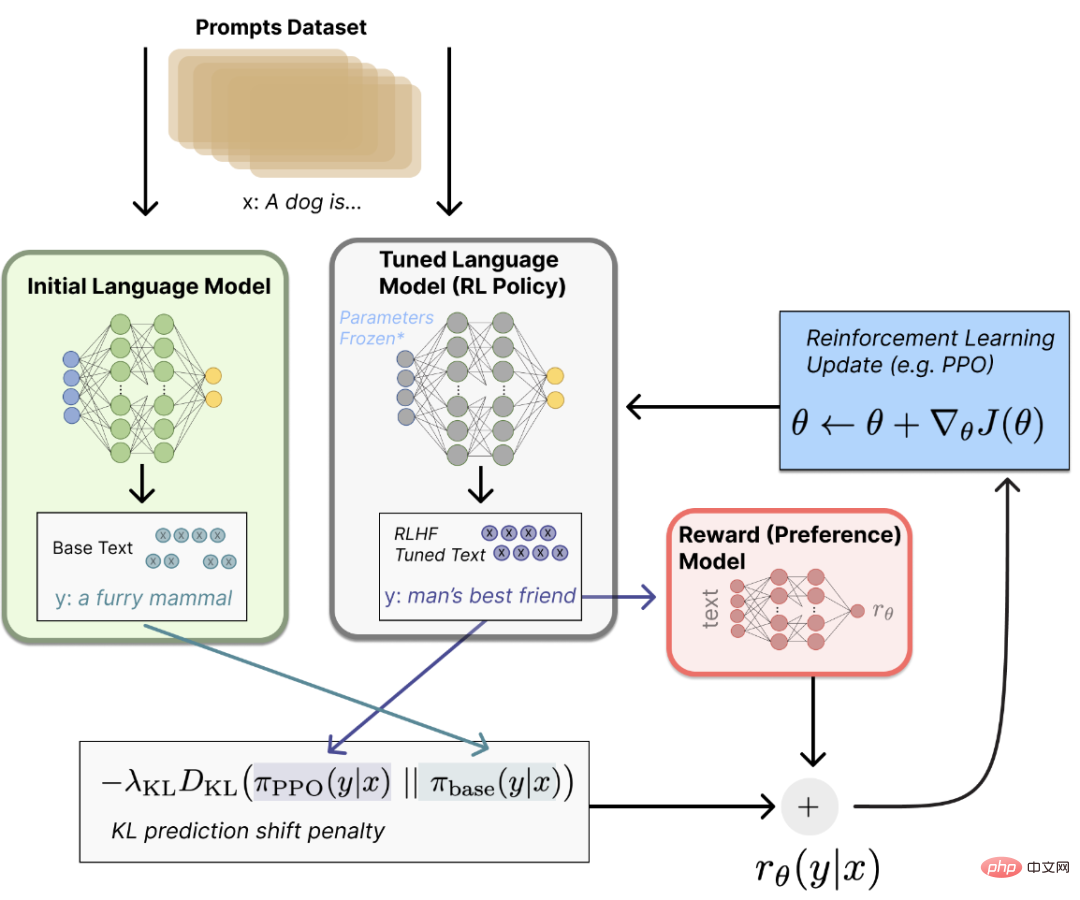

Phase 3: Fine-tuning the model with PPO (Proximal Policy Optimization) reinforcement learning

PPO is a policy-gradient reinforcement learning algorithm in the spirit of trust-region methods: it clips how far each update can move the policy, so that no single update step destabilizes the learning process. In this stage, another batch of prompts is sampled, and the Reward Model built in stage 2 scores the responses of the fine-tuned model; these scores are used to update the pre-trained parameters. High-scoring answers receive higher rewards from the Reward Model, and the resulting policy gradient updates the PPO model parameters. This repeats until the model converges.
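The two ingredients of this stage can be written down compactly. Below is a minimal per-sample sketch (hypothetical function names, scalar inputs) of PPO's clipped surrogate objective, min(ratio * A, clip(ratio, 1 - eps, 1 + eps) * A) with ratio = pi_new / pi_old, and of the reward used in the InstructGPT paper, which subtracts a KL penalty, beta * log(pi_RL / pi_SFT), from the Reward Model score so the policy does not drift too far from the SFT model. The values of epsilon and beta here are illustrative, not the ones OpenAI used.

```python
import math


def ppo_clipped_objective(logp_new, logp_old, advantage, epsilon=0.2):
    """PPO clipped surrogate objective for one sample (to be maximized).

    ratio = pi_new(a|s) / pi_old(a|s). Clipping the ratio to
    [1 - epsilon, 1 + epsilon] removes the incentive to move the policy
    too far in a single update.
    """
    ratio = math.exp(logp_new - logp_old)
    clipped_ratio = min(max(ratio, 1.0 - epsilon), 1.0 + epsilon)
    return min(ratio * advantage, clipped_ratio * advantage)


def rlhf_reward(rm_score, logp_policy, logp_sft, beta=0.02):
    """Reward Model score minus a KL-style penalty for drifting from the SFT model.

    logp_* are the log-probabilities of the whole response under each model.
    """
    return rm_score - beta * (logp_policy - logp_sft)


# The new policy doubled this answer's probability and the advantage is positive:
# the objective is capped at (1 + epsilon) * advantage instead of 2 * advantage.
print(ppo_clipped_objective(math.log(2.0), 0.0, advantage=1.0))
```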

As we can see, ChatGPT's training process combines supervised learning with RLHF. It is RLHF that enables ChatGPT to generate answers that better match human expectations.

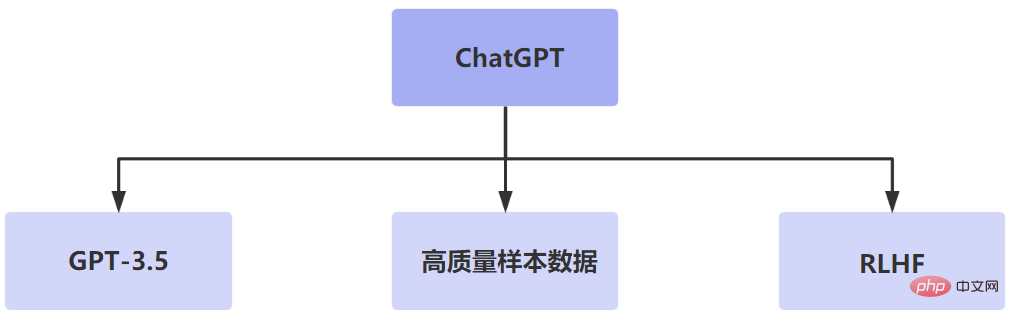

From the training process above, we can conclude that ChatGPT's powerful contextual understanding rests on three key capabilities: a powerful base model, high-quality sample data, and reinforcement learning from human feedback.

The core is RLHF: training finds the reward function that best explains human judgments, and reinforcement learning against that reward then keeps improving the model.

Replace search engine

Today's search engines can only match the keywords we search for against the web pages indexed in their databases and return those pages as results. Search engines like Baidu will always show you some ads as well. Users still have to find what they actually want among the returned results. ChatGPT is different: it answers the question directly, saving users much of the time and energy spent filtering out useless search results. Traditional search engines still rely on keyword matching and do not truly understand the meaning of what the user types, whereas ChatGPT can understand the real intention behind the user's input. In addition, it can give creative answers that free users from tedious work.

PS: Microsoft's Bing search engine has begun integrating ChatGPT.
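The limitation of pure keyword matching is easy to demonstrate. Below is a minimal sketch of a boolean keyword search over an inverted index (a simplification: real search engines add ranking, stemming, synonym handling and much more). A query that uses a synonym finds nothing, because the index only knows exact words, not meaning.

```python
from collections import defaultdict


def build_index(docs):
    """Inverted index: word -> set of ids of documents containing it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for word in text.lower().split():
            index[word].add(doc_id)
    return index


def keyword_search(index, query):
    """Return ids of documents that contain every word of the query."""
    hits = [index.get(word, set()) for word in query.lower().split()]
    return set.intersection(*hits) if hits else set()


docs = {1: "Car repair guide", 2: "Cheap flights to Paris"}
index = build_index(docs)
print(keyword_search(index, "car repair"))         # {1}
print(keyword_search(index, "automobile repair"))  # set(): no exact keyword match
```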

Replace manual customer service

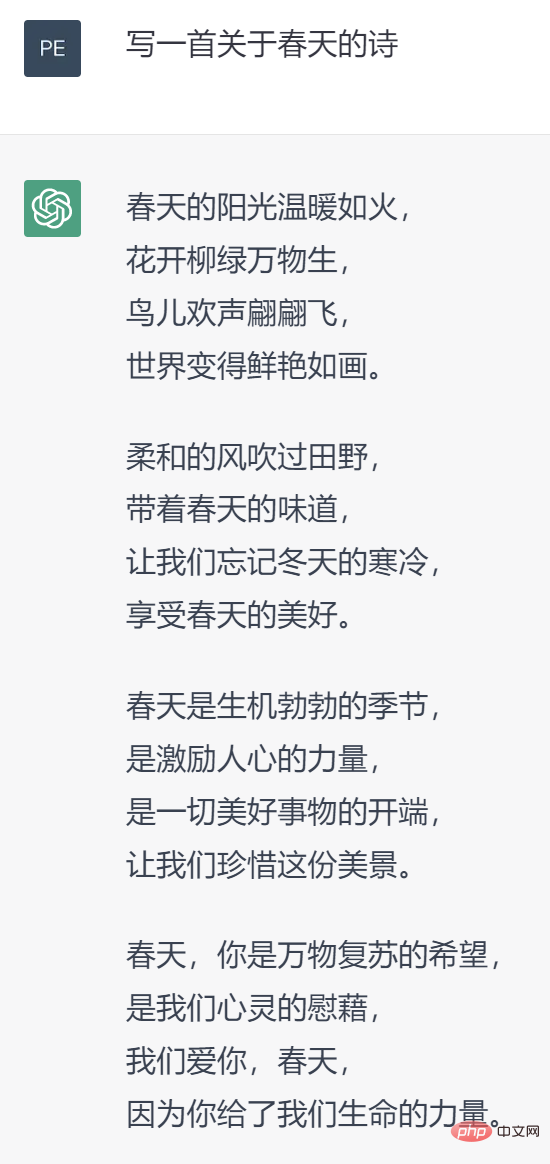

Today's so-called intelligent customer service only answers a preset list of common questions automatically. It is far from intelligent, although it does reduce a company's spending on customer service staff to some extent. ChatGPT, by contrast, can understand the user's real intention instead of mechanically answering preset questions, so it can help users solve actual customer service problems and cut customer service labor costs as far as possible.

Replace content creation

ChatGPT doesn't just answer questions; it can also create content, such as writing a song, a poem or an event plan. Many people who create text content for a living have therefore felt a deep sense of crisis. They used to think robots would replace manual laborers first, but who would have thought that ChatGPT would directly eliminate the jobs of many knowledge workers?

ChatGPT limitations

Training data bias

ChatGPT's training data is massive amounts of text from the Internet. If that text is inaccurate or contains some kind of bias, the current ChatGPT cannot tell, so it will inevitably pass on these inaccuracies and biases when answering questions.

Limited applicable scenarios

Currently, ChatGPT mainly handles natural language question answering and tasks. It does not necessarily have corresponding capabilities in other fields such as image recognition and speech recognition, but I believe there may be a VoiceGPT and a ViewGPT in the near future. Let's wait and see.

High training cost

ChatGPT is a very large deep learning model in NLP. Its parameters and training data are enormous, so training ChatGPT requires large data centers and cloud computing resources, along with a great deal of computing power and storage to process the massive training data. Simply put, training and running ChatGPT is still very expensive.

Summary

Artificial intelligence has been talked about for many years and is still developing, with results achieved in some specific fields. For consumers, however, there were basically no real AI products they could use. The release of ChatGPT is a milestone, because for ordinary people AI is no longer a distant technical term but a real, intelligent tool within reach that lets them truly feel the power of AI. Finally, I want to say that ChatGPT may be just the beginning. At present it only completes tasks according to human instructions, but in the future, as AI keeps iterating through self-learning, it may become conscious and act autonomously. When that day comes, it will be unclear whether humanity is facing an omnipotent helper or an uncontrollable evil dragon.

The above is the detailed content of "How awesome is ChatGPT, which can replace 90% of people's jobs?". For more information, please follow other related articles on the PHP Chinese website!
