A training method for a lightweight ChatGPT is open source! Built around LLaMA in just 3 days, it claims to train 15 times faster than ChatGPT

Is a lightweight version of ChatGPT based on Meta's model here?

Just three days after Meta announced LLaMA, an open source training method for turning it into a ChatGPT-style model appeared, claiming training up to 15 times faster than ChatGPT.

LLaMA is a fast, compact GPT-3-style language model launched by Meta. It has only about 10% as many parameters as GPT-3 and needs just a single GPU to run.

The method for turning it into ChatGPT is called ChatLLaMA. It trains the model with RLHF (reinforcement learning from human feedback) and quickly became popular online.

So is Meta's open source version of ChatGPT really coming?

Wait a minute, things are not that simple.

The "open source method" for training LLaMA into ChatGPT

Open the ChatLLaMA project homepage and you will find that it actually integrates four parts: DeepSpeed, the RLHF method, LLaMA, and datasets generated with LangChain agents.

Among them, DeepSpeed is an open source deep learning training optimization library. It includes an existing optimization technique called ZeRO, which makes it practical to train larger models by raising training speed, lowering cost, and making the models easier to use.
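As a rough illustration of how ZeRO is usually switched on, the sketch below builds a minimal DeepSpeed configuration and prints it as JSON. The keys are standard DeepSpeed configuration options, but the values are illustrative assumptions and are not taken from the ChatLLaMA project.

```python
import json

# Minimal DeepSpeed-style configuration (values are illustrative assumptions).
ds_config = {
    # Samples processed per GPU in each forward/backward pass.
    "train_micro_batch_size_per_gpu": 1,
    # Accumulate gradients over several passes before each optimizer step.
    "gradient_accumulation_steps": 8,
    # Mixed-precision training to reduce memory use.
    "fp16": {"enabled": True},
    # ZeRO stage 2 partitions optimizer states and gradients across GPUs.
    "zero_optimization": {"stage": 2},
}

# DeepSpeed typically reads this from a JSON file passed to the training script.
config_text = json.dumps(ds_config, indent=2)
print(config_text)
```

A higher ZeRO stage partitions more of the training state across GPUs, which saves memory at the cost of extra communication.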

RLHF fine-tunes the pre-trained model with the help of a reward model. First, several models generate answers to a set of questions, and human annotators rank those answers; the reward model learns to score answers from these rankings. The trained reward model then scores the answers the language model generates, and reinforcement learning uses those scores to improve the model.
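The step where the reward model learns from human rankings can be sketched as follows. This is a minimal illustration, assuming the commonly used pairwise ranking loss, -log(sigmoid(preferred - rejected)); the article does not say which loss ChatLLaMA uses, and the answer names and scores are hypothetical.

```python
import math


def pairwise_ranking_loss(score_preferred: float, score_rejected: float) -> float:
    """Loss is small when the preferred answer scores higher than the rejected one."""
    margin = score_preferred - score_rejected
    # Numerically stable form of -log(sigmoid(margin)).
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


def ranking_to_pairs(ranked_answers):
    """Turn a human ranking (best first) into (preferred, rejected) pairs."""
    return [
        (ranked_answers[i], ranked_answers[j])
        for i in range(len(ranked_answers))
        for j in range(i + 1, len(ranked_answers))
    ]


# Hypothetical reward-model scores for three answers to one question.
scores = {"answer_a": 2.0, "answer_b": 0.5, "answer_c": -1.0}
# Hypothetical human ranking, best answer first.
human_ranking = ["answer_a", "answer_b", "answer_c"]

pairs = ranking_to_pairs(human_ranking)
mean_loss = sum(pairwise_ranking_loss(scores[p], scores[r]) for p, r in pairs) / len(pairs)
print(f"{len(pairs)} pairs, mean loss {mean_loss:.4f}")
```

Training the reward model means adjusting it so that this loss falls, that is, so its scores agree with the human rankings. The reinforcement learning stage then uses those scores as the reward signal.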

LangChain is a library for developing large language model applications. It aims to integrate various large language models and combine them with other knowledge sources or computing capabilities to build practical applications. A LangChain agent exposes GPT-3's entire reasoning process as a chain of thought and records the actions it takes.

At this point you will notice that the most critical piece is still the LLaMA model weights. Where do they come from?

You have to apply to Meta yourself; ChatLLaMA does not provide them. (Although Meta describes LLaMA as open source, you still need to apply for access.)

So essentially, ChatLLaMA is not an open source ChatGPT project but just a training method based on LLaMA. The projects integrated in its library were already open source.

In fact, ChatLLaMA was not built by Meta but by an AI start-up called Nebuly AI.

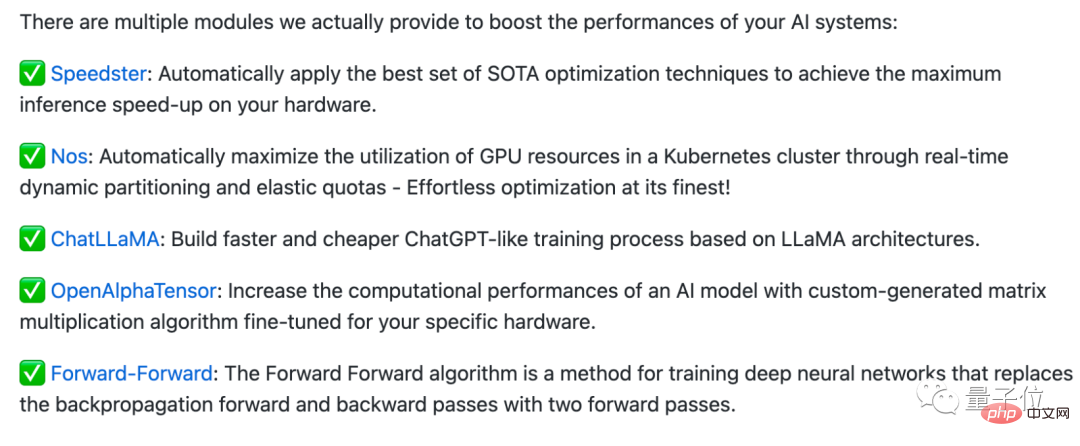

Nebuly AI maintains an open source library called Nebullvm, which integrates a series of plug-and-play optimization modules to improve AI system performance.

For example, the modules currently included in Nebullvm include OpenAlphaTensor, based on DeepMind's open source AlphaTensor algorithm, and optimization modules that automatically detect the hardware and accelerate models on it.

ChatLLaMA is also one of these modules, but note that its open source license does not permit commercial use.

So if you want to use the "domestic self-developed ChatGPT" directly, it may not be that simple (doge).

After reading this project, some netizens said that it would be great if someone really got the model weights (code) of LLaMA...

But some netizens also pointed out that the claim of being "15 times faster than the ChatGPT training method" is purely misleading:

The so-called 15x speedup is just because the LLaMA model itself is very small and can even run on a single GPU. It shouldn't be because of anything this project does, right?

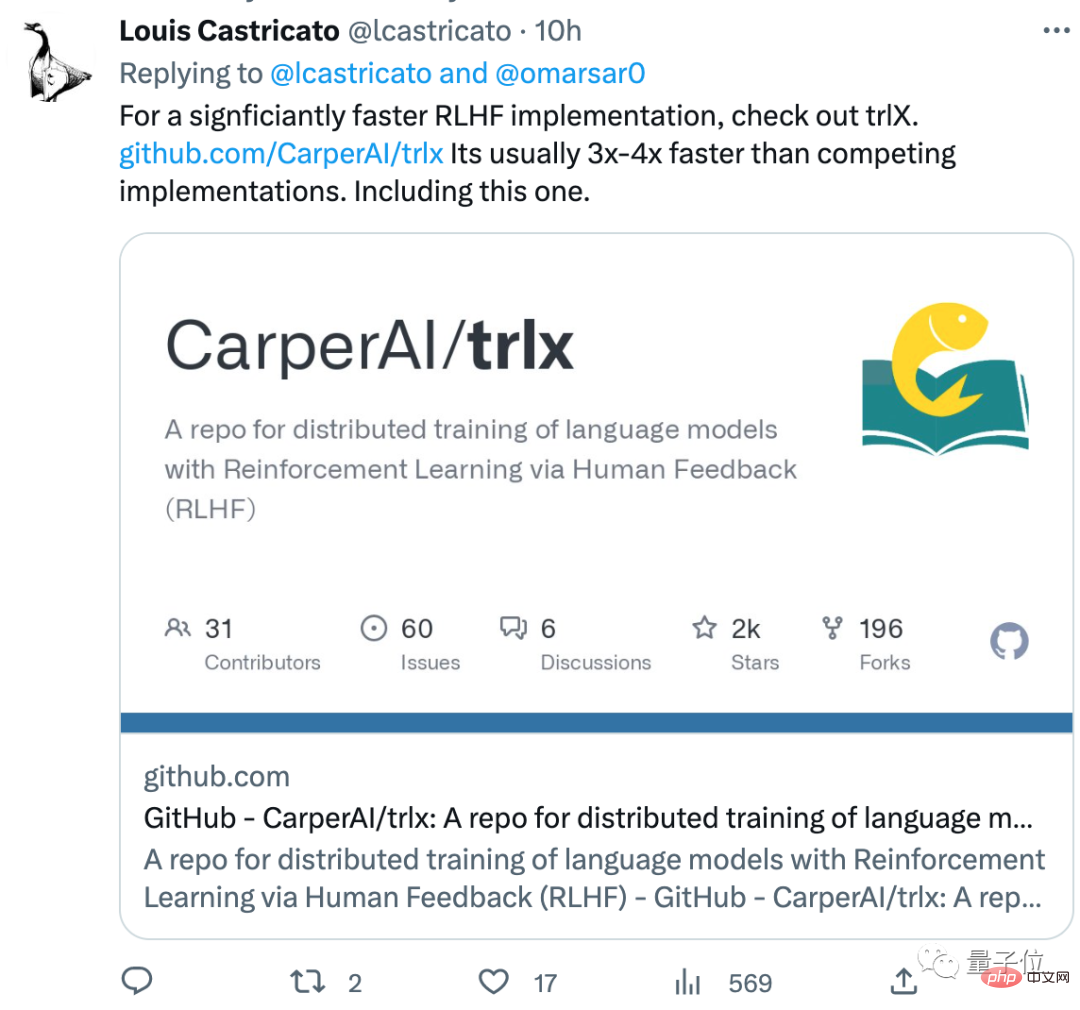

This netizen also recommended an RLHF training library that he considers better than the one in this repository. It is called trlx, and it reportedly trains 3 to 4 times faster than the usual RLHF method.

Have you got hold of the LLaMA code? What do you think of this training method?

ChatLLaMA address: https://www.php.cn/link/fed537780f3f29cc5d5f313bbda423c4

Reference link: https://www.php.cn/link/fe27f92b1e3f4997567807f38d567a35
