Natural language is integrated into NeRF: LERF, which produces 3D relevancy maps from just a few words, is here.

NeRF (Neural Radiance Fields) has quickly become one of the most popular research topics since it was proposed, and its results are striking. However, the direct output of a NeRF is only a colored density field, which carries no semantic information about the scene. This lack of context is a key problem, because it makes it difficult to build interfaces for interacting with 3D scenes.
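To make "colored density field" concrete: for any 3D point, a NeRF predicts a density and a color, and each pixel is formed by alpha-compositing those values along a camera ray. The sketch below shows the standard volume-rendering quadrature for a single ray in plain Python. The function name `composite_ray` and the toy inputs are illustrative assumptions, not code from LERF. Note that nothing in the result says *what* a sample is, which is the missing context described above.

```python
import math


def composite_ray(densities, colors, deltas):
    """Alpha-composite samples along one ray (standard NeRF quadrature).

    densities: per-sample volume density sigma_i (>= 0)
    colors:    per-sample RGB tuples c_i with components in [0, 1]
    deltas:    distance between consecutive samples delta_i
    Returns (rgb, weights), where weights[i] = T_i * alpha_i.
    """
    transmittance = 1.0  # T_i: fraction of light reaching sample i unoccluded
    weights = []
    rgb = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        w = transmittance * alpha
        weights.append(w)
        for k in range(3):
            rgb[k] += w * color[k]
        transmittance *= 1.0 - alpha  # light left after passing this segment
    return tuple(rgb), weights


# A ray that crosses empty space, then hits a dense red surface.
ray_rgb, ray_weights = composite_ray(
    densities=[0.0, 0.0, 50.0, 50.0],
    colors=[(0.0, 0.0, 1.0), (0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)],
    deltas=[0.1, 0.1, 0.1, 0.1],
)
print(ray_rgb, ray_weights)  # almost all weight lands on the first dense (red) sample
```

The weights computed here are the same quantities a language field can reuse, since any per-sample quantity can be rendered with them.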

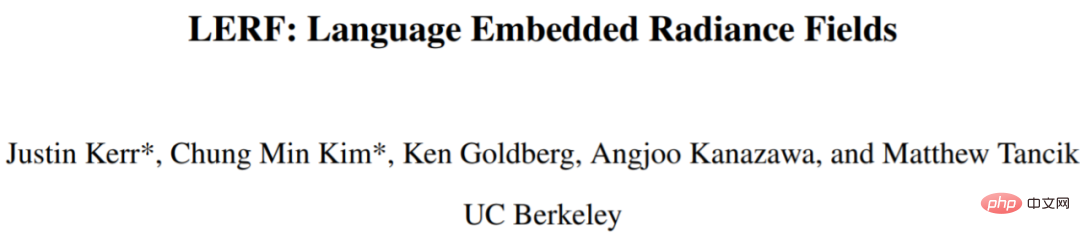

Natural language is different: it offers a very intuitive way to interact with a 3D scene. Take the kitchen scene in Figure 1: objects in it can be found by asking where the cutlery is, or where the utensils used for stirring are. Completing this task, however, requires not only the ability to query the model but also the ability to incorporate semantics at multiple scales.

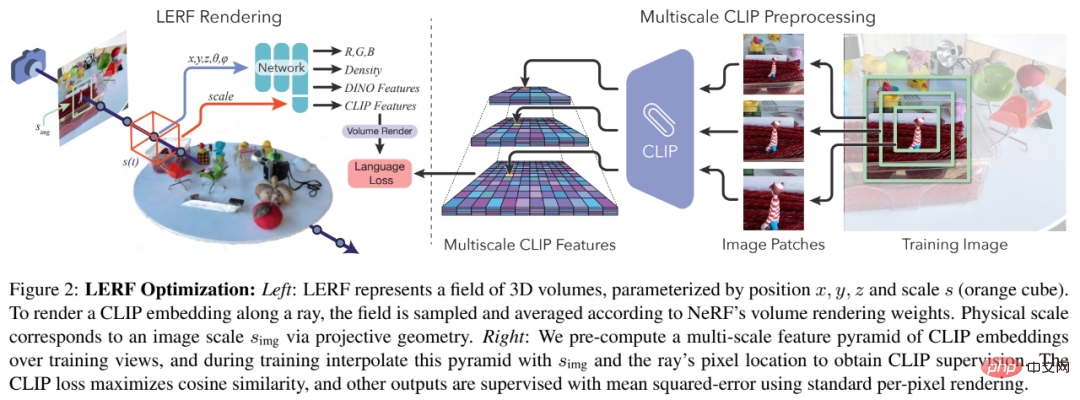

In this work, researchers from UC Berkeley propose a novel method called LERF (Language Embedded Radiance Fields), which embeds CLIP (Contrastive Language-Image Pre-training) embeddings into NeRF and makes these kinds of open-vocabulary 3D language queries possible. LERF uses CLIP directly, without fine-tuning on datasets such as COCO and without relying on mask region proposals. It preserves the integrity of CLIP embeddings at multiple scales and can handle a wide variety of language queries, including visual attributes (e.g., "yellow"), abstract concepts (e.g., "electricity") and text, as shown in Figure 1.
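How can CLIP embeddings live "inside" a NeRF? One plausible reading, following the LERF paper rather than this article, is that a separate language field predicts an embedding at each sample point along a ray; that embedding is volume-rendered with the same weights used for color and then rescaled to unit length, as CLIP embeddings are. The sketch below assumes the weights are already computed. `render_language_embedding` is a hypothetical name, and the 2-D vectors stand in for real CLIP features (which in LERF would also depend on a query scale).

```python
import math


def render_language_embedding(weights, sample_embs):
    """Volume-render per-sample language embeddings along one ray (sketch).

    weights:     NeRF rendering weights w_i for the ray's samples.
    sample_embs: embedding predicted by the language field at each sample
                 (toy vectors here; CLIP-dimensional in a real system).
    Returns the weighted sum rescaled to unit length.
    """
    dim = len(sample_embs[0])
    acc = [0.0] * dim
    for w, emb in zip(weights, sample_embs):
        for k in range(dim):
            acc[k] += w * emb[k]
    norm = math.sqrt(sum(x * x for x in acc))
    if norm == 0.0:
        return acc  # the ray hit nothing, so there is no meaningful direction
    return [x / norm for x in acc]


weights = [0.0, 0.9, 0.1]  # e.g. produced by NeRF volume rendering
embs = [[5.0, 5.0], [1.0, 0.0], [0.0, 1.0]]
print(render_language_embedding(weights, embs))  # unit vector dominated by the 2nd sample
```

Because density comes only from the NeRF branch, empty-space samples (weight 0) contribute nothing to the rendered embedding, however large their predicted features are.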

Paper address: https://arxiv.org/pdf/2303.09553v1.pdf

Project homepage: https://www.lerf.io/

LERF accepts language prompts interactively and extracts 3D relevancy maps in real time. For example, for a table holding a lamb and a water cup, entering the prompt "lamb" or "water cup" makes LERF return the corresponding 3D relevancy map.
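The article does not say how a prompt turns into a relevancy map. The sketch below assumes the scoring described in the LERF paper: a rendered embedding is compared with the query and with a few generic "canonical" phrases (assumed here to be "object", "things", "stuff" and "texture"), a pairwise softmax is taken against each phrase, and the worst case is kept. Picking the best of several scales is a simplification of the paper's multi-scale handling. All function names are hypothetical, and the 3-D vectors stand in for CLIP text and image embeddings.

```python
import math

# Canonical phrases assumed from the LERF paper; a real system would embed them
# with CLIP's text encoder. Here their embeddings are passed in directly.
CANONICAL_PHRASES = ("object", "things", "stuff", "texture")


def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def relevancy(lang_emb, query_emb, canonical_embs):
    """Minimum over canonical phrases of the pairwise softmax (query vs. phrase)."""
    q = math.exp(cosine(lang_emb, query_emb))
    return min(q / (q + math.exp(cosine(lang_emb, c))) for c in canonical_embs)


def best_scale_relevancy(embs_per_scale, query_emb, canonical_embs):
    """Score one point's embedding at every scale and keep the strongest response."""
    scores = [relevancy(e, query_emb, canonical_embs) for e in embs_per_scale]
    best = max(range(len(scores)), key=scores.__getitem__)
    return best, scores[best]


# Toy example: the query points along x, two generic phrases along y and z.
query = [1.0, 0.0, 0.0]
canon = [[0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
print(relevancy([1.0, 0.0, 0.0], query, canon))  # e / (e + 1), about 0.73
print(relevancy([0.0, 1.0, 0.0], query, canon))  # 1 / (1 + e), about 0.27
print(best_scale_relevancy([[0.0, 1.0, 0.0], [0.9, 0.1, 0.0]], query, canon))
```

Taking the minimum means a point scores high only if it matches the query better than it matches every generic phrase, which suppresses points that are merely "some object".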

Method

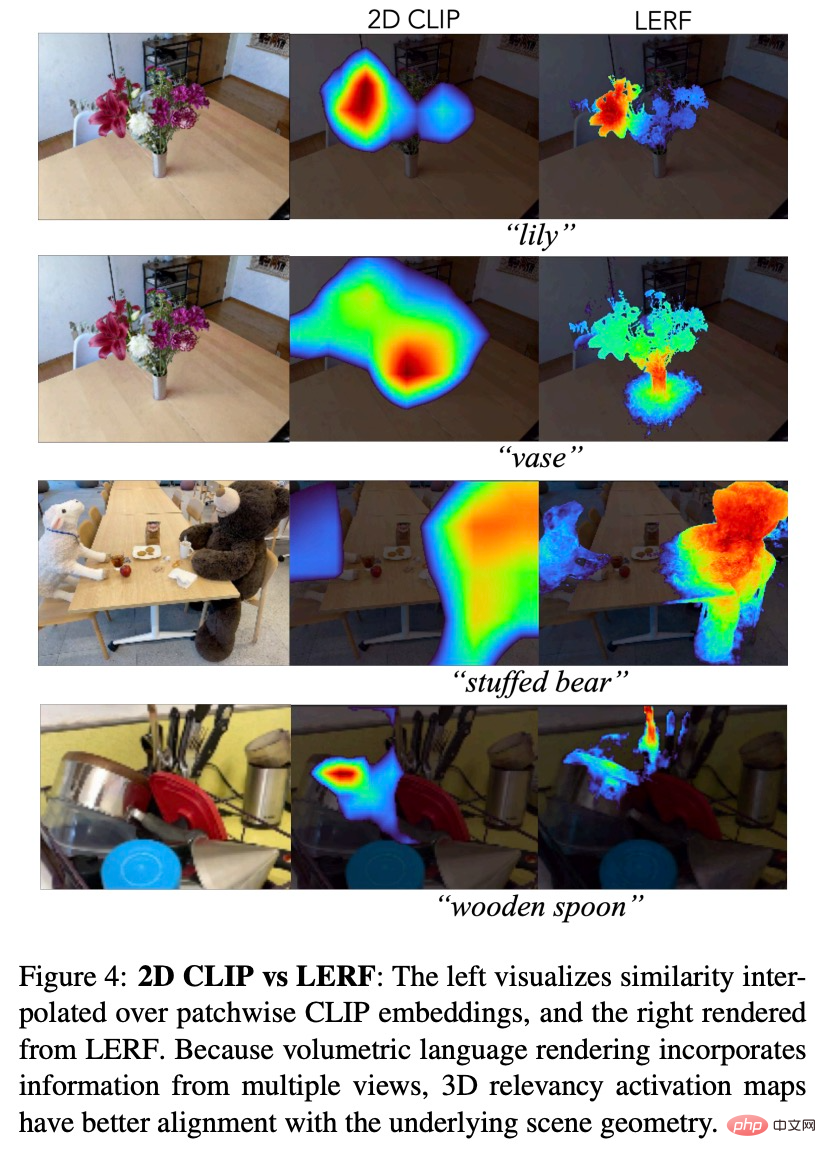

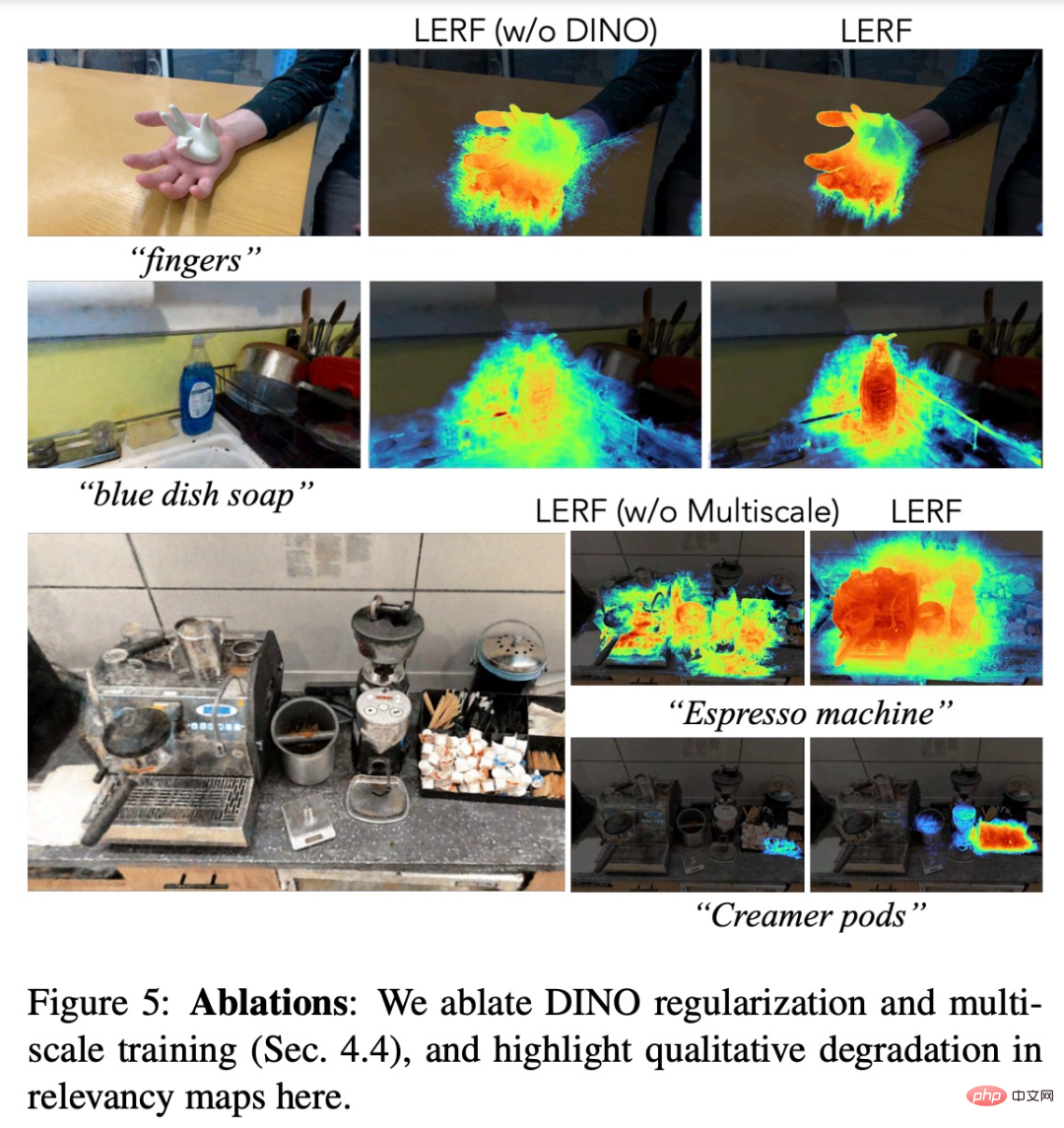

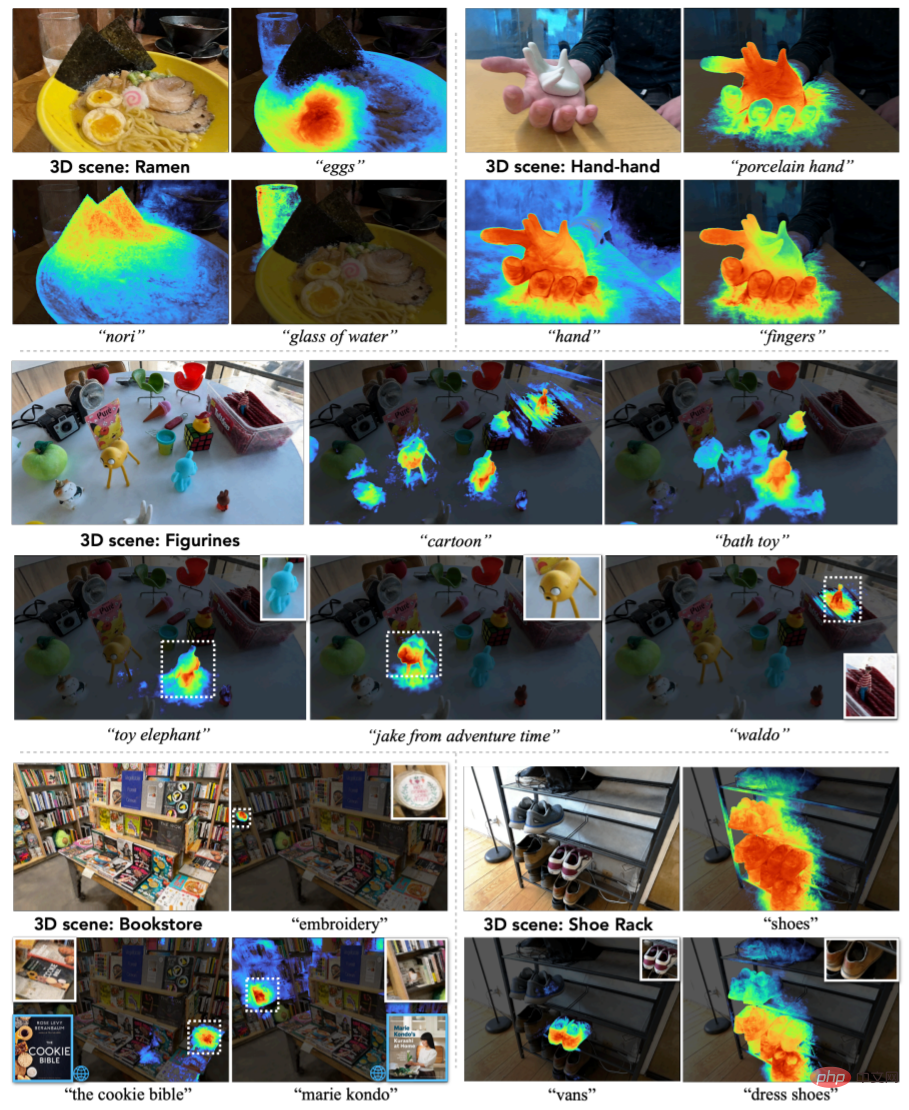

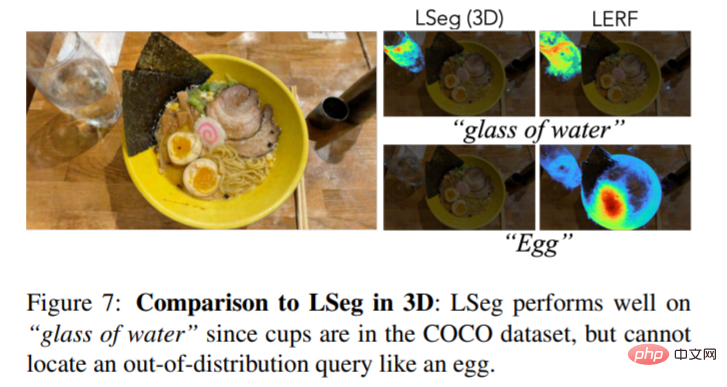

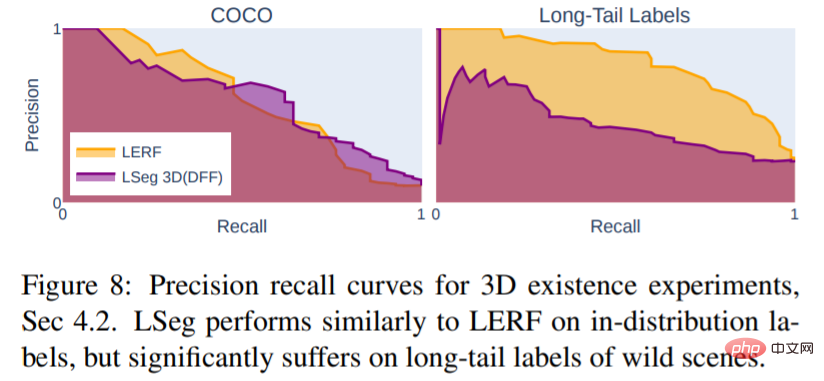

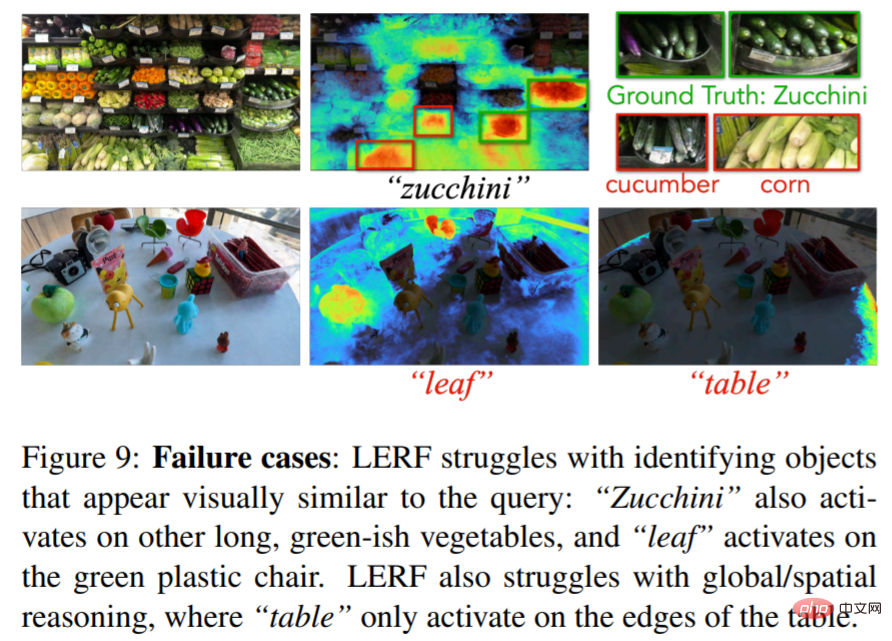

LERF on its own produces coherent results, but the resulting relevancy maps can sometimes be patchy and contain outliers, as shown in Figure 5. To regularize the optimized language field, the study incorporates self-supervised DINO features through a shared bottleneck.

Architecturally, optimizing language embeddings in 3D should not affect the density distribution of the underlying scene representation. The study therefore builds this inductive bias into LERF by training two independent networks: one for the feature vectors (DINO, CLIP) and one for the standard NeRF outputs (color, density).

Experiment

To demonstrate LERF's ability to handle real-world data, the study collected 13 scenes, including a grocery store, a kitchen, a bookstore and figurines. Figure 3 shows 5 representative scenes that demonstrate LERF's ability to process natural language.

Figure 3

Figure 7 is a 3D visual comparison of LERF and LSeg. When localizing the eggs in the bowl, LSeg performs worse than LERF. Figure 8 shows that LSeg, trained on a limited segmentation dataset, lacks the ability to represent natural language effectively; it performs well only on common objects within its training distribution, as shown in Figure 7.

LERF is not perfect yet, however. In one failure case, for example, querying for zucchini also highlights other vegetables.
