Is ChatGPT really a 'generalist'? Yang Di and others gave it a thorough test.

Paper link: https://arxiv.org/pdf/2302.06476.pdf

Large language models (LLMs) have been shown to solve a variety of natural language processing (NLP) tasks. For a given downstream task, they need no task-specific training data and can be adapted with the help of appropriate prompts alone. This ability to perform new tasks on command can be seen as an important step towards general artificial intelligence.

Although current LLMs achieve good performance in some cases, they are still prone to various errors in zero-shot learning. The format of the prompt can also have a substantial impact: for example, adding "Let's think step by step" to the prompt can significantly improve model performance. These limitations show that current LLMs are not yet truly general-purpose language systems.

Recently, ChatGPT, an LLM released by OpenAI, has attracted great attention in the NLP community. ChatGPT was created by training a GPT-3.5 series model with Reinforcement Learning from Human Feedback (RLHF). RLHF mainly consists of three steps: training a language model using supervised learning; collecting comparison data and training a reward model based on human preferences; and using reinforcement learning to optimize the language model against the reward model. With RLHF training, ChatGPT has been observed to have impressive capabilities in various respects, including generating high-quality responses to human input, rejecting inappropriate questions, and correcting its own earlier errors based on subsequent conversation.

Although ChatGPT shows strong conversational capabilities, it is still unclear to the NLP community whether ChatGPT achieves better zero-shot generalization than existing LLMs. To fill this research gap, the researchers systematically studied ChatGPT's zero-shot learning capabilities by evaluating it on a large number of NLP datasets covering 7 representative task categories: reasoning, natural language inference, question answering (reading comprehension), dialogue, summarization, named entity recognition, and sentiment analysis. Through extensive experiments, the researchers aimed to answer the following questions:

- Is ChatGPT a general-purpose solver for NLP tasks? What types of tasks does ChatGPT perform well on?

- If ChatGPT lags behind other models on some tasks, why?

To answer these questions, the authors compare the performance of ChatGPT and the state-of-the-art GPT-3.5 model (text-davinci-003) based on experimental results. They also report zero-shot, fine-tuned, or few-shot fine-tuned results from recent work such as FLAN, T0, and PaLM.

Main conclusions

The authors state that, to the best of their knowledge, this is the first study of ChatGPT's zero-shot capabilities on various NLP tasks, and that it aims to provide a preliminary overview of ChatGPT. Their main findings are as follows:

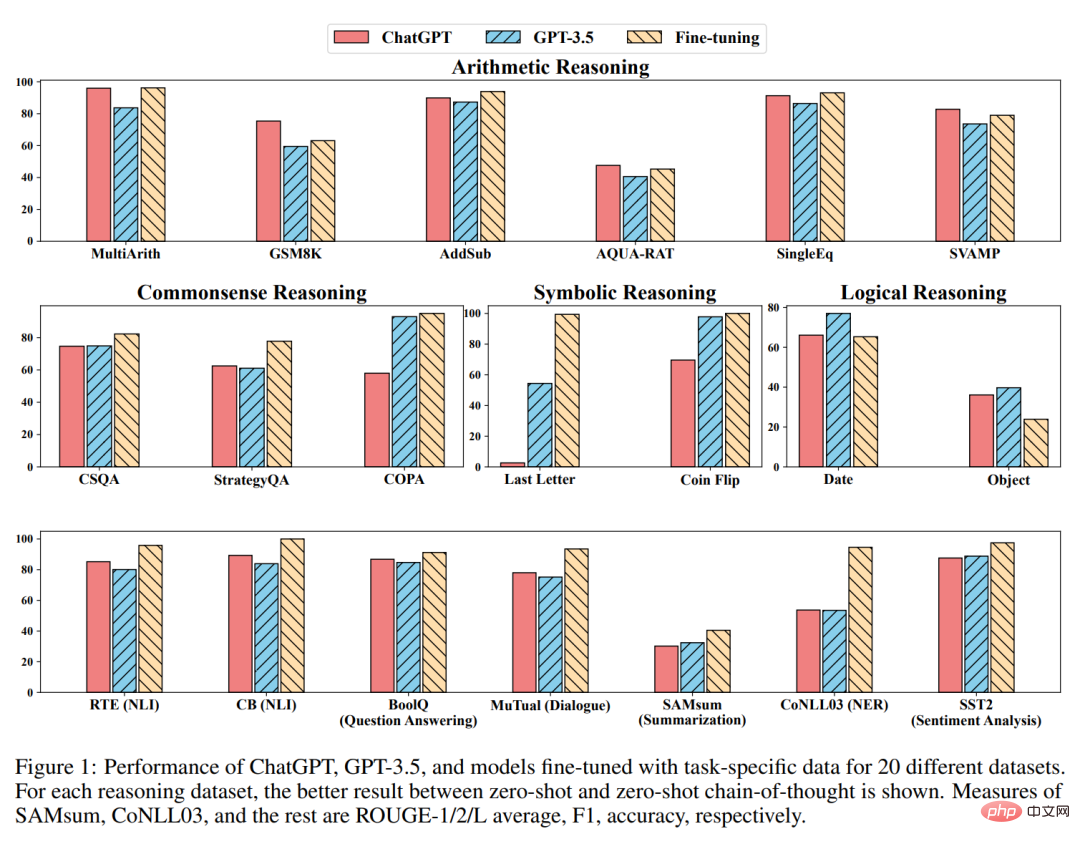

- Although ChatGPT, as a generalist model, shows some ability to perform multiple tasks, it generally performs worse than models fine-tuned for a given task (see Figure 1 and Section 4.3).

- ChatGPT's strong reasoning ability is confirmed experimentally on arithmetic reasoning tasks (Section 4.2.1). However, ChatGPT generally performs worse than GPT-3.5 on commonsense, symbolic, and logical reasoning tasks, for example because it generates uncertain responses (Section 4.2.2).

- ChatGPT outperforms GPT-3.5 on natural language inference tasks (Section 4.2.3) and question answering (reading comprehension) tasks (Section 4.2.4), which favor reasoning ability, such as determining the logical relationship within a pair of texts. Specifically, ChatGPT is better at handling text that is consistent with the facts (i.e., better at classifying entailment than non-entailment).

- ChatGPT outperforms GPT-3.5 on dialogue tasks (Section 4.2.5).

- On summarization tasks, ChatGPT generates longer summaries and performs worse than GPT-3.5. However, explicitly limiting the summary length in the zero-shot instruction harms summary quality, resulting in even lower performance (Section 4.2.6).

- Despite showing promise as generalist models, both ChatGPT and GPT-3.5 face challenges on certain tasks, such as sequence tagging (Section 4.2.7).

- ChatGPT’s sentiment analysis capabilities are close to GPT-3.5 (Section 4.2.8).

Method

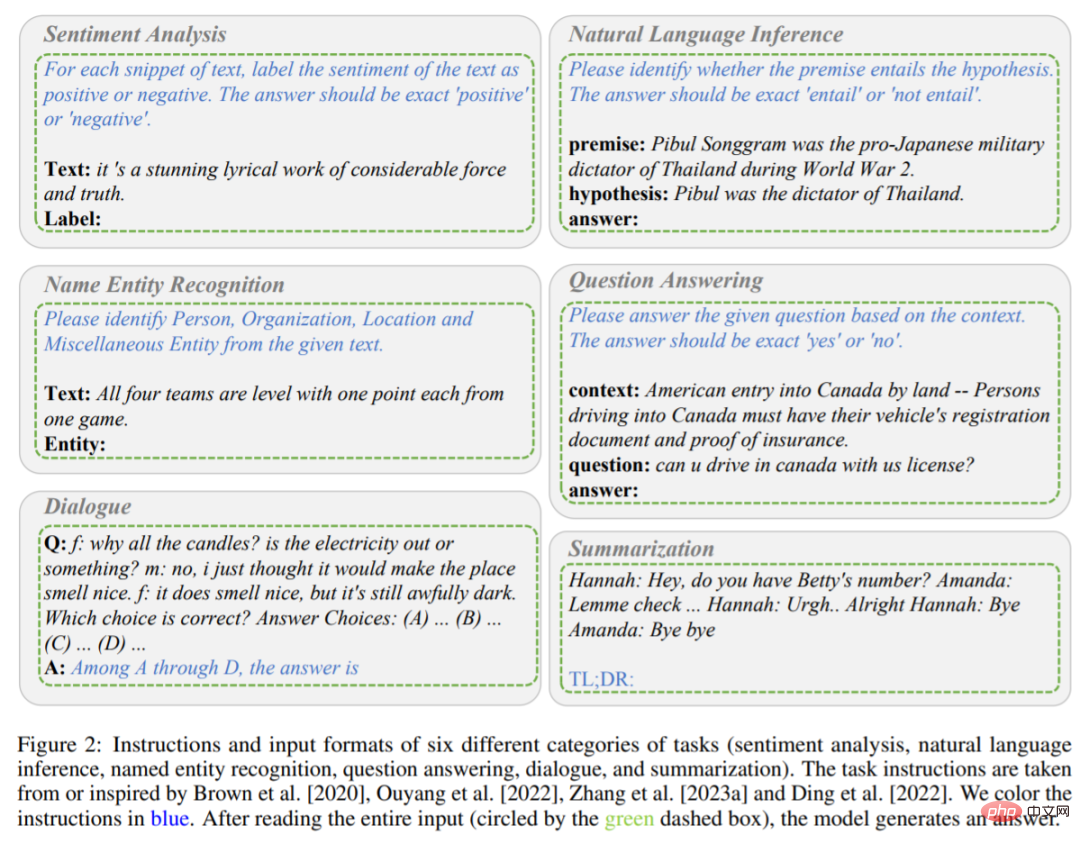

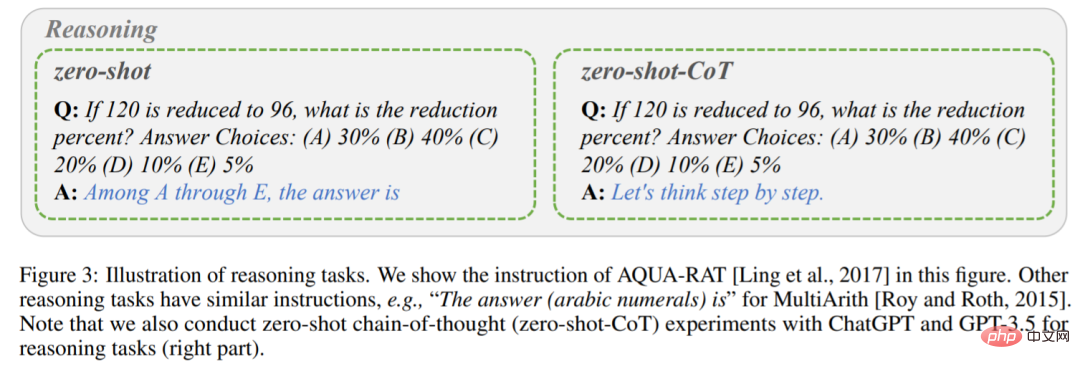

As mentioned previously, this study mainly compares the zero-shot learning performance of ChatGPT and GPT-3.5 (text-davinci-003) on different tasks. Specifically, given a task instruction P and a test question X as input, the model, denoted f, generates the target text Y = f(P, X) to solve the test question. The instructions and input formats for the different tasks are shown in Figures 2 and 3.

Figure 2 contains the instructions and input formats for six tasks (sentiment analysis, natural language inference, named entity recognition, question answering, dialogue, and summarization). Instructions are shown in blue font.

Figure 3 illustrates the reasoning tasks.

For example, when the model performs a sentiment analysis task, the task instruction P asks it to label the sentiment of the text as positive or negative, and the answer should be either "positive" or "negative". Given the instruction P and the input X ("a stunning lyrical work of considerable power and authenticity"), the model is expected to output Y = "positive".
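The single-stage setup Y = f(P, X) can be sketched as follows. This is only an illustration: the prompt layout, the helper names `build_prompt` and `solve`, and the `toy_model` stand-in are assumptions made here, not the paper's actual prompts or code. In a real run, `f` would be a call to ChatGPT or GPT-3.5.

```python
from typing import Callable


def build_prompt(instruction: str, test_input: str) -> str:
    """Join task instruction P and test input X into one prompt (assumed layout)."""
    return f"{instruction}\n\nText: {test_input}\nAnswer:"


def solve(f: Callable[[str], str], instruction: str, test_input: str) -> str:
    """Compute Y = f(P, X) and normalize the generated label."""
    return f(build_prompt(instruction, test_input)).strip().lower()


def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for the model f; it only reacts to one keyword."""
    return " Positive" if "stunning" in prompt else " Negative"


# P paraphrases the instruction described in the article; X is the article's example.
P = ("Label the sentiment of the text as positive or negative. "
     "The answer should be exactly 'positive' or 'negative'.")
X = "a stunning lyrical work of considerable power and authenticity"

Y = solve(toy_model, P, X)
print(Y)  # positive
```

Passing the model in as a plain callable keeps the prompt construction separate from whichever API is used to query the model.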

Unlike the single-stage prompting method described above, this study uses two-stage prompting (proposed by Kojima et al.) to perform zero-shot-CoT.

The first stage uses "Let's think step by step" as the instruction P_1 to induce the model to generate a rationale R.

The second stage takes the rationale R generated in the first stage, together with the original input X and the instruction P_1, as the new input, and appends a new instruction P_2 as a trigger sentence to extract the final answer.

All task instructions were taken from or inspired by the work of Brown, Ouyang, Zhang, et al. One last point to note is that before each new query to ChatGPT, the conversation has to be cleared to avoid any influence from previous examples.
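The two-stage procedure can be sketched as below, under stated assumptions. The `Q:`/`A:` layout and the default wording of P_2 follow the answer-extraction trigger commonly associated with Kojima et al. and are assumptions here, not text taken from this article. `ScriptedModel` is a hypothetical stand-in that replays canned replies, and the example question is made up for illustration.

```python
from typing import Callable, List, Tuple


def zero_shot_cot(
    f: Callable[[str], str],
    question: str,
    p1: str = "Let's think step by step.",
    p2: str = "Therefore, the answer (arabic numerals) is",
) -> Tuple[str, str]:
    """Two-stage zero-shot-CoT: first elicit a rationale R, then extract the answer."""
    # Stage 1: input X plus instruction P_1 induces the rationale R.
    stage1_prompt = f"Q: {question}\nA: {p1}"
    rationale = f(stage1_prompt).strip()
    # Stage 2: X, P_1 and R are fed back, followed by the trigger P_2.
    stage2_prompt = f"{stage1_prompt} {rationale}\n{p2}"
    answer = f(stage2_prompt).strip()
    return rationale, answer


class ScriptedModel:
    """Hypothetical model that replays fixed replies and records the prompts it saw."""

    def __init__(self, replies: List[str]) -> None:
        self.replies = list(replies)
        self.prompts: List[str] = []

    def __call__(self, prompt: str) -> str:
        self.prompts.append(prompt)
        return self.replies.pop(0)


model = ScriptedModel(["3 boxes times 4 books is 12 books.", " 12."])
rationale, answer = zero_shot_cot(
    model, "A shelf holds 3 boxes with 4 books each. How many books are there?"
)
print(rationale)
print(answer)
```

Each call to `f` receives the full prompt text and carries no hidden state, which mirrors the requirement to clear the conversation before every new query.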

Experiment

The experiment uses 20 different datasets to evaluate ChatGPT and GPT-3.5, covering 7 types of tasks.

Arithmetic Reasoning

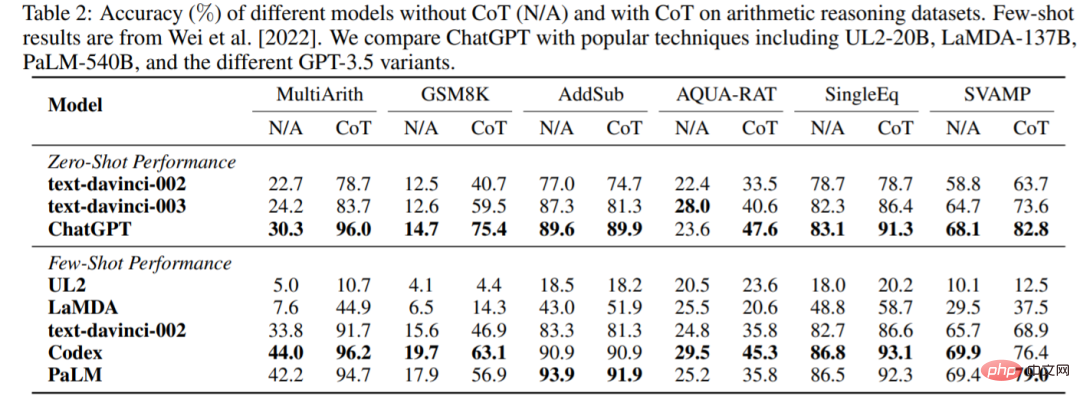

The accuracy of ChatGPT and GPT-3.5 with and without CoT on six arithmetic reasoning datasets is shown in Table 2. In the experiments without CoT, ChatGPT outperforms GPT-3.5 on 5 of the datasets, demonstrating its strong arithmetic reasoning capabilities.

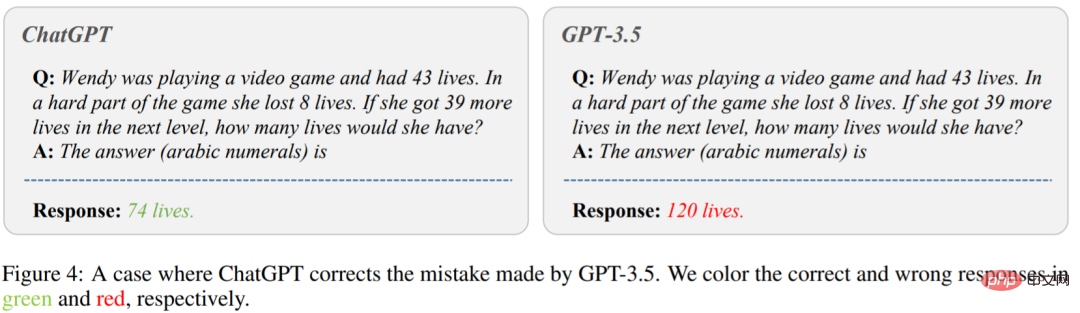

Figure 4 shows a case where GPT-3.5 gives a wrong answer. The question on the left of the figure asks: "Wendy is playing a video game and has 43 lives. During the hard part of the game, she lost 8 lives. If she gets 39 more lives on the next level, how many lives will she have?" ChatGPT gives the correct answer, whereas GPT-3.5 generates a wrong one. ChatGPT also performs much better than GPT-3.5 when CoT is used.
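For reference, the expected answer to the Wendy question is 43 - 8 + 39 = 74. The sketch below checks that arithmetic and shows one simple way a free-text model reply could be scored against it. The helpers `extract_number` and `is_correct` are hypothetical and are not the paper's evaluation script; taking the last number in the reply is an assumed heuristic.

```python
import re
from typing import Optional

# Worked arithmetic for the example question.
lives = 43
lives -= 8   # lives lost during the hard part of the game
lives += 39  # lives gained on the next level
print(lives)  # 74


def extract_number(text: str) -> Optional[float]:
    """Return the last number mentioned in a model reply, or None if there is none."""
    matches = re.findall(r"-?\d+(?:\.\d+)?", text.replace(",", ""))
    return float(matches[-1]) if matches else None


def is_correct(model_output: str, gold: float) -> bool:
    """Score a reply as correct when its last number equals the gold answer."""
    return extract_number(model_output) == gold


print(is_correct("She has 43 - 8 = 35 lives, then 35 + 39 = 74 lives.", lives))  # True
```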

Commonsense, Symbolic, and Logical Reasoning

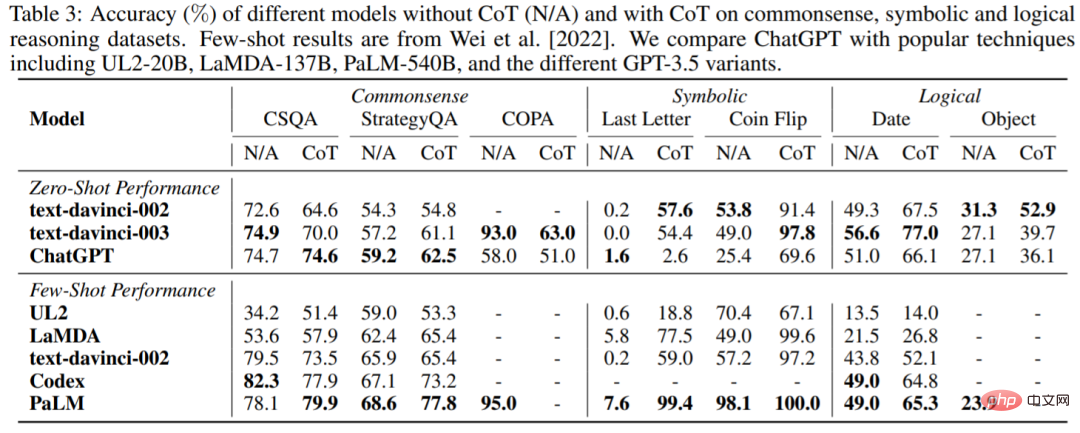

Table 3 reports the accuracy of ChatGPT and popular LLMs on commonsense, symbolic, and logical reasoning datasets. Two observations can be made. First, using CoT does not always improve performance on commonsense reasoning tasks, which may require more fine-grained background knowledge. Second, unlike in arithmetic reasoning, ChatGPT performs worse than GPT-3.5 in many cases, indicating that GPT-3.5 is stronger in these capabilities.

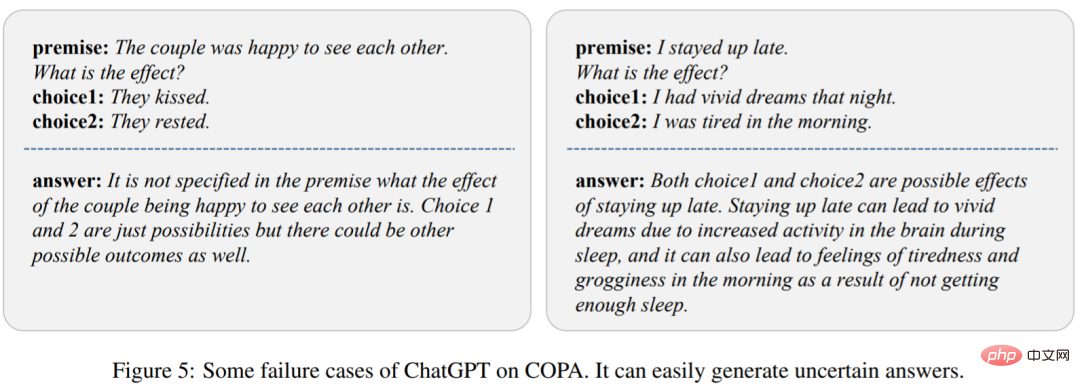

To analyze the reasons, the study shows several failure cases of ChatGPT in Figure 5. ChatGPT tends to produce uncertain responses, which leads to poor performance.

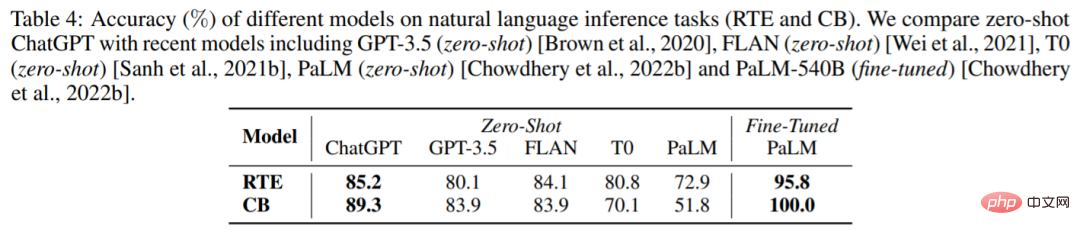

Natural Language Inference

Table 4 shows the results of different models on two natural language inference tasks: RTE and CB. Under zero-shot settings, ChatGPT achieves better performance than GPT-3.5, FLAN, T0, and PaLM. This indicates that ChatGPT has better zero-shot performance on natural language inference tasks.

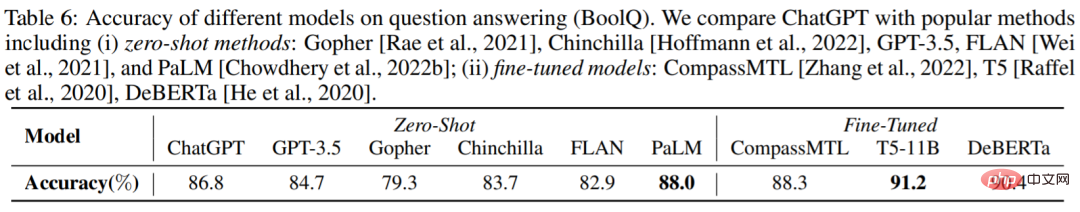

Table 6 reports the accuracy of different models on the BoolQ question answering dataset. ChatGPT is better than GPT-3.5, which suggests that ChatGPT handles reasoning tasks better.

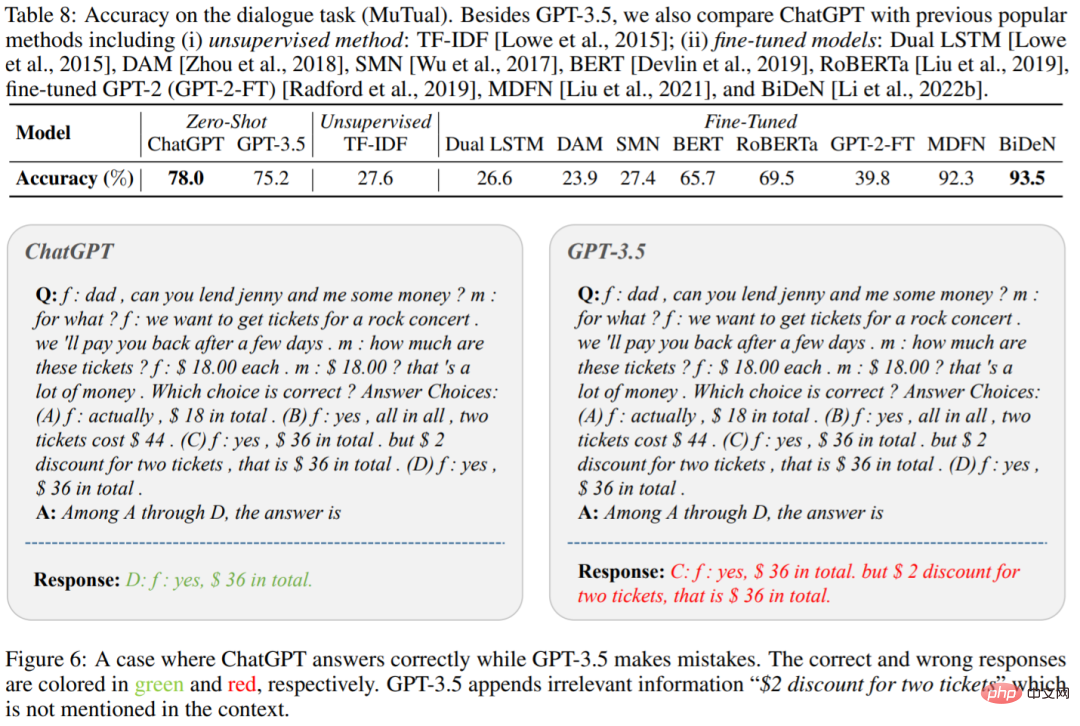

Table 8 shows the accuracy of ChatGPT and GPT-3.5 on the MuTual dataset (multi-turn dialogue reasoning). As expected, ChatGPT significantly outperforms GPT-3.5.

Figure 6 gives a specific example in which ChatGPT reasons more effectively about the given context. This once again confirms ChatGPT's strong reasoning capabilities.

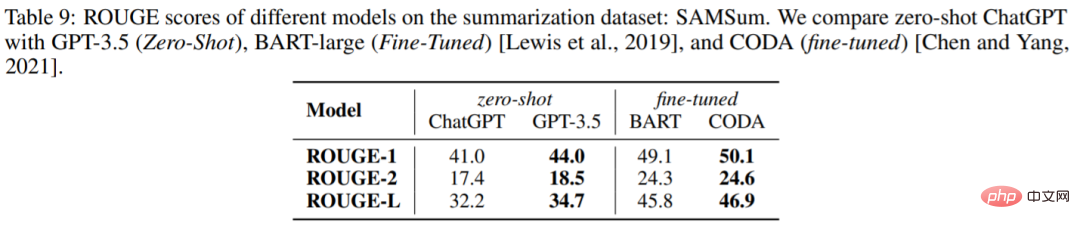

Table 9 reports the ROUGE scores of ChatGPT and GPT-3.5 on the SAMSum dataset. Surprisingly, ChatGPT is inferior to GPT-3.5 on all metrics.
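The main conclusions above note that ChatGPT generates longer summaries. A simplified ROUGE-1 sketch shows one way extra length can lower the score: words not in the reference reduce precision, and with it the F1 value. This is a rough illustration under assumptions: whitespace tokenization, no stemming, and made-up example sentences. It is neither the official ROUGE implementation nor the paper's analysis.

```python
from collections import Counter
from typing import Tuple


def rouge1(candidate: str, reference: str) -> Tuple[float, float, float]:
    """Simplified ROUGE-1: unigram-overlap precision, recall, and F1."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())  # clipped count of shared unigrams
    precision = overlap / max(sum(cand.values()), 1)
    recall = overlap / max(sum(ref.values()), 1)
    f1 = 0.0 if overlap == 0 else 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Made-up toy strings, not taken from SAMSum.
reference = "amanda will bring cookies tomorrow"
short_summary = "amanda will bring cookies"
long_summary = "amanda said that she will bring some cookies for jerry tomorrow"

for name, summary in [("short", short_summary), ("long", long_summary)]:
    p, r, f1 = rouge1(summary, reference)
    print(f"{name}: precision={p:.3f} recall={r:.3f} f1={f1:.3f}")
```

In this toy case the longer summary covers every reference word, yet its F1 is lower than that of the shorter one because most of its words do not appear in the reference.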

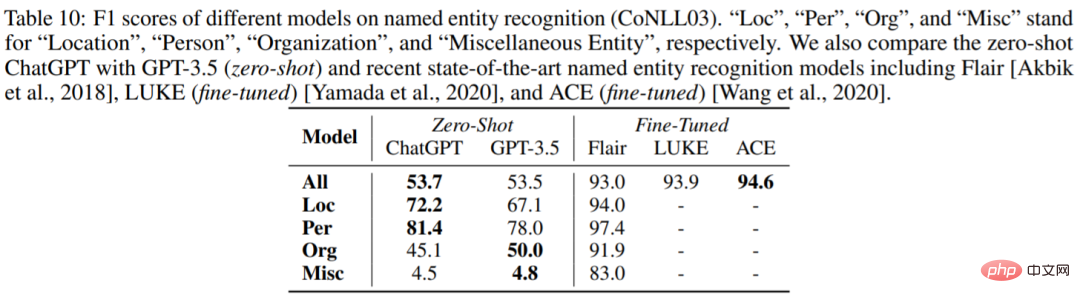

Named Entity Recognition

Table 10 reports the zero-shot performance of ChatGPT and GPT-3.5 on CoNLL03. We can see that the overall performance of ChatGPT and GPT-3.5 is very similar.

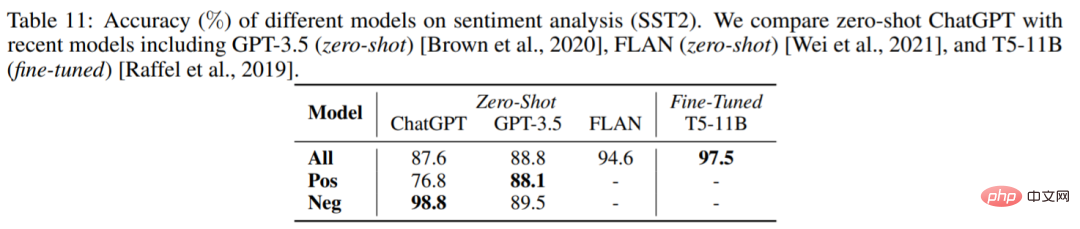

Sentiment Analysis

Table 11 compares the accuracy of different models on the sentiment analysis data set SST2. Surprisingly, ChatGPT performs about 1% worse than GPT-3.5.
