Tsinghua's latest 'continuous learning' survey: 32 pages reviewing continuous learning theory, methods and applications


In a general sense, continuous learning is clearly limited by catastrophic forgetting: learning a new task usually causes a sharp drop in performance on old tasks.

Beyond this, an increasing number of developments in recent years have greatly expanded the understanding and application of continuous learning.

The growing and widespread interest in this direction demonstrates both its practical significance and its complexity.

Paper address: https://www.php.cn/link/82039d16dce0aab3913b6a7ac73deff7

This article presents a comprehensive survey of continuous learning and attempts to connect its basic settings, theoretical foundations, representative methods and practical applications.

Based on existing theoretical and empirical results, the general objectives of continuous learning are summarized as ensuring an appropriate stability-plasticity trade-off and adequate intra-task and inter-task generalizability, in the context of resource efficiency.

It then provides a state-of-the-art, detailed taxonomy, extensively analyzing how representative strategies address continuous learning and how they adapt to particular challenges in various applications.

Through an in-depth discussion of current trends in continuous learning, cross-directional prospects and interdisciplinary connections with neuroscience, we believe this holistic perspective can greatly facilitate follow-up exploration in this field and beyond.

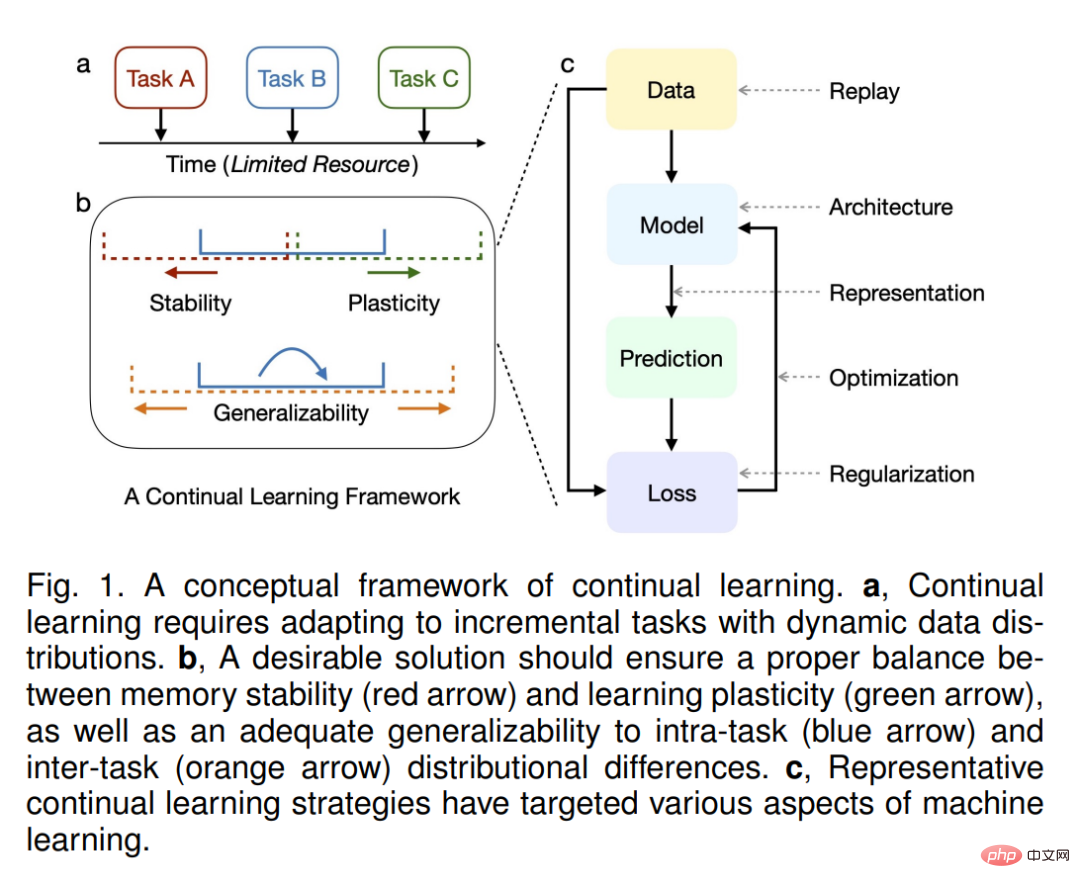

## Introduction

Learning is the basis for intelligent systems to adapt to their environments. To cope with changes in the outside world, evolution has made humans and other organisms highly adaptable, able to continually acquire, update, accumulate and exploit knowledge [148], [227], [322]. Naturally, we expect artificial intelligence (AI) systems to adapt in a similar way. This has motivated research on continuous learning, where a typical setting is to learn a sequence of contents one by one and behave as if they were observed simultaneously (Figure 1, a). These contents can be new skills, new examples of old skills, different environments, different contexts, etc., and involve particular real-world challenges [322], [413]. Since the contents are provided incrementally over a lifetime, continuous learning is also referred to as incremental learning or lifelong learning in much of the literature, without a strict distinction [70], [227].

Unlike conventional machine learning models built on a static data distribution, continuous learning is characterized by learning from a dynamic data distribution. A major challenge is known as catastrophic forgetting [291], [292], where adapting to a new distribution generally results in a largely reduced ability to capture the old ones. This dilemma is one facet of the trade-off between learning plasticity and memory stability: too much of the former interferes with the latter, and vice versa. Beyond simply balancing the “ratio” of these two aspects, an ideal solution for continuous learning should obtain strong generalizability to accommodate distribution differences within and between tasks (Figure 1, b). As a naive baseline, retraining on all old training samples (if allowed) easily addresses these challenges, but incurs huge computational and storage overhead (as well as potential privacy issues). In fact, continuous learning primarily aims to ensure resource-efficient model updates, ideally close to the cost of learning only the new training samples.
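To make catastrophic forgetting and the retraining baseline concrete, here is a minimal, hypothetical toy in pure Python (not taken from the survey). Two quadratic "tasks" share a 2-D parameter vector: task A only wants `theta[0] == 1`, task B wants `theta[0] + theta[1] == 3`. Both can be satisfied at once (`theta = [1, 2]`), yet training on them one after the other ends near `[2, 1]`, so task A's loss climbs from about 0 to about 1; joint retraining on both recovers a solution for both.

```python
# Hypothetical toy: two tasks share one 2-D parameter vector `theta`.
# Task A: loss (theta[0] - 1)^2.  Task B: loss (theta[0] + theta[1] - 3)^2.
# theta = [1, 2] solves both, so any forgetting below comes purely from
# training on the tasks sequentially.

def loss_a(theta):
    return (theta[0] - 1.0) ** 2

def grad_a(theta):
    return [2.0 * (theta[0] - 1.0), 0.0]

def loss_b(theta):
    return (theta[0] + theta[1] - 3.0) ** 2

def grad_b(theta):
    r = 2.0 * (theta[0] + theta[1] - 3.0)
    return [r, r]

def train(theta, grad_fns, steps=500, lr=0.05):
    """Plain gradient descent on the sum of the given task losses."""
    theta = list(theta)
    for _ in range(steps):
        g = [0.0, 0.0]
        for grad_fn in grad_fns:
            g = [gi + di for gi, di in zip(g, grad_fn(theta))]
        theta = [t - lr * gi for t, gi in zip(theta, g)]
    return theta

start = [0.0, 0.0]
after_a = train(start, [grad_a])        # learn task A
after_b = train(after_a, [grad_b])      # then task B, with no access to A
joint = train(start, [grad_a, grad_b])  # naive baseline: retrain on everything

print("task A loss after A:       ", round(loss_a(after_a), 4))
print("task A loss after A then B:", round(loss_a(after_b), 4))
print("task A loss, joint:        ", round(loss_a(joint), 4))
```

Real networks are far from quadratic, but the same mechanism applies: the update for the new task moves shared parameters that the old task relied on.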

Many efforts have been devoted to these challenges, and they can be conceptually divided into five groups (Figure 1, c): adding regularization terms with reference to the old model (regularization-based approaches); approximating and recovering old data distributions (replay-based approaches); explicitly manipulating the optimization procedure (optimization-based approaches); learning robust and well-generalized representations (representation-based approaches); and constructing task-adaptive parameters with properly designed architectures (architecture-based approaches). This taxonomy extends commonly used taxonomies with recent advances and provides refined sub-directions for each category. It summarizes how these methods achieve the proposed general objectives, and provides an extensive analysis of their theoretical foundations and typical implementations. In particular, these methods are closely connected: for example, regularization and replay ultimately act to rectify the gradient direction in optimization. They are also highly synergistic: for example, the efficacy of replay can be improved by distilling knowledge from the old model.
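The synergy between replay and distillation mentioned above can be sketched as a single training loss. This is a hypothetical illustration, not the survey's formulation: replayed examples get both a cross-entropy term and a KL term that keeps the new model's softened outputs close to those of a frozen old model. The weight `alpha`, the `temperature` and all function names are assumptions chosen for the example.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax with an optional temperature."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, label):
    """Cross-entropy of one example against its integer label."""
    return -math.log(softmax(logits)[label])


def distillation(new_logits, old_logits, temperature=2.0):
    """KL(p_old || p_new) between temperature-softened outputs."""
    p_old = softmax(old_logits, temperature)
    p_new = softmax(new_logits, temperature)
    return sum(p * math.log(p / q) for p, q in zip(p_old, p_new))


def replay_with_distillation_loss(batch, alpha=1.0, temperature=2.0):
    """Average loss over a mixed batch of current and replayed examples.

    Each item is (new_logits, old_logits, label). Current-task items pass
    old_logits=None; replayed items carry the frozen old model's logits,
    so they also contribute a distillation term weighted by alpha.
    """
    total = 0.0
    for new_logits, old_logits, label in batch:
        total += cross_entropy(new_logits, label)
        if old_logits is not None:
            total += alpha * distillation(new_logits, old_logits, temperature)
    return total / len(batch)


batch = [
    ([2.0, 0.5, -1.0], None, 0),            # current-task example
    ([0.3, 1.5, 0.2], [0.1, 2.0, 0.0], 1),  # replayed example + old-model logits
]
print(replay_with_distillation_loss(batch))
```

In a real framework the logits would come from the current and frozen networks, and the gradient of this loss would drive the update; some implementations also rescale the KL term by the squared temperature.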

Real-world applications pose particular challenges for continuous learning, which can be divided into scene complexity and task specificity. For the former, the task oracle (i.e., which task to perform) may be missing during training and testing, and training samples may arrive in small batches or even in a single pass. Because data labelling is costly and scarce, continuous learning also needs to be effective in few-shot, semi-supervised or even unsupervised scenarios. For the latter, while current progress focuses mainly on visual classification, other vision domains such as object detection, semantic segmentation and image generation, as well as related fields such as reinforcement learning (RL), natural language processing (NLP) and ethical considerations, are receiving increasing attention, each with its own opportunities and challenges.
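Why a missing task oracle matters can be seen at inference time. In this hypothetical sketch, a model outputs one logit per class seen so far. With the oracle, prediction is restricted to the classes of the given task; without it, the model must choose among all classes, and a bias toward recently learned classes turns into errors on old tasks. The class split and logit values are made up for illustration.

```python
def predict(logits, task_classes=None):
    """Return the predicted class index.

    task_classes: class indices belonging to the task, when a task oracle
    is available. When it is None, the model must choose among all classes
    seen so far, with no hint about which task the input came from.
    """
    candidates = range(len(logits)) if task_classes is None else task_classes
    return max(candidates, key=lambda c: logits[c])


# Hypothetical split: task 0 owns classes 0 and 1, task 1 owns classes 2 and 3.
TASKS = {0: [0, 1], 1: [2, 3]}

# Hypothetical logits for an input from task 0, produced by a model that
# has drifted toward the newer task-1 classes.
logits = [1.2, 0.4, 2.0, 0.1]

print(predict(logits, TASKS[0]))  # with the oracle: class 0
print(predict(logits))            # without it: class 2, a task-1 class
```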

Given the significant growth of interest in continuous learning, we believe this up-to-date and comprehensive survey can provide a holistic perspective for subsequent work. Although some early surveys of continuous learning have relatively broad coverage [70], [322], they do not include the important advances of recent years. In contrast, recent surveys have generally covered only partial aspects of continuous learning, such as its biological underpinnings [148], [156], [186], [227], specialized settings for visual classification [85], [283], [289], [346], and extensions to NLP [37], [206] or RL [214]. To the best of our knowledge, this is the first survey to systematically summarize recent advances in continuous learning. Building on these strengths, we provide an in-depth discussion of current trends in continuous learning, cross-directional prospects (such as diffusion models, large-scale pre-training, vision transformers, embodied AI and neural compression) and interdisciplinary connections with neuroscience.

Main contributions include:

(1) An up-to-date and comprehensive review of continuous learning, connecting advances in theory, methods and applications;

(2) A summary of the general objectives of continuous learning based on existing theoretical and empirical results, and a detailed taxonomy of representative strategies;

(3) A division of the particular challenges of real-world applications into scene complexity and task specificity, with an extensive analysis of how continuous learning strategies adapt to these challenges.

This paper is organized as follows. In Section 2, we introduce the setup of continuous learning, including its basic formulation, typical scenarios and evaluation metrics. In Section 3, we summarize theoretical efforts on continuous learning together with their general objectives. In Section 4, we provide an up-to-date, detailed taxonomy of representative strategies, analyzing their motivations and typical implementations. In Sections 5 and 6, we describe how these strategies adapt to the real-world challenges of scene complexity and task specificity. In Section 7, we discuss current trends, cross-directional prospects and interdisciplinary connections with neuroscience.
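Section 2 covers evaluation metrics, which this excerpt does not reproduce. As a hedged illustration, the sketch below assumes two definitions that are common in the continual learning literature, computed from an accuracy matrix `acc[i][j]` (accuracy on task `j` after training on task `i`): average accuracy over the tasks seen so far, and average forgetting, the drop from each old task's best earlier accuracy to its current accuracy. The matrix values are hypothetical.

```python
def average_accuracy(acc, k):
    """Mean accuracy over tasks 0..k, measured after training on task k.

    acc[i][j] is the accuracy on task j after training on task i (0-indexed).
    """
    return sum(acc[k][j] for j in range(k + 1)) / (k + 1)


def average_forgetting(acc, k):
    """Mean drop from each old task's best earlier accuracy to its accuracy now."""
    if k == 0:
        return 0.0  # nothing learned before the first task, so nothing to forget
    drops = []
    for j in range(k):
        best_before = max(acc[i][j] for i in range(j, k))
        drops.append(best_before - acc[k][j])
    return sum(drops) / k


# Hypothetical accuracy matrix for three sequential tasks (lower triangle used).
acc = [
    [0.95, 0.00, 0.00],
    [0.80, 0.93, 0.00],
    [0.70, 0.85, 0.94],
]
print(average_accuracy(acc, 2))    # (0.70 + 0.85 + 0.94) / 3
print(average_forgetting(acc, 2))  # ((0.95 - 0.70) + (0.93 - 0.85)) / 2
```

Read together, high average accuracy with low forgetting indicates a good stability-plasticity balance; either number alone can be misleading.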

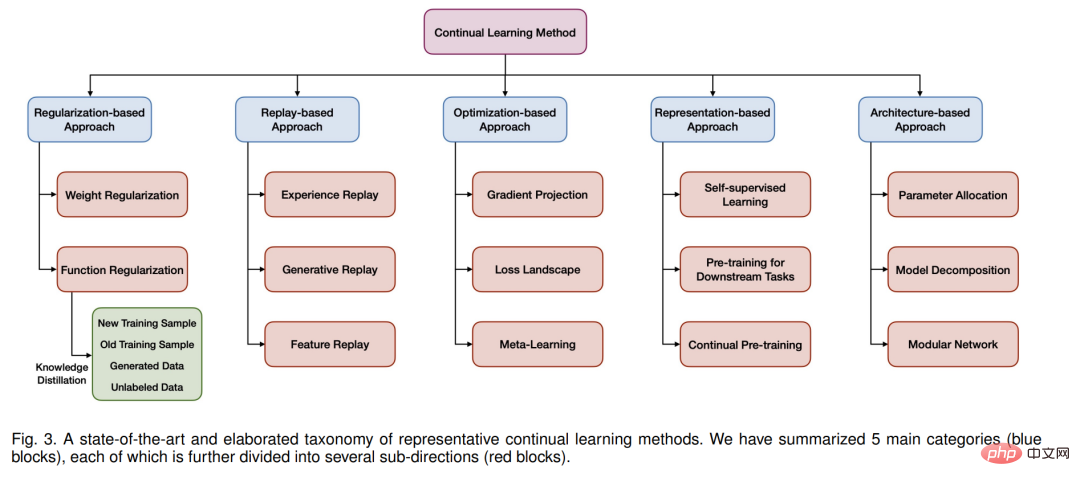

In this section, we detail the taxonomy of representative continuous learning methods (see Figure 3 and Figure 1, c), and extensively analyze their main motivations, typical implementations and empirical properties.

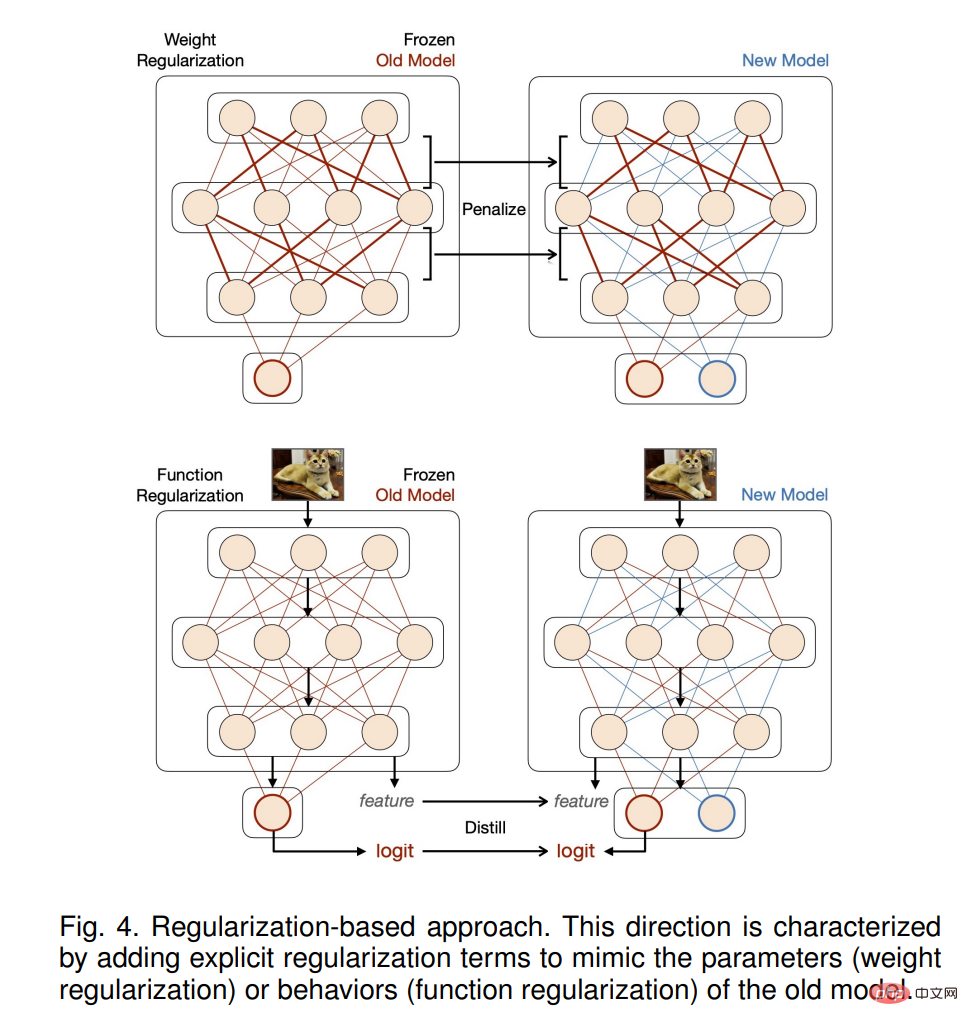

## Regularization-based methods

This direction is characterized by adding explicit regularization terms to balance old and new tasks, which often requires storing a frozen copy of the old model for reference (see Figure 4). According to the goal of regularization, such methods can be divided into two categories.
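One well-known form of regularization penalizes changes to parameters that mattered for the old task, weighted by an importance estimate, as in Elastic Weight Consolidation (EWC). The sketch below is a simplified, hypothetical version: the importance values are given by hand rather than estimated from Fisher information, and the tasks are toy quadratics. The old model sits at `[1, 0]` and the old task only cared about `theta[0]`. The new task wants `theta[0] + theta[1] == 3`. Plain fine-tuning from `[1, 0]` would move `theta[0]` to 2 and break the old task; with the penalty, the free parameter `theta[1]` absorbs the change instead.

```python
def ewc_penalty(theta, theta_old, importance, lam):
    """(lam / 2) * sum_i F_i * (theta_i - theta_old_i) ** 2.

    `importance` plays the role of F_i; EWC estimates it as the diagonal
    Fisher information on the old task, but here it is simply given.
    """
    return 0.5 * lam * sum(
        f * (t - t0) ** 2 for f, t, t0 in zip(importance, theta, theta_old)
    )


def ewc_penalty_grad(theta, theta_old, importance, lam):
    """Gradient of ewc_penalty with respect to theta."""
    return [lam * f * (t - t0) for f, t, t0 in zip(importance, theta, theta_old)]


def grad_new_task(theta):
    """Gradient of the hypothetical new-task loss (theta[0] + theta[1] - 3) ** 2."""
    r = 2.0 * (theta[0] + theta[1] - 3.0)
    return [r, r]


def train_new_task(theta_old, importance, lam=10.0, steps=500, lr=0.05):
    """Gradient descent on new-task loss + penalty, starting from the old model."""
    theta = list(theta_old)
    for _ in range(steps):
        g_task = grad_new_task(theta)
        g_reg = ewc_penalty_grad(theta, theta_old, importance, lam)
        theta = [t - lr * (a + b) for t, a, b in zip(theta, g_task, g_reg)]
    return theta


theta_old = [1.0, 0.0]   # old model: the old task needed theta[0] == 1
importance = [1.0, 0.0]  # hypothetical: only theta[0] mattered for the old task
theta = train_new_task(theta_old, importance)
print([round(t, 4) for t in theta])  # -> [1.0, 2.0]
```

Note that the penalty needs `theta_old`, which is why such methods keep a frozen copy of the old model.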

## Replay-based methods

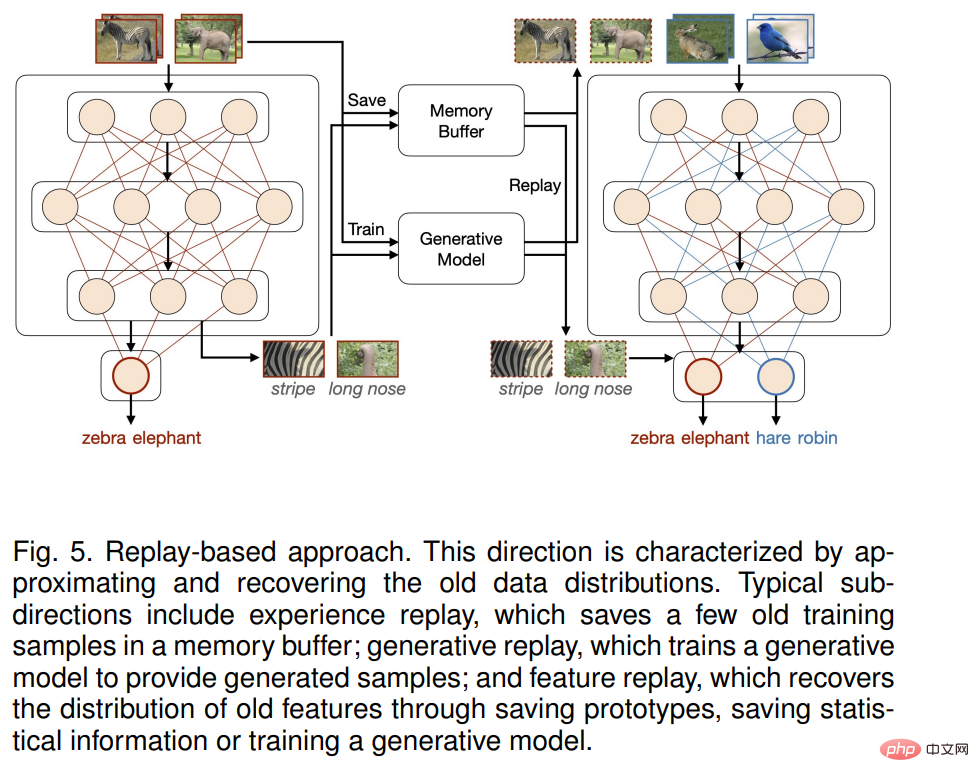

This direction groups methods that approximate and recover old data distributions (see Figure 5). Depending on the content being replayed, these methods can be further divided into three sub-directions, each with its own challenges.
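A common ingredient of replaying stored samples is a small, fixed-size memory of old examples. Reservoir sampling is one widely used way to fill it from a stream without knowing its length: after `n` examples, each has the same `capacity / n` chance of being stored. This is an illustrative sketch, not the survey's method; the class name and the `(task, index)` example format are hypothetical.

```python
import random


class ReservoirBuffer:
    """Fixed-size replay memory filled by reservoir sampling."""

    def __init__(self, capacity, seed=0):
        self.capacity = capacity
        self.data = []
        self.seen = 0
        self.rng = random.Random(seed)

    def add(self, example):
        self.seen += 1
        if len(self.data) < self.capacity:
            self.data.append(example)
        else:
            # Keep the new example with probability capacity / seen,
            # overwriting a uniformly chosen stored example.
            j = self.rng.randrange(self.seen)
            if j < self.capacity:
                self.data[j] = example

    def sample(self, batch_size):
        """Draw a replay mini-batch (without replacement) to mix with new data."""
        k = min(batch_size, len(self.data))
        return self.rng.sample(self.data, k)


buffer = ReservoirBuffer(capacity=100)
for task in range(3):             # three tasks arriving one after another
    for i in range(1000):
        buffer.add((task, i))     # hypothetical example: (task id, index)
replay_batch = buffer.sample(32)
print(len(buffer.data), buffer.seen, len(replay_batch))
```

The memory size stays constant no matter how many tasks arrive, which is what keeps replay resource-efficient compared with retraining on all old data.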

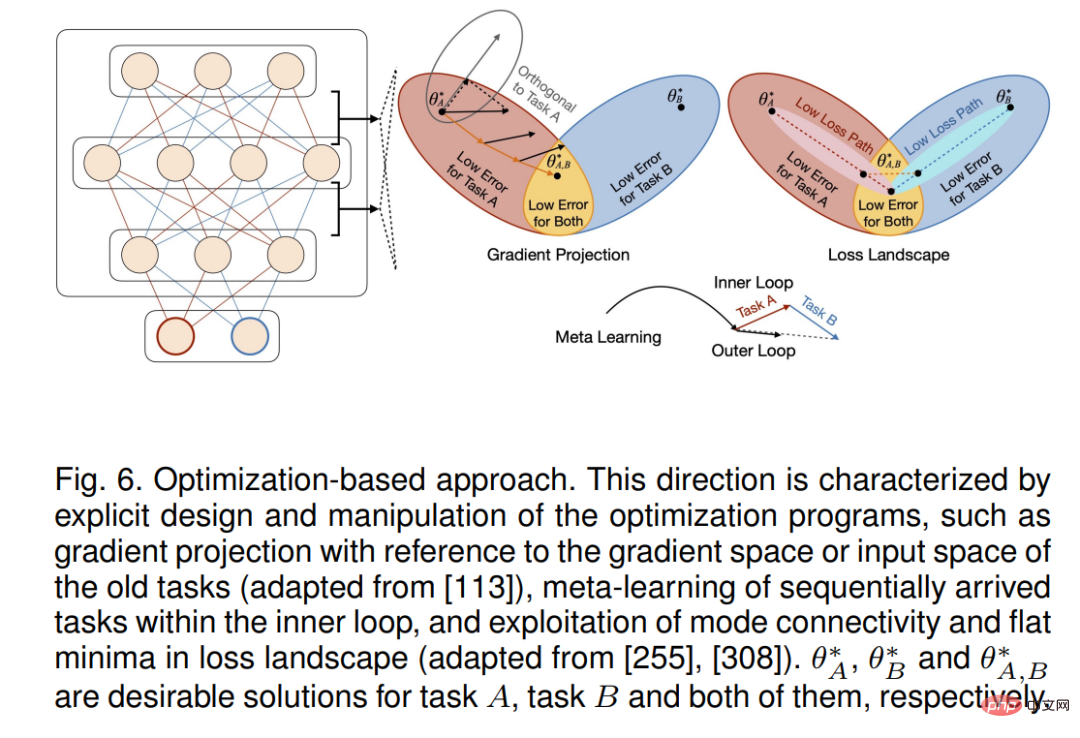

## Optimization-based methods

Continuous learning can be achieved not only by adding extra terms to the loss function (such as regularization and replay), but also by explicitly designing and manipulating the optimization procedure.
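A well-known instance of manipulating the optimization procedure (not spelled out in this excerpt) is gradient projection as in A-GEM: if the current-task gradient conflicts with a reference gradient computed on replayed old-task samples (negative dot product), the conflicting component is removed before the update. The sketch below implements that rule on plain lists; the gradient values are hypothetical.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def agem_project(grad, ref_grad):
    """A-GEM-style projection.

    If grad . ref_grad < 0, return grad - (grad . ref_grad / |ref_grad|^2) * ref_grad,
    so the update no longer increases the reference (old-task) loss to first order.
    Otherwise return grad unchanged.
    """
    g_dot_ref = dot(grad, ref_grad)
    if g_dot_ref >= 0:
        return list(grad)  # no conflict with the old tasks
    scale = g_dot_ref / dot(ref_grad, ref_grad)
    return [g - scale * r for g, r in zip(grad, ref_grad)]


g_new = [1.0, -2.0]  # gradient on the current task (hypothetical values)
g_mem = [0.0, 1.0]   # gradient on replayed old-task samples (hypothetical)
print(agem_project(g_new, g_mem))  # -> [1.0, 0.0]
```

This also illustrates the earlier remark that replay ultimately acts on the gradient direction: the replayed samples are used only to compute `g_mem`.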

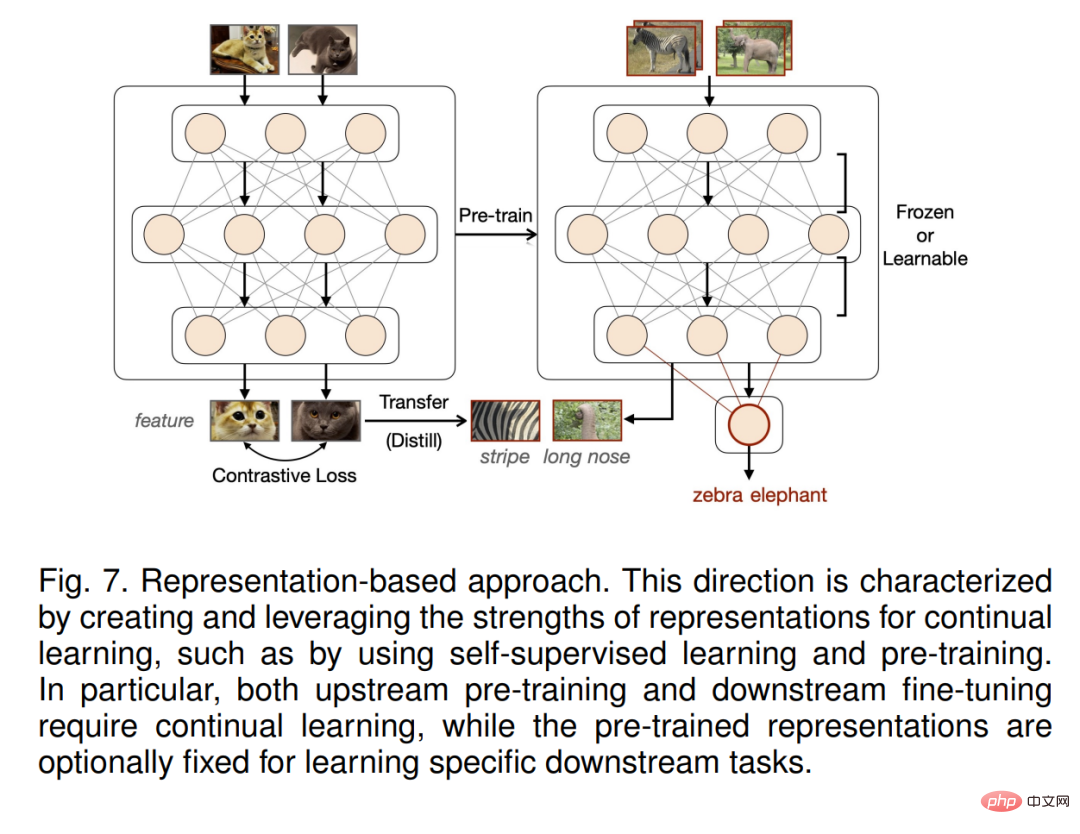

## Representation-based methods

Methods that create and exploit representations suited to continuous learning fall into this category. In addition to early work on obtaining sparse representations through meta-training [185], recent work has attempted to incorporate self-supervised learning (SSL) [125], [281], [335] and large-scale pre-training [295], [380], [456] to improve representations both at initialization and during continuous learning. Note that these two strategies are closely related, since pre-training data are usually huge and not explicitly labeled, while the performance of SSL itself is mainly evaluated by fine-tuning on (a series of) downstream tasks. Below, we discuss representative sub-directions.
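One simple way to exploit a strong pre-trained representation, shown here as an assumed illustration rather than a method from the survey, is to freeze the feature extractor and classify with per-class mean features (a nearest-class-mean classifier). New classes only add new prototypes, so old prototypes never change. The 2-D feature vectors below are hand-written stand-ins for the output of a hypothetical frozen backbone.

```python
import math


class NearestClassMean:
    """Classifier on top of frozen features: one running-mean prototype per class."""

    def __init__(self):
        self.sums = {}
        self.counts = {}

    def update(self, feature, label):
        """Add one feature vector to its class's running sum."""
        if label not in self.sums:
            self.sums[label] = [0.0] * len(feature)
            self.counts[label] = 0
        self.sums[label] = [s + f for s, f in zip(self.sums[label], feature)]
        self.counts[label] += 1

    def predict(self, feature):
        """Return the label whose mean feature is closest in Euclidean distance."""
        def distance(label):
            mean = [s / self.counts[label] for s in self.sums[label]]
            return math.dist(feature, mean)
        return min(self.sums, key=distance)


clf = NearestClassMean()
clf.update([1.0, 0.0], "cat")  # task 1 data (hypothetical frozen features)
clf.update([0.9, 0.1], "cat")
clf.update([0.0, 1.0], "dog")  # task 2 data arrives later
print(clf.predict([0.8, 0.2]), clf.predict([0.1, 0.9]))
```

How well this works depends entirely on how well the frozen representation separates the classes, which is why representation quality is the focus of this direction.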

## Architecture-based methods

The strategies above mainly learn all incremental tasks with a shared set of parameters (i.e., a single model and one parameter space), which is the main cause of inter-task interference. In contrast, constructing task-specific parameters can explicitly resolve this problem. Previous work usually divides this direction into parameter isolation and dynamic architecture, depending on whether the network architecture is fixed. This paper instead focuses on how task-specific parameters are implemented, extending the above concepts to parameter allocation, model decomposition and modular networks (Figure 8).
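Parameter allocation can be sketched with per-task masks over one shared weight vector. In this simplified, hypothetical scheme, each task claims some currently free weights, may read the weights of earlier tasks, but may only update its own; earlier tasks never see later weights, so their outputs cannot change. The "first free weights" policy and the class name are assumptions for illustration.

```python
class MaskedParams:
    """Shared weight vector with per-task masks (simplified parameter allocation)."""

    def __init__(self, size):
        self.weights = [0.0] * size
        self.owner = [None] * size  # which task claimed each weight
        self.masks = {}             # task id -> indices visible to that task

    def allocate(self, task_id, n):
        """Claim the first n free weights for task_id (hypothetical policy)."""
        free = [i for i, o in enumerate(self.owner) if o is None][:n]
        if len(free) < n:
            raise ValueError("not enough free parameters")
        for i in free:
            self.owner[i] = task_id
        # The task sees its own weights plus every weight claimed earlier.
        self.masks[task_id] = [i for i, o in enumerate(self.owner) if o is not None]
        return free

    def apply_gradient(self, task_id, grad, lr=0.1):
        """Update only the weights owned by task_id; all others stay frozen."""
        for i, g in enumerate(grad):
            if self.owner[i] == task_id:
                self.weights[i] -= lr * g

    def forward(self, task_id, x):
        """Linear output using only the weights visible to task_id."""
        return sum(self.weights[i] * x[i] for i in self.masks[task_id])


params = MaskedParams(4)
x = [1.0, 1.0, 1.0, 1.0]
params.allocate(0, 2)
params.apply_gradient(0, [-1.0, -1.0, -1.0, -1.0])  # only weights 0 and 1 move
before = params.forward(0, x)
params.allocate(1, 2)
params.apply_gradient(1, [5.0, 5.0, -1.0, -1.0])    # only weights 2 and 3 move
after = params.forward(0, x)
print(before == after)  # task 0's output is untouched by training on task 1
```

The price of this isolation is capacity: once all weights are claimed, no further task can be allocated without growing the model.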
