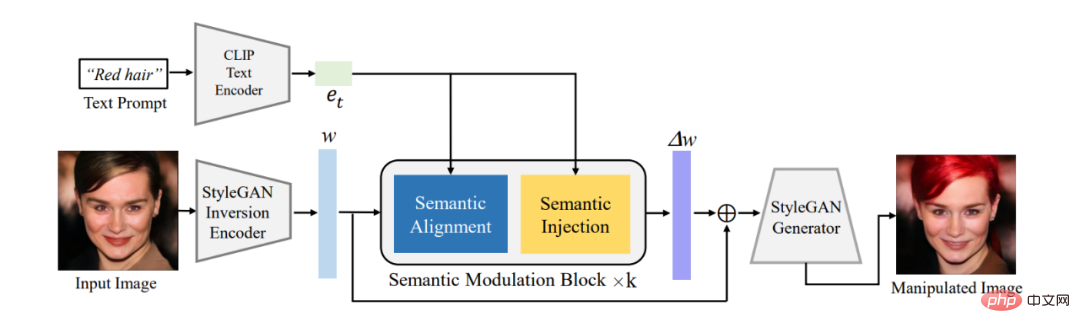

Paper 1: One Model to Edit Them All: Free-Form Text-Driven Image Manipulation with Semantic Modulations

Abstract: This paper first uses an existing encoder to convert the image to be edited into a latent code w in StyleGAN's W+ semantic space, and then uses the proposed semantic modulation module to adaptively modulate that latent code. The semantic modulation module consists of a semantic alignment module and a semantic injection module. It first aligns the semantics of the text encoding with the GAN's latent code through an attention mechanism, and then injects the text information into the aligned latent code, so that the latent code carries the text information and the image can be edited with text.
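The align-then-inject idea described above can be pictured with a small sketch. This is a deliberately simplified illustration and not the paper's architecture: the function name `modulate`, the dot-product attention, the additive injection, and the `strength` parameter are all assumptions made for the example.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def modulate(latents, text, strength=1.0):
    """Hypothetical align-then-inject step.

    latents: one latent vector per generator layer (list of lists).
    text:    a text embedding with the same dimension as each latent vector.
    """
    scale = math.sqrt(len(text))
    # Alignment (assumed form): attention weights that say how strongly
    # the text relates to each layer's latent vector.
    weights = softmax([dot(w, text) / scale for w in latents])
    # Injection (assumed form): shift each layer's vector toward the text,
    # in proportion to its attention weight.
    edited = [
        [wi + strength * a * ti for wi, ti in zip(w, text)]
        for w, a in zip(latents, weights)
    ]
    return edited, weights


# Two toy "layers" and a text embedding that matches the first one.
edited, weights = modulate([[1.0, 0.0], [0.0, 1.0]], [1.0, 0.0])
```

In this toy setup the layer whose latent vector is more similar to the text receives the larger weight and therefore the larger edit, which is the intuition behind aligning before injecting.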

Unlike the classic StyleCLIP model, our model does not need a separate model trained for each text prompt. A single model can respond to many different texts and edit images effectively, so we call it FFCLIP, for Free-Form Text-Driven Image Manipulation. The model also achieves very good results on the classic church, face, and car datasets.

Figure 1: Overall framework diagram

Recommended: A new paradigm for text-driven image editing, in which a single model enables image editing guided by multiple texts.

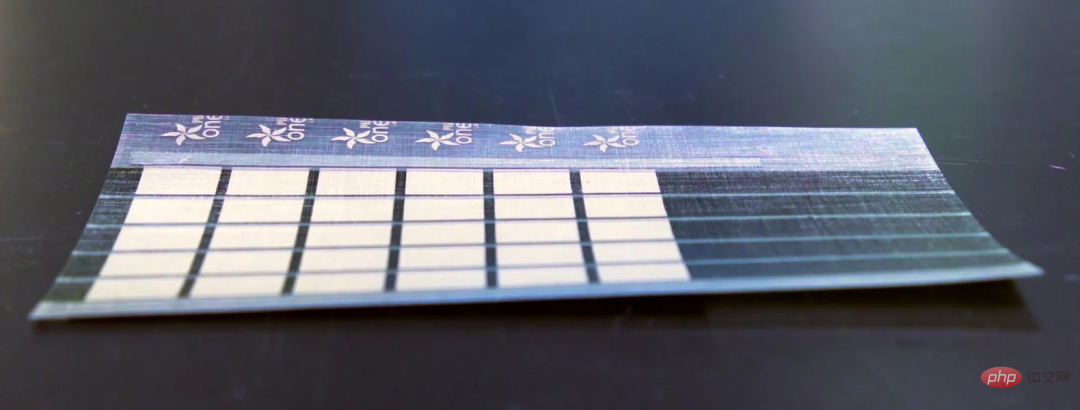

Paper 2: Printed Organic Photovoltaic Modules on Transferable Ultra-thin Substrates as Additive Power Sources

Abstract: MIT researchers have developed a scalable manufacturing technique for producing ultra-thin, lightweight solar cells that can be placed on any surface.

MIT researchers have created solar panels that are thinner than a human hair and provide 18 times more energy per kilogram than current glass- and silicon-based solar panels. These solar panels weigh only one percent as much as traditional photovoltaic cells.

These ultra-thin solar panels can also be installed on boat sails, drone wings, and tents. They are especially useful in remote areas and disaster relief operations.

Recommended: MIT creates paper-thin solar panels.

Paper 3: A Survey of Deep Learning for Mathematical Reasoning

Abstract: In a recently released report, researchers from UCLA and other institutions systematically reviewed the progress of deep learning in mathematical reasoning.

Specifically, the paper discusses various tasks and datasets (Section 2), and examines advances in neural networks (Section 3) and pre-trained language models (Section 4) for mathematics. It also explores the rapid development of in-context learning with large language models for mathematical reasoning (Section 5). The paper further analyzes existing benchmarks and finds that multimodal and low-resource settings receive less attention (Section 6.1). Evidence-based studies show that current numeracy representations are inadequate and that deep learning methods are inconsistent in mathematical reasoning (Section 6.2). Finally, the authors suggest improvements to current work in terms of generalization and robustness, trustworthy reasoning, learning from feedback, and multimodal mathematical reasoning (Section 7).

Recommendation: How deep learning slowly opens the door to mathematical reasoning.

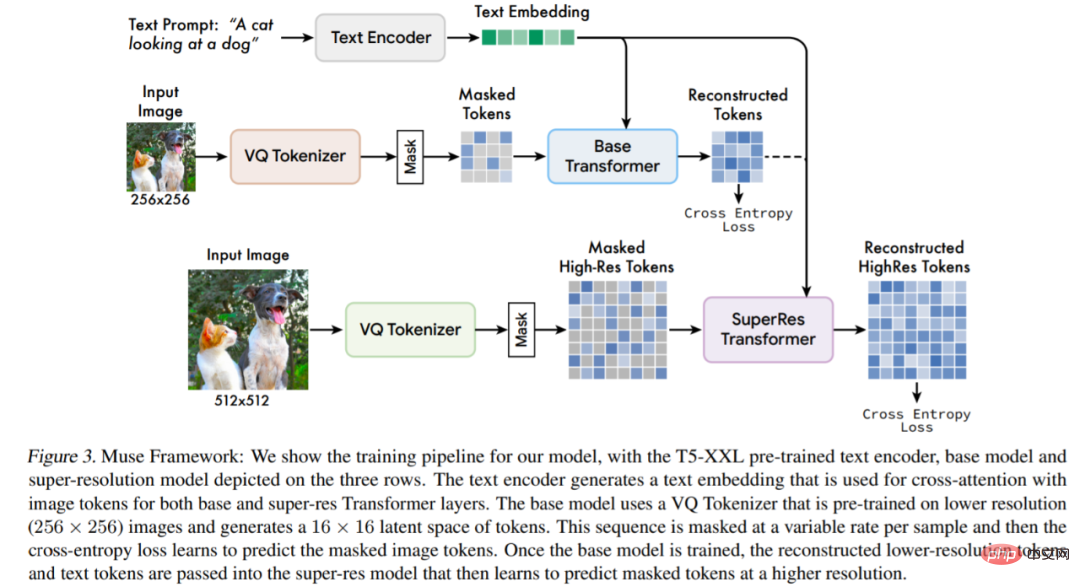

Paper 4: Muse: Text-To-Image Generation via Masked Generative Transformers

Abstract: This study proposes a new model for text-to-image synthesis using a masked image modeling approach, in which the image decoder architecture is conditioned on embeddings from a pre-trained and frozen T5-XXL large language model (LLM) encoder.

Compared with Imagen (Saharia et al., 2022) or Dall-E2 (Ramesh et al., 2022), which are based on cascaded pixel-space diffusion models, Muse is significantly more efficient because it uses discrete tokens. Compared with the SOTA autoregressive model Parti (Yu et al., 2022), Muse is more efficient because it uses parallel decoding.

Based on experimental results on TPU-v4, the researchers estimate that Muse is more than 10 times faster at inference than the Imagen-3B or Parti-3B models, and 2 times faster than Stable Diffusion v1.4 (Rombach et al., 2022). The researchers believe Muse is faster than Stable Diffusion because Stable Diffusion v1.4 uses a diffusion model, which requires considerably more iterations during inference.
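To illustrate why parallel decoding over discrete tokens needs fewer model passes than token-by-token autoregressive decoding, here is a minimal sketch. It is not Muse's actual decoder: the linear unmasking schedule, the confidence-based selection, and the random stand-in `predict` function are assumptions made for illustration.

```python
import math
import random


def parallel_decode(num_tokens, num_steps, predict):
    """Fill `num_tokens` masked slots in at most `num_steps` passes.

    `predict(i)` is a hypothetical stand-in for the model: it returns a
    (token_id, confidence) pair for masked position i.
    """
    tokens = [None] * num_tokens  # None marks a still-masked position
    passes = 0
    for step in range(1, num_steps + 1):
        masked = [i for i, t in enumerate(tokens) if t is None]
        if not masked:
            break
        # One pass proposes a token for every masked position at once.
        proposals = {i: predict(i) for i in masked}
        passes += 1
        # Assumed linear schedule: after pass `step`, a fraction
        # step / num_steps of all positions should be fixed.
        target_filled = math.ceil(num_tokens * step / num_steps)
        keep = target_filled - (num_tokens - len(masked))
        # Keep only the most confident proposals; the rest stay masked.
        best = sorted(masked, key=lambda i: proposals[i][1], reverse=True)[:keep]
        for i in best:
            tokens[i] = proposals[i][0]
    return tokens, passes


# Random stand-in model over a vocabulary of 1024 token ids (illustrative only).
rng = random.Random(0)
tokens, passes = parallel_decode(16, 4, lambda i: (rng.randrange(1024), rng.random()))
```

With 16 tokens and 4 steps, this loop calls the stand-in model in 4 passes, whereas generating the same 16 tokens one at a time would take 16 passes.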

Model architecture overview.

Recommendation: The inference speed is 2 times faster than Stable Diffusion, and the generation and repair of images can be done with one Google model.

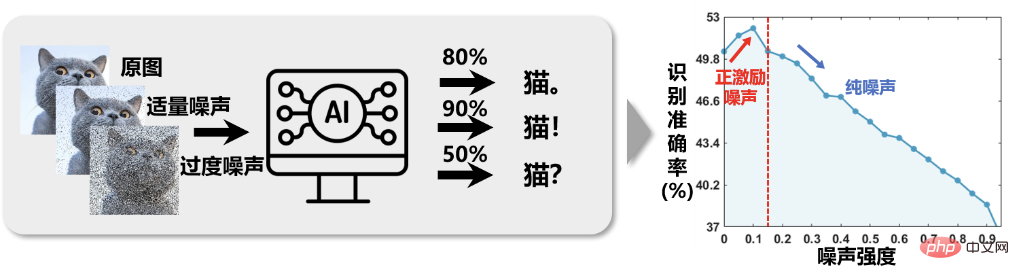

Paper 5: Positive-Incentive Noise

Abstract: Noise is abundant in every aspect of scientific research: instrument errors caused by insufficient instrument precision, deviations caused by human operating errors, information distortion caused by external interference such as extreme environments, and so on. Researchers commonly assume that noise adversely affects the task at hand, and a large body of research has therefore grown up around the core task of "noise reduction". However, while performing signal detection and processing tasks, the team of Professor Li Xuelong of Northwestern Polytechnical University questioned this assumption on the basis of experimental observations: is noise in scientific research really always harmful?

As shown in Figure 1, in an intelligent image classification system, recognition accuracy increases when an appropriate amount of noise is added to the images before training. This suggests that adding some noise to an image, rather than removing it, before performing image classification may give better results. As long as the impact of the noise on the target is much smaller than its impact on the background, the trade-off of "killing a thousand of the enemy (background noise) at the cost of eight hundred of your own (target signal)" is worthwhile, because the task pursues a high signal-to-noise ratio. In essence, for traditional classification problems, randomly adding moderate noise to the features is equivalent to increasing the feature dimension. In a sense, it is similar to applying a kernel function to the features: it performs a mapping from a low-dimensional space to a high-dimensional space, which makes the data more separable and thus improves classification.

Figure 1: Image recognition accuracy shows a "counter-intuitive" relationship of "first increasing and then decreasing" as the image noise intensity increases.

Recommendation: Professor Li Xuelong of Northwestern Polytechnical University proposes a mathematical analysis framework based on task entropy.

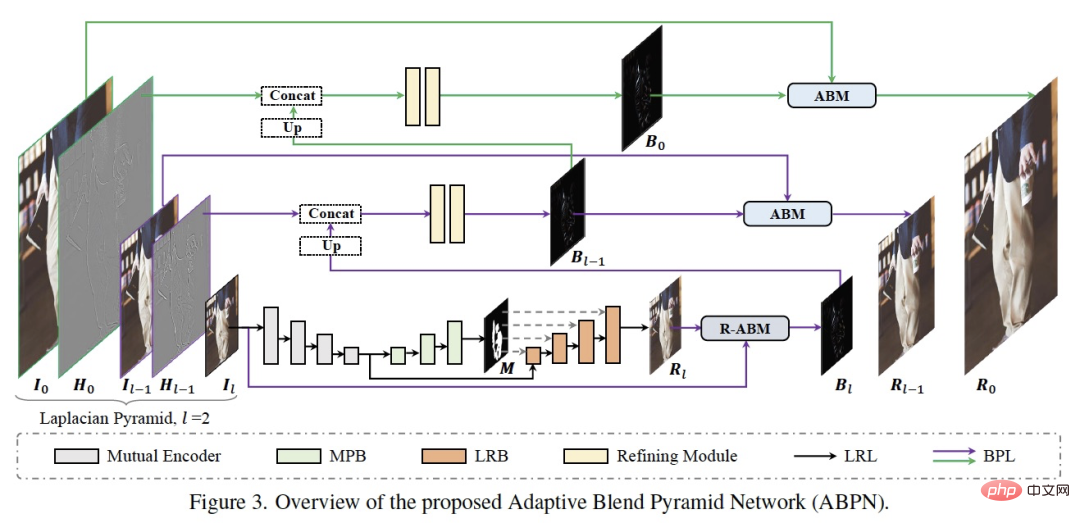

Paper 6: ABPN: Adaptive Blend Pyramid Network for Real-Time Local Retouching of Ultra High-Resolution Photo

Abstract: Taking professional-level intelligent skin retouching as their starting point, researchers from DAMO Academy developed ABPN, an ultra-fine local retouching algorithm for high-definition images, which has achieved good results and seen practical use in skin beautification and clothing wrinkle removal on ultra-high-definition images.

Recommended: Erase blemishes and wrinkles with one click.

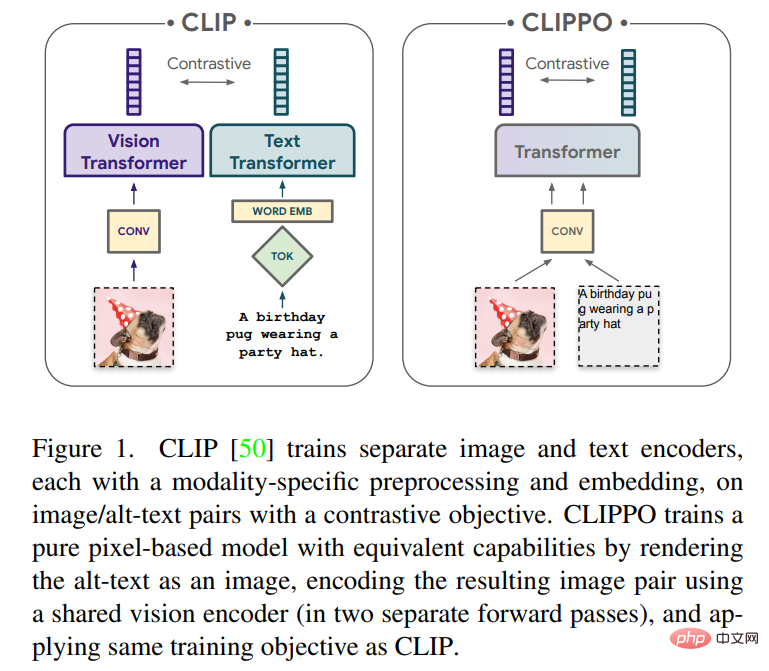

Paper 7: Image-and-Language Understanding from Pixels Only

Abstract: Developing a single end-to-end model that can handle any modality or combination of modalities would be an important step for multimodal learning. In this paper, researchers from Google Research (Google Brain team) in Zurich focus mainly on images and text, exploring multimodal learning of text and images with a purely pixel-based model. The model is a single Vision Transformer that processes visual input, text, or both together, all rendered as RGB images. All modalities share the same model parameters, including low-level feature processing; that is, there are no modality-specific initial convolutions, tokenization algorithms, or input embedding tables. The model is trained on only one task: contrastive learning, as popularized by CLIP and ALIGN. The model is therefore called CLIP-Pixels Only (CLIPPO).
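For readers unfamiliar with the contrastive objective popularized by CLIP, the following is a minimal sketch of a symmetric contrastive loss over a batch of paired embeddings. It is a generic illustration, not CLIPPO's training code: the function names and the temperature value of 0.07 are assumptions chosen for the example.

```python
import math


def _mean_diag_nll(sim):
    """Mean negative log-softmax of the diagonal entries, row by row."""
    total = 0.0
    for i, row in enumerate(sim):
        m = max(row)
        log_z = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_z - row[i]
    return total / len(sim)


def contrastive_loss(image_emb, text_emb, temperature=0.07):
    """Symmetric contrastive loss; row i of each input is a matching pair."""

    def normalize(v):
        norm = math.sqrt(sum(x * x for x in v))
        return [x / norm for x in v]

    imgs = [normalize(v) for v in image_emb]
    txts = [normalize(v) for v in text_emb]
    # Cosine similarity of every image with every text, scaled by temperature.
    sim = [
        [sum(a * b for a, b in zip(img, txt)) / temperature for txt in txts]
        for img in imgs
    ]
    sim_t = [list(col) for col in zip(*sim)]
    # Average the image-to-text and text-to-image directions.
    return 0.5 * (_mean_diag_nll(sim) + _mean_diag_nll(sim_t))


# Toy batch in which each image embedding matches its own text embedding.
loss_matched = contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
# The same batch with the texts swapped, so every pair is mismatched.
loss_swapped = contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
```

The loss is low when each embedding is most similar to its own partner and high when the pairs are mismatched. In a pixels-only setup like the one described above, both embeddings would come from the same encoder applied to an image and to text rendered as an image.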

Recommendation: With half the parameters and performance on par with CLIP, a Vision Transformer unifies image and text starting from pixels.

Heart of Machine cooperates with ArXiv Weekly Radiostation, initiated by Chu Hang and Luo Ruotian, to select more important papers of the week beyond the 7 Papers above, including 10 selected papers in each of NLP, CV, and ML, with abstract introductions of the papers provided in audio form. The details are as follows:

10 NLP Papers

This week’s 10 selected NLP papers are:

1. Rethinking with Retrieval: Faithful Large Language Model Inference. (from Hongming Zhang, Dan Roth)
2. Understanding Political Polarization using Language Models: A dataset and method. (from Bhiksha Raj)
3. Towards Table-to-Text Generation with Pretrained Language Model: A Table Structure Understanding and Text Deliberating Approach. (from Hui Xiong)
4. Examining Political Rhetoric with Epistemic Stance Detection. (from Brendan O'Connor)
5. Towards Knowledge-Intensive Text-to-SQL Semantic Parsing with Formulaic Knowledge. (from Min-Yen Kan)
6. Leveraging World Knowledge in Implicit Hate Speech Detection. (from Jessica Lin)
7. Neural Codec Language Models are Zero-Shot Text to Speech Synthesizers. (from Furu Wei)
8. EZInterviewer: To Improve Job Interview Performance with Mock Interview Generator. (from Tao Zhang)
9. Memory Augmented Lookup Dictionary based Language Modeling for Automatic Speech Recognition. (from Yuxuan Wang)
10. Parameter-Efficient Fine-Tuning Design Spaces. (from Diyi Yang)

10 CV Papers

This week’s 10 selected CV papers are:

2. Mapping smallholder cashew plantations to inform sustainable tree crop expansion in Benin. (from Vipin Kumar)
3. Scale-MAE: A Scale-Aware Masked Autoencoder for Multiscale Geospatial Representation Learning. (from Trevor Darrell)
4. STEPs: Self-Supervised Key Step Extraction from Unlabeled Procedural Videos. (from Rama Chellappa)
5. Muse: Text-To-Image Generation via Masked Generative Transformers. (from Ming-Hsuan Yang, Kevin Murphy, William T. Freeman)
6. Understanding Imbalanced Semantic Segmentation Through Neural Collapse. (from Xiangyu Zhang, Jiaya Jia)
7. Cross Modal Transformer via Coordinates Encoding for 3D Object Detection. (from Xiangyu Zhang)
8. Learning Road Scene-level Representations via Semantic Region Prediction. (from Alan Yuille)
9. Learning by Sorting: Self-supervised Learning with Group Ordering Constraints. (from Bernt Schiele)
10. AttEntropy: Segmenting Unknown Objects in Complex Scenes using the Spatial Attention Entropy of Semantic Segmentation Transformers. (from Pascal Fua)

10 ML Papers

This week’s 10 selected ML papers are:

1. Self-organization Preserved Graph Structure Learning with Principle of Relevant Information. (from Philip S. Yu)
2. Modified Query Expansion Through Generative Adversarial Networks for Information Extraction in E-Commerce. (from Altan Cakir)
3. Disentangled Explanations of Neural Network Predictions by Finding Relevant Subspaces. (from Klaus-Robert Müller)
4. L-HYDRA: Multi-Head Physics-Informed Neural Networks. (from George Em Karniadakis)
5. On Transforming Reinforcement Learning by Transformer: The Development Trajectory. (from Dacheng Tao)
6. Boosting Neural Networks to Decompile Optimized Binaries. (from Kai Chen)
7. NeuroExplainer: Fine-Grained Attention Decoding to Uncover Cortical Development Patterns of Preterm Infants. (from Dinggang Shen)
8. A Theory of Human-Like Few-Shot Learning. (from Ming Li)
9. Temporal Difference Learning with Compressed Updates: Error-Feedback meets Reinforcement Learning. (from George J. Pappas)
10. Estimating Latent Population Flows from Aggregated Data via Inversing Multi-Marginal Optimal Transport. (from Hongyuan Zha)
