The emergence of the new coronavirus has really opened Pandora’s box.

New variants keep emerging and disrupting lives around the world. Life without masks, as it was before COVID-19, may never fully return.

Recently, scientists reported a finding that may one day let us say goodbye to throat swabs.

At the European Respiratory Society International Conference in Barcelona, Spain, a study showed that AI can determine whether a user has been infected with COVID-19 through sounds collected by mobile phone applications.

According to News Medical’s report, the AI model used in this study is cheaper, faster, and easier to use than rapid antigen testing, making it ideal for low-income countries where PCR testing is expensive.

The AI also has another advantage: accuracy. The researchers report that it is correct in 89% of cases, which compares favorably with rapid antigen tests.

The research team used data from the University of Cambridge's crowdsourced COVID-19 Sounds app, which contained 893 audio samples from 4,352 healthy and non-healthy participants. The findings suggest that simple voice recordings and AI algorithms can accurately determine who is infected with COVID-19.

The editor thought he had discovered a treasure of an app. After downloading it with great expectations, he found that the app, which has a rating of 2.8, is currently used only to collect data.

High-EQ version: You have contributed to the development of science.

Low-EQ version: This software is useless for now.

Ms. Wafaa Aljbawi, a researcher at the Data Science Institute of Maastricht University in the Netherlands, said at the conference that the AI model is accurate in 89% of cases, while the accuracy of lateral flow tests varies by brand. Lateral flow tests are also much less accurate at detecting infection in asymptomatic people.

“These promising results suggest that simple recordings and fine-tuned AI algorithms may achieve high accuracy in determining which patients are infected with COVID-19. Such tests are freely available and easy to interpret. Additionally, they enable remote virtual testing with turnaround times of less than a minute. For example, they could be used at entry points to large gatherings, allowing for rapid screening of crowds.”

Wafaa Aljbawi, Researcher, Data Science Institute, Maastricht University

This result is exciting. It means that, with basic voice recordings and customized AI algorithms, COVID-19 infections can be identified with high accuracy, for free and with little effort. The editor rubbed his hands excitedly: does this mean the days of being swabbed every three days are over?

The principle of this method is: After being infected with COVID-19, a person’s upper respiratory tract and vocal cords will be affected, thereby changing the voice.

To verify the feasibility of this method, Dr. Visara Urovi, also from the Data Science Institute, and Dr. Sami Simons, a pulmonologist at Maastricht University Medical Center, also took part in the study.

They used data from the University of Cambridge's crowdsourced COVID-19 Sounds app, comprising 893 audio samples from 4,352 healthy and non-healthy participants, 308 of whom had tested positive for COVID-19.

To take the test, users download the app to their mobile phone and record respiratory sounds: they cough three times, breathe deeply through the mouth three to five times, and then read a short sentence on the screen three times.

The researchers used a speech analysis technique called Mel spectrogram analysis, which identifies different voice characteristics such as loudness, power, and variation over time.
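A Mel spectrogram groups audio energy into frequency bands spaced evenly on the mel scale, which is finer at low frequencies and coarser at high ones. The sketch below only illustrates that spacing; it is not the researchers' pipeline. It assumes the commonly used HTK-style mel formula, and the function names, band count, and frequency range are hypothetical choices made for illustration.

```python
import math


def hz_to_mel(freq_hz):
    """Convert hertz to mels (HTK-style formula, assumed here)."""
    return 2595.0 * math.log10(1.0 + freq_hz / 700.0)


def mel_to_hz(mel):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_band_centers(n_bands, f_min, f_max):
    """Center frequencies (Hz) of bands spaced evenly on the mel scale."""
    lo, hi = hz_to_mel(f_min), hz_to_mel(f_max)
    step = (hi - lo) / (n_bands + 1)
    return [mel_to_hz(lo + step * (i + 1)) for i in range(n_bands)]


# Hypothetical settings: 8 bands between 0 Hz and 8 kHz.
centers = mel_band_centers(n_bands=8, f_min=0.0, f_max=8000.0)
for c in centers:
    print(f"{c:8.1f} Hz")
```

The printed centers sit close together at the low end and spread out toward 8 kHz, which is why a Mel spectrogram gives more resolution to the frequency range where most voice detail lives.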

"In this way, we can break down many attributes of the subject's voice," Ms Aljbawi said. "To distinguish the voices of people with COVID-19 from those without the disease, we built different artificial intelligence models and evaluated which model was best suited to classify COVID-19 cases."

They found that one model, called long short-term memory (LSTM), significantly outperformed the others. LSTM is a type of neural network, a model loosely inspired by the way the human brain works that identifies underlying relationships in data. Because it operates on sequences and can keep information from earlier steps in memory, it is well suited to modeling signals collected over time, such as speech.
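To make the "memory" idea concrete, here is a minimal sketch of a single-unit LSTM processing a short sequence. It uses the standard LSTM gate equations, but everything else is an assumption for illustration: the weights are made-up numbers rather than trained values, the names are hypothetical, and the study's actual model would use many units and real audio features.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, weights):
    """One time step of a single-unit LSTM.

    weights maps each gate name to a tuple (w_x, w_h, bias).
    """
    def pre(gate):
        w_x, w_h, bias = weights[gate]
        return w_x * x + w_h * h_prev + bias

    forget = sigmoid(pre("forget"))       # how much old memory to keep
    write = sigmoid(pre("input"))         # how much new information to store
    expose = sigmoid(pre("output"))       # how much memory to reveal
    candidate = math.tanh(pre("candidate"))

    c = forget * c_prev + write * candidate  # updated cell memory
    h = expose * math.tanh(c)                # updated hidden state
    return h, c


def run_lstm(sequence, weights):
    """Feed a sequence through the cell and return the final hidden state."""
    h, c = 0.0, 0.0
    for x in sequence:
        h, c = lstm_step(x, h, c, weights)
    return h


# Made-up weights for illustration only (not trained values).
WEIGHTS = {
    "forget": (0.5, 0.1, 1.0),
    "input": (0.8, -0.2, 0.0),
    "output": (0.3, 0.4, 0.0),
    "candidate": (1.2, 0.5, 0.0),
}

frames = [0.1, 0.4, -0.2, 0.7]  # stand-in for per-frame audio features
final_state = run_lstm(frames, WEIGHTS)
print(final_state)
```

The cell memory `c` is carried from one frame to the next, so the final state depends on the whole sequence and on the order of its frames, not just on the last input.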

Its overall accuracy is 89%, its ability to correctly identify positive cases (true positive rate or "sensitivity") is 89%, and its ability to correctly identify negative cases (true negative rate or "specificity") is 83%.

These results demonstrate a significant improvement in the accuracy of the LSTM model in diagnosing COVID-19 compared to state-of-the-art tests such as lateral flow testing.

The comparison results can be summarized in one sentence: the LSTM model has a higher recognition rate for positives, but it is also more likely to misdiagnose negatives as positives.

Specifically, lateral flow tests have a sensitivity of only 56% but a higher specificity of 99.5%, so lateral flow tests will more frequently mistake positives for negatives. Using the LSTM model might miss 11 out of 100 cases, while the lateral flow test would miss 44 out of 100 cases.

The high specificity of the lateral flow test means that fewer than 1 in 100 negatives will be misdiagnosed as positive, while the LSTM test has a higher misdiagnosis rate: 17 of 100 negatives will be misdiagnosed as positive. However, since the test is effectively free, people can be given a PCR test whenever the LSTM shows a positive result, so the practical impact of these false positives is limited.
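The "per 100" figures follow directly from the reported sensitivity and specificity: missed cases are (1 - sensitivity) of the infected, and false alarms are (1 - specificity) of the uninfected. A small sketch of that arithmetic, using the percentages quoted in the article (the function name is hypothetical):

```python
def errors_per_100(sensitivity, specificity):
    """Return (missed infections per 100 infected, false alarms per 100 uninfected)."""
    missed = round((1.0 - sensitivity) * 100, 1)
    false_alarms = round((1.0 - specificity) * 100, 1)
    return missed, false_alarms


lstm = errors_per_100(sensitivity=0.89, specificity=0.83)
lateral_flow = errors_per_100(sensitivity=0.56, specificity=0.995)

print("LSTM:         missed %.1f, false alarms %.1f" % lstm)
print("Lateral flow: missed %.1f, false alarms %.1f" % lateral_flow)
```

The output shows the trade-off in one place: the LSTM misses far fewer infections (11 versus 44 per 100) but raises more false alarms (17 versus 0.5 per 100).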

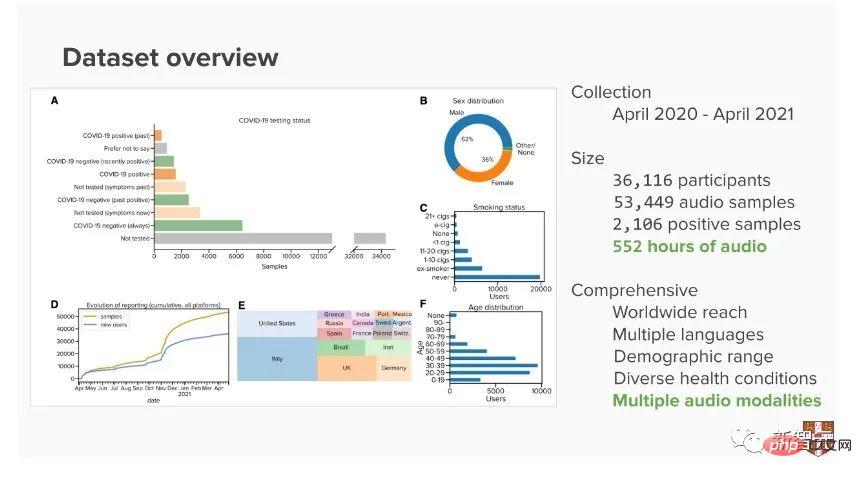

The researchers are still validating their results, and they have a large amount of data to work with. Since the project began, they have collected 53,449 audio samples from 36,116 individuals, which can be used to improve and validate the model's accuracy. They are also conducting further research to determine which other speech factors affect the AI model.

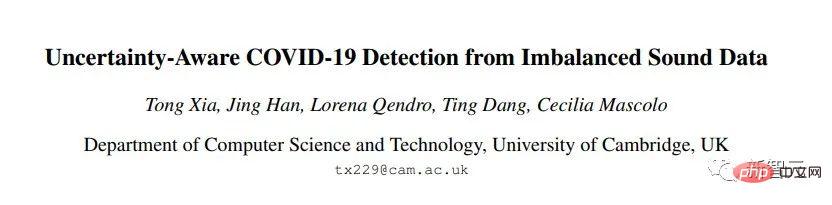

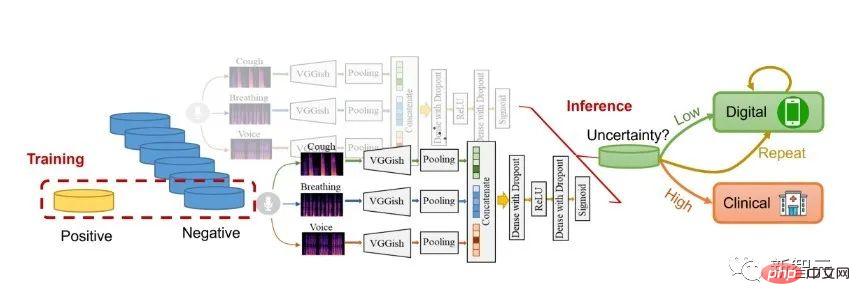

In June 2021, researchers began exploring the extent to which AI models can be trusted when used as automated screening tools for COVID-19. In this paper accepted by INTERSPEECH 2021, they try to combine uncertainty estimation with a deep learning model to detect COVID-19 from sound.

Paper address: https://arxiv.org/pdf/2104.02005.pdf

In the paper, the researchers analyzed a subset of 330 positive and 919 negative subjects.

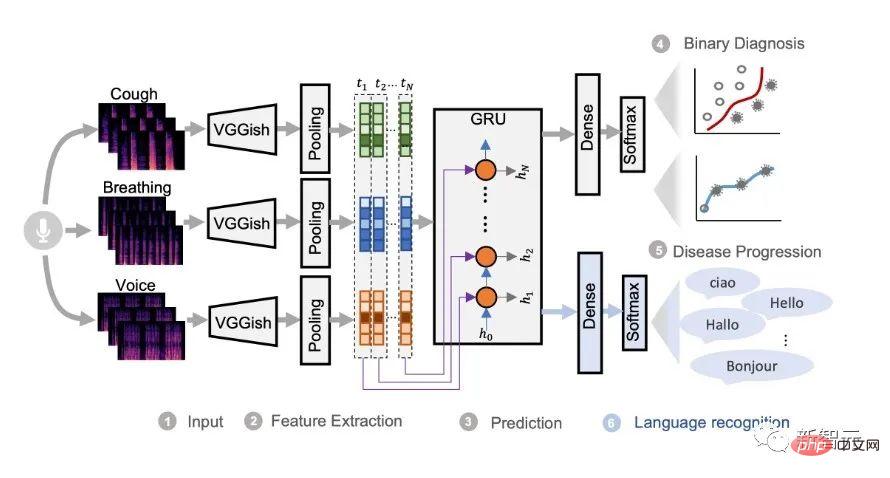

They propose an ensemble learning framework that addresses the common problem of data imbalance during training and provides predictive uncertainty during inference, expressed as the variance of the predictions produced by the ensemble of models. The backbone model is a pretrained convolutional network named VGGish, modified to receive spectrograms of three sounds as input.
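The two ideas in this framework can be sketched in a few lines. This is an illustration under stated assumptions, not the paper's implementation: it assumes each ensemble member is trained on all positives plus an equally sized sample of negatives, and that uncertainty is the population variance of the members' predicted probabilities. The function names and participant IDs are hypothetical; the counts (330, 919, 10 models) are the ones quoted in the article.

```python
import random
import statistics


def balanced_subsets(positives, negatives, n_models, seed=0):
    """Build one balanced training set per model (assumed sampling scheme)."""
    rng = random.Random(seed)
    shuffled = negatives[:]
    rng.shuffle(shuffled)
    chunk = len(positives)
    subsets = []
    for k in range(n_models):
        start = (k * chunk) % len(shuffled)
        # Take `chunk` negatives, wrapping around the shuffled list.
        picked = [shuffled[(start + j) % len(shuffled)] for j in range(chunk)]
        subsets.append(positives + picked)
    return subsets


def ensemble_predict(probabilities):
    """Combine per-model probabilities into (mean prediction, variance)."""
    mean = statistics.fmean(probabilities)
    variance = statistics.pvariance(probabilities)
    return mean, variance


positives = [f"pos_{i}" for i in range(330)]
negatives = [f"neg_{i}" for i in range(919)]
subsets = balanced_subsets(positives, negatives, n_models=10)
print(len(subsets), len(subsets[0]))

# Made-up outputs from 5 models for one recording.
print(ensemble_predict([0.81, 0.77, 0.85, 0.79, 0.83]))
```

Each model sees a balanced set, so no single model is dominated by the negative class, and disagreement between the models becomes a usable uncertainty signal.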

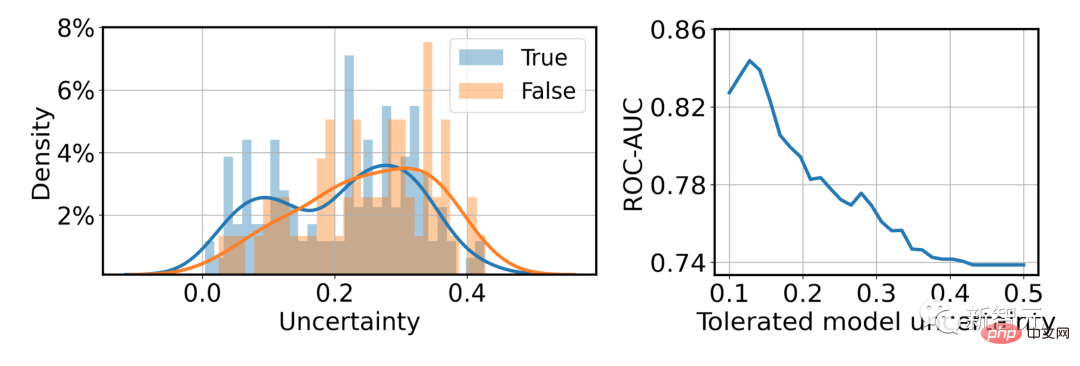

In this work, 10 deep learning models were trained and aggregated into an ensemble model, yielding an AUC of 0.74, a sensitivity of 0.68, and a specificity of 0.69, outperforming each individual model. On the one hand, this verifies the superiority of deep learning over hand-crafted features for audio-based COVID-19 detection. On the other hand, it shows that an ensemble of SVMs also improves on a single SVM model, since the samples are used more efficiently.

Wrong predictions typically produce higher uncertainty (see top left image), so an empirical uncertainty threshold can be used to advise the user to repeat the audio test on the phone, or to seek additional clinical testing if the digital diagnosis still fails (see image above right). By incorporating uncertainty into automated diagnostic systems, better risk management and more robust decision-making can be achieved.
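The referral rule described here can be sketched as a simple threshold check on the ensemble's variance. The thresholds, function name, and return strings below are hypothetical; the paper's actual threshold is chosen empirically and is not given in this article.

```python
import statistics


def triage(probabilities, decision_threshold=0.5, uncertainty_threshold=0.05):
    """Turn per-model probabilities into a screening recommendation.

    Both thresholds are illustrative assumptions, not values from the paper.
    """
    mean = statistics.fmean(probabilities)
    variance = statistics.pvariance(probabilities)
    if variance > uncertainty_threshold:
        # The models disagree too much to trust the digital result.
        return "uncertain: repeat the recording or seek clinical testing"
    if mean >= decision_threshold:
        return "likely positive"
    return "likely negative"


print(triage([0.90, 0.92, 0.88]))  # models agree on a high probability
print(triage([0.10, 0.12, 0.08]))  # models agree on a low probability
print(triage([0.10, 0.90, 0.50]))  # models disagree
```

Only the third call is referred onward, because the spread between the models is large even though their average sits exactly at the decision boundary.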

In November 2021, the researchers released a comprehensive large-scale COVID-19 audio dataset, consisting of 53,449 audio samples (over 552 hours in total) crowdsourced from 36,116 participants. The accompanying paper was accepted for publication in the NeurIPS 2021 Dataset Track.

In the paper, the researchers demonstrate ROC-AUC performance exceeding 0.7 on the tasks of respiratory symptom prediction and COVID-19 prediction, confirming the promise of machine learning methods based on these types of data sets.
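For readers unfamiliar with the metric, ROC-AUC can be read as the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one. The sketch below computes it by direct pairwise comparison; the function name and the toy scores are made up for illustration and are not data from the paper.

```python
def roc_auc(labels, scores):
    """ROC-AUC as the fraction of (positive, negative) pairs ranked correctly.

    Ties count as half a correct pair.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Toy example: one positive (0.3) is scored below one negative (0.4).
print(roc_auc([1, 1, 0, 0], [0.9, 0.3, 0.4, 0.1]))
```

A value of 0.5 corresponds to random guessing and 1.0 to perfect ranking, so a score above 0.7 indicates a real but imperfect signal in the audio.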

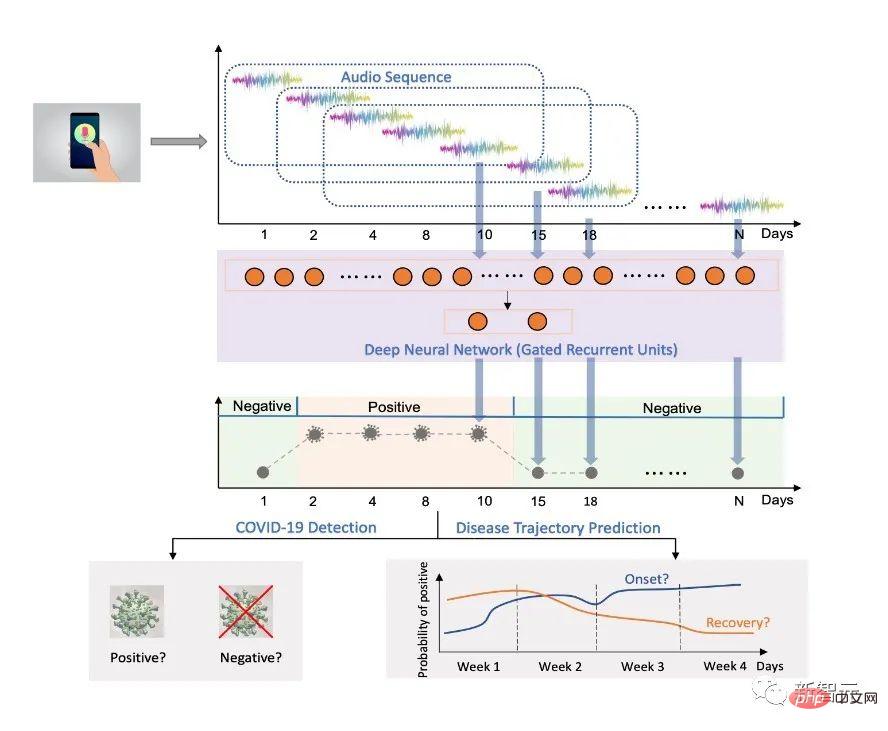

In June 2022, the researchers explored the potential of longitudinal audio samples, collected over time, for predicting COVID-19 progression, and in particular for predicting recovery trends using sequential deep learning. The paper was published in JMIR, a journal in the field of digital medicine and health. This study is arguably the first work to explore longitudinal audio dynamics for COVID-19 disease progression prediction.

Paper address: https://www.jmir.org/2022/6/e37004

To explore the audio dynamics of an individual's historical audio biomarkers, the researchers developed and validated a deep learning method that uses gated recurrent units (GRU) to detect COVID-19 disease progression.

The proposed model includes a pre-trained convolutional network named VGGish to extract high-level audio information, and GRU to capture the temporal dependence of longitudinal audio samples.

The study found that the proposed system performed well in distinguishing COVID-19 positive and negative audio samples.

Chinese scholars such as Ting Dang, Jing Han, and Tong Xia also contributed to this series of studies.

Perhaps we are not far from the day when we can detect COVID-19 with an app.
