Nearly ten thousand people watched Hinton's latest talk on the Forward-Forward neural network training algorithm; the paper is now public


The NeurIPS 2022 conference is in full swing. Experts and scholars from many backgrounds are discussing subfields such as deep learning, computer vision, large-scale machine learning, learning theory, optimization, and sparsity theory.

At the conference, Turing Award winner and deep learning pioneer Geoffrey Hinton was invited to give a talk in recognition of "ImageNet Classification with Deep Convolutional Neural Networks", the paper he co-wrote ten years ago with his graduate students Alex Krizhevsky and Ilya Sutskever, which received the Test of Time Award for its "tremendous impact" on the field. Published in 2012, this work marked the first time a convolutional neural network won the ImageNet image recognition competition, doing so by a wide margin, and it was a key event that launched the third wave of artificial intelligence.

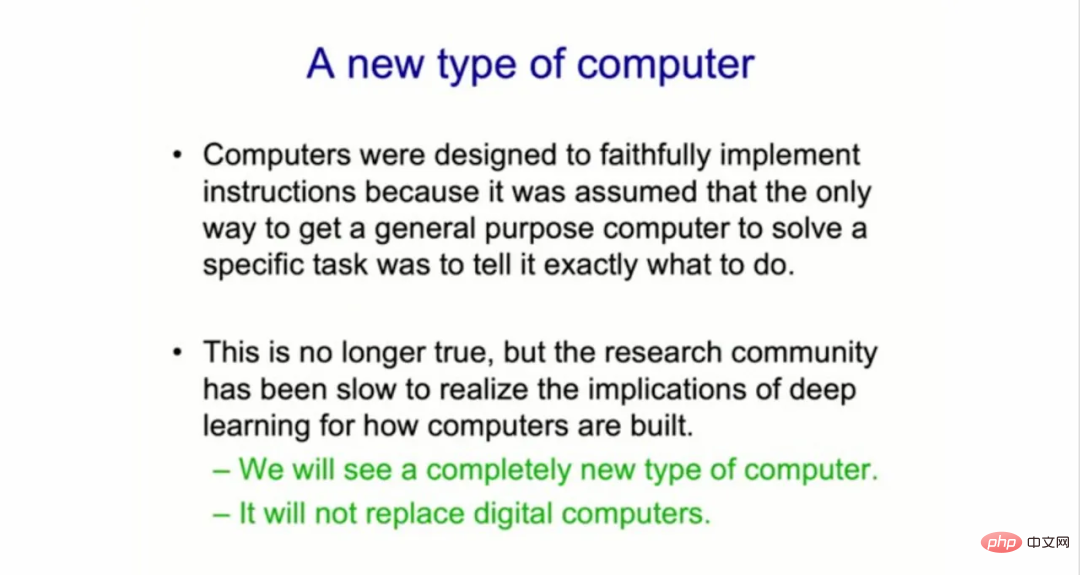

The theme of Hinton's talk was "The Forward-Forward Algorithm for Training Deep Neural Networks". In it, Geoffrey Hinton said, "The machine learning research community has been slow to realize the impact of deep learning on the way computers are built." He believes that AI in the form of machine learning will trigger a revolution in computer systems: a new combination of software and hardware that puts AI "into your toaster".

He continued, "I think we're going to see a completely different kind of computer, although not for a few years. But there are good reasons to work on this completely different kind of computer."

Building a completely different new type of computer

All digital computers to date have been built to be "immortal": the hardware is designed to be extremely reliable so that the same software can run anywhere. "We can run the same program on different physical hardware, and the knowledge is immortal."

Hinton said that this design requirement means digital computers miss out on "all sorts of variable, stochastic, flaky, analog, unreliable properties of the hardware" that might be very useful to us.

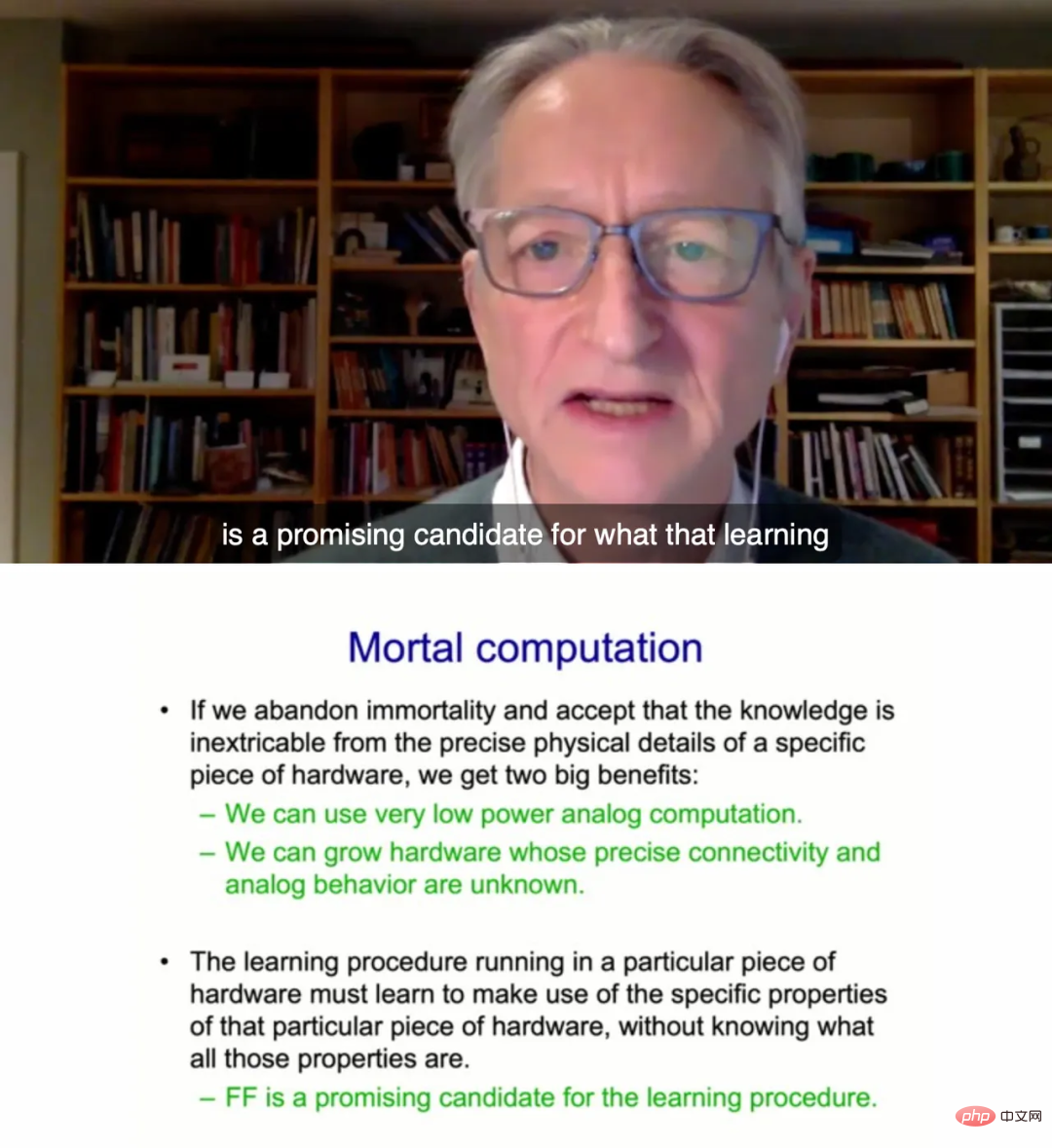

In Hinton's view, future computer systems will take a different approach: they will be "neuromorphic" and "mortal". Each such computer will be a tight marriage of neural network software and messy hardware, messy in the sense of having analog rather than digital components, which can contain an element of uncertainty and can evolve over time.

Hinton explained, "The alternative now is that we would give up the separation of hardware and software, but computer scientists really don't like that."

So-called mortal computation means that the knowledge a system learns is inseparable from its hardware. Such mortal computers could be "grown" rather than manufactured in expensive chip fabrication plants.

Hinton pointed out that if we do this, we can use extremely low-power analog computation and achieve terascale parallelism by using memristors as weights. (The memristor is a decades-old experimental circuit element based on nonlinear circuit components.) We could also grow hardware without understanding the precise behavior of its individual parts.
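For readers unfamiliar with memristor weights, the standard picture is a crossbar array: each weight is stored as the conductance G_ij at the crossing of input row i and output column j, inputs are applied as row voltages V_i, and Ohm's law together with Kirchhoff's current law make each column wire sum its currents. The array therefore computes a matrix-vector product in a single physical step, which is where the low power and massive parallelism come from. The notation below is ours, not Hinton's.

```latex
I_j = \sum_{i} G_{ij}\, V_i
\qquad\Longleftrightarrow\qquad
\mathbf{I} = G^{\top}\mathbf{V}
```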

However, Hinton also said that the new mortal computer will not replace the traditional digital computer. "It is not the computer that will be in charge of your bank account and know exactly how much money you have."

Instead, this kind of computer would be used to put (that is, to embody) other things: "It could put something like GPT-3 into your toaster for a dollar," so that you could talk to your toaster on just a few watts of power.

FF networks suited to mortal computing hardware

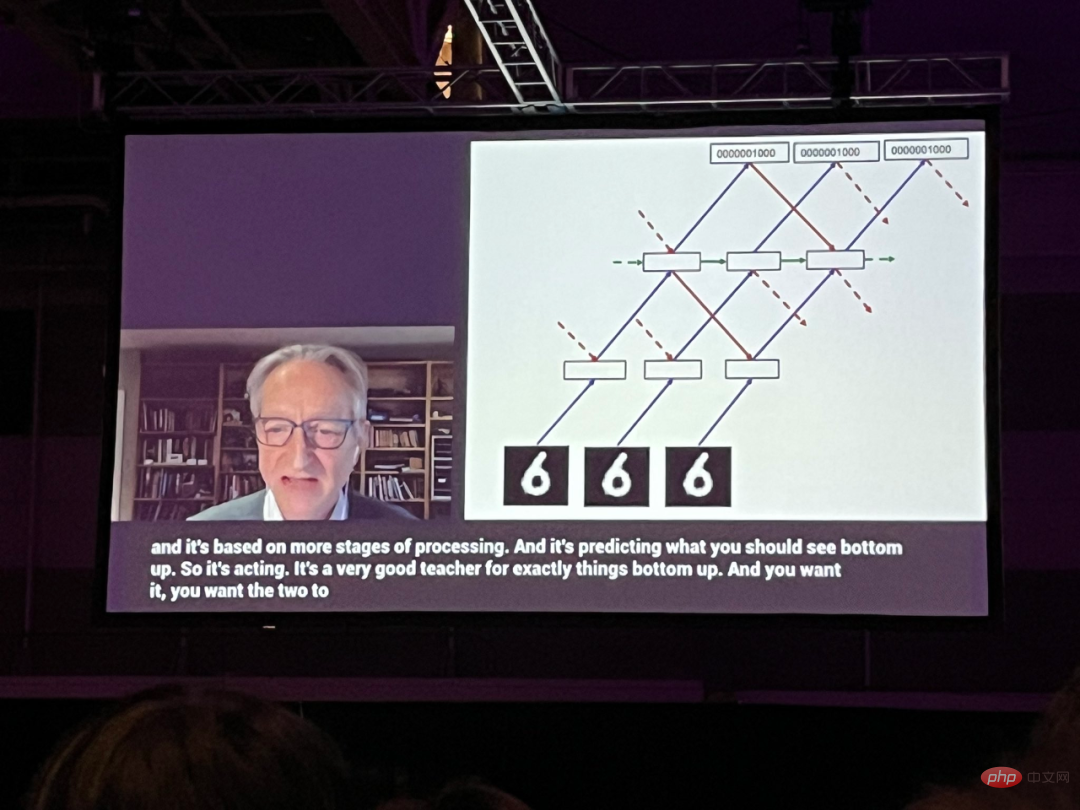

Hinton spent most of the talk on a new neural network method he calls the Forward-Forward (FF) network, which replaces the backpropagation technique used in almost all neural networks. Hinton suggested that by removing backpropagation, FF networks might more plausibly approximate what actually happens in the brain.

A draft of the paper is posted on Hinton's homepage at the University of Toronto:

Paper link: https://www.cs.toronto.edu/~hinton/FFA13.pdf

Hinton said that the FF method may be well suited to mortal computing hardware. "To do something like this currently, we need a learning procedure that will run on a particular piece of hardware, and it has to learn to exploit the specific properties of that hardware without knowing what all those properties are. But I think the forward-forward algorithm is a promising candidate." One obstacle to building new analog computers, he said, is the importance placed on running the same piece of software reliably on millions of devices. "Each of these phones would have to start out as a baby phone, and it would have to learn how to be a phone," Hinton said. "And that's very painful."

Even the most skilled engineers are reluctant to abandon the paradigm of perfect, identical immortal computers for fear of uncertainty.

Hinton said: "There are still very few people interested in analog computing who are willing to give up immortality. That is because of an attachment to consistency and predictability. But if you want analog hardware to do the same thing every time, sooner or later you run into real problems with all this messiness."

Paper content

In the paper, Hinton introduced a new learning procedure for neural networks and demonstrated experimentally that it works well enough on some small problems. The details are as follows.

What are the problems with backpropagation?

The success of deep learning over the past decade has established the effectiveness of performing stochastic gradient descent with large numbers of parameters and large amounts of data. The gradients are usually computed with backpropagation, which has prompted interest in whether the brain implements backpropagation or whether it has some other way of obtaining the gradients needed to adjust connection weights.

As a model of how the cerebral cortex learns, backpropagation remains implausible, despite considerable effort to devise ways in which real neurons could implement it. There is currently no convincing evidence that the cortex explicitly propagates error derivatives or stores neural activities for use in a subsequent backward pass. The top-down connections from one cortical area to an area earlier in the visual pathway do not mirror the bottom-up connections, as would be expected if backpropagation were used in the visual system. Instead, they form loops in which neural activity passes through about half a dozen cortical layers in the two areas before returning to where it started.

Backpropagation through time is especially implausible as a way of learning sequences. To process a stream of sensory input without taking frequent time-outs, the brain needs to pipeline sensory data through different stages of sensory processing, and it needs a learning procedure that can learn "on the fly". Representations in later stages of the pipeline may provide top-down information that influences representations in earlier stages at later time steps, but the perceptual system needs to perform inference and learn in real time without stopping to perform backpropagation.

Another serious limitation of backpropagation is that it requires perfect knowledge of the computation performed in the forward pass in order to compute the correct derivatives. If we insert a black box into the forward pass, backpropagation is no longer possible unless we learn a differentiable model of the black box. As we will see, a black box does not change the learning procedure of the FF algorithm at all, because there is no need to backpropagate through it.
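To illustrate why a black box blocks backpropagation, here is a toy Python example with a hypothetical black box that rounds its input to the nearest integer. The loss clearly depends on the weight `w`, but the derivative of rounding is zero almost everywhere, so any gradient obtained through it by the chain rule (approximated here with a central finite difference) carries no signal. An FF layer placed before such a stage is unaffected, because its update uses only its own input and output. The function names are illustrative only.

```python
def black_box(z: float) -> float:
    """Hypothetical non-differentiable stage: rounds to the nearest integer."""
    return float(round(z))


def loss(w: float, x: float = 1.0, target: float = 3.0) -> float:
    """Squared error measured after the black box."""
    return (black_box(w * x) - target) ** 2


def numerical_grad(f, w: float, eps: float = 1e-4) -> float:
    """Central finite difference, a stand-in for d loss / d w via the chain rule."""
    return (f(w + eps) - f(w - eps)) / (2 * eps)


w = 0.3
print(numerical_grad(loss, w))  # 0.0: no gradient signal through the quantizer
print(loss(0.3), loss(3.0))     # 9.0 0.0: yet the loss does depend on w
```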

In the absence of a perfect model of the forward pass, one might resort to one of the many forms of reinforcement learning. The idea is to apply random perturbations to the weights or to neural activities and correlate these perturbations with the resulting changes in a payoff function. But reinforcement learning procedures suffer from high variance: it is hard to see the effect of perturbing one variable when many other variables are being perturbed at the same time. To average away the noise caused by all the other perturbations, the learning rate needs to be inversely proportional to the number of variables being perturbed. This means reinforcement learning scales poorly and cannot compete with backpropagation for large networks containing millions or billions of parameters.
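The variance argument can be checked numerically with a minimal weight-perturbation sketch. Assume a toy linear payoff f(w) = sum(w), whose true gradient is 1 for every weight. All n weights are perturbed at once with Gaussian noise, and the gradient for weight 0 is estimated by correlating the change in f with the noise on that weight. The estimator is unbiased, but its variance is n + 1, so the noise from the other n - 1 perturbations swamps the signal as the network grows. The function names and constants are hypothetical.

```python
import random
import statistics


def perturbation_grad_estimate(n_params: int, sigma: float, rng: random.Random) -> float:
    """Estimate d f / d w_0 for f(w) = sum(w) by perturbing ALL weights at once."""
    noise = [rng.gauss(0.0, sigma) for _ in range(n_params)]
    delta_f = sum(noise)  # f(w + noise) - f(w) for the linear toy payoff
    # Correlate the payoff change with the noise on weight 0
    return delta_f * noise[0] / sigma**2


def estimator_variance(n_params: int, trials: int = 20000,
                       sigma: float = 0.01, seed: int = 0) -> float:
    """Empirical variance of the single-weight estimate over many trials."""
    rng = random.Random(seed)
    samples = [perturbation_grad_estimate(n_params, sigma, rng) for _ in range(trials)]
    return statistics.pvariance(samples)


for n in (1, 10, 100):
    # Analytically the variance is n + 1, while the true gradient stays at 1
    print(n, round(estimator_variance(n), 1))
```

Keeping the noise in each update bounded therefore means shrinking the learning rate roughly in proportion to n, which is the scaling problem described above.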

The main point of the paper is that neural networks containing unknown nonlinearities do not need to resort to reinforcement learning. The FF algorithm is comparable in speed to backpropagation, but it has the advantage that it can be used when the precise details of the forward computation are unknown. It also has the advantage that it can learn while pipelining sequential data through a neural network, without ever storing neural activities or stopping to propagate error derivatives.

That said, the FF algorithm is somewhat slower than backpropagation, and on several of the toy problems studied in the paper it does not generalize quite as well, so it is unlikely to replace backpropagation in applications where power is not a constraint. The exploration of very large models trained on very large datasets will continue to use backpropagation. The FF algorithm may be superior to backpropagation in two areas: as a model of learning in the cerebral cortex, and as a way of making use of very low-power analog hardware without resorting to reinforcement learning.

FF Algorithm

The Forward-Forward algorithm is a greedy multi-layer learning procedure inspired by Boltzmann machines and noise-contrastive estimation. The idea is to replace the forward and backward passes of backpropagation with two forward passes that operate in exactly the same way as each other, but on different data and with opposite objectives. The positive pass operates on real data and adjusts the weights to increase the goodness in every hidden layer; the negative pass operates on negative data and adjusts the weights to decrease the goodness in every hidden layer.
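A minimal sketch of a single FF layer in Python, under stated assumptions: goodness is taken to be the sum of squared ReLU activities, and the probability that an input is positive is the logistic function of goodness minus a threshold θ, p = σ(Σ_j y_j² − θ), which is one of the choices the paper describes. The class name `FFLayer`, the values of `theta` and `lr`, and the toy data are hypothetical. The update is plain gradient ascent on log p for positive data and log(1 − p) for negative data, and the paper's normalization of each layer's output before it feeds the next layer is omitted.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


class FFLayer:
    """One fully connected ReLU layer trained with a local FF objective (sketch)."""

    def __init__(self, n_in: int, n_out: int, theta: float = 2.0,
                 lr: float = 0.03, seed: int = 0):
        rng = random.Random(seed)
        scale = 1.0 / math.sqrt(n_in)
        self.w = [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]
        self.b = [0.0] * n_out
        self.theta = theta  # hypothetical goodness threshold
        self.lr = lr        # hypothetical learning rate

    def forward(self, x: list[float]) -> list[float]:
        """ReLU activities of the layer."""
        return [max(0.0, sum(wi * xi for wi, xi in zip(row, x)) + bj)
                for row, bj in zip(self.w, self.b)]

    def goodness(self, x: list[float]) -> float:
        """Goodness = sum of squared activities."""
        return sum(y * y for y in self.forward(x))

    def p_positive(self, x: list[float]) -> float:
        return sigmoid(self.goodness(x) - self.theta)

    def update(self, x: list[float], is_positive: bool) -> None:
        """One ascent step on log p (positive pass) or log(1 - p) (negative pass).

        Uses only this layer's input x and output y: nothing is propagated backwards.
        """
        y = self.forward(x)
        p = sigmoid(sum(v * v for v in y) - self.theta)
        target = 1.0 if is_positive else 0.0
        step = self.lr * (target - p)  # d(log-likelihood) / d(goodness), times lr
        for j, yj in enumerate(y):
            if yj == 0.0:
                continue  # inactive ReLU unit: zero gradient
            g = step * 2.0 * yj  # chain rule through goodness = sum_j y_j^2
            for i, xi in enumerate(x):
                self.w[j][i] += g * xi
            self.b[j] += g


# Toy usage with hypothetical data: one "real" pattern and one negative pattern
layer = FFLayer(n_in=4, n_out=16, seed=0)
pos, neg = [1.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 1.0]
for _ in range(300):
    layer.update(pos, is_positive=True)   # positive pass: raise goodness
    layer.update(neg, is_positive=False)  # negative pass: lower goodness
print(round(layer.p_positive(pos), 3), round(layer.p_positive(neg), 3))
```

Because `update` reads only the layer's own input and output, a stack of such layers can be trained greedily, one layer at a time, and anything placed between layers never has to be differentiated.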

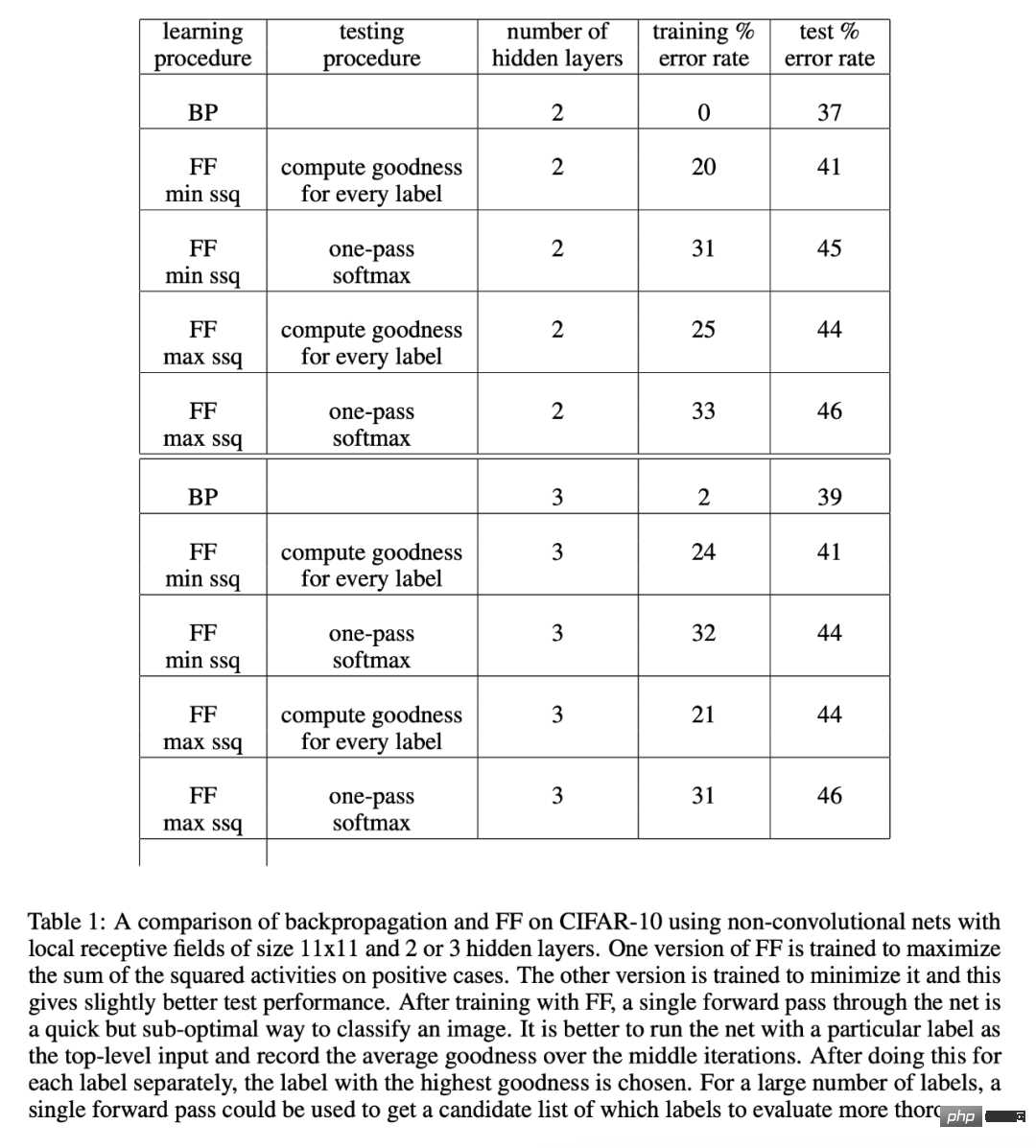

In the paper, Hinton demonstrated the performance of the FF algorithm through experiments on CIFAR-10.

CIFAR-10 has 50,000 training images, each 32 x 32 pixels with three color channels per pixel, so each image has 3072 dimensions. The images have complex, highly variable backgrounds that cannot be modeled well with so little training data. Fully connected networks with two or three hidden layers are known to overfit badly when trained with backpropagation unless the hidden layers are very small, so nearly all reported results are for convolutional networks.

Since FF is intended for networks where weight sharing is not feasible, it was compared with a backpropagation network that uses local receptive fields to limit the number of weights without seriously restricting the number of hidden units. The aim is simply to show that, with a large number of hidden units, FF performs comparably to backpropagation on images with highly variable backgrounds.
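To make the weight-count argument concrete, here is a back-of-the-envelope comparison for one hidden layer on CIFAR-10 inputs (32 x 32 x 3 = 3072 values). The hidden-layer size (a 32 x 32 map with 3 units per location) and the 11 x 11 receptive field are illustrative assumptions, not figures taken from this article; there is no weight sharing, so every hidden unit keeps its own weights, and border effects are ignored.

```python
def fully_connected_weights(n_in: int, n_hidden: int) -> int:
    """Every hidden unit connects to every input value."""
    return n_in * n_hidden


def local_rf_weights(n_hidden: int, rf_size: int, channels: int) -> int:
    """Every hidden unit sees only an rf_size x rf_size patch of each input channel.

    No weight sharing: each unit has private weights. Border effects are ignored.
    """
    return n_hidden * rf_size * rf_size * channels


n_in = 32 * 32 * 3      # 3072 values per CIFAR-10 image
n_hidden = 32 * 32 * 3  # assumed: a 32 x 32 map with 3 hidden units per location
rf_size = 11            # assumed receptive-field width

print(fully_connected_weights(n_in, n_hidden))          # 9437184
print(local_rf_weights(n_hidden, rf_size, channels=3))  # 1115136
```

Under these assumptions the local receptive fields cut the weight count by roughly a factor of 8.5 while keeping all 3072 hidden units.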

Table 1 shows the test performance of networks trained with backpropagation and FF, both of which use weight decay to reduce overfitting.

For more research details, please refer to the original paper.
