3 billion outperformed GPT-3's 175 billion. Google's new model caused heated discussion, but it got Hinton's age wrong.

An important goal of artificial intelligence is to develop models with strong generalization capabilities. In the field of natural language processing (NLP), pre-trained language models have made significant progress in this regard. Such models are often fine-tuned to adapt to new tasks.

Recently, researchers from Google analyzed a variety of instruction fine-tuning methods, including the impact of scaling on instruction fine-tuning. Experiments show that instruction fine-tuning scales well with both the number of tasks and model size, and that models of up to 540 billion parameters benefit significantly. Future research should further expand the number of tasks and the model size. The study also analyzed the impact of fine-tuning on the model's reasoning ability, and the results are very promising.

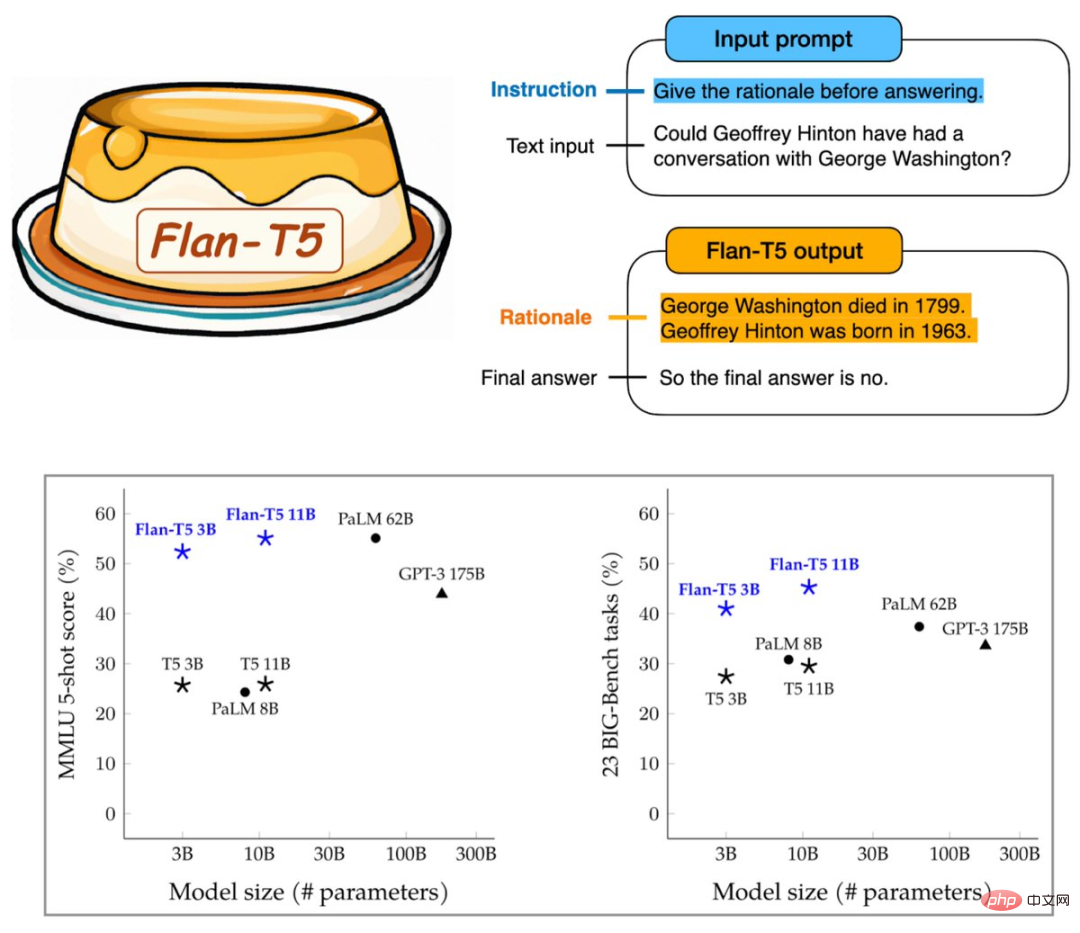

The resulting Flan-T5 has been instruction fine-tuned on more than 1,800 language tasks, which significantly improves its prompting and multi-step reasoning capabilities. It can surpass GPT-3 with its 175 billion parameters.

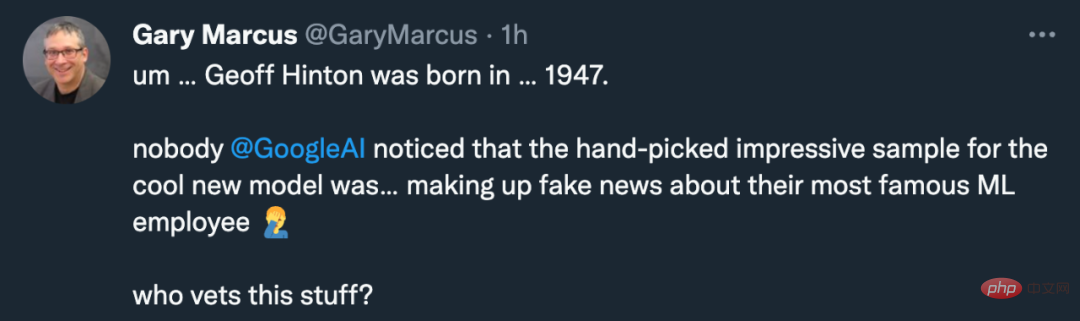

It seems that Google has found a direction for improving the capabilities of large models. However, while the research was welcomed by the machine learning community, it also drew a complaint from Gary Marcus:

Why did Google's model get the birth date of Google's own famous scientist Geoffrey Hinton wrong? He is clearly a veteran born in 1947.

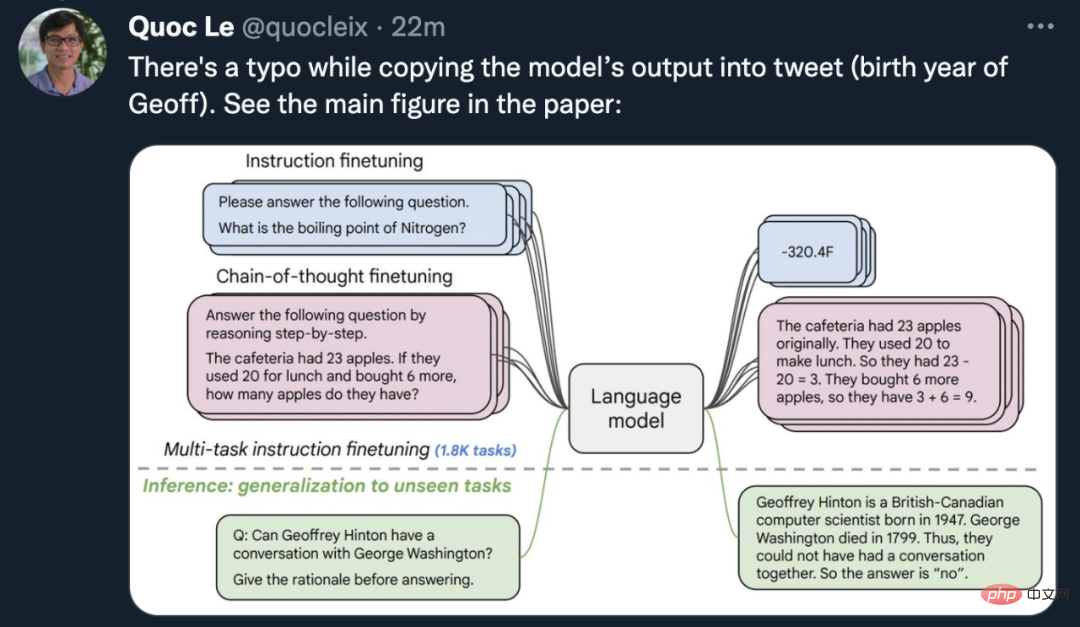

Quoc Le, chief scientist of Google Brain and one of the authors of the paper, quickly stepped in to set the record straight: the figure in the paper had been put together incorrectly (the proverbial "temp worker" mistake), and the Flan-T5 model itself did not get Geoff's birth date wrong. He posted a screenshot to prove it.

By the way, the famous AI scholar born in 1963 is Jürgen Schmidhuber.

Since it is not the AI model that is wrong, let’s see what changes Google’s new method can bring to the pre-trained model.

Paper: Scaling Instruction-Finetuned Language Models

- Paper address: https://arxiv.org/abs/2210.11416

- Public model: https://github.com/google-research/t5x/blob/main/docs/models.md#flan-t5-checkpoints

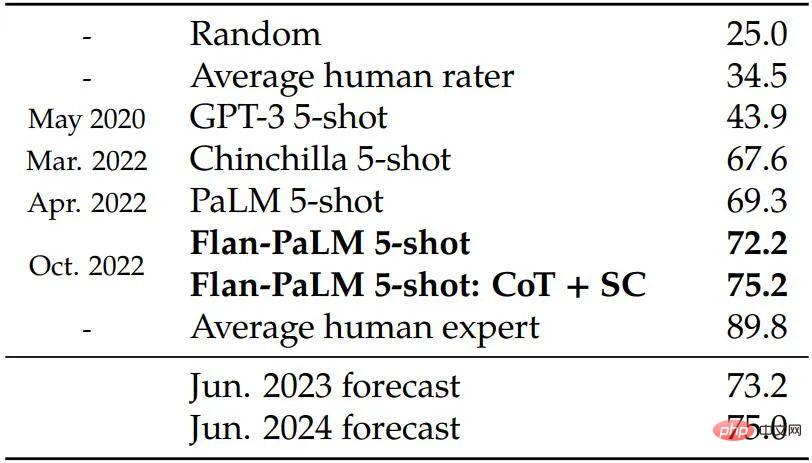

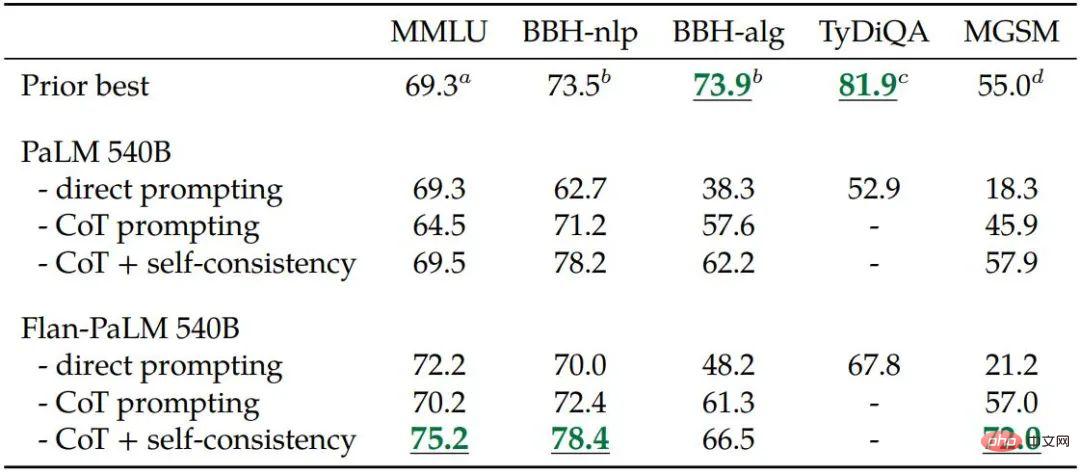

This study trains Flan-PaLM from the 540B-parameter model, increases the number of fine-tuning tasks to more than 1,800, and includes chain-of-thought (CoT; Wei et al., 2022b) data. The trained Flan-PaLM outperforms PaLM and reaches a new SOTA on multiple benchmarks. In terms of reasoning capabilities, Flan-PaLM is able to leverage CoT and self-consistency (Wang et al., 2022c) to achieve 75.2% accuracy on Massive Multitask Language Understanding (MMLU; Hendrycks et al., 2020).
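Self-consistency is commonly described as sampling several chain-of-thought answers for the same question and keeping the most frequent final answer. The following is a minimal sketch of that voting step only, assuming the final answers have already been extracted from the sampled outputs; the function name and the sample data are hypothetical and are not taken from the paper.

```python
from collections import Counter


def self_consistent_answer(sampled_answers):
    """Return the most frequent final answer among sampled reasoning paths.

    `sampled_answers` is a hypothetical list holding one final answer per
    chain-of-thought sample drawn for the same question.
    """
    if not sampled_answers:
        raise ValueError("need at least one sampled answer")
    counts = Counter(sampled_answers)
    # most_common(1) returns [(answer, count)]; ties go to the answer seen first.
    answer, _ = counts.most_common(1)[0]
    return answer


# Five hypothetical samples for one multiple-choice question.
samples = ["B", "C", "B", "B", "A"]
print(self_consistent_answer(samples))
```

Majority voting needs no extra training; the cost is several generations per question instead of one.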

In addition, Flan-PaLM significantly outperforms PaLM on a set of challenging open-ended generative problems, with significantly improved usability.

Overall, this Google study illustrates specific ways to use instruction fine-tuning to improve model performance.

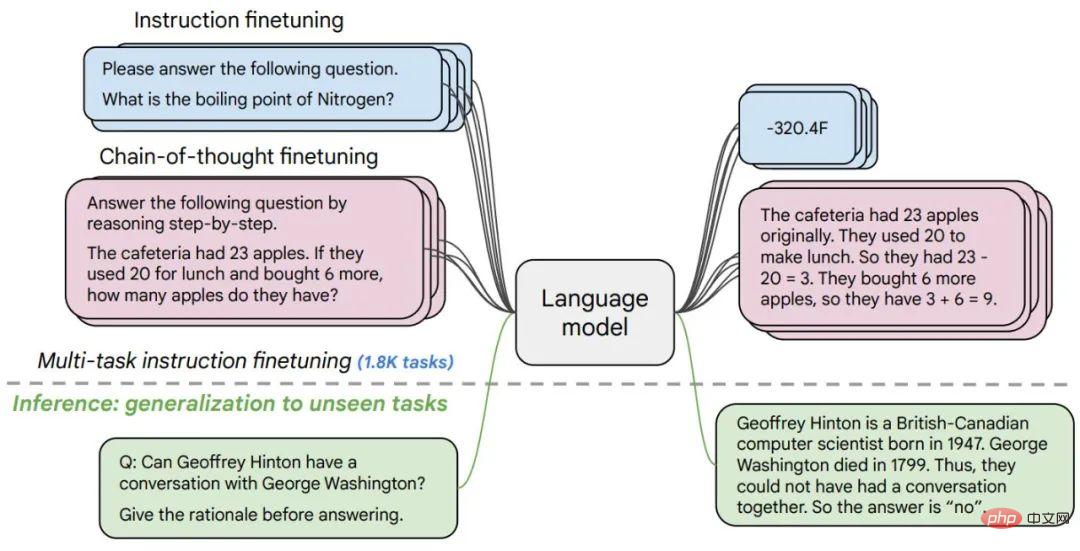

Method

Specifically, this research focuses on several aspects that affect instruction fine-tuning: (1) scaling the number of tasks, (2) scaling the model size, and (3) fine-tuning on chain-of-thought data.

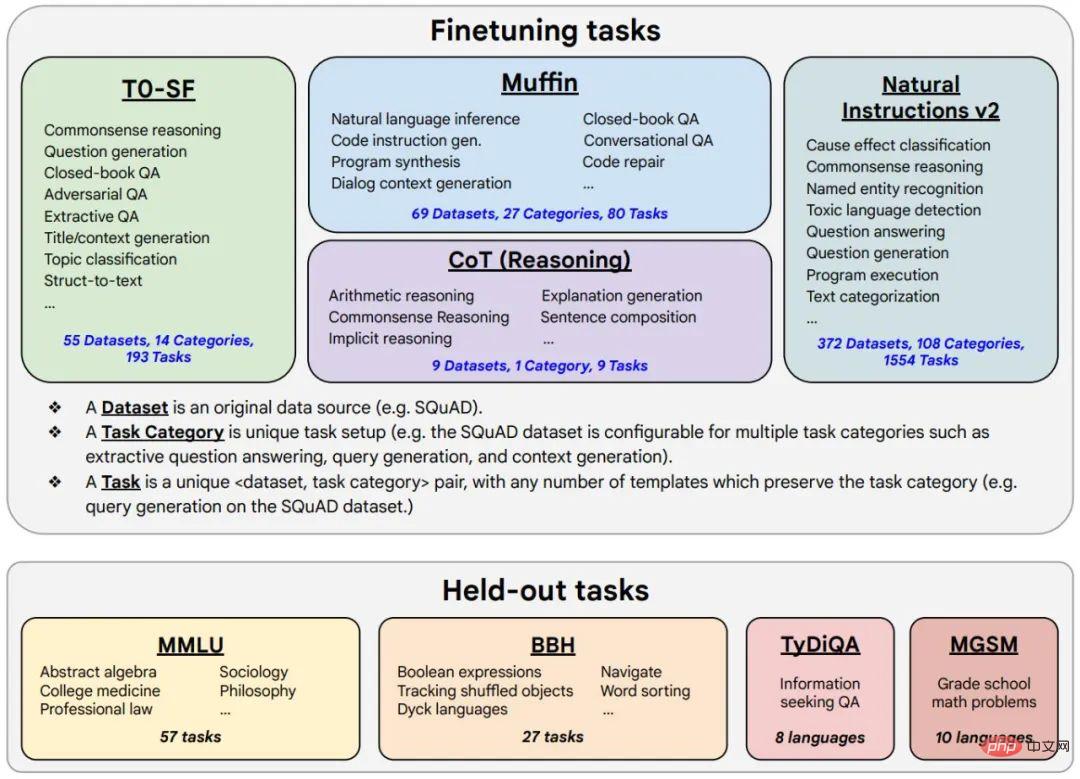

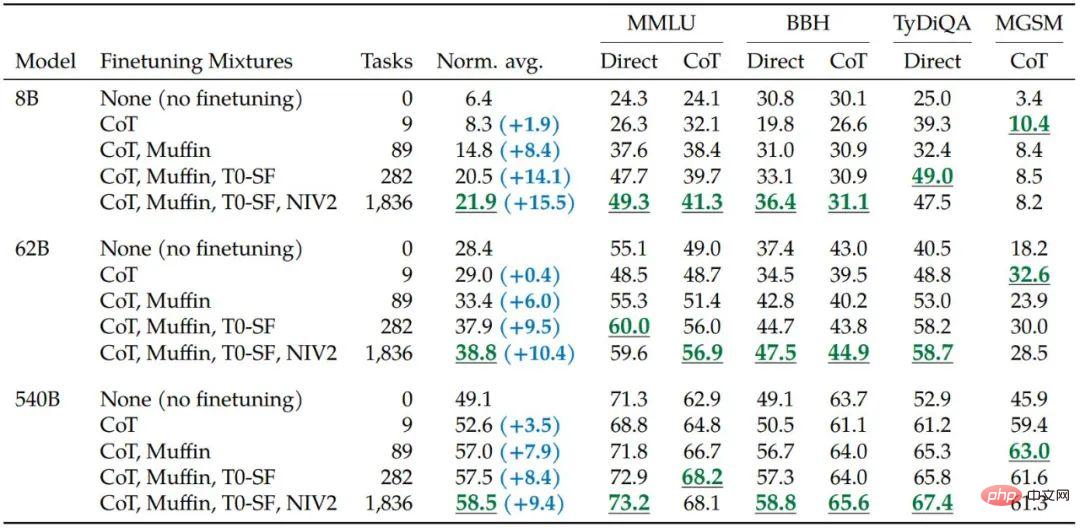

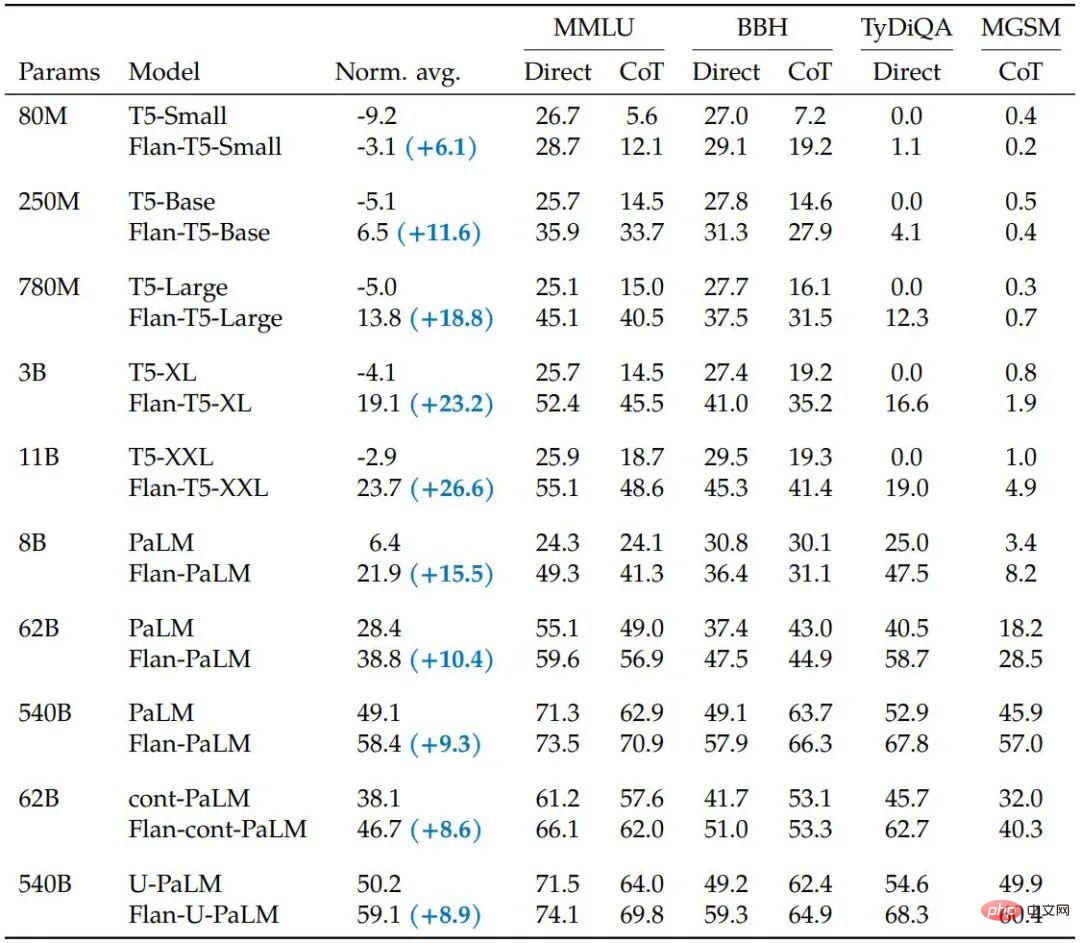

The study found that instruction fine-tuning along these dimensions significantly improves performance across model classes (PaLM, T5, U-PaLM), prompting settings (zero-shot, few-shot, CoT) and evaluation benchmarks (MMLU, BBH, TyDiQA, MGSM, open-ended generation). For example, Flan-PaLM 540B, instruction fine-tuned on 1.8K tasks, significantly outperforms PaLM 540B (by 9.4% on average). Flan-PaLM 540B also achieves state-of-the-art performance on several benchmarks, such as 75.2% on five-shot MMLU.

The researchers also released the Flan-T5 checkpoints, which achieve strong few-shot performance even when compared with much larger models such as PaLM 62B. Overall, instruction fine-tuning is a general approach to improving the performance and usability of pre-trained language models.

Figure 1. The researchers fine-tuned various language models on more than 1,800 tasks. Fine-tuning with and without exemplars (zero-shot and few-shot) and with and without chain-of-thought enables generalization across a range of evaluation scenarios.

Figure 2. The fine-tuning data includes 473 datasets, 146 task categories and a total of 1,836 tasks.
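The four data formats mentioned in the Figure 1 caption (with or without exemplars, with or without chain-of-thought) can be illustrated with a small formatting helper. This is only a sketch: the `Q:`/`A:` layout, the "So the answer is" wording and the function name are assumptions chosen for illustration, not the templates actually used for Flan.

```python
def format_example(instruction, question, answer, exemplars=(), rationale=None):
    """Build one (input, target) pair for instruction fine-tuning.

    exemplars: optional sequence of (question, answer) pairs shown before the
        query (few-shot); an empty sequence means zero-shot.
    rationale: optional chain-of-thought text placed before the final answer.
    """
    parts = [instruction]
    for ex_question, ex_answer in exemplars:
        parts.append(f"Q: {ex_question}\nA: {ex_answer}")
    parts.append(f"Q: {question}\nA:")
    model_input = "\n\n".join(parts)
    if rationale is None:
        target = answer
    else:
        target = f"{rationale} So the answer is {answer}."
    return model_input, target


# Zero-shot with chain-of-thought (hypothetical example data).
model_input, target = format_example(
    "Answer the following question.",
    "What is 2 + 3?",
    "5",
    rationale="2 plus 3 equals 5.",
)
print(model_input)
print(target)
```

Passing a non-empty `exemplars` list gives the few-shot variants, and leaving `rationale` as `None` gives the variants without chain-of-thought.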

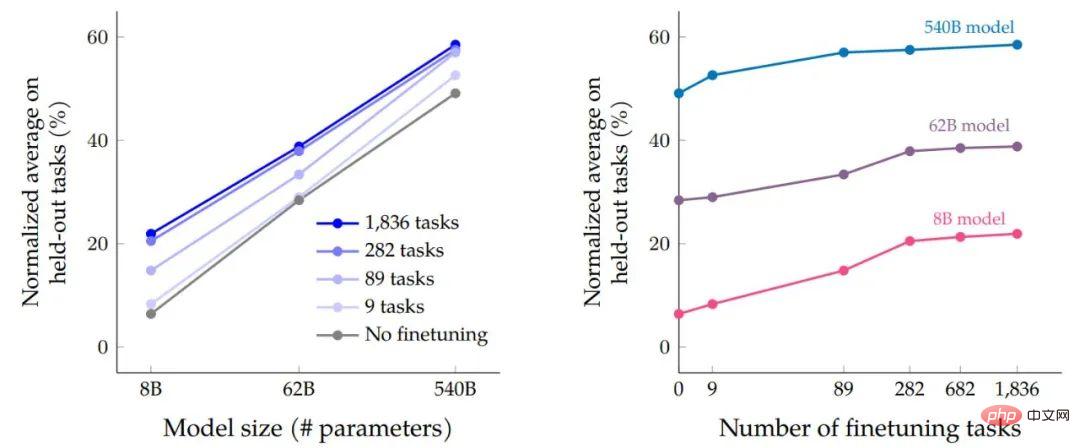

After describing the fine-tuning data and the fine-tuning procedure, the researchers compare how scaling the model size and the number of tasks affects performance. First, for all three model sizes, multi-task instruction fine-tuning yields large performance improvements compared with no fine-tuning, with gains ranging from 9.4% to 15.5%. Second, increasing the number of fine-tuning tasks improves performance.

Finally, we can see that increasing the model size by an order of magnitude (8B → 62B or 62B → 540B) can significantly improve the performance of both fine-tuned and un-fine-tuned models.

Scaling behavior of multi-task instruction fine-tuning with respect to model size (number of parameters) and the number of fine-tuning tasks.

Increasing the number of tasks in the fine-tuning data improves Flan-PaLM's performance on most evaluation benchmarks.

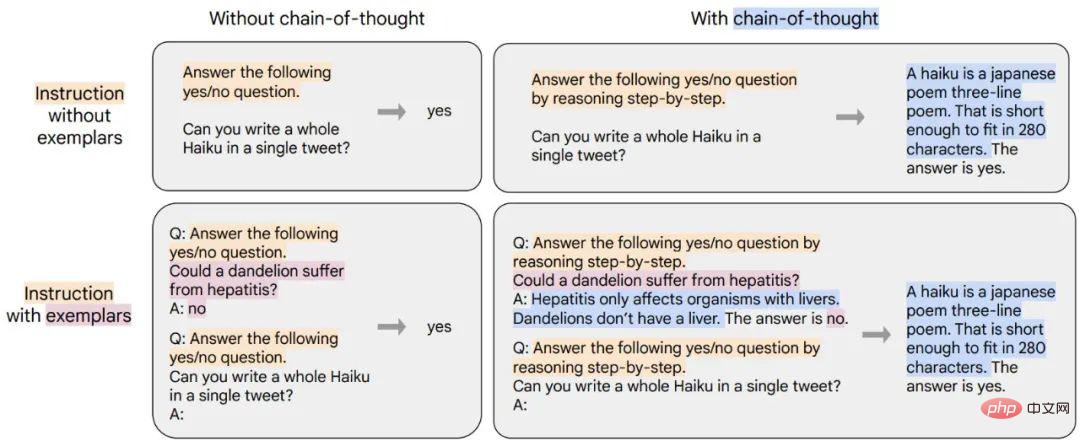

The researchers demonstrate that including nine datasets annotated with chain-of-thought (CoT) rationales in the fine-tuning mixture improves reasoning capabilities. The table below shows that Flan-PaLM's CoT prompting capabilities outperform PaLM on the four held-out evaluation benchmarks.

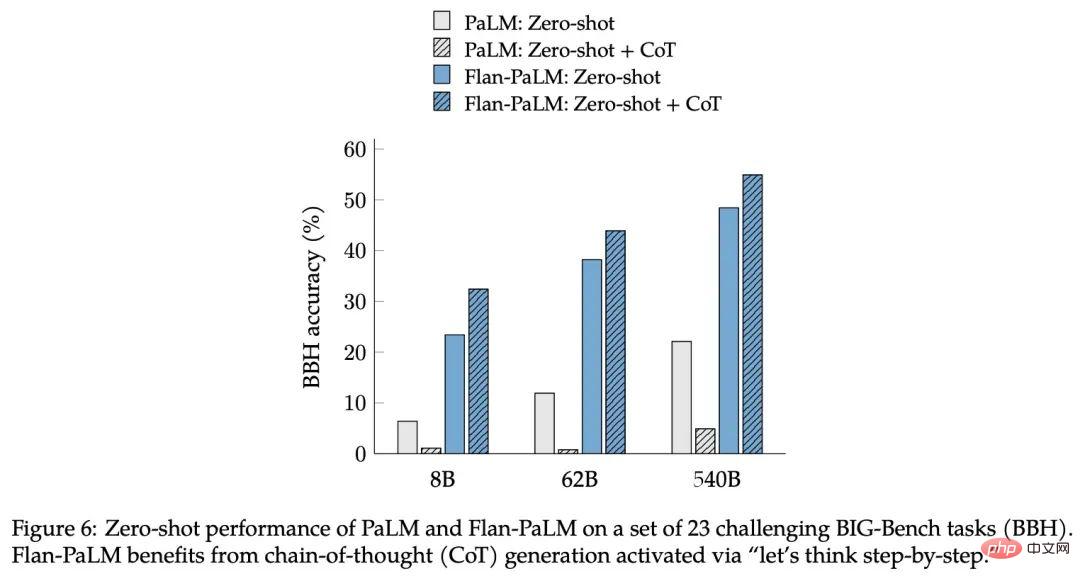

The study found that another benefit of instruction fine-tuning on CoT data is that it enables zero-shot reasoning. This setting tests whether the model can produce its own reasoning without few-shot CoT exemplars, which can require substantial prompt engineering to compose correctly.

Figure 6: Zero-shot performance of PaLM and Flan-PaLM on a set of 23 challenging BIG-Bench tasks (BBH). Flan-PaLM's chain-of-thought (CoT) generation is activated by the phrase "Let's think step by step".
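Zero-shot chain-of-thought prompting amounts to appending the trigger phrase to the question so that the model begins its answer with reasoning. A minimal sketch follows, assuming a simple `Q:`/`A:` layout; the function name and layout are hypothetical and not taken from the paper.

```python
COT_TRIGGER = "Let's think step by step."


def zero_shot_cot_prompt(question, trigger=COT_TRIGGER):
    """Append the trigger phrase so the model starts by generating its reasoning."""
    return f"Q: {question.strip()}\nA: {trigger}"


print(zero_shot_cot_prompt("If I have 3 apples and eat one, how many are left?"))
```

No exemplars appear in the prompt, which is what distinguishes this setting from few-shot CoT.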

To demonstrate the generality of the new method, Google applied instruction fine-tuning to T5, PaLM and U-PaLM, covering a range of model sizes from 80 million to 540 billion parameters, and found that performance improved significantly for all models.

Table 5. Instruction fine-tuning (Flan) improves performance on top of other continued pre-training methods.

In these tests, instruction fine-tuning significantly improved the normalized average performance of all model types, and T5 models benefited the most compared with their non-fine-tuned counterparts. The results are quite strong on some benchmarks - for example, Flan-T5-XL achieved an MMLU score of 47.6% with only 3 billion parameters, surpassing GPT-3's 43.9% score with 175 billion parameters.
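To put the headline comparison in perspective, the arithmetic can be worked through using only the figures quoted above; the variable names are illustrative.

```python
# Figures quoted in the article: parameter counts in billions, MMLU scores in percent.
flan_t5_xl = {"params_b": 3, "mmlu": 47.6}
gpt3 = {"params_b": 175, "mmlu": 43.9}

size_ratio = gpt3["params_b"] / flan_t5_xl["params_b"]
score_gap = flan_t5_xl["mmlu"] - gpt3["mmlu"]

print(f"GPT-3 is about {size_ratio:.0f}x larger than Flan-T5-XL")
print(f"Flan-T5-XL scores {score_gap:.1f} points higher on MMLU")
```

In other words, the smaller model is ahead by a few points on this one benchmark while being roughly 58 times smaller.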

Beyond NLP benchmarks, language models are also capable of generating long-form answers to open-ended questions. Here, standard NLP benchmarks and the automated metrics used to score them are insufficient to measure human preferences. To evaluate this, the researchers created an evaluation set of 190 examples. The evaluation set consists of questions posed to the model in a zero-shot manner across five challenging categories of 20 questions each: creativity, contextual reasoning, complex reasoning, planning, and explanation.

For 60 of these examples (from the complex reasoning, planning, and explanation categories), the study created a variant with a chain-of-thought trigger phrase (e.g., "Let's think step by step."), as a further evaluation of whether fine-tuning on CoT enables zero-shot reasoning. In addition to the 160 zero-shot inputs mentioned above, the study also included 30 inputs to test few-shot capabilities, where strong language models without instruction fine-tuning have been shown to perform well.
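The composition of the 190-example evaluation set can be checked with a few lines of arithmetic, using only the counts stated in the two paragraphs above; the variable names are illustrative.

```python
categories = [
    "creativity",
    "contextual reasoning",
    "complex reasoning",
    "planning",
    "explanation",
]
cot_categories = ["complex reasoning", "planning", "explanation"]
questions_per_category = 20
few_shot_inputs = 30

base_zero_shot = len(categories) * questions_per_category   # plain zero-shot questions
cot_variants = len(cot_categories) * questions_per_category  # same questions plus CoT trigger
zero_shot_total = base_zero_shot + cot_variants
total_examples = zero_shot_total + few_shot_inputs

print(base_zero_shot, cot_variants, zero_shot_total, total_examples)
```

The totals come out to 100 plain zero-shot questions, 60 CoT-trigger variants, 160 zero-shot inputs in all, and 190 examples overall, matching the figures in the text.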

The researchers conclude that both instruction fine-tuning and scaling continue to improve the performance of large language models, and that fine-tuning is crucial for reasoning ability and generalizes across models. By combining instruction fine-tuning with other model adaptation techniques such as UL2R, Google obtains Flan-U-PaLM, the strongest model in this work.

Importantly, unlike scaling up the model, instruction fine-tuning does not significantly increase compute costs. For example, for PaLM 540B, instruction fine-tuning requires only 0.2% of the pre-training compute, yet it improves the normalized average across evaluation benchmarks by 9.4%. Smaller models with instruction fine-tuning can sometimes outperform larger models without it.

For these reasons, researchers recommend instruction fine-tuning for almost all pre-trained language models.
