How many steps does it take to put an elephant in the refrigerator? NVIDIA releases ProgPrompt, letting language models generate plans for robots

For robots, task planning is an unavoidable problem.

To complete a real-world task, you must first know how many steps it takes to put an elephant in the refrigerator.

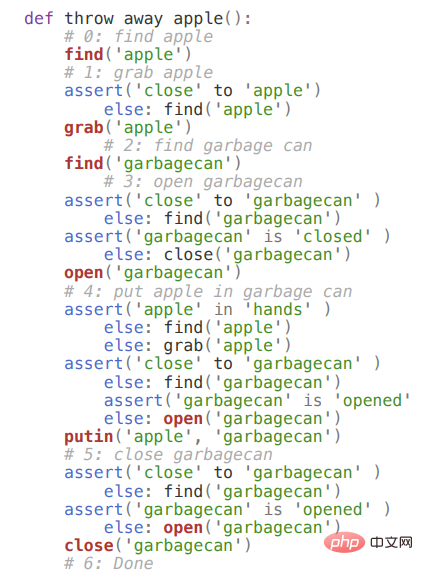

Even the relatively simple task of throwing away an apple contains multiple sub-steps. The robot must observe the position of the apple and, if it does not see the apple, keep looking for it. It must then approach the apple, grab it, and find and approach the trash can.

If the trash can is closed, the robot must open it first, then throw the apple in and close the trash can.

But the specific implementation details of every task cannot all be designed by humans. The problem is how to generate the action sequence from a single command.

Use a command to generate a sequence? Isn't this exactly the job of a language model?

In the past, researchers have used large language models (LLMs) to score the space of possible next actions based on the input task instruction and then generate action sequences. The instructions are described in natural language and do not contain additional domain information.

But such methods either need to enumerate all possible next actions for scoring, or they generate free-form text with no restrictions, which may contain actions that are impossible for the specific robot in the current environment.

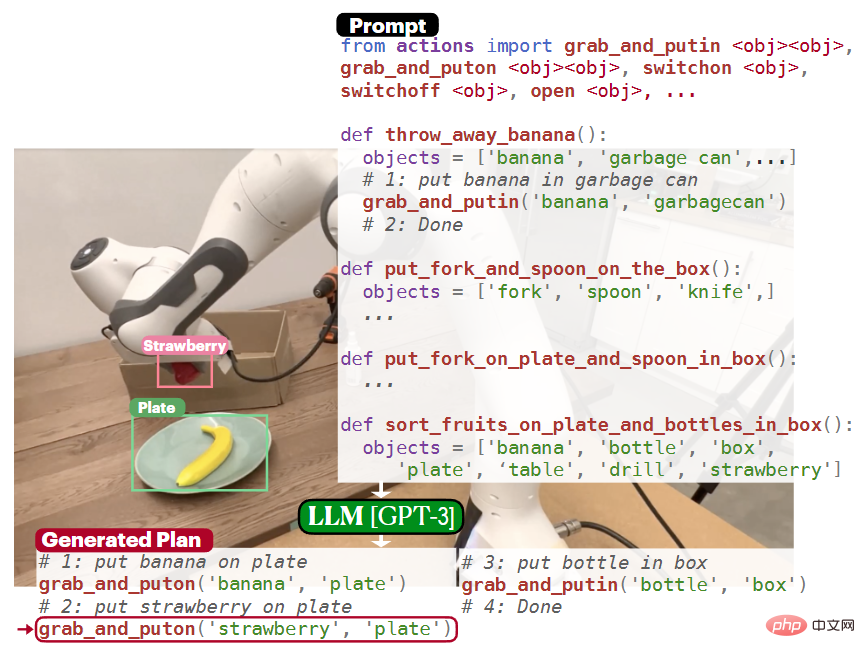

Recently, the University of Southern California and NVIDIA jointly introduced ProgPrompt, which also uses a language model to plan tasks from input instructions. It includes a programmatic prompt structure that enables the generated plans to work across different environments, robots with different capabilities, and different tasks.

ProgPrompt uses Python-style code to tell the language model which actions are available, what objects are in the environment, and which programs are executable.

For example, entering the "throw away apple" command generates a program of this kind.
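As a rough illustration, the sub-steps described above for throwing away an apple could be written as a Python function along the following lines. This is a hypothetical sketch, not ProgPrompt's actual output: the primitive names `find`, `grab`, `open`, `close`, and `putin` come from the action space mentioned in the article, while the `Agent` stub, the `is_closed` check, the `trashcan` object name, and the `log` list are assumptions added so the example runs on its own.

```python
class Agent:
    """Stub agent that only records the primitives it is asked to run (hypothetical)."""

    def __init__(self, closed_objects=()):
        self.closed = set(closed_objects)  # containers that start out closed
        self.log = []                      # executed actions, in order

    def _do(self, action, *objs):
        self.log.append((action, *objs))

    def find(self, obj):
        self._do("find", obj)

    def grab(self, obj):
        self._do("grab", obj)

    def open(self, obj):
        self.closed.discard(obj)
        self._do("open", obj)

    def close(self, obj):
        self.closed.add(obj)
        self._do("close", obj)

    def putin(self, obj, container):
        self._do("putin", obj, container)

    def is_closed(self, obj):
        return obj in self.closed


def throw_away_apple(agent):
    # 0: locate and pick up the apple
    agent.find("apple")
    agent.grab("apple")
    # 1: go to the trash can and open it only if it is closed
    agent.find("trashcan")
    if agent.is_closed("trashcan"):
        agent.open("trashcan")
    # 2: drop the apple in and close the trash can
    agent.putin("apple", "trashcan")
    agent.close("trashcan")


agent = Agent(closed_objects={"trashcan"})
throw_away_apple(agent)
for step in agent.log:
    print(step)
```

When the trash can starts out open, the same function simply skips the `open` step, which is the kind of state-dependent branching the article describes.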

ProgPrompt was evaluated on VirtualHome tasks, and the researchers also deployed it on a physical robot arm for tabletop tasks.

Magical Language Model

Completing daily household tasks requires both a common-sense understanding of the world and situational knowledge of the current environment. To create a task plan for "cooking dinner", the minimum knowledge the agent needs includes:

the functions of objects, for example that stoves and microwave ovens can be used for heating; the logical order of actions, for example that the oven must be preheated before food is added; and the task relevance of objects and actions, for example that heating food and finding ingredients are actions related to "dinner".

But without state feedback, this kind of reasoning cannot be carried out.

The agent needs to know where there is food in the current environment, such as whether there is fish or chicken in the refrigerator.

Autoregressive large language models trained on large corpora can generate text sequences conditioned on input prompts, and they show significant multi-task generalization.

For example, given "make dinner", the language model can generate a continuation such as opening the refrigerator, picking up the chicken, picking up the soda, closing the refrigerator, turning on the light switch, and so on.

The generated text sequence needs to be mapped to the agent's action space. For example, if the generated instruction is "reach out and pick up a jar of pickles", the corresponding executable action may be "pick up jar"; the model then calculates a probability score for the action.
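The mapping step can be pictured with a toy example. The sketch below is only an assumption-laden illustration: it scores each admissible action by simple word overlap with the generated text and normalizes the scores with a softmax, whereas the methods discussed here would use a language model to produce the scores. The function names and the candidate action list are hypothetical.

```python
import math


def overlap_score(text, action):
    """Fraction of the action's words that also occur in the generated text."""
    text_words = set(text.lower().split())
    action_words = action.lower().split()
    return sum(w in text_words for w in action_words) / len(action_words)


def rank_actions(text, admissible_actions):
    """Return (action, probability) pairs, most likely first."""
    scores = [overlap_score(text, a) for a in admissible_actions]
    exps = [math.exp(s) for s in scores]  # softmax over the raw scores
    total = sum(exps)
    ranked = zip(admissible_actions, (e / total for e in exps))
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


generated = "reach out and pick up a jar of pickles"
actions = ["pick up jar", "open fridge", "walk to stove"]  # assumed action space
ranking = rank_actions(generated, actions)
best_action, best_prob = ranking[0]
print(best_action)
```

Note that every admissible action has to be scored, which is the enumeration cost mentioned earlier in the article.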

But without environmental feedback, if there is no chicken in the refrigerator and the plan still says "pick up the chicken", the task will fail, because the instruction "make dinner" does not contain any information about the state of the world.

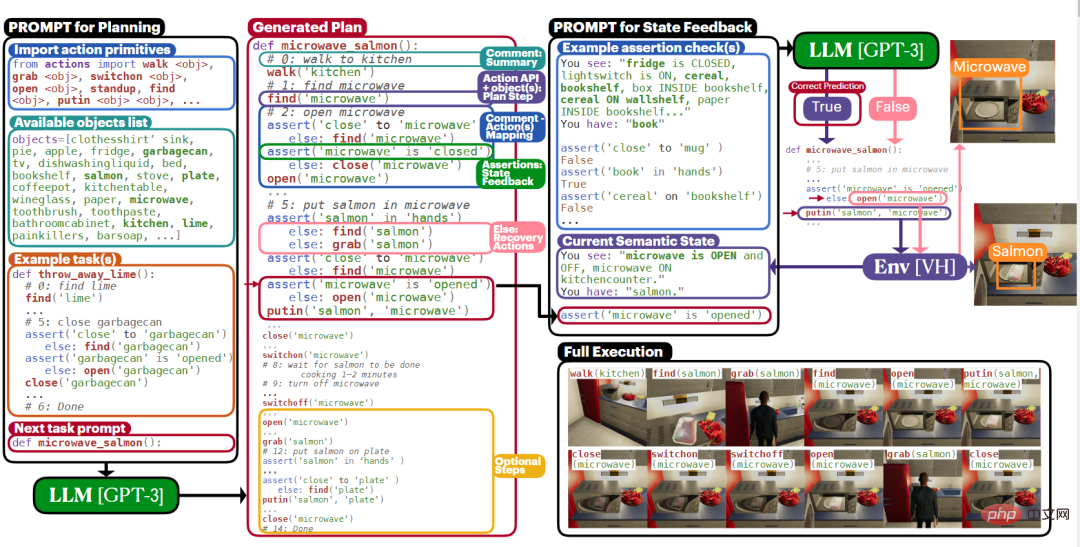

The ProgPrompt model cleverly exploits programming language structure in task planning, because existing large language models are usually pre-trained on corpora that include programming tutorials and code documentation.

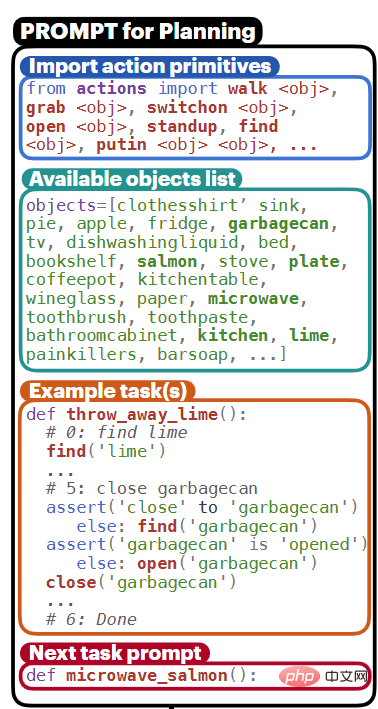

ProgPrompt provides the language model with a Pythonic program header as a prompt, importing the available action space, expected parameters, and available objects in the environment.

It then defines functions such as make_dinner and throw_away_banana, whose bodies are sequences of actions that operate on objects. Environment state feedback is incorporated by asserting the plan's preconditions, such as being close to the refrigerator before trying to open it, and by responding to assertion failures with recovery actions.

Most importantly, the ProgPrompt program also includes comments written in natural language that explain the goals of the actions, which improves the task success rate of the generated plan programs.

ProgPrompt

With the idea in place, the overall workflow of ProgPrompt is clear. It has three main parts: expressing plans as Pythonic functions, constructing the programming language prompt, and generating and executing the task plan.

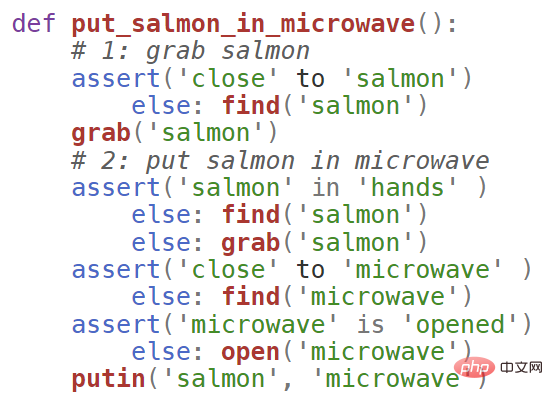

1. Express the robot plan as a Pythonic function

Plan functions include API calls to action primitives, comments that summarize actions, and assertions that track execution.

Each action primitive requires an object as an argument. For example, the "put salmon in the microwave" task includes a call to find(salmon), where find is an action primitive.

Comments in the code provide natural language summaries of the action sequences that follow them. They help break a high-level task into logical subtasks, such as "grab the salmon" and "put the salmon in the microwave".

Comments also help the language model keep track of the current goal and reduce the chance of incoherent, inconsistent, or repetitive output, much as a chain of thought generates intermediate results.

Assertions provide an environment feedback mechanism that ensures preconditions hold and enables error recovery when they do not. For example, before a grab action, the plan asserts that the agent is close to the salmon; otherwise the agent must first perform a find action.
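The assert-then-recover pattern can be sketched in plain Python. The code below is an illustrative approximation rather than ProgPrompt's own syntax: the `World` class, the `ensure` helper, and the `close_to` and `holding` sets are hypothetical names introduced for this example, and `find` is assumed to always succeed in bringing the agent close to the object.

```python
class World:
    """Minimal hypothetical state: what the agent is close to and what it holds."""

    def __init__(self):
        self.close_to = set()
        self.holding = set()
        self.log = []


def find(world, obj):
    world.close_to.add(obj)
    world.log.append(f"find({obj})")


def grab(world, obj):
    if obj not in world.close_to:
        raise RuntimeError(f"cannot grab {obj}: agent is not close to it")
    world.holding.add(obj)
    world.log.append(f"grab({obj})")


def ensure(condition, recover):
    """Check a precondition; run the recovery action only if it does not hold."""
    if not condition():
        recover()


def grab_salmon(world):
    # precondition: the agent must be close to the salmon before grabbing it
    ensure(lambda: "salmon" in world.close_to, lambda: find(world, "salmon"))
    grab(world, "salmon")


world = World()
grab_salmon(world)
print(world.log)
```

If the agent is already close to the salmon, the recovery action is never triggered and only the grab is executed, so the plan adapts to the current state without being rewritten.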

2. Constructing the programming language prompt

The prompt needs to give the language model information about the environment and the main actions, including observations, action primitives, and examples. Together these form a Pythonic prompt for the language model to complete.

Then, the language model predicts the plan for the given task.

In the "microwave salmon" task, a reasonable first step for an LLM to generate is to take out the salmon, but the agent responsible for executing the plan may not have such an action primitive.

To let the language model know the agent's action primitives, they are imported through import statements in the prompt, which also limits the output to functions available in the current environment.

To change the agent's action space, you only need to update the list of imported functions.

The variable objects provides all available objects in the environment as a list of strings.

The prompt also includes a number of fully executable program plans as examples. Each example task, such as throw_away_lime, demonstrates how to complete a given task using the available actions and objects in a given environment.
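Putting these pieces together, the prompt can be assembled as ordinary text. The following is a minimal sketch under stated assumptions: the `build_prompt` helper, the module name `actions`, the `garbagecan` object, and the body of the `throw_away_lime` example are made up for illustration and are not taken from the ProgPrompt release.

```python
def build_prompt(actions, objects, examples, task_name):
    """Assemble a Python-style prompt that ends where the model should continue."""
    header = f"from actions import {', '.join(actions)}"  # available primitives
    object_list = f"objects = {objects!r}"                # objects in the scene
    signature = f"def {task_name}():"                     # left open for completion
    return "\n\n".join([header, object_list, *examples, signature]) + "\n"


example_plan = '''def throw_away_lime():
    # 0: find and grab the lime
    find('lime')
    grab('lime')
    # 1: put it in the garbage can
    find('garbagecan')
    putin('lime', 'garbagecan')'''

prompt = build_prompt(
    actions=["find", "grab", "putin", "open", "close"],
    objects=["lime", "garbagecan", "salmon", "microwave"],
    examples=[example_plan],
    task_name="microwave_salmon",
)
print(prompt)
```

Changing the agent's capabilities then amounts to passing a different `actions` list, and a new scene only requires a different `objects` list.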

3. Generation and execution of the task plan

Given a task, the plan is inferred entirely by the language model from the ProgPrompt prompt, and the generated plan can then be executed on a virtual agent or a physical robot system. An interpreter is required to execute each action command against the environment.

During execution, assertion checks are performed in a closed-loop manner and feedback is provided based on the current environment state.
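An interpreter of this kind can be approximated in a few lines. The sketch below is hypothetical and deliberately simplified: it handles only lines of the form `name('arg', ...)`, ignores assertions, and skips any call that is not among the agent's primitives, such as the `take_out` step discussed earlier. The `run_plan` function and the `trace` list are names chosen for this example.

```python
import re

# Matches a single call such as find('salmon') or putin('salmon', 'microwave').
CALL = re.compile(r"^\s*(\w+)\((.*)\)\s*$")


def run_plan(plan_text, primitives):
    """Execute each supported call line; return (executed, skipped) line lists."""
    executed, skipped = [], []
    for line in plan_text.splitlines():
        stripped = line.strip()
        if not stripped or stripped.startswith("#") or stripped.startswith("def "):
            continue  # blank lines, comments, and the header carry no action
        match = CALL.match(stripped)
        if match is None or match.group(1) not in primitives:
            skipped.append(stripped)  # not an action this agent supports
            continue
        args = re.findall(r"'([^']*)'", match.group(2))
        primitives[match.group(1)](*args)
        executed.append(stripped)
    return executed, skipped


trace = []
primitives = {
    "find": lambda obj: trace.append(("find", obj)),
    "grab": lambda obj: trace.append(("grab", obj)),
    "putin": lambda obj, dest: trace.append(("putin", obj, dest)),
}

plan = """def microwave_salmon():
    # 0: get the salmon
    find('salmon')
    grab('salmon')
    take_out('salmon')
    putin('salmon', 'microwave')"""

executed, skipped = run_plan(plan, primitives)
print(executed)
print(skipped)
```

Dispatching through an explicit table of primitives, rather than evaluating the generated text directly, keeps the executed actions limited to what the agent can actually do.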

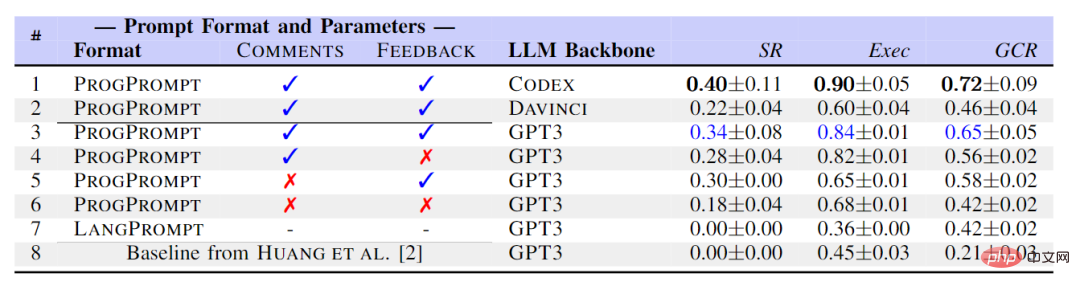

In the experiments, the researchers evaluated the method on the VirtualHome (VH) simulation platform.

The state in VH consists of a set of objects and their properties, such as the salmon being inside the microwave oven (in) or the agent being close to an object (agent_close_to).

The action space includes grab, putin, putback, walk, find, open, close, and others.

In total, three VH environments were used, each containing 115 distinct objects. The researchers created a dataset of 70 household tasks phrased at a high level of abstraction, with commands such as "microwave salmon", and created a ground-truth action sequence for each.

The generated programs were evaluated in VirtualHome using three metrics: success rate (SR), goal condition recall (GCR), and executability (Exec). The results show that ProgPrompt is significantly better than the baseline and LangPrompt. The table also shows how each feature improves performance.
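The article names the three metrics without defining them. The sketch below shows one plausible, simplified reading, and every definition in it is an assumption rather than the paper's exact formula: Exec is taken as the share of planned actions the environment can run, GCR as the share of goal conditions present in the final state, and success as meeting all goal conditions. The example states and action names are hypothetical.

```python
def executability(plan_actions, executable_actions):
    """Assumed Exec: fraction of planned actions the environment can run."""
    if not plan_actions:
        return 0.0
    return sum(a in executable_actions for a in plan_actions) / len(plan_actions)


def goal_condition_recall(goal_conditions, final_state):
    """Assumed GCR: fraction of goal conditions found in the final state."""
    goal = set(goal_conditions)
    return len(goal & set(final_state)) / len(goal)


def success(goal_conditions, final_state):
    """Assumed per-task success: 1.0 only if every goal condition holds."""
    return float(set(goal_conditions) <= set(final_state))


goal = {("salmon", "in", "microwave"), ("microwave", "closed")}
final = {("salmon", "in", "microwave"), ("microwave", "open")}

exec_score = executability(
    ["find", "grab", "take_out", "putin"], {"find", "grab", "putin"}
)
gcr_score = goal_condition_recall(goal, final)
sr_score = success(goal, final)
print(exec_score, gcr_score, sr_score)
```

Under these assumed definitions, a plan can be mostly executable and reach part of the goal while still counting as a failure, which is why the three numbers are reported separately.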

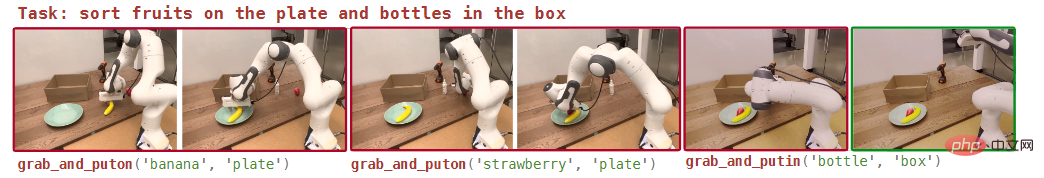

The researchers also ran real-world experiments using a Franka-Emika Panda robot with a parallel-jaw gripper, assuming that a pick-and-place policy is available.

This policy takes two point clouds, of the target object and the target container, as input and performs a pick-and-place operation to place the object on or inside the container.

The system uses ViLD, an open-vocabulary object detection model, to identify and segment objects in the scene and to build the list of available objects in the prompt.

Unlike in the virtual environment, the object list here is a local variable of each planning function, which allows more flexibility to adapt to new objects.

The plan output by the language model contains function calls in the form of grab and putin.

Due to real-world uncertainties, the assertion-based closed-loop option was not implemented in the experimental setup.

In the sorting task, the robot was able to identify the banana and strawberry as fruits and generate plan steps to place them on the plate and put the bottle in the box.
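A plan for this sorting task might look roughly like the sketch below. It is a hypothetical reconstruction, not the output reported by the researchers: the function name `sort_fruits`, the exact object names, and the way `grab` and `putin` are passed in as callables are assumptions made so the example is self-contained. It does reflect two points from the text: the object list is local to the plan function, and the body consists of `grab` and `putin` calls without assertions.

```python
def sort_fruits(grab, putin):
    # Local object list, as it might be filled in from the detector's output.
    # It tells the language model what exists; the calls below do not read it.
    objects = ["banana", "strawberry", "bottle", "plate", "box"]
    # 0: put the banana on the plate
    grab("banana")
    putin("banana", "plate")
    # 1: put the strawberry on the plate
    grab("strawberry")
    putin("strawberry", "plate")
    # 2: put the bottle in the box
    grab("bottle")
    putin("bottle", "box")


calls = []
sort_fruits(
    grab=lambda obj: calls.append(("grab", obj)),
    putin=lambda obj, dest: calls.append(("putin", obj, dest)),
)
print(calls)
```

On a real system, `grab` and `putin` would be backed by the pick-and-place policy instead of the recording stubs used here.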
