New work by Jeff Dean and others: looking at language models from another angle, some abilities cannot be discovered until the scale is large enough

In recent years, language models have had a revolutionary impact on natural language processing (NLP). Scaling up a language model, for example by increasing its parameter count, is known to improve performance and sample efficiency on a range of downstream NLP tasks. In many cases the effect of scale on performance can be predicted by scaling laws, and most research to date has focused on these predictable phenomena.

In contrast, 16 researchers including Jeff Dean and Percy Liang collaborated on the paper "Emergent Abilities of Large Language Models", which discusses an unpredictable phenomenon in large models that the authors call the emergent abilities of large language models. An ability is emergent if it is not present in smaller models but is present in larger ones.

Emergence as an idea has long been discussed in fields such as physics, biology, and computer science. The paper starts from a general definition of emergence, adapted from Steinhardt's work and rooted in "More Is Different", a 1972 article by the Nobel Prize-winning physicist Philip Anderson.

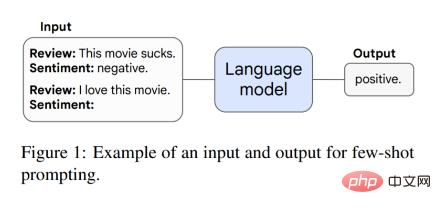

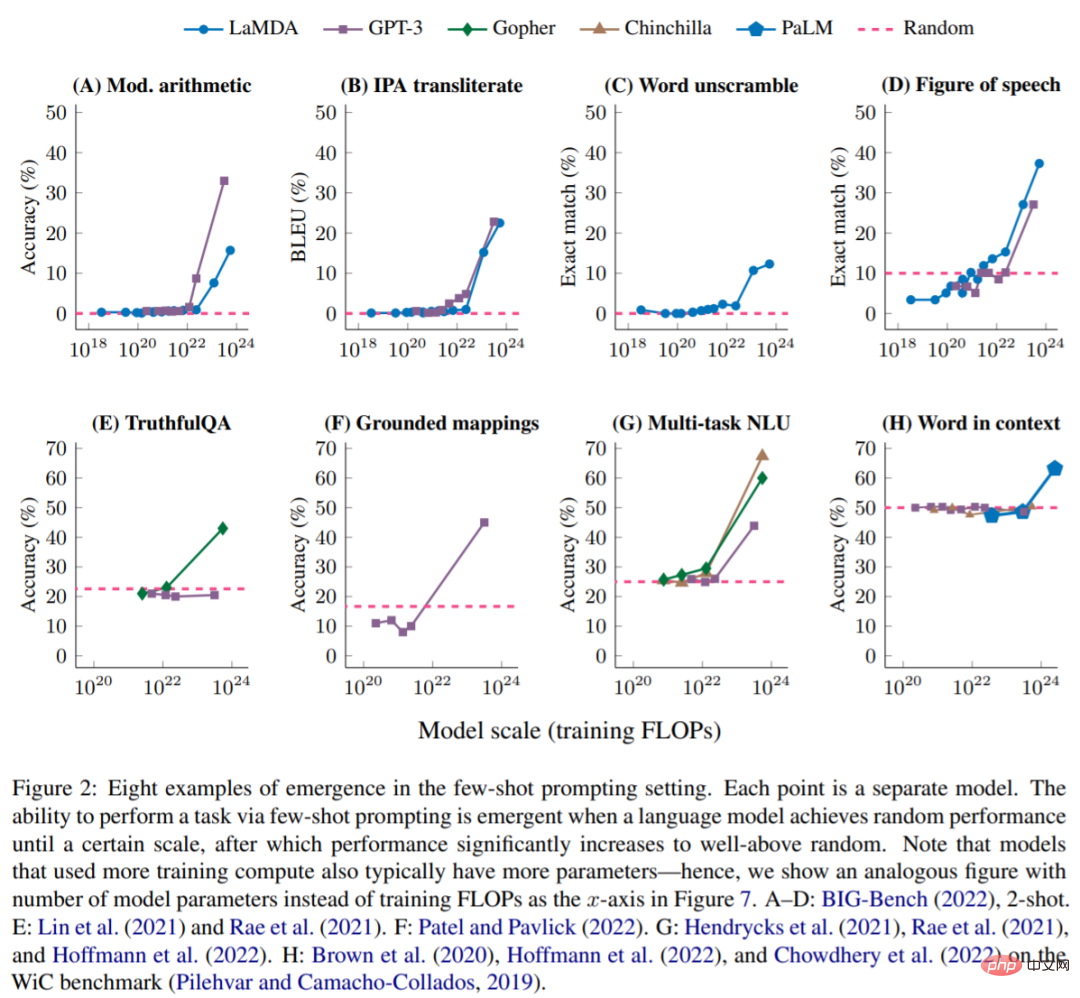

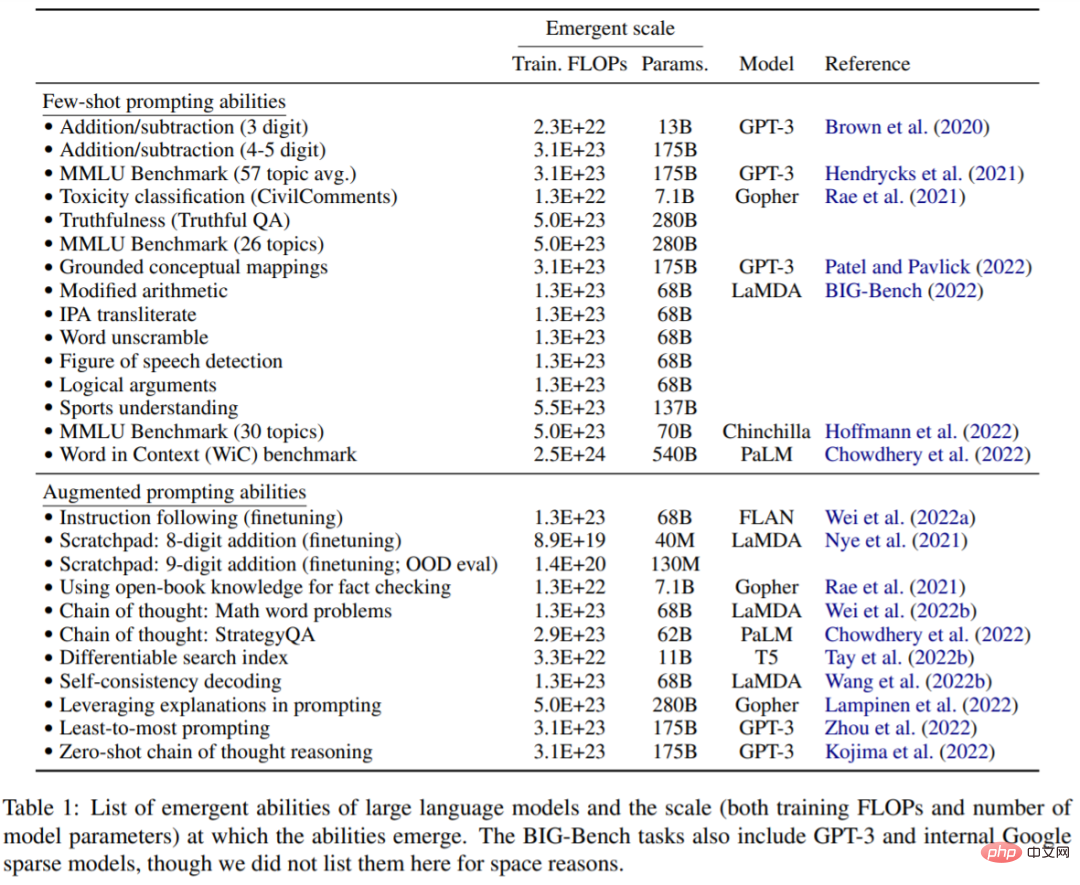

The paper explores emergence with respect to model scale, as measured by training compute and number of model parameters. Specifically, it defines emergent abilities of large language models as abilities that are not present in smaller-scale models but are present in large-scale models; such abilities therefore cannot be predicted by simply extrapolating the performance improvements of smaller-scale models. The study surveys emergent abilities observed across a range of prior work and groups them into settings such as few-shot prompting and augmented prompting.
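One way to make this definition concrete is to look for the scale at which performance first leaves the random-guessing level and stays above it. The following is a minimal sketch under stated assumptions: the function name `emergence_scale`, the `margin` threshold, and the data points are all hypothetical and chosen for illustration; they are not taken from the paper.

```python
from typing import Optional, Sequence, Tuple


def emergence_scale(
    points: Sequence[Tuple[float, float]],
    random_baseline: float,
    margin: float = 0.05,
) -> Optional[float]:
    """Return the smallest scale from which accuracy stays above chance.

    points: (training_flops, accuracy) pairs, in any order.
    random_baseline: accuracy expected from random guessing.
    margin: how far above chance counts as "present" (an assumed threshold).
    """
    threshold = random_baseline + margin
    onset = None
    for flops, accuracy in sorted(points):
        if accuracy > threshold:
            if onset is None:
                onset = flops
        else:
            onset = None  # fell back to chance level, so reset the onset
    return onset


# Made-up numbers for illustration only, not results from the paper.
illustrative_curve = [
    (1e20, 0.25),
    (1e21, 0.26),
    (1e22, 0.24),
    (1e23, 0.41),
    (1e24, 0.58),
]
print(emergence_scale(illustrative_curve, random_baseline=0.25))
```

On a curve like this, fitting a trend to the first three points would predict roughly chance-level accuracy at every scale, which is why such a jump cannot be found by extrapolation alone.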

These emergent abilities motivate future research into why such abilities are acquired and whether further scaling will yield more of them, and they underline the importance of that research.

Although few-shot prompting is currently the most common way to interact with large language models, recent work has proposed several other prompting and fine-tuning strategies to further augment their abilities. The paper also considers a technique to be an emergent ability if it shows no improvement, or is harmful, until it is applied to a sufficiently large model.

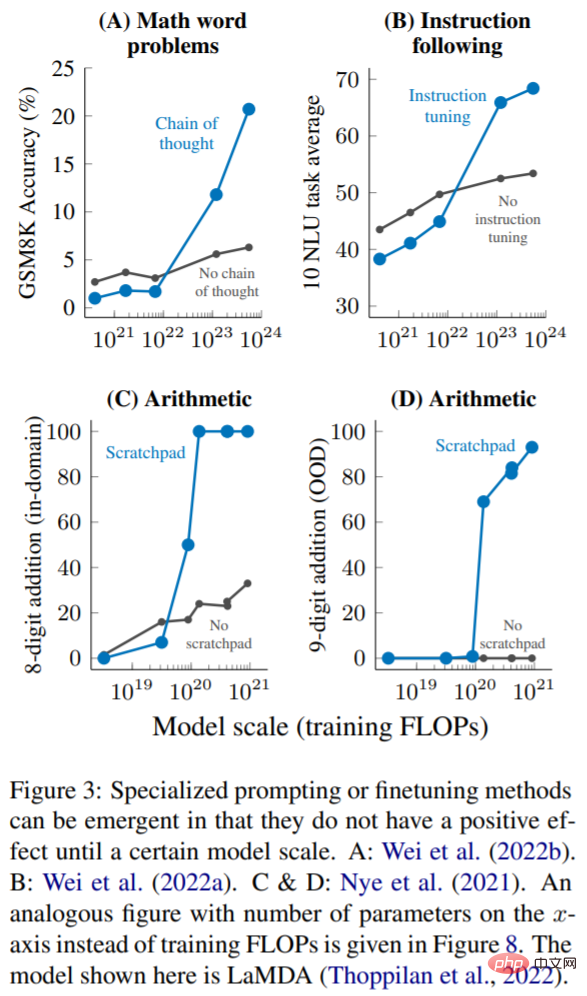

Multi-step reasoning: Reasoning tasks, especially those involving multiple steps, have long been a major challenge for language models and NLP models in general. A recent prompting strategy called chain-of-thought prompting enables language models to solve such problems by guiding them to generate a series of intermediate steps before giving a final answer. As shown in Figure 3A, chain-of-thought prompting only surpasses standard prompting without intermediate steps when scaled to 10^23 training FLOPs (~100B parameters).
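The difference between the two prompting styles comes down to whether the few-shot exemplars include intermediate steps. The sketch below builds both kinds of prompt from the same exemplar; the `Exemplar` type, the `build_prompt` helper, the "Q:/A:" layout, and the sample question are assumptions made for illustration, not the exact prompts used in the paper.

```python
from typing import List, NamedTuple


class Exemplar(NamedTuple):
    question: str
    reasoning: str  # intermediate steps, used only for chain-of-thought
    answer: str


def build_prompt(exemplars: List[Exemplar], question: str, chain_of_thought: bool) -> str:
    """Build a few-shot prompt, with or without intermediate reasoning steps."""
    blocks = []
    for ex in exemplars:
        if chain_of_thought:
            completion = f"{ex.reasoning} The answer is {ex.answer}."
        else:
            completion = f"The answer is {ex.answer}."
        blocks.append(f"Q: {ex.question}\nA: {completion}")
    # The final question is left open for the model to complete.
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


# A hypothetical exemplar written for this sketch.
demo = [
    Exemplar(
        question="A shelf holds 3 boxes with 4 books each. How many books are there?",
        reasoning="There are 3 boxes and each has 4 books, so 3 * 4 = 12.",
        answer="12",
    )
]
new_question = "A crate holds 5 bags with 6 apples each. How many apples are there?"

standard_prompt = build_prompt(demo, new_question, chain_of_thought=False)
cot_prompt = build_prompt(demo, new_question, chain_of_thought=True)
print(cot_prompt)
```

Either string would then be sent to a language model; the finding above is that the chain-of-thought variant only starts to outperform the standard one at large scale.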

Instruction following: As shown in Figure 3B, Wei et al. found that instruction fine-tuning hurts performance for models of 7·10^21 training FLOPs (8B parameters) or smaller, and only improves performance when scaled to 10^23 training FLOPs (~100B parameters).

Program execution: As shown in Figure 3C, in the in-domain evaluation of 8-digit addition, using a scratchpad only helps for models of ∼9·10^19 training FLOPs (40M parameters) or larger. Figure 3D shows that these models can also generalize to out-of-domain 9-digit addition, an ability that emerges at ∼1.3·10^20 training FLOPs (100M parameters).
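The scratchpad idea for the addition task can be sketched as a trace that writes out each column of a long addition, with its carry, before stating the final sum. The function name `addition_scratchpad` and the line format below are hypothetical and meant only to show the kind of intermediate output a model would be trained to produce; they are not the format used in the original scratchpad work.

```python
def addition_scratchpad(a: int, b: int) -> str:
    """Write out a digit-by-digit addition trace ending in the final sum."""
    if a < 0 or b < 0:
        raise ValueError("this sketch handles non-negative integers only")
    # Reverse the decimal strings so index 0 is the least significant digit.
    digits_a = str(a)[::-1]
    digits_b = str(b)[::-1]
    lines = [f"Input: {a} + {b}"]
    carry = 0
    result = ""
    for i in range(max(len(digits_a), len(digits_b))):
        da = int(digits_a[i]) if i < len(digits_a) else 0
        db = int(digits_b[i]) if i < len(digits_b) else 0
        total = da + db + carry
        lines.append(
            f"{da} + {db} + carry {carry} = {total}, "
            f"write {total % 10}, carry {total // 10}"
        )
        result = str(total % 10) + result
        carry = total // 10
    if carry:
        result = str(carry) + result
    lines.append(f"Target: {result}")
    return "\n".join(lines)


print(addition_scratchpad(29, 57))
```

Each line of the trace depends only on two digits and a carry, which is the intuition for why writing the steps out can help a model that struggles to produce the full sum in one step.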

The paper discusses emergent abilities of language models, for which meaningful performance has so far been observed only at a certain computational scale. Emergent abilities can span a variety of language models, task types, and experimental scenarios. Their existence implies that additional scaling could further expand the range of capabilities of language models. These abilities are a recently discovered outcome of scaling up language models; how they emerge, and whether more scaling will enable further emergent abilities, may be important future research directions for NLP.

For more information, please refer to the original paper.
