Google AI year-end summary No. 6: How is the development of Google robots without Boston Dynamics?


A Boston Dynamics backflip showed us the seemingly endless possibilities of human-made robots.

Although Google has sold Boston Dynamics, it has continued along its own path of robot development, not only approaching humans in "body" but also, in "intelligence", pursuing a better understanding of human instructions.

The Google Research year-end summary series "Google Research, 2022 & beyond", led by Jeff Dean, has reached its sixth issue. The theme of this issue is "Robotics", and it was written by senior product manager Kendra Byrne and Google robotics research scientist Jie Tan.

In our lifetime, we will definitely see robotic technology taking part in daily life and helping to improve human health, productivity and quality of life. Before robotic technologies can be widely used for everyday practical work in human-centered spaces (i.e. spaces designed for people, not machines), we need to ensure that they can help people safely.

In 2022, Google focused on the challenge of making robots more helpful to humans:

- Let robots and humans communicate more effectively and naturally;

- Enable robots to understand and apply common sense knowledge in the real world;

- Expand the number of low-level skills robots need to perform tasks effectively in unstructured environments.

When LLMs meet robots

One feature of large language models (LLM) is the ability to encode descriptions and context into a format that "both humans and machines can understand."

When an LLM is applied to robotics, users can assign tasks to a robot using natural language instructions alone. Combined with vision models and robot learning methods, the LLM provides a way to understand the context of a user's request and to plan the actions needed to complete it.

One of the basic methods is to use LLM to prompt other pre-trained models to obtain information to build context of what is happening in the scene and make predictions for multi-modal tasks. The whole process is similar to the Socratic teaching method. The teacher asks students questions and guides them to answer them through a rational thinking process.

In "Socratic Models", the researchers demonstrated that this approach can achieve state-of-the-art performance in zero-shot image captioning and video-to-text retrieval, and can also support new capabilities, such as answering free-form questions about videos, predicting future activity, multimodal assistive dialogue, and robot perception and planning.

Paper link: https://arxiv.org/abs/2204.00598

In the article "Towards Helpful Robots: Grounding Language in Robotic Affordances", researchers collaborated with Everyday Robots to ground the PaLM language model in a robot affordance model in order to plan long-horizon tasks.

Blog link: https://ai.googleblog.com/2022/08/towards-helpful-robots-grounding.html

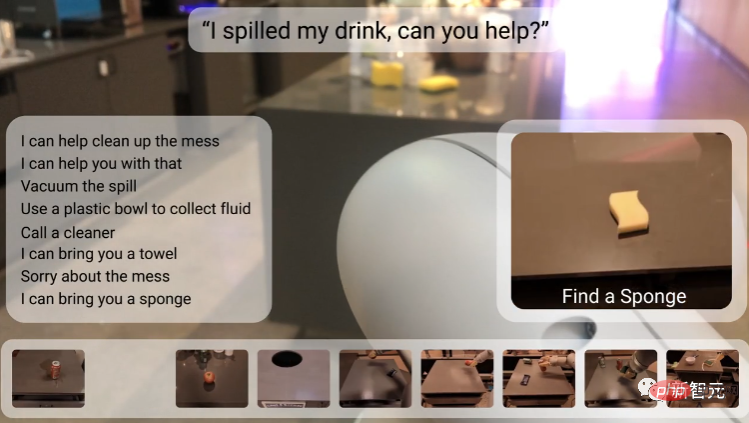

In previous machine learning approaches, a robot could only accept short hard-coded commands such as "pick up the sponge" and had difficulty reasoning about the steps required to complete a task. An abstract goal, such as "Can you help clean up this spill?", was even harder to handle.

The researchers chose to use an LLM to predict the sequence of steps to complete a long-term task, along with an affordance model that represents the skills a robot can actually accomplish in a given situation.

The value function of a reinforcement learning model can be used to build the affordance model, an abstract representation of the actions a robot can perform in different states. This connects long-horizon real-world tasks, such as "tidy up the bedroom", with the short-horizon skills needed to complete them, such as picking, placing and arranging items correctly.

Paper link: https://arxiv.org/abs/2111.03189
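The pairing of a language model with an affordance model described above can be illustrated with a small scoring rule. This is only a sketch: it assumes the two signals are combined by multiplication, and the skill names, the numbers, and the `choose_skill` helper are hypothetical rather than taken from the papers.

```python
from typing import Dict


def choose_skill(llm_scores: Dict[str, float], affordances: Dict[str, float]) -> str:
    """Return the skill with the highest (LLM score x affordance value).

    llm_scores:  how useful the language model thinks each skill is for the instruction.
    affordances: how likely each skill is to succeed in the current state.
    """
    combined = {
        skill: llm_scores[skill] * affordances.get(skill, 0.0)
        for skill in llm_scores
    }
    return max(combined, key=combined.get)


# Hypothetical numbers for the instruction "clean up the spill".
# The sponge is the most relevant object, but it is not reachable yet.
llm_scores = {"pick up the sponge": 0.6, "pick up the apple": 0.1, "go to the table": 0.3}
affordances = {"pick up the sponge": 0.2, "pick up the apple": 0.9, "go to the table": 0.8}

best = choose_skill(llm_scores, affordances)
print(best)
```

With these made-up numbers the planner first walks to the table, because grabbing the sponge is relevant but not yet feasible from where the robot stands.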

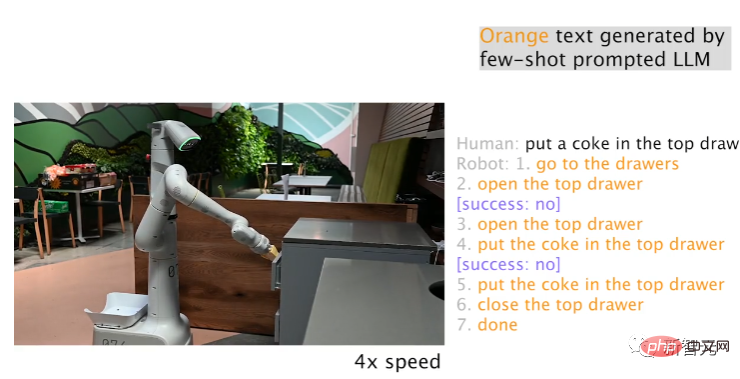

Having both an LLM and an affordance model does not guarantee that the robot will complete a task successfully. Inner Monologue closes the loop in LLM-based task planning: other sources of information, such as human feedback or scene understanding, are used to detect when the robot has failed to complete a task correctly.

Paper link: https://arxiv.org/abs/2207.05608

Using a robot from Everyday Robots, researchers found that an LLM can effectively re-plan when the current or a previous plan step fails, so the robot can recover from failure and complete complex tasks such as "put a Coke in the top drawer."
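A closed planning loop of this kind can be sketched in a few lines. The sketch below is an assumption-laden toy: `toy_planner` stands in for the LLM, `toy_robot` stands in for the real robot, and both are hypothetical. The point is only that every success or failure is written back into the text history the planner sees before it chooses the next step.

```python
from typing import Callable, List


def run_with_feedback(
    plan_next: Callable[[List[str]], str],
    execute: Callable[[str], bool],
    max_steps: int = 10,
) -> List[str]:
    """Closed-loop planning: each outcome is appended to the history
    that the planner reads before proposing the next step."""
    history: List[str] = []
    for _ in range(max_steps):
        step = plan_next(history)
        if step == "done":
            break
        ok = execute(step)
        history.append(f"{step}: {'success' if ok else 'failed'}")
    return history


# Hypothetical stand-in for an LLM planner: it follows a fixed plan and
# repeats a step until the feedback reports success.
PLAN = ["open top drawer", "pick up coke", "place coke in drawer"]


def toy_planner(history: List[str]) -> str:
    succeeded = [entry for entry in history if entry.endswith("success")]
    return PLAN[len(succeeded)] if len(succeeded) < len(PLAN) else "done"


# Hypothetical stand-in for the robot: the first grasp attempt fails.
attempts = {"pick up coke": 0}


def toy_robot(step: str) -> bool:
    if step == "pick up coke":
        attempts[step] += 1
        return attempts[step] > 1
    return True


log = run_with_feedback(toy_planner, toy_robot)
for entry in log:
    print(entry)
```

The failed grasp shows up in the history, the planner proposes the same step again, and the task still finishes.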

A notable capability of LLM-based task planning is that the robot can react to changes to a high-level goal in the middle of a task. For example, the user can tell the robot something that changes the actions it has already planned, either by offering a quick correction or by redirecting it to another task. This is especially useful for letting users interactively control and customize robot tasks.

While natural language makes it easier for people to specify and modify robot tasks, there is also the challenge of reacting to human descriptions in real time.

Researchers proposed a large-scale imitation learning framework for producing real-time, open-vocabulary, language-conditioned robots capable of handling more than 87,000 unique instructions with an estimated average success rate of 93.5%. As part of this project, Google also released Language-Table, the largest language-annotated robot dataset.

Paper link: https://arxiv.org/pdf/2210.06407.pdf

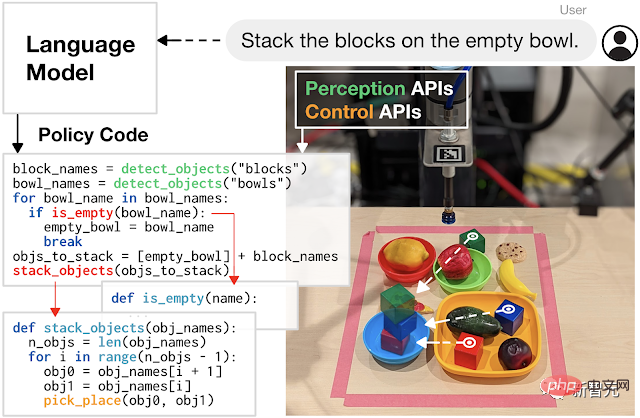

Using LLMs to write code that controls robot movements is also a promising research direction.

The code-writing approach developed by the researchers demonstrates the potential to handle more complex tasks: robots can autonomously generate new code that recombines API calls, synthesizes new functions, and expresses feedback loops, assembling new behaviors at runtime.

Paper link: https://arxiv.org/abs/2209.07753
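To make the idea of recombining API calls concrete, here is a minimal sketch. Everything in it is an assumption for illustration: the primitives (`get_obj_pos`, `move_to`, `grasp`, `release`) are hypothetical, and the "generated" program is a hard-coded string rather than the output of a real model.

```python
from typing import Dict, List, Tuple

# Hypothetical perception and control primitives exposed to the language model.
positions: Dict[str, Tuple[float, float]] = {
    "red block": (0.1, 0.2),
    "blue bowl": (0.5, 0.4),
}
actions: List[str] = []  # records what the "robot" was asked to do


def get_obj_pos(name: str) -> Tuple[float, float]:
    return positions[name]


def move_to(xy: Tuple[float, float]) -> None:
    actions.append(f"move_to{xy}")


def grasp() -> None:
    actions.append("grasp")


def release() -> None:
    actions.append("release")


# Text a language model might return for "put the red block in the blue bowl".
# It is hard-coded here; no model is called.
generated_code = '''
def put_first_on_second(first, second):
    move_to(get_obj_pos(first))
    grasp()
    move_to(get_obj_pos(second))
    release()

put_first_on_second("red block", "blue bowl")
'''

# Execute the generated program in a namespace that contains only the primitives.
# Emptying __builtins__ limits what the code can reach, but it is not a real sandbox.
namespace = {
    "__builtins__": {},
    "get_obj_pos": get_obj_pos,
    "move_to": move_to,
    "grasp": grasp,
    "release": release,
}
exec(generated_code, namespace)
print(actions)
```

The generated program defines a new function out of existing primitives and then calls it, which is the sense in which new behavior is synthesized at runtime. A single namespace dictionary is passed to `exec` so that the newly defined function can see the primitives when it runs.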

Transforming robot learning into a scalable data problem

Large language and multimodal models can help robots understand the environment in which they operate, such as what is happening in the scene and what the robot should do. But robots also need low-level physical skills to complete tasks in the physical world, such as picking up objects and placing them precisely.

While humans often take these physical skills for granted, being able to perform various actions without thinking, they present a problem for robots.

For example, when a robot picks up an object, it needs to sense and understand the environment, reason about the spatial relationship and contact dynamics between the gripper and the object, accurately drive a high-degree-of-freedom arm, and apply the right amount of force to grasp the object stably without damaging it.

The difficulty in learning these low-level skills is known as Moravec's Paradox: Reasoning requires very little computation, but sensorimotor and perceptual skills require massive computational resources.

Inspired by the success of LLMs, the researchers adopted a data-driven approach that turns the problem of learning low-level physical skills into a scalable data problem: LLMs have shown that the generalization and performance of large Transformer models increase as the volume of data increases.

Paper link: https://robotics-transformer.github.io/assets/rt1.pdf

The researchers proposed the Robotics Transformer-1 (RT-1) model and trained a robot manipulation policy on a large-scale real-world robot dataset of 130,000 episodes, collected with 13 robots from Everyday Robots and covering more than 700 tasks. The results show the same trend in robotics: increasing the size and diversity of the data improves the model's generalization to new tasks, environments, and objects.

Behind both language models and robot learning methods such as RT-1 are Transformer models trained on Internet-scale data. Unlike LLMs, however, robotics faces the challenges of multimodal representations of constantly changing environments and of limited compute.

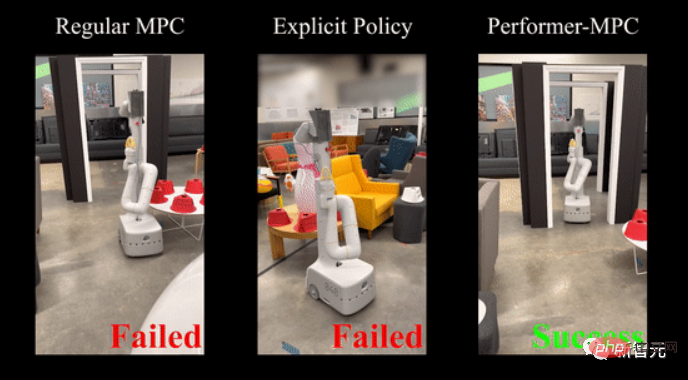

In 2020, Google proposed Performers, a method that improves the computational efficiency of Transformers and has implications for many applications, including robotics.

Recently, researchers extended this work and introduced a new class of implicit control policies that combine the benefits of imitation learning with the robust handling of system constraints from Model Predictive Control (MPC).

Paper link: https://performermpc.github.io/

Compared with a standard MPC policy, experimental results show an improvement of more than 40% in the robot reaching its goal and of more than 65% on social metrics when navigating around humans. Performer-MPC is an 8.3M-parameter model with a latency of only 8 milliseconds, which makes it feasible to deploy Transformers on robots.

Google's research team also demonstrated that data-driven methods are broadly applicable across different robotic platforms and environments for learning a wide range of tasks, including mobile manipulation, navigation, locomotion and table tennis. This points to a clear path for learning low-level robot skills: scalable data collection.

Unlike the abundant video and text data on the Internet, robotics data is extremely scarce and difficult to obtain, and methods to collect and effectively use rich data sets that represent real-world interactions are key to a data-driven approach.

Simulation is a fast, safe and easily parallelized option, but it is difficult to replicate the complete environment in simulation, especially the physical environment and human-robot interaction.

Paper link: https://arxiv.org/abs/2207.06572

In i-Sim2Real, researchers demonstrated a method that bootstraps from a simple model of human behavior and alternates between training in simulation and deploying in the real world, in order to resolve the mismatch between simulation and reality and learn to play table tennis against a human opponent. In each iteration, both the human behavior model and the policy are refined.

While simulation can assist in collecting data, collecting data in the real world is critical for fine-tuning policies trained in simulation or adapting existing policies to new environments.

During learning, a robot can easily fail and potentially damage itself and its surroundings, especially in the early stages when it is still exploring how to interact with the world. Training data therefore needs to be collected safely, so that the robot not only learns skills but can also recover autonomously from failures.

Paper link: https://arxiv.org/abs/2110.05457

The researchers proposed a safe RL framework that switches between a "learner policy", which is optimized to perform the desired task, and a "safe recovery policy", which prevents the robot from entering unsafe states. A reset policy is also trained so that the robot can recover from failures, for example by learning to stand up on its own after a fall.

Although robot data is scarce, there are many videos of humans performing different tasks. Robots and humans are built differently, of course, so the idea of robots learning from humans raises the problem of transferring learning across different embodiments.
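The switching idea can be sketched with a toy one-dimensional example. The state (a body tilt angle), the two policies, and the `safe_limit` threshold below are all hypothetical; the paper's actual switching criterion is not reproduced here.

```python
from typing import Callable, List

Policy = Callable[[float], float]


def select_action(tilt: float, learner: Policy, recovery: Policy, safe_limit: float) -> float:
    """Use the learner policy inside the safe region, otherwise hand control to recovery."""
    if abs(tilt) < safe_limit:
        return learner(tilt)
    return recovery(tilt)


# Hypothetical learner: an exploratory action that keeps increasing the tilt.
def learner_policy(tilt: float) -> float:
    return 0.2


# Hypothetical recovery policy: pushes the tilt back towards zero.
def recovery_policy(tilt: float) -> float:
    return -0.5 * tilt


tilt = 0.0
trace: List[float] = []
for _ in range(20):
    tilt += select_action(tilt, learner_policy, recovery_policy, safe_limit=0.5)
    trace.append(tilt)

print(max(abs(t) for t in trace))
```

Without the switch, this learner would drive the tilt up by 0.2 on every step. With it, the tilt never gets far beyond the limit, so exploration continues while the robot stays out of the states the recovery policy is meant to prevent.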

Paper link: https://arxiv.org/pdf/2106.03911.pdf

Researchers developed Cross-Embodiment Inverse Reinforcement Learning, which learns new tasks by observing humans. Rather than trying to replicate the task exactly as a human would perform it, the method learns the high-level task goal and summarizes that knowledge in the form of a reward function. This kind of demonstration learning could allow robots to learn skills by watching videos readily available on the internet.

Another direction is to improve the data efficiency of learning algorithms so that they no longer rely solely on scaling up data collection. The efficiency of RL methods is improved by incorporating prior information, including predictive information, adversarial motion priors, and guide policies.

Paper link: https://arxiv.org/abs/2210.10865

Further improvements come from a new structured dynamical systems architecture and from combining RL with trajectory optimization, supported by new solvers. This prior information helps ease exploration challenges, acts as a good regularizer, and significantly reduces the amount of data required.

In addition, the robotics team has invested heavily in more data-efficient imitation learning. Experiments show that a simple imitation learning method, BC-Z, can generalize zero-shot to new tasks not seen in training.

Paper link: https://arxiv.org/pdf/2210.02343.pdf

The team also introduced GoalsEye, an iterative imitation learning algorithm that combines learning from play with goal-conditioned behavior cloning for high-speed, high-precision table tennis.

Paper link: https://sites.google.com/view/goals-eye

On the theoretical side, researchers studied the stability of dynamical systems as a way to characterize the sample complexity of imitation learning, and the role of capturing failures and recoveries in demonstration data to better condition offline learning from small datasets.

Paper link: https://proceedings.mlr.press/v168/tu22a.html

Summary

Advances in large models in the field of artificial intelligence have driven a leap in robot learning capabilities.

Over the past year, the sense of context and sequence of events captured in LLMs has helped address long-horizon planning for robots and made it easier for robots to interact with people and complete tasks. Applying the Transformer architecture to robot learning also offers a scalable path toward learning robust and generalizable robot behaviors.

Google has pledged to continue to open source data sets to continue developing useful robots in the new year.
