This AI master understands Chinese, and the mountains and bright moon it paints are stunning! The Chinese-English bilingual AltDiffusion model is now open source


Recently, the large-model research team at the Zhiyuan Research Institute (BAAI) open sourced its latest bilingual AltDiffusion model, giving the Chinese-speaking world a strong boost for professional-grade AI text-to-image creation:

It supports advanced creation from long, detailed Chinese prompts. It needs no cultural translation, going straight from native Chinese to Chinese-style paintings that capture both form and spirit. It also lowers the barrier to entry: Chinese and English results are aligned, with striking visual quality on par with the original Stable Diffusion. It can fairly be called a world-class, Chinese-speaking AI painting master.

The innovative AltCLIP model is the cornerstone of this work; it strengthens the original CLIP model in three cross-language capabilities. Both AltDiffusion and AltCLIP are multilingual models. Chinese-English bilingual support is the first stage of the work, and the code and models have been open sourced.

AltDiffusion

https://github.com/FlagAI-Open/FlagAI/tree/master/examples/AltDiffusion

AltCLIP

https://github.com/FlagAI-Open/FlagAI/examples/AltCLIP

HuggingFace space trial address:

https://huggingface.co/spaces/BAAI/bilingual_stable_diffusion

Technical Report

https://arxiv.org/abs/2211.06679

Professional Chinese AltDiffusion

Fine painting from long prompts in a native Chinese style, meeting the high demands of Chinese AI creators

Thanks to the strong Chinese-English bilingual alignment of AltCLIP, AltDiffusion reaches a level of visual quality similar to Stable Diffusion. Its particular advantage is that it understands Chinese better and is better at Chinese painting, so it lives up to the expectations of professional Chinese AI text-to-image creators.

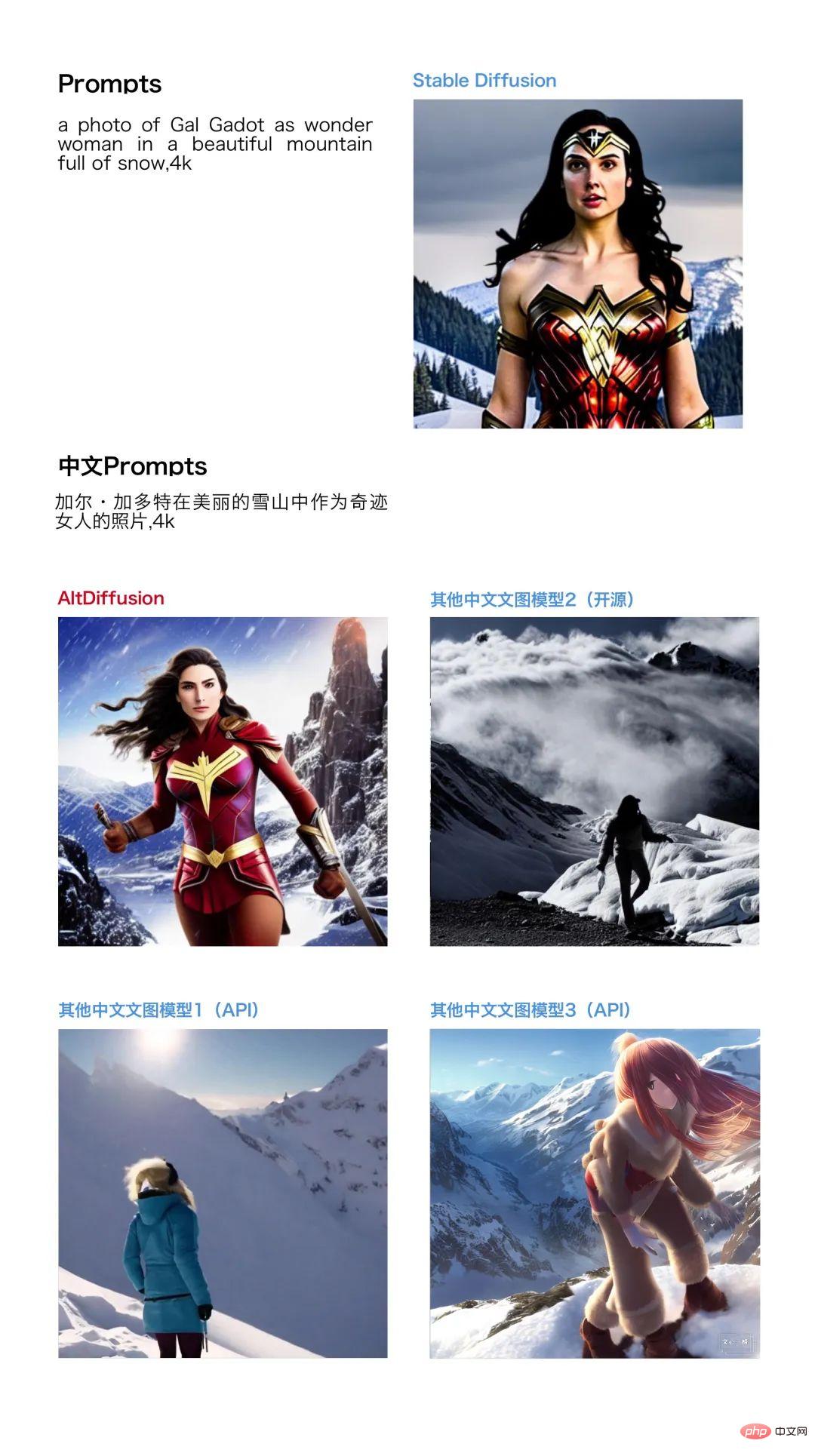

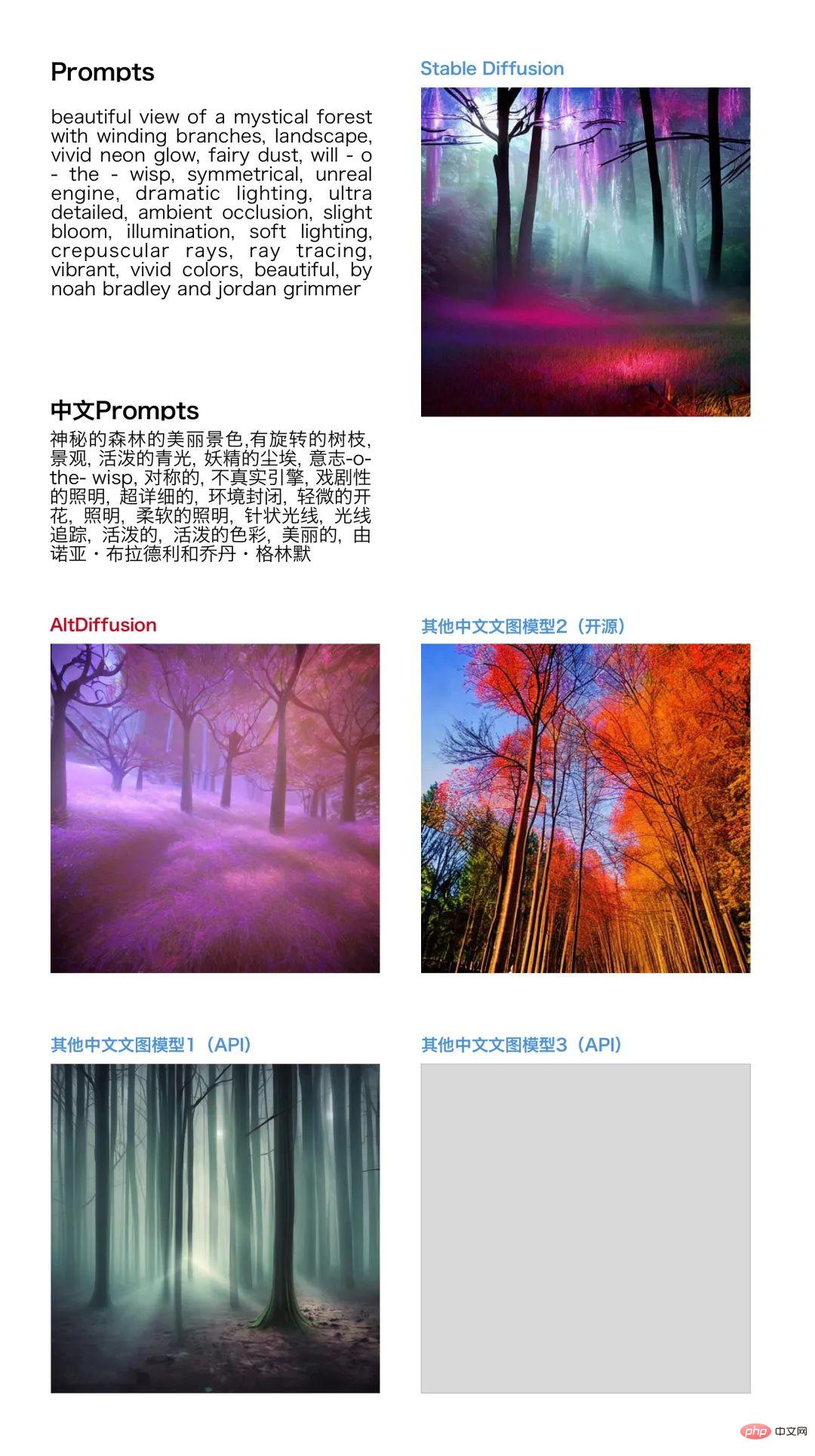

1. Long-prompt generation with no loss in image quality

Prompt length is a watershed test of a model's text-to-image ability. The longer the prompt, the harder it tests language understanding, image-text alignment, and cross-language ability. Given the same long prompts in Chinese and English, AltDiffusion is even more expressive in many generation cases: the composition is rich and engaging, and the details are rendered delicately and accurately.

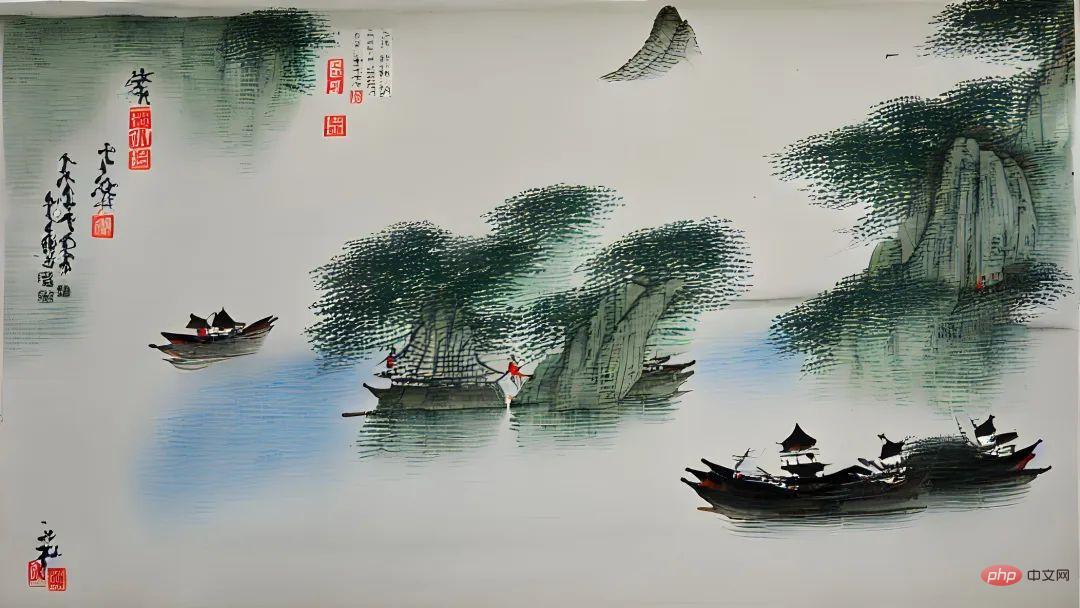

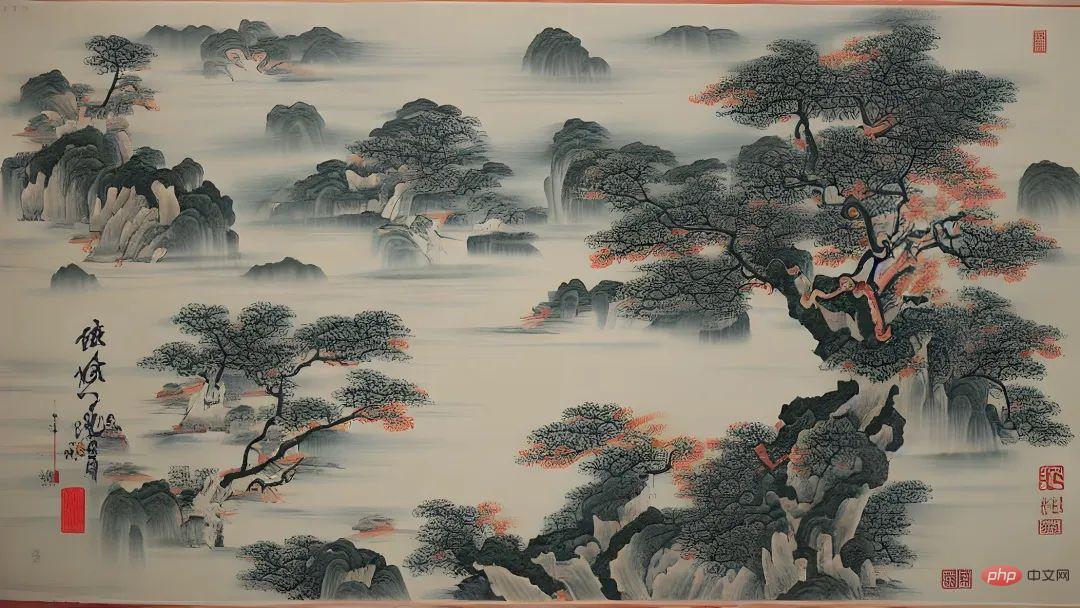

2. Better at understanding Chinese and better at Chinese painting

AltDiffusion understands Chinese better. It can capture meaning within the Chinese cultural context and grasp the creator's intent immediately. For example, for the description "The Grand Scene of the Tang Dynasty", it avoids going off-topic because of cultural misunderstanding.

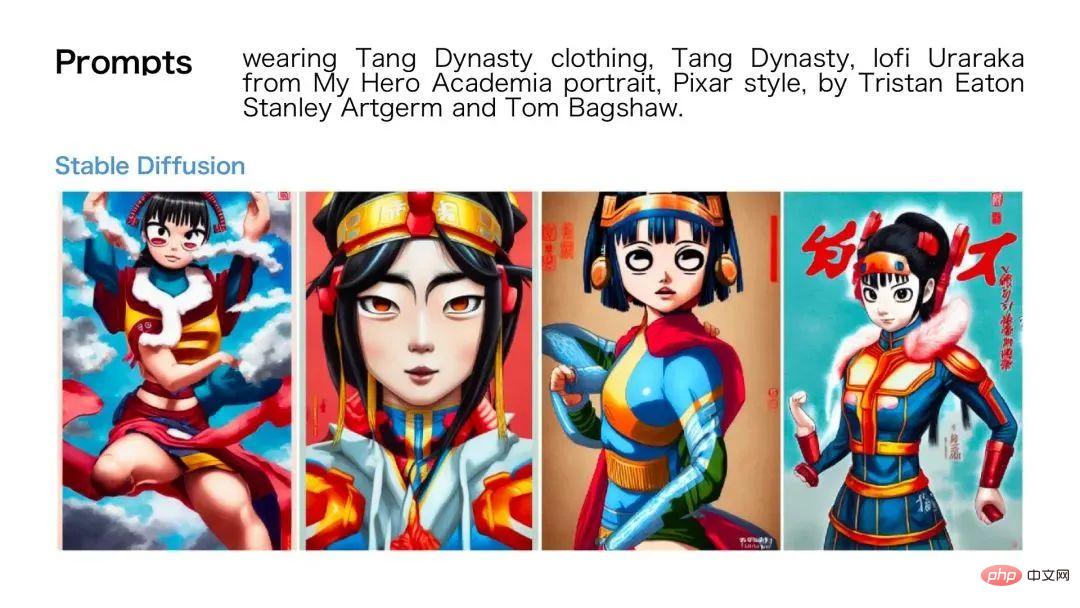

In particular, concepts that originate in Chinese culture are understood and expressed more accurately, avoiding absurd mix-ups between "Japanese style" and "Chinese style". For example, when prompts for a Tang-suit character style are given in Chinese and English, the difference from Stable Diffusion is clear at a glance:

When generating a specific style, it natively takes the Chinese cultural context as the default identity for the style. For example, for the prompt containing "ancient architecture" below, ancient Chinese architecture is generated by default, a creative style more in line with the identity of Chinese creators.

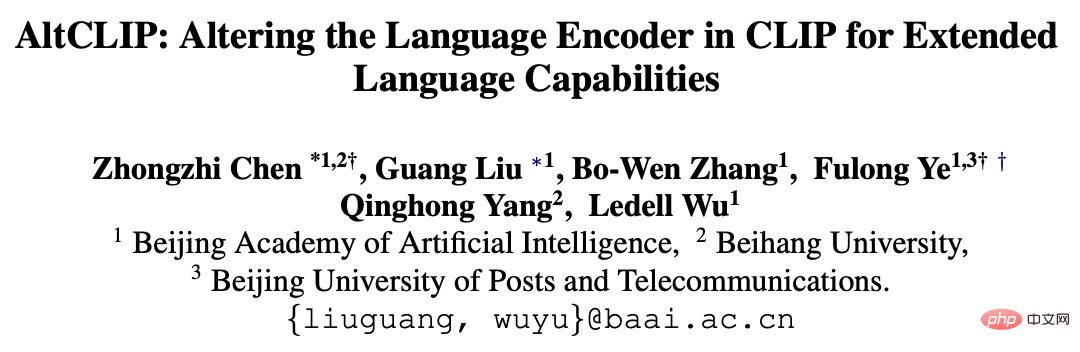

3. Chinese-English bilingual with aligned generation results

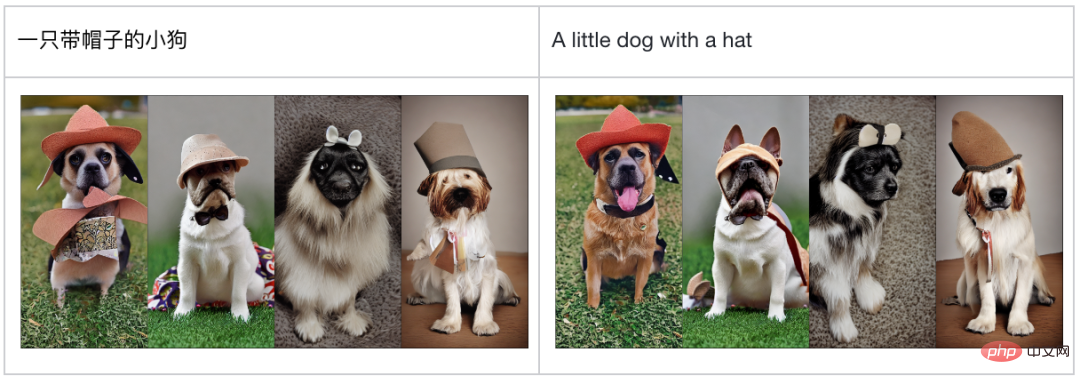

AltDiffusion is built on Stable Diffusion: the CLIP model in the original Stable Diffusion is replaced with AltCLIP, and the model is then further trained on Chinese and English image-text pairs. Thanks to AltCLIP's strong language alignment, AltDiffusion's results in English are very close to those of Stable Diffusion, and its Chinese and English outputs are consistent with each other.
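The encoder swap described here can be illustrated with a toy sketch. Everything in it is hypothetical: the `TextEncoder` protocol, `ToyDiffusion`, and the byte-based "encoders" are stand-ins for illustration, not FlagAI or Stable Diffusion APIs. The point is only that the image generator depends on the shape of the text embedding, so an encoder that produces embeddings of the same width can replace the original without touching the rest of the pipeline.

```python
from dataclasses import dataclass
from typing import List, Protocol

EMBED_DIM = 4  # toy width; real text encoders use hundreds of dimensions


class TextEncoder(Protocol):
    def encode(self, prompt: str) -> List[float]: ...


def _toy_embedding(prompt: str) -> List[float]:
    # Deterministic fake embedding: spread the UTF-8 bytes over EMBED_DIM slots.
    vec = [0.0] * EMBED_DIM
    for i, byte in enumerate(prompt.encode("utf-8")):
        vec[i % EMBED_DIM] += byte / 255.0
    return vec


class EnglishOnlyEncoder:
    """Hypothetical stand-in for the original text encoder.

    The toy rejects non-ASCII input purely to make the limitation visible.
    """

    def encode(self, prompt: str) -> List[float]:
        if not prompt.isascii():
            raise ValueError("this toy encoder only handles ASCII prompts")
        return _toy_embedding(prompt)


class BilingualEncoder:
    """Hypothetical stand-in for a bilingual text encoder such as AltCLIP's."""

    def encode(self, prompt: str) -> List[float]:
        return _toy_embedding(prompt)


@dataclass
class ToyDiffusion:
    """Stand-in for the image generator; it only ever sees the embedding."""

    text_encoder: TextEncoder

    def generate(self, prompt: str) -> str:
        cond = self.text_encoder.encode(prompt)
        if len(cond) != EMBED_DIM:
            raise ValueError("conditioning width does not match the generator")
        return f"<image conditioned on {len(cond)}-d embedding>"


pipe = ToyDiffusion(text_encoder=EnglishOnlyEncoder())
english_result = pipe.generate("a puppy in a hat")

# Swap only the text encoder; the generator itself is untouched.
pipe.text_encoder = BilingualEncoder()
chinese_result = pipe.generate("戴帽子的小狗")
```

In the real model the swap is followed by further training on bilingual image-text pairs, which this sketch does not attempt to show.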

For example, when the Chinese and English prompts for "puppy in a hat" are fed into AltDiffusion, the generated images are closely aligned and highly consistent:

When the modifier "Chinese" is added to the "boy" prompt, the original image of the little boy is precisely adjusted into a typically Chinese child, demonstrating excellent language understanding and accurate expression in language-controlled generation.

Connecting to the original Stable Diffusion ecosystem

Rich ecosystem tools and Prompts Book applications make it highly playable

Particularly worth mentioning is AltDiffusion's compatibility with the existing ecosystem:

All tools that support Stable Diffusion, such as Stable Diffusion WebUI and DreamBooth, can be applied to our Chinese-English bilingual diffusion model, offering a wealth of choices for Chinese AI creation:

1. Stable Diffusion WebUI

An excellent web tool for text-to-image generation and text-guided image editing. When we turn a night view of Peking University into Hogwarts (prompt: Hogwarts), a dreamy magical world appears in an instant.

2. DreamBooth

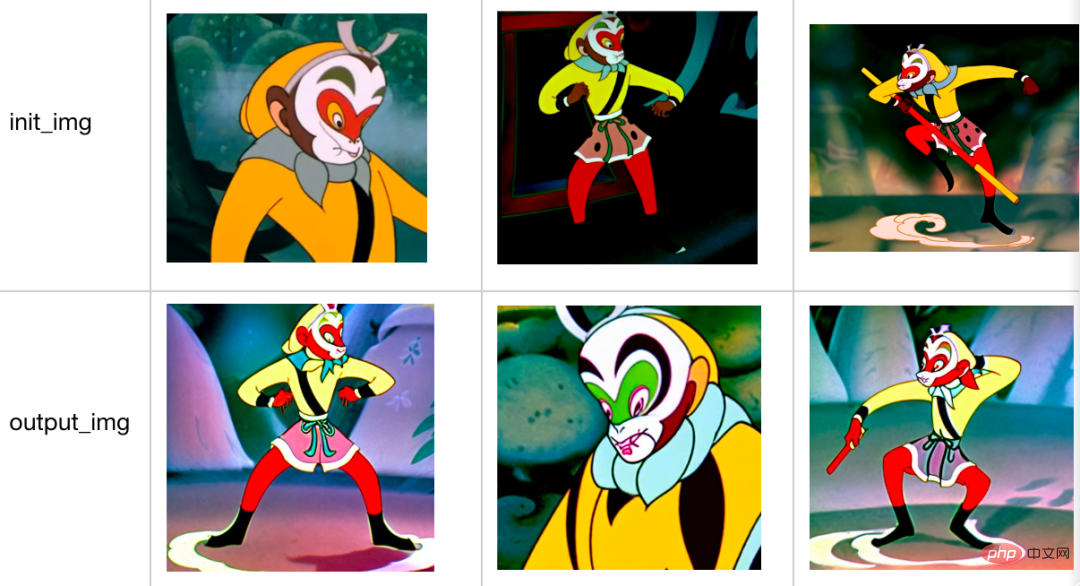

A tool for tuning the model on a small number of samples so that it generates a specific style. With it, a specific style, such as that of "Havoc in Heaven", can be generated on AltDiffusion from a small number of Chinese images.

3. Make full use of the community Stable Prompts Book

Prompts are very important for generative models. Community users have accumulated a rich set of example results through extensive experimentation with prompts. Almost all of this valuable prompt experience carries over to AltDiffusion users!

In addition, you can mix Chinese and English to combine some magical styles and elements, or keep exploring Chinese prompts that suit AltDiffusion.

4. Convenient fine-tuning for Chinese creators

The open-source AltDiffusion provides a foundation for Chinese generative models. Building on it, you can fine-tune the model with more Chinese data from specific domains, making it easier for Chinese creators to express themselves.

Based on the first bilingual AltCLIP

Three major cross-language capabilities comprehensively enhanced: Chinese and English aligned, stronger Chinese, and an extremely low barrier to entry

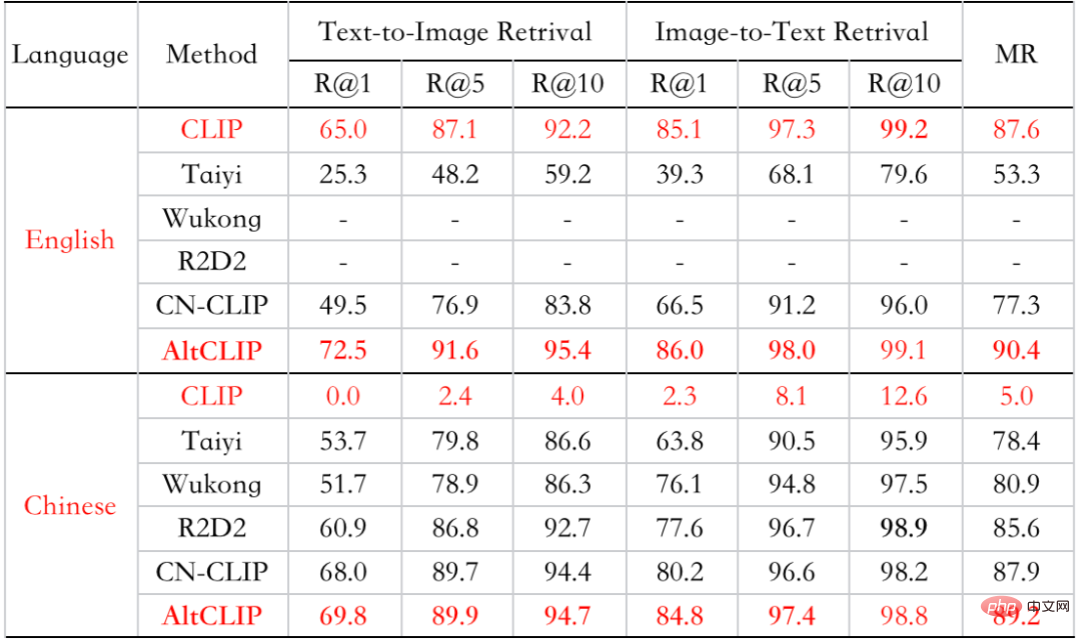

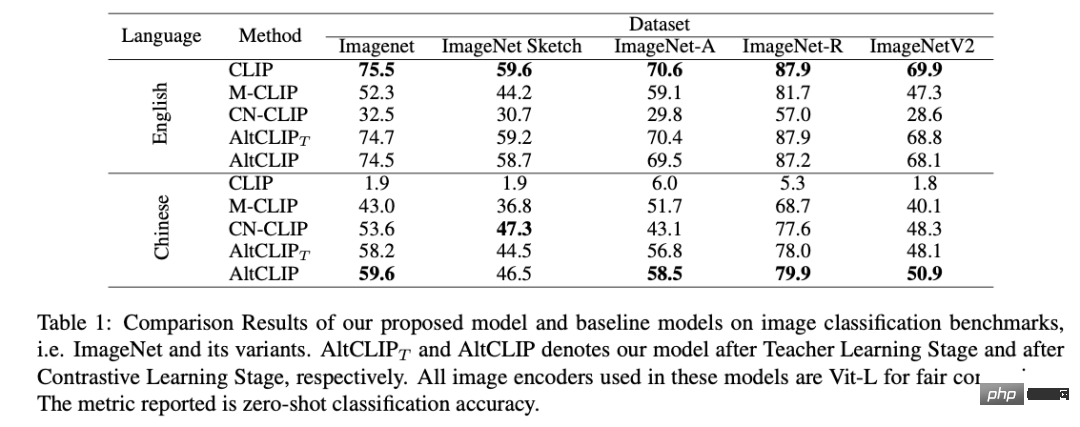

Language understanding, image and text alignment, and cross-language ability are the three necessary abilities for cross-language research.

Many of AltDiffusion's professional-grade capabilities derive from AltCLIP's innovative "tower-swapping" idea, which strengthens all three capabilities: the Chinese-English alignment of the original CLIP is greatly improved; it connects seamlessly to all models and ecosystem tools built on the original CLIP, such as Stable Diffusion; and it gains strong Chinese capabilities, achieving better Chinese results on multiple datasets. (See the technical report for a detailed explanation.)

Notably, this alignment method greatly lowers the barrier to training multilingual, multimodal representation models. Compared with redoing image-text pre-training in Chinese or English from scratch, it requires only about 1% of the computing resources and image-text pair data.
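The technical report gives the actual training recipe. As a rough illustration only of why aligning a new text encoder to an existing one can be cheap, the sketch below assumes a distillation-style objective: a frozen teacher embedding of an English sentence is the target, and the student's embeddings of that sentence and of its Chinese translation are pulled toward it with a mean squared error loss. The function names and the 3-dimensional vectors are made up for illustration.

```python
from typing import Sequence


def mse(student: Sequence[float], teacher: Sequence[float]) -> float:
    """Mean squared error between two embeddings of equal width."""
    if len(student) != len(teacher):
        raise ValueError("embeddings must have the same width")
    return sum((s - t) ** 2 for s, t in zip(student, teacher)) / len(teacher)


def alignment_loss(
    teacher_en: Sequence[float],
    student_en: Sequence[float],
    student_zh: Sequence[float],
) -> float:
    """Average distance of both student embeddings from the frozen teacher target."""
    return 0.5 * (mse(student_en, teacher_en) + mse(student_zh, teacher_en))


# Made-up embeddings for one parallel sentence pair (assumption, not real data).
teacher_en = [1.0, 0.0, 2.0]  # frozen original encoder, English text
student_en = [1.0, 0.0, 2.0]  # new encoder, same English text
student_zh = [0.0, 0.0, 2.0]  # new encoder, Chinese translation

loss = alignment_loss(teacher_en, student_en, student_zh)
```

Under this assumption the target comes from the text side of the frozen model, so a step like this needs only parallel text rather than image-text pairs, which is one plausible reason the data and compute requirements can be small.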

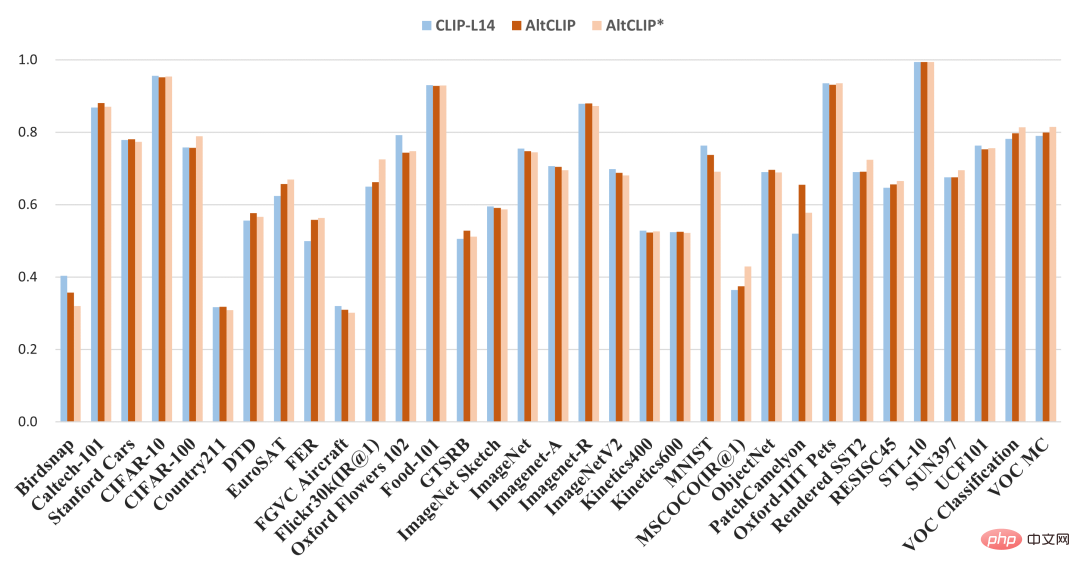

Matches the original English version on the comprehensive CLIP benchmark

On some retrieval datasets, such as Flickr30K, it outperforms the original CLIP

Achieves the best zero-shot result on Chinese ImageNet
