Don't be an illiterate painter! Google magically modified the 'Text Encoder': a small operation allows the image generation model to learn 'spelling'

Over the past year, with the release of image generation models such as DALL-E 2 and Stable Diffusion, text-to-image models have made great progress in resolution, image quality, and fidelity to the text prompt. These advances have greatly accelerated downstream applications, and now anyone can be an AI painter.

However, related research shows that current generative models still have a major flaw: they cannot reliably render visual text in images.

Studies have shown that DALL-E 2 is very unreliable at generating coherent text characters in images, and the newly released Stable Diffusion model explicitly lists "cannot render readable text" as a known limitation.

Figure: generated images in which the characters are misspelled. Intended texts: (1) California: All Dreams Welcome, (2) Canada: For Glowing Hearts, (3) Colorado: It's Our Nature, (4) St. Louis: All Within Reach.

Recently, Google Research released a new paper that aims to understand and improve the ability of image generation models to render high-quality visual text.

Paper link: https://arxiv.org/abs/2212.10562

The researchers argue that the main reason current text-to-image models struggle to render text is the lack of character-level input features. To quantify how much this input feature matters for generation, the paper designs a series of controlled experiments comparing text encoders that do and do not have access to character-level features (character-aware vs. character-blind).

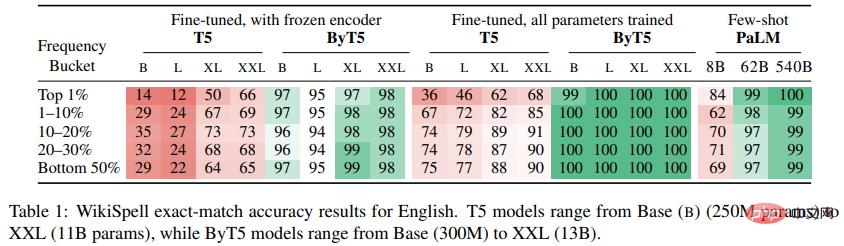

The researchers found that, in the text-only domain, character-aware models achieve significant gains on a new spelling task (WikiSpell).

Transferring these insights to the visual domain, the researchers trained a set of image generation models. The results show that character-aware models outperform character-blind models on a series of new text rendering tasks (the DrawText benchmark).

The character-aware models also set a new state of the art in visual spelling. Despite being trained on far fewer examples, their accuracy on uncommon words is more than 30 percentage points higher than that of competing models.

Character-Aware Model

Language models can be divided into two groups. Character-aware models have direct access to the characters that make up their text input. Character-blind models do not. Many early neural language models operated directly on characters rather than on multi-character tokens.

Later models gradually shifted to vocabulary-based tokenization. Some, such as ELMo, remained character-aware. Others, such as BERT, gave up character-level features in favor of more efficient pre-training.

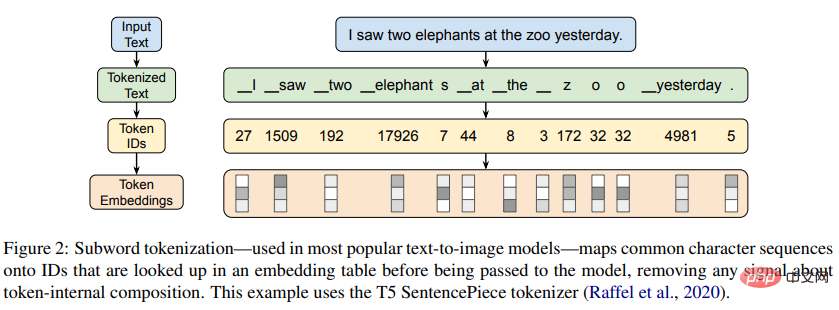

Today, most widely used language models are character-blind. They rely on data-driven subword segmentation algorithms, such as byte pair encoding (BPE), to build a vocabulary of subword pieces.

Although these methods can fall back to character-level representation for uncommon sequences, they are still designed to compress common character sequences into indivisible units.
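The following toy byte-pair-encoding learner in Python shows why frequent words end up as single tokens. It is a deliberately simplified sketch: it has no end-of-word marker and no byte fallback, and it uses made-up word counts. It is not the tokenizer of T5 or any other specific model. It only illustrates how merges learned from frequency statistics turn a common word into one opaque unit while a rare word stays split into characters.

```python
from __future__ import annotations

from collections import Counter


def merge_pair(symbols: tuple[str, ...], pair: tuple[str, str]) -> tuple[str, ...]:
    """Replace every adjacent occurrence of `pair` in `symbols` with the merged symbol."""
    merged: list[str] = []
    i = 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return tuple(merged)


def learn_bpe(word_counts: dict[str, int], num_merges: int) -> list[tuple[str, str]]:
    """Greedily learn merges by repeatedly fusing the most frequent adjacent symbol pair."""
    # Every word starts out as a sequence of single characters.
    vocab = {tuple(word): count for word, count in word_counts.items()}
    merges: list[tuple[str, str]] = []
    for _ in range(num_merges):
        pair_counts: Counter[tuple[str, str]] = Counter()
        for symbols, count in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += count  # pairs inside frequent words get large counts
        if not pair_counts:
            break
        # Ties go to the pair that was counted first.
        best = max(pair_counts, key=pair_counts.__getitem__)
        merges.append(best)
        vocab = {merge_pair(symbols, best): count for symbols, count in vocab.items()}
    return merges


def tokenize(word: str, merges: list[tuple[str, str]]) -> list[str]:
    """Apply the learned merges in order. Unmerged characters are the fallback."""
    symbols = tuple(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)


merges = learn_bpe({"the": 100, "quokka": 1}, num_merges=2)
print(tokenize("the", merges))     # ['the']
print(tokenize("quokka", merges))  # ['q', 'u', 'o', 'k', 'k', 'a']
```

A model that only sees the single unit `the` receives no direct signal that it is spelled t-h-e. The rare word, by contrast, is still visible character by character.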

The main goal of this paper is to understand and improve the ability of image generation models to render high-quality visual text.

To this end, the researchers first studied the spelling ability of current text encoders in isolation. The experiments show that character-blind text encoders, although very popular, receive no direct signal about the character-level composition of their input, which limits their spelling ability.

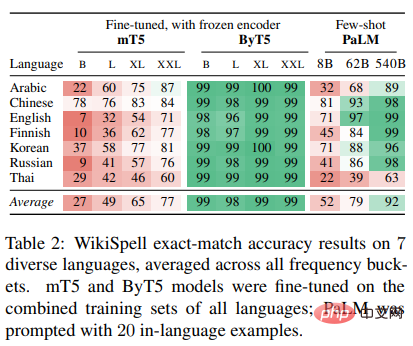

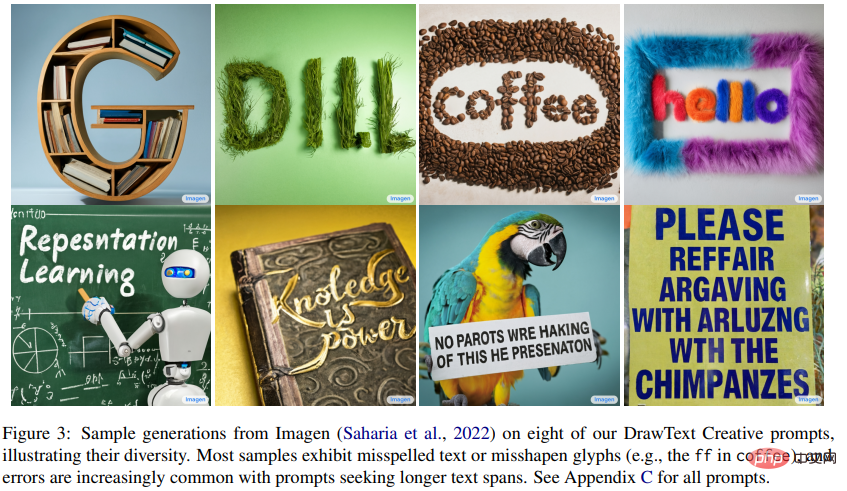

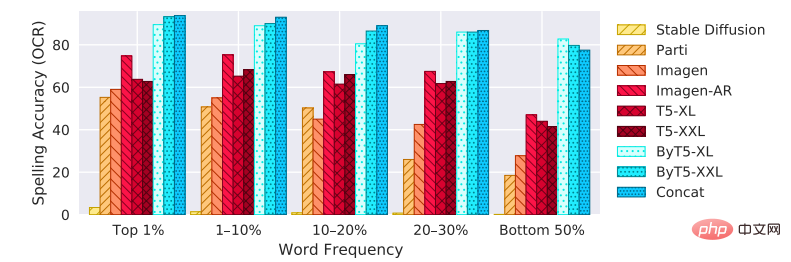

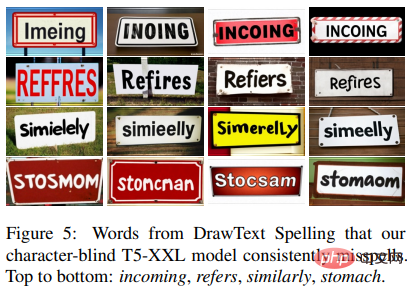

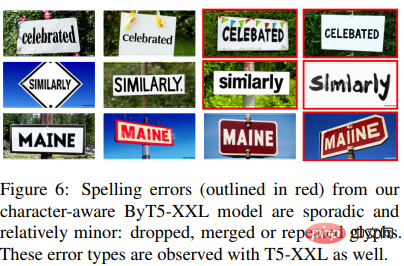

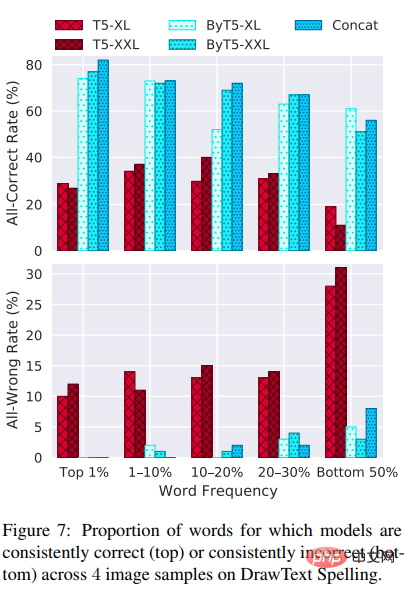

The researchers also tested the spelling ability of text encoders of varying sizes, architectures, input representations, languages, and tuning methods. The paper is the first to document the surprising degree to which character-blind models can acquire strong spelling knowledge (accuracy >99%) through web pre-training. However, the experiments show that this ability does not generalize well to languages other than English and only emerges at scales beyond 100B parameters, so it is not practical for most applications. Character-aware text encoders, by contrast, achieve strong spelling ability at much smaller scales.

Applying these findings to image generation, the researchers trained a series of character-aware text-to-image models. These models significantly outperform character-blind models on both existing and new text rendering evaluations. For purely character-level models, however, the gain in text rendering comes at a cost: image-text alignment degrades for prompts that do not involve visual text. To mitigate this, the researchers suggest combining character-level and token-level input representations, which yields the best overall performance.

WikiSpell Benchmark

Text-to-image models rely on a text encoder to produce the representations that are decoded into images. The researchers therefore first built the WikiSpell benchmark from words sampled from Wiktionary and used it to probe the spelling ability of text encoders on a text-only task. For each WikiSpell example, the model's input is a word and the expected output is its spelling, generated by inserting spaces between its Unicode characters.
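The WikiSpell input/output format is simple enough to write down directly. The sketch below is an illustration, not the paper's data pipeline, and the function names are hypothetical. Python iterates over a string by Unicode code point. As a result, a character written with a combining mark (for example, "u" followed by U+0308) is split into two pieces. The text does not say whether WikiSpell normalizes such cases.

```python
from __future__ import annotations


def spelling_target(word: str) -> str:
    """WikiSpell-style target: the word's Unicode characters separated by single spaces."""
    # Iterating over a str yields code points, not grapheme clusters.
    return " ".join(word)


def make_example(word: str) -> dict[str, str]:
    """Build one hypothetical (input, target) pair for a spelling task."""
    return {"input": word, "target": spelling_target(word)}


print(make_example("spelling"))
# {'input': 'spelling', 'target': 's p e l l i n g'}
print(spelling_target("Z\u00fcrich"))  # Z ü r i c h
print(spelling_target("你好"))          # 你 好
```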
The paper is particularly interested in how a word's frequency relates to a model's spelling ability. The researchers therefore divided the Wiktionary words into five non-overlapping buckets according to how often they occur in the mC4 corpus:

- the most frequent 1% of words
- the 1-10% band
- the 10-20% band
- the 20-30% band
- the least frequent 50%, including words that never appear in the corpus

They then sampled 1,000 words uniformly from each bucket to create a test set, and built a development set the same way. Finally, they built a 10,000-word training set from two halves. The first 5,000 words were sampled uniformly from the bottom-50% bucket (the least common words). The other 5,000 were sampled in proportion to their frequency in mC4, which biases this half toward frequent words. Any word selected for the development or test set was excluded from the training set, so evaluation is always on held-out words.

Besides English, the researchers evaluated six other languages: Arabic, Chinese, Finnish, Korean, Russian, and Thai. These were chosen to cover a variety of characteristics that could affect a model's ability to learn spelling, and the dataset construction above was repeated for each language.

Text Generation Experiment

The researchers used WikiSpell to evaluate several pre-trained text-only models at different scales:

- T5, a character-blind encoder-decoder model pre-trained on English data
- mT5, similar to T5 but pre-trained on more than 100 languages
- ByT5, a character-aware version of mT5 that operates directly on UTF-8 byte sequences
- PaLM, a much larger decoder-only model pre-trained mainly on English

In both the English-only and the multilingual results, the character-blind models T5 and mT5 perform much worse on the bucket containing the top 1% most frequent words.
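The frequency bucketing and per-bucket scoring behind these results can be sketched as follows. This is a simplified, hypothetical version:

- it ranks words by a given frequency table, standing in for mC4 counts;
- it uses the five percentile bands listed above and leaves words ranked between 30% and 50% unbucketed;
- it scores a prediction as correct only if it exactly equals the space-separated spelling.

```python
from __future__ import annotations

import random
from collections import defaultdict

# (bucket name, start percent, end percent) by frequency rank, most frequent first.
BUCKETS = [
    ("top 1%", 0, 1),
    ("1-10%", 1, 10),
    ("10-20%", 10, 20),
    ("20-30%", 20, 30),
    ("bottom 50%", 50, 100),
]


def assign_buckets(freqs: dict[str, int]) -> dict[str, str]:
    """Map each word to its frequency bucket. Words ranked in the 30-50% band get none."""
    ranked = sorted(freqs, key=lambda w: (-freqs[w], w))  # ties broken alphabetically
    n = len(ranked)
    bucket_of: dict[str, str] = {}
    for rank, word in enumerate(ranked):
        for name, lo, hi in BUCKETS:
            # Integer form of lo% <= rank / n < hi%, avoiding float rounding.
            if lo * n <= rank * 100 < hi * n:
                bucket_of[word] = name
                break
    return bucket_of


def sample_per_bucket(bucket_of: dict[str, str], k: int, seed: int = 0) -> dict[str, list[str]]:
    """Uniformly sample k words from each bucket (raises ValueError if a bucket is too small)."""
    members: dict[str, list[str]] = defaultdict(list)
    for word, name in sorted(bucket_of.items()):
        members[name].append(word)
    rng = random.Random(seed)
    return {name: rng.sample(words, k) for name, words in members.items()}


def accuracy_by_bucket(bucket_of: dict[str, str], predictions: dict[str, str]) -> dict[str, float]:
    """Exact-match spelling accuracy per bucket, over the words that have a prediction."""
    hits: dict[str, int] = defaultdict(int)
    totals: dict[str, int] = defaultdict(int)
    for word, predicted in predictions.items():
        name = bucket_of.get(word)
        if name is None:
            continue  # word is not in any evaluated bucket
        totals[name] += 1
        hits[name] += predicted == " ".join(word)
    return {name: hits[name] / totals[name] for name in totals}
```

With the paper's settings, `k` would be 1,000 words per bucket for each of the test and development sets.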
This result seems counter-intuitive, since models usually perform best on examples that appear frequently in the data. But because of the way subword vocabularies are trained, frequent words are usually represented as a single atomic token, or as a small number of tokens. Indeed, 87% of the words in the English top-1% bucket are represented as a single subword token in T5's vocabulary. Low spelling accuracy on this bucket therefore indicates that T5's encoder does not retain enough information about how the subwords in its vocabulary are spelled.

Second, for character-blind models, scale is an important factor in spelling ability. Both T5 and mT5 improve steadily as they grow, but even at XXL size they do not show particularly strong spelling ability. Character-blind models only begin to show near-perfect spelling at the scale of PaLM. The 540B-parameter PaLM achieves >99% accuracy in every English frequency bucket, even though it sees only 20 examples in its prompt, whereas T5 is shown 1,000 fine-tuning examples. PaLM performs worse on other languages, however, probably because it saw far less pre-training data for them.

Experiments on ByT5 show that the character-aware model has much stronger spelling ability. At Base and Large sizes, ByT5 lags only slightly behind its XL and XXL versions, while still scoring at least in the 90% range. A word's frequency also does not seem to have much effect on ByT5's spelling ability. ByT5's spelling performance far exceeds that of (m)T5. It is comparable to the English performance of PaLM, a model with 100 times more parameters, and it surpasses PaLM on the other languages. This suggests that the ByT5 encoder retains a substantial amount of character-level information. The decoder can retrieve this information from the encoder's frozen parameters as the task requires.
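The difference between the two kinds of input can be made concrete with a small comparison. Below, a character-blind encoder is modeled as looking up a whole word in a toy subword vocabulary (the vocabulary and its id are hypothetical). A ByT5-style encoder instead receives one id per UTF-8 byte. The offset of 3 assumes that the public ByT5 vocabulary reserves ids 0-2 for special tokens; treat it as an assumption. The point is that the spelling can be recovered from the byte ids but not from the single subword id.

```python
from __future__ import annotations

# Hypothetical subword vocabulary: a frequent word is one atomic entry.
TOY_SUBWORD_VOCAB = {"the": 8}

BYTE_OFFSET = 3  # assumption: ids 0-2 are reserved for special tokens


def subword_ids(word: str) -> list[int]:
    """Character-blind input: the whole word becomes one opaque id."""
    return [TOY_SUBWORD_VOCAB[word]]


def byte_ids(text: str) -> list[int]:
    """Character-aware (ByT5-style) input: one id per UTF-8 byte."""
    return [b + BYTE_OFFSET for b in text.encode("utf-8")]


def spell_from_byte_ids(ids: list[int]) -> str:
    """Read the spelling straight back out of byte-level ids."""
    return " ".join(bytes(i - BYTE_OFFSET for i in ids).decode("utf-8"))


print(subword_ids("the"))                     # [8]: nothing here says t-h-e
print(byte_ids("the"))                        # [119, 107, 104]
print(byte_ids("é"))                          # [198, 172]: one character, two bytes
print(spell_from_byte_ids(byte_ids("café")))  # c a f é
```

The multi-byte case also shows why byte-level sequences get longer for non-Latin scripts. That cost is the trade-off for giving the encoder direct access to the characters.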
DrawText Benchmark

How to evaluate text-to-image models has long been an important research topic. Datasets and benchmarks have evolved from COCO, released in 2014, to DrawBench in 2022, and metrics from FID and CLIP score to human preference ratings. However, there has been little work on evaluating text rendering and spelling. To fill this gap, the researchers proposed a new benchmark, DrawText, designed to comprehensively measure the text rendering quality of text-to-image models. DrawText consists of two parts that measure different dimensions of model capability:

1) DrawText Spell evaluates plain rendering of common words drawn from a large collection of English words. The researchers sampled 100 words from each of the five English WikiSpell frequency buckets and inserted them into a standard template, yielding 500 prompts in total. For each prompt, four images are sampled from each candidate model. These are evaluated with both human ratings and metrics based on optical character recognition (OCR).

2) DrawText Creative evaluates text rendered with visual effects. Visual text is not limited to common scenes such as street signs. It can appear in many forms: scrawled, painted, carved, sculpted, and so on. If image generation models supported flexible and accurate text rendering, designers could use them to develop creative fonts, logos, layouts, and more. To test whether image generation models can support these use cases, the researchers worked with a professional graphic designer to build 175 diverse prompts. Each prompt requires text to be rendered in one of a range of creative styles and settings. Many of these prompts are beyond the capabilities of current models: even state-of-the-art models misspell, drop, or repeat words.

Image Generation Experiment

The results show that, among the nine image generation models compared, the character-aware models (ByT5 and Concat) outperform the others regardless of model size, especially on uncommon words.
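The DrawText Spell setup can be sketched as below: five buckets of 100 words, one template, several samples per prompt, and an OCR-based score. The template string and the matching rule are hypothetical, since the text above does not give the paper's actual template or OCR metric. This sketch simply counts an image as correct when the target word appears, case-insensitively, as a whole token in the OCR output. No image model or OCR engine is called; OCR strings are passed in directly.

```python
from __future__ import annotations

import re

PROMPT_TEMPLATE = 'A sign that says "{word}".'  # hypothetical template


def build_prompts(words_by_bucket: dict[str, list[str]], per_bucket: int = 100) -> list[tuple[str, str, str]]:
    """Return (bucket, word, prompt) triples, taking per_bucket words from each bucket."""
    prompts = []
    for bucket, words in words_by_bucket.items():
        if len(words) < per_bucket:
            raise ValueError(f"bucket {bucket!r} has only {len(words)} words")
        for word in words[:per_bucket]:
            prompts.append((bucket, word, PROMPT_TEMPLATE.format(word=word)))
    return prompts


def ocr_match(target: str, ocr_text: str) -> bool:
    """Hypothetical rule: the target must appear as a whole word token in the OCR output."""
    tokens = re.findall(r"\w+", ocr_text.casefold())
    return target.casefold() in tokens


def ocr_accuracy(samples: list[tuple[str, list[str]]]) -> float:
    """Fraction of images whose OCR output matches. Each sample is (target, OCR texts)."""
    results = [ocr_match(target, text) for target, texts in samples for text in texts]
    return sum(results) / len(results)
```

With five buckets and the default `per_bucket=100`, `build_prompts` yields the 500 prompts described above.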
Imagen-AR shows the benefit of avoiding cropping. Even so, despite being trained 6.6 times longer, it still performs worse than the character-aware models.

Another clear difference between the models is whether they consistently misspell a given word across multiple samples. The results show that the T5 model misspells many words no matter how many samples are drawn. The researchers take this as evidence that character knowledge is missing from the text encoder. In contrast, the ByT5 model makes only sporadic errors. This observation can be quantified by measuring, across all four image samples, how often a model is consistently correct (4/4) or consistently incorrect (0/4). The contrast is stark, especially on common words (top 1%): the ByT5 model is never consistently wrong, while the T5 model is consistently wrong on 10% or more of the words.
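The consistency measurement described above reduces to counting, per word, how many of the four samples were rendered correctly. Here is a minimal sketch. It assumes per-image correctness has already been decided, for example by a human rater or an OCR check. The input format and the example words are hypothetical.

```python
from __future__ import annotations


def consistency_rates(results: dict[str, list[bool]]) -> tuple[float, float]:
    """Return (share of words correct in every sample, share of words wrong in every sample)."""
    if not results or any(len(r) == 0 for r in results.values()):
        raise ValueError("need at least one word and at least one sample per word")
    n = len(results)
    always_right = sum(all(r) for r in results.values())      # e.g. 4/4 correct
    always_wrong = sum(not any(r) for r in results.values())  # e.g. 0/4 correct
    return always_right / n, always_wrong / n


samples = {
    "apple": [True, True, True, True],
    "quokka": [False, False, False, False],
    "zephyr": [True, False, True, True],
    "river": [True, True, True, True],
}
print(consistency_rates(samples))  # (0.5, 0.25)
```

A model that makes only sporadic errors, as ByT5 is described above, would show a second value near zero even when its first value is below 1.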

