Most recent breakthroughs in artificial intelligence have been driven by self-supervised learning. One example is the masked language model (MLM) objective proposed in BERT, which masks some of the words in a text and trains the model to predict them. This allows massive amounts of unlabeled text to be used for training and opened a new era of large-scale pre-trained models. However, self-supervised learning algorithms also have obvious limitations: they are usually suitable only for a single modality (such as images, text or speech), and they need a lot of computing power to learn from massive data. In contrast, humans learn significantly more efficiently than current AI models and can learn from different types of data.

In January 2022, Meta AI released the self-supervised learning framework data2vec, which handles three modalities (speech, vision and text) within a single framework, part of a trend toward unifying multiple modalities. Meta AI has now released data2vec 2.0, which mainly improves on the previous generation in terms of performance: at the same accuracy, training is up to 16 times faster than other algorithms.

Paper link: https://ai.facebook.com/research/publications/efficient-self-supervised-learning-with-contextualized-target-representations-for-vision-speech-and-language

Code link: https://github.com/facebookresearch/fairseq/tree/main/examples/data2vec

## data2vec 1.0

Currently, most machine learning models still rely on supervised learning, which requires dedicated annotators to label the target data. For some tasks, however (for example, covering the thousands of human languages spoken on Earth), collecting labeled data is not feasible.

In contrast, self-supervised learning does not need to be told what is right or wrong; instead, it lets the machine learn the structure of images, speech and text by observing the world. This line of research has driven progress in speech (e.g., wav2vec 2.0), computer vision (e.g., masked autoencoders), natural language processing (e.g., BERT) and other fields.

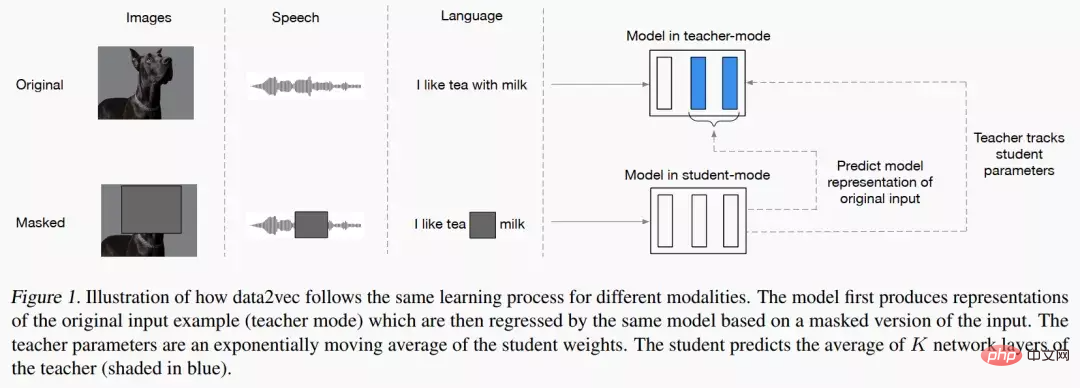

The main idea of data2vec is to first build a teacher network that computes target representations from images, text or speech. Parts of the input are then masked, and a student network processes the masked input and predicts the representations produced by the teacher model.
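The teacher/student idea can be illustrated with a toy example. This is only a minimal sketch under stated assumptions, not the actual data2vec implementation: the `encode` function is a hypothetical stand-in for a Transformer encoder (it just mixes each value with the mean of the sequence so that outputs depend on context), and the names `mask_input`, `student_loss`, `teacher_weight` and `student_weight` are made up for illustration.

```python
import random

def encode(tokens, weight):
    """Toy 'contextual' encoder (assumption): mix each token with the sequence mean."""
    context = sum(tokens) / len(tokens)
    return [weight * t + (1.0 - weight) * context for t in tokens]

def mask_input(tokens, mask_ratio, rng, mask_value=0.0):
    """Hide a random subset of positions; return the masked copy and hidden indices."""
    n_masked = max(1, int(len(tokens) * mask_ratio))
    hidden = sorted(rng.sample(range(len(tokens)), n_masked))
    hidden_set = set(hidden)
    masked = [mask_value if i in hidden_set else t for i, t in enumerate(tokens)]
    return masked, hidden

def student_loss(tokens, teacher_weight, student_weight, mask_ratio, rng):
    """Mean squared error between student predictions and teacher targets."""
    # The teacher sees the complete input and produces the target representations.
    targets = encode(tokens, teacher_weight)
    # The student only sees the masked input.
    masked, hidden = mask_input(tokens, mask_ratio, rng)
    preds = encode(masked, student_weight)
    # The student is scored on the hidden positions only.
    return sum((preds[i] - targets[i]) ** 2 for i in hidden) / len(hidden)

rng = random.Random(0)
sample = [0.5, -1.0, 2.0, 0.0, 1.5, -0.5]
loss = student_loss(sample, teacher_weight=0.7, student_weight=0.7,
                    mask_ratio=0.5, rng=rng)
print(f"loss on masked positions: {loss:.4f}")
```

The point of the sketch is the data flow: targets come from the unmasked input, predictions come from the masked input, and the loss compares representations rather than raw pixels, words or audio samples.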

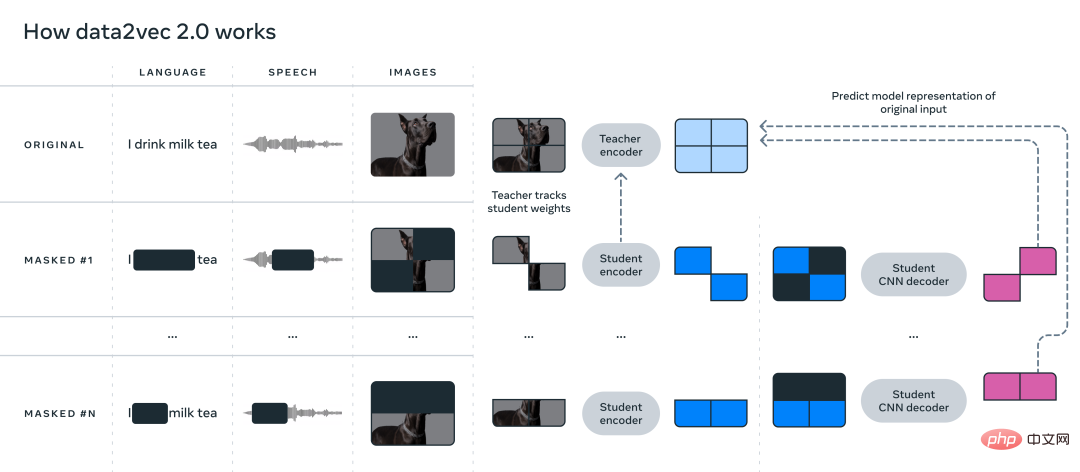

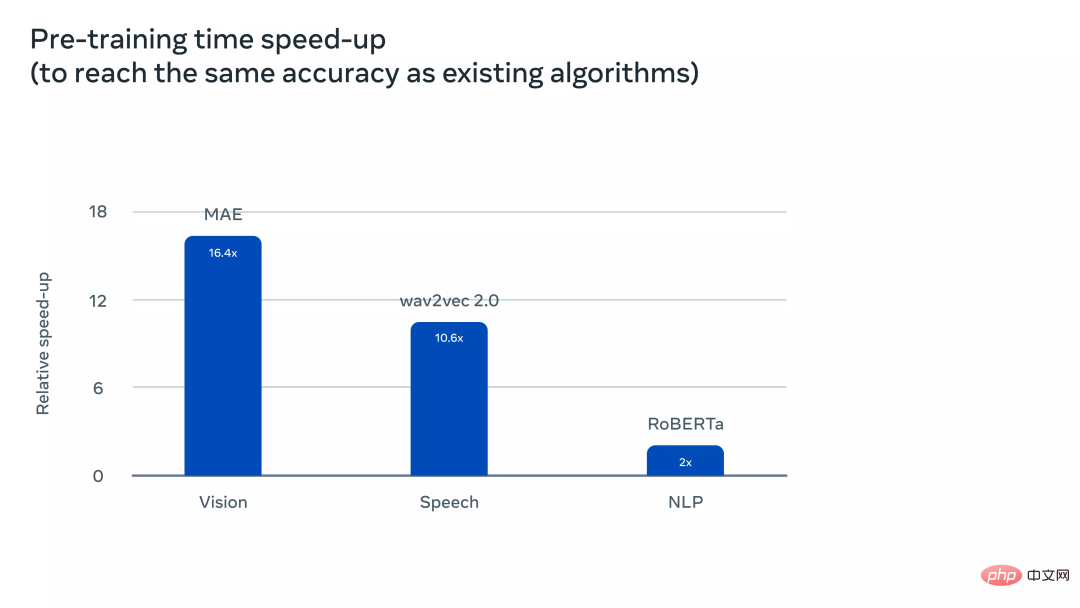

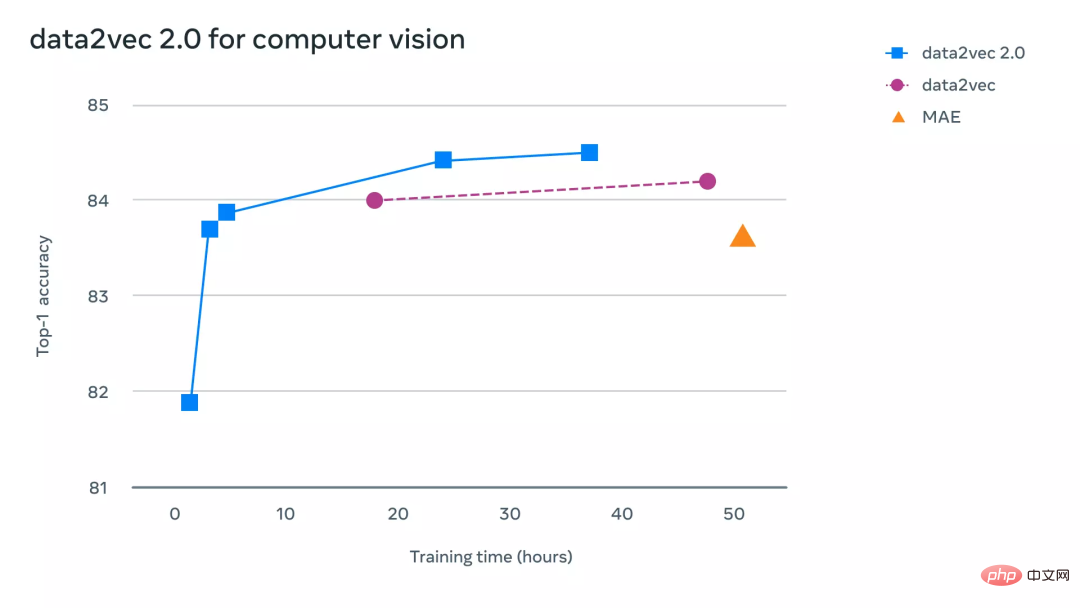

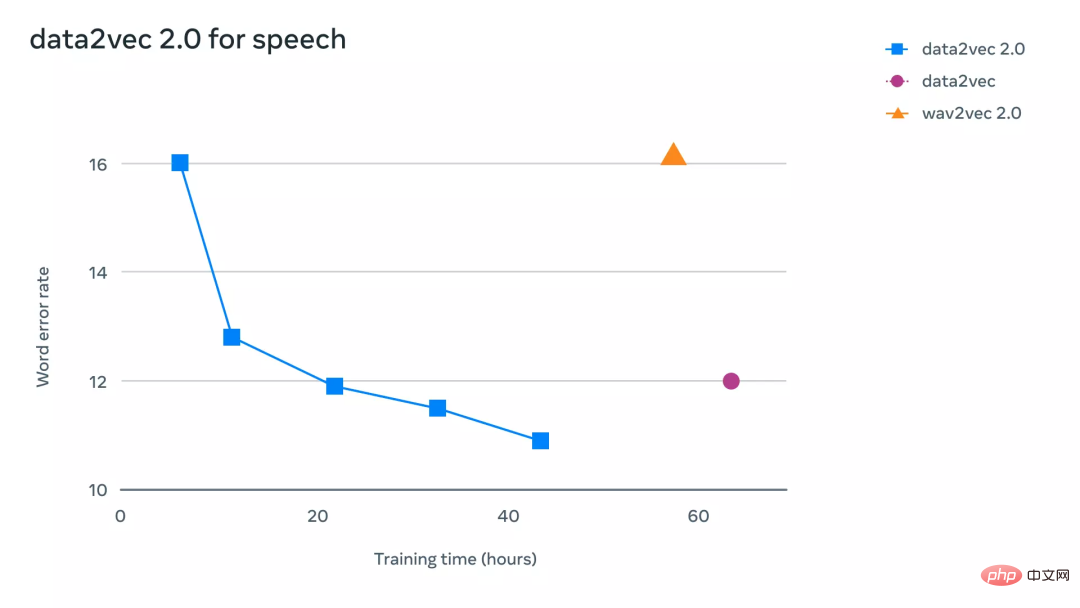

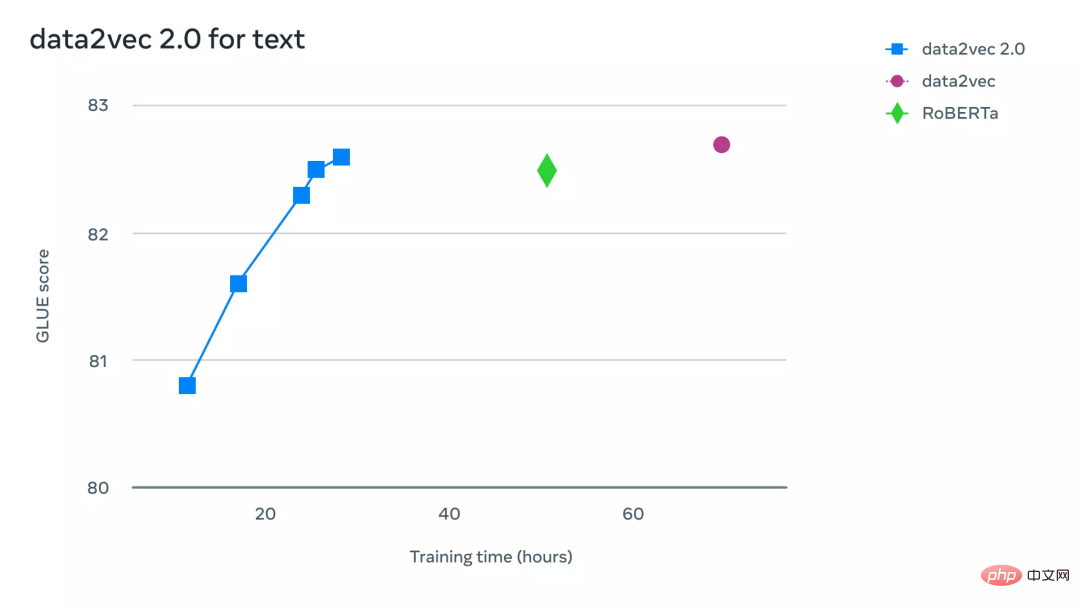

In other words, the student model must predict the representation of the complete input while receiving only incomplete input. To keep the two models consistent, they share the same parameterization: the teacher's weights track the student's (as an exponential moving average), and the teacher is updated more quickly in the early stages of training.

In terms of experimental results, data2vec significantly improved on the baseline models for speech, vision and text tasks.

## data2vec 2.0

data2vec proposed a general self-supervised learning framework that unifies learning across three modalities: speech, vision and language. The main pain point addressed by data2vec 2.0 is that building self-supervised models requires a large amount of GPU computing power to complete training.

Similar to the original data2vec algorithm, data2vec 2.0 predicts contextualized representations of the data (that is, the layers of a neural network) rather than the pixels of an image, the words of a text passage, or the sound of speech.

Unlike most other algorithms, these so-called target representations are contextualized, which means they take the entire training example into account. For example, the model learns the representation of the word "bank" from the entire sentence that contains it, which makes it easier to infer the correct meaning of the word, such as whether it refers to a "financial institution" or "land by the river."

The researchers believe that contextualized targets lead to a richer learning task and enable data2vec 2.0 to learn faster than other algorithms.

data2vec 2.0 improves the efficiency of the original data2vec algorithm in the following three ways:

1. Build the target representation for a given training example once and reuse it for several masked versions of that example, each of which hides different random parts of the example. Each masked version is then fed into the student model, which predicts the same contextualized target representation for the different masked versions. This effectively amortizes the computational effort required to create the target representation.
2. Similar to the masked autoencoder (MAE), the encoder network in the student model does not process the blanked-out parts of the training example. In the image experiments, approximately 80% of the patches were blanked out, which saves a significant amount of computation.
3. Use a more efficient decoder that no longer relies on a Transformer network but on a multi-layer convolutional network.

To show more intuitively how much more efficient data2vec 2.0 is than data2vec and other similar algorithms, the researchers ran extensive experiments on benchmarks for computer vision, speech and text tasks. The experiments considered mainly the final accuracy and the time required to pre-train the model, and the algorithms were run on the same hardware (GPU model, number of GPUs, etc.) to measure their speed.

On computer vision tasks, the researchers evaluated data2vec 2.0 on the standard ImageNet-1K image classification benchmark, a dataset from which the model learns image representations. The results show that data2vec 2.0 can match the accuracy of the masked autoencoder (MAE) while being 16 times faster. Given more training time, data2vec 2.0 can reach higher accuracy while still being faster than MAE.

On the speech task, the researchers tested on the LibriSpeech speech recognition benchmark, where data2vec 2.0 was more than 11 times faster than wav2vec 2.0 at similar accuracy.

For natural language processing tasks, the researchers evaluated data2vec 2.0 on the General Language Understanding Evaluation (GLUE) benchmark, where it reached the same accuracy as RoBERTa, a reimplementation of BERT, in only half the training time.
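The training mechanics described in this section can be sketched in a few lines. This is an illustrative toy under stated assumptions, not Meta's code: it assumes the teacher is kept as an exponential moving average of the student (as in the data2vec paper), and the decay values, the 196-patch input, the count of 8 masked versions, and all function names (`ema_update`, `decay_at`, `random_mask`, `visible_only`) are hypothetical choices for illustration. The teacher "encoder" is a placeholder that simply copies its input.

```python
import random

def ema_update(teacher, student, decay):
    """Move each teacher weight toward the student weight (exponential moving average)."""
    return [decay * t + (1.0 - decay) * s for t, s in zip(teacher, student)]

def decay_at(step, start=0.9, end=0.999, ramp_steps=100):
    """Linearly raise the decay, so the teacher changes faster early in training.
    The numbers are illustrative, not the published hyperparameters."""
    frac = min(step / ramp_steps, 1.0)
    return start + frac * (end - start)

def random_mask(length, mask_ratio, rng):
    """Return the sorted indices of the positions hidden from the student."""
    n_hidden = int(round(length * mask_ratio))
    return sorted(rng.sample(range(length), n_hidden))

def visible_only(tokens, hidden):
    """Keep only unmasked tokens: the student encoder skips masked positions."""
    hidden_set = set(hidden)
    return [t for i, t in enumerate(tokens) if i not in hidden_set]

rng = random.Random(0)

# Teacher tracking the student: low decay early means larger teacher updates.
teacher_w, student_w = [0.0, 0.0], [1.0, -1.0]
for step in (0, 50, 100):
    teacher_w = ema_update(teacher_w, student_w, decay_at(step))

# One training example, e.g. a 14x14 grid of image patches (assumption).
num_patches = 196
tokens = [rng.gauss(0.0, 1.0) for _ in range(num_patches)]

# Way 1: the teacher target is computed once per example (placeholder encoder).
targets = list(tokens)

# Several masked versions of the same example reuse that single target.
num_masks = 8
encoded_lengths = []
for _ in range(num_masks):
    hidden = random_mask(num_patches, mask_ratio=0.8, rng=rng)
    # Way 2: only the visible positions would be passed to the student encoder.
    visible = visible_only(tokens, hidden)
    encoded_lengths.append(len(visible))
    # A real student would encode `visible`, decode predictions for `hidden`
    # (way 3: with a convolutional decoder), and regress onto targets[i].

print(f"student encodes {encoded_lengths[0]} of {num_patches} positions per masked version")
```

With an 80% mask ratio, the student encoder in this toy handles 39 of the 196 positions for each masked version, while the teacher pass is paid for only once across all 8 versions.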
