Pre-training without attention; In-Context Learning driven by GPT

Paper 1: ClimateNeRF: Physically-based Neural Rendering for Extreme Climate Synthesis

- Author: Yuan Li et al.

- Paper address: https://arxiv.org/pdf/2211.13226.pdf

Abstract: This paper introduces a new method that fuses physical simulations with NeRF models of scenes to generate realistic videos of physical phenomena in those scenes. Concretely, the method can realistically simulate the possible effects of climate change: what would a playground look like after a minor flood? After a major flood? After a blizzard?

Recommendation: Fog, winter, and floods: a new NeRF model renders physically realistic blockbuster scenes.

Paper 2: Pretraining Without Attention

- Author: Junxiong Wang et al.

- Paper address: https://arxiv.org/pdf/2212.10544.pdf

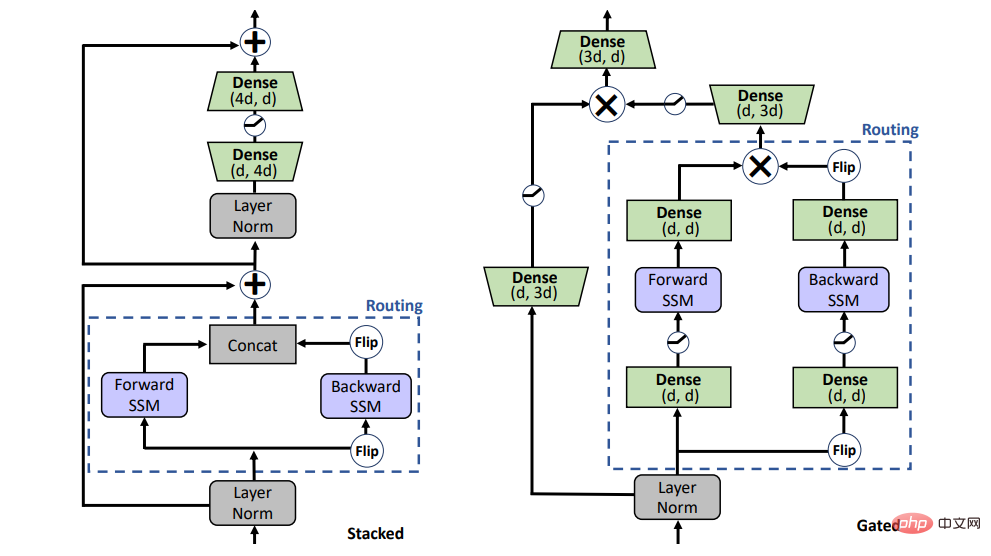

This paper proposes the Bidirectional Gated SSM (BiGS) model, which combines routing layers based on state space models (SSMs) with a model architecture based on multiplicative gating. Without using attention, BiGS can replicate BERT's pre-training results, and it can be extended to long-range pre-training on 4096 tokens without any approximation.

Recommendation: Pre-training without attention, scaling to 4096 tokens without trouble, and performance comparable to BERT.
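To make "SSM-based routing plus multiplicative gating" a little more concrete, here is a toy sketch in Python. It is not the BiGS layer from the paper: we assume a scalar linear SSM per channel with fixed, hypothetical parameters (h_t = a·h_{t-1} + b·u_t, y_t = c·h_t), run it left-to-right and right-to-left, and combine the two directions with an elementwise sigmoid gate computed from the input. The function names `ssm_scan` and `bigs_like_layer` are illustrative only.

```python
import math
from typing import List

Vector = List[float]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def ssm_scan(u: List[float], a: float, b: float, c: float) -> List[float]:
    """Scalar linear SSM over a 1-D sequence (hypothetical toy parameters).

    h_t = a * h_{t-1} + b * u_t,  y_t = c * h_t,  with h_{-1} = 0.
    Information moves between positions only through the state h,
    which plays the "routing" role that attention plays in BERT.
    """
    h = 0.0
    ys = []
    for x in u:
        h = a * h + b * x
        ys.append(c * h)
    return ys


def bigs_like_layer(seq: List[Vector], a: float = 0.9, b: float = 1.0,
                    c: float = 1.0, gate_w: float = 1.0) -> List[Vector]:
    """Toy bidirectional gated SSM layer; NOT the exact BiGS layer.

    seq is a list of L vectors of dimension d. Each channel is scanned
    left-to-right and right-to-left, the two directions are summed,
    multiplied elementwise by a sigmoid gate computed from the input,
    and added back to the input as a residual.
    """
    length, dim = len(seq), len(seq[0])
    out = [[0.0] * dim for _ in range(length)]
    for j in range(dim):
        channel = [seq[t][j] for t in range(length)]
        fwd = ssm_scan(channel, a, b, c)
        bwd = ssm_scan(channel[::-1], a, b, c)[::-1]  # reverse, scan, reverse back
        for t in range(length):
            gate = sigmoid(gate_w * channel[t])  # multiplicative gate
            out[t][j] = channel[t] + gate * (fwd[t] + bwd[t])
    return out


# Usage: channel 1 is nonzero only at the last position, yet after one
# layer the first position's channel-1 output is nonzero, because the
# backward scan carries that information leftward (no attention needed).
x = [[1.0, 0.0], [0.0, 0.0], [0.0, 1.0]]
y = bigs_like_layer(x)
print([[round(v, 3) for v in row] for row in y])
```

Each position is visited a constant number of times per channel, so the cost of this toy layer grows linearly with sequence length rather than quadratically as with full attention. Practical SSM layers usually evaluate the same kind of recurrence as a long convolution instead of a Python loop.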

Paper 3: One Model to Edit Them All: Free-Form Text-Driven Image Manipulation with Semantic Modulations

- Author: Yiming Zhu et al

- Paper address: https://arxiv.org/pdf/2210.07883.pdf

Recently, text-guided image editing has made great progress and attracted wide attention, especially with denoising diffusion models such as Stable Diffusion and DALL-E. However, GAN-based text-driven image editing still has open problems. For example, the classic StyleCLIP must train a separate model for each text prompt, and this one-text-one-model approach is inconvenient in practical applications. This paper proposes FFCLIP to solve this problem: for arbitrary text inputs, FFCLIP needs only a single model to edit images accordingly, without retraining for each text, and it achieves very good results on multiple datasets. The paper has been accepted to NeurIPS 2022.

Recommendation:

A new paradigm for text-driven image editing: a single model enables image editing guided by many different texts.

Paper 4: SELF-INSTRUCT: Aligning Language Model with Self Generated Instructions

- Author: Yizhong Wang et al

- Paper address: https://arxiv.org/pdf/2212.10560v1.pdf

Researchers from the University of Washington and other institutions recently published a paper proposing SELF-INSTRUCT, a new framework that improves the instruction-following ability of pre-trained language models by bootstrapping from the model's own generations. SELF-INSTRUCT is a semi-automated process that performs instruction tuning on a pre-trained LM using instruction signals produced by the model itself.

Recommendation: No manual annotation needed: a self-generated instruction framework breaks the cost bottleneck of LLMs such as ChatGPT.

Paper 5: Ab Initio Calculation of Real Solids via Neural Network Ansatz

Abstract: Machine learning can process massive amounts of data, solve scientific problems in complex settings, and carry scientific exploration into new areas that were previously out of reach. For example, DeepMind's AI system AlphaFold makes highly accurate predictions of almost all protein structures known to science, and the deep-learning-based particle image velocimetry (PIV) method proposed by Christian Lagemann greatly improves on the earlier approach of setting parameters entirely by hand, widening the model's range of application; this is of vital importance for research in fields such as automotive, aerospace, and biomedical engineering. Recently, the work "Ab initio calculation of real solids via neural network ansatz" by the ByteDance AI Lab Research team and Chen Ji's research group at the School of Physics, Peking University, offers a new approach to condensed matter physics. It proposes the industry's first neural network wave function suitable for solid systems, achieves first-principles calculations of solids, and pushes the results to the thermodynamic limit.
The work provides strong evidence that neural networks are efficient tools for studying solid-state physics and suggests that deep learning will play an increasingly important role in condensed matter physics. The results were published in the leading international journal Nature Communications on December 22, 2022.

Recommendation: The industry's first neural network wave function for solid systems, published in a Nature family journal.

Paper 6: Why Can GPT Learn In-Context? Language Models Secretly Perform Gradient Descent as Meta-Optimizers

Abstract: In-Context Learning (ICL) has achieved great success with large pre-trained language models, but how it works remains an open question. In this paper, researchers from Peking University, Tsinghua University, and Microsoft interpret ICL as a kind of implicit fine-tuning and provide empirical evidence that ICL and explicit fine-tuning behave similarly at multiple levels.

Recommendation: Why does In-Context Learning, propelled by GPT, work? The model secretly performs gradient descent.

Paper 7: Experimental Indications of Non-classical Brain Functions

Abstract: For decades, scientists have been exploring how the human brain computes and thinks. However, the brain is extremely complex, containing tens of billions of neurons (the equivalent of trillions of chips), which makes it hard to figure out. Roger Penrose, who won the Nobel Prize in Physics for his contributions to the study of black holes, once boldly proposed the idea of "quantum consciousness": that the human brain is itself a quantum structure, or a quantum computer. This view has been contested, however. A recent study from Trinity College Dublin suggests that our brains perform quantum computation, arguing that there is entanglement in the human brain mediated by brain functions related to consciousness.
If these brain functions must operate in a non-classical way, then consciousness is non-classical, i.e. the brain's cognitive processes involve quantum computation.

Recommendation: Does the brain think by quantum computing? There is new evidence for this speculation.

ArXiv Weekly Radiostation

Heart of Machine, in collaboration with ArXiv Weekly Radiostation (initiated by Chu Hang and Luo Ruotian), selects additional important papers from this week beyond the 7 Papers above, with 10 selected papers each in NLP, CV, and ML, plus audio summaries. Details are as follows:

10 NLP Papers

This week's 10 selected NLP papers are:

1. Does unsupervised grammar induction need pixels? (from Serge Belongie, Kilian Q. Weinberger, Jitendra Malik, Trevor Darrell)
2. Understanding Stereotypes in Language Models: Towards Robust Measurement and Zero-Shot Debiasing. (from Bernhard Schölkopf)
3. Tackling Ambiguity with Images: Improved Multimodal Machine Translation and Contrastive Evaluation. (from Cordelia Schmid, Ivan Laptev)
4. Cross-modal Attention Congruence Regularization for Vision-Language Relation Alignment. (from Ruslan Salakhutdinov, Louis-Philippe Morency)
5. Original or Translated? On the Use of Parallel Data for Translation Quality Estimation. (from Dacheng Tao)
6. Toward Human-Like Evaluation for Natural Language Generation with Error Analysis. (from Dacheng Tao)
7. Can Current Task-oriented Dialogue Models Automate Real-world Scenarios in the Wild? (from Kyunghyun Cho)
8. On the Blind Spots of Model-Based Evaluation Metrics for Text Generation. (from Kyunghyun Cho)
9. Beyond Contrastive Learning: A Variational Generative Model for Multilingual Retrieval. (from William W. Cohen)
10. The Impact of Symbolic Representations on In-context Learning for Few-shot Reasoning. (from Li Erran Li, Eric Xing)

10 CV Papers

1. Revisiting Residual Networks for Adversarial Robustness: An Architectural Perspective. (from Kalyanmoy Deb)
2. Benchmarking Spatial Relationships in Text-to-Image Generation. (from Eric Horvitz)
3. A Brief Survey on Person Recognition at a Distance. (from Rama Chellappa)
4. MetaCLUE: Towards Comprehensive Visual Metaphors Research. (from Leonidas Guibas, William T. Freeman)
5. Aliasing is a Driver of Adversarial Attacks. (from Antonio Torralba)
6. Reversible Column Networks. (from Xiangyu Zhang)
7. Hi-LASSIE: High-Fidelity Articulated Shape and Skeleton Discovery from Sparse Image Ensemble. (from Ming-Hsuan Yang)
8. Learning Object-level Point Augmentor for Semi-supervised 3D Object Detection. (from Ming-Hsuan Yang)
9. Unleashing the Power of Visual Prompting At the Pixel Level. (from Alan Yuille)
10. From Images to Textual Prompts: Zero-shot VQA with Frozen Large Language Models. (from Dacheng Tao, Steven C.H. Hoi)

