Thoughts on AI network security brought about by ChatGPT's popularity

1. Artificial Intelligence Development Track

Even before John McCarthy first proposed the concept of artificial intelligence (Artificial Intelligence) at the Dartmouth College summer workshop in 1956, humans had been continuously exploring ways to have machines replace people in heavy and repetitive work.

In February 1882, Nikola Tesla completed the AC generator idea that had troubled him for 5 years, and exclaimed with joy, "From now on, human beings are no longer enslaved to heavy manual labor. Machines will liberate them, and so will the whole world."

In 1936, in order to prove that there are undecidable propositions in mathematics, Alan Turing proposed the idea of a "Turing machine". In 1948, he described most of the content of connectionism in the paper "Intelligent Machinery". Then in 1950, he published "Computing Machinery and Intelligence" and proposed the famous "Turing Test". In 1951, Marvin Minsky and his classmate Dean Edmonds built SNARC, generally regarded as the world's first neural network computer.

In 1955, von Neumann accepted the invitation to give the Silliman Lecture at Yale University. The contents of the lecture were later compiled into the book "THE COMPUTER AND THE BRAIN".

Since it was proposed in 1956, artificial intelligence has gone through three development climaxes.

The first development climax: from 1956 to 1980, symbolism, represented by expert systems and classic machine learning, dominated. Also known as the first generation of artificial intelligence, symbolism proposes a reasoning model based on knowledge and experience to simulate rational human behaviors such as reasoning, planning and decision-making. To this end, a knowledge base and a reasoning mechanism are built into the machine to simulate human reasoning and thinking.

The most representative achievement of symbolism was the defeat of world champion Kasparov by the IBM chess program Deep Blue in May 1997. Three factors contributed to its success. The first is knowledge and experience: Deep Blue analyzed 700,000 games played by human masters, together with all 5- and 6-piece endgames, and distilled them into chess rules. Then, through games between masters and the machine, the parameters of the evaluation function were tuned so that the masters' experience was fully absorbed. The second is the algorithm: Deep Blue used alpha-beta pruning, which is very fast. The third is computing power: IBM used an RS/6000 SP2 machine at the time, which could analyze 200 million positions per second and look 8 to 12 moves ahead on average.
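To make the alpha-beta idea concrete, here is a minimal sketch on a toy game tree. It is not Deep Blue's implementation: the tree, the leaf scores and the helper names (`children`, `evaluate`, `TREE`, `SCORES`) are all made up for illustration. The point is only that a branch is abandoned as soon as it cannot change the final choice.

```python
import math

def alphabeta(node, depth, alpha, beta, maximizing, children, evaluate):
    """Minimax search with alpha-beta pruning over an abstract game tree."""
    kids = children(node)
    if depth == 0 or not kids:
        return evaluate(node)
    if maximizing:
        value = -math.inf
        for child in kids:
            value = max(value, alphabeta(child, depth - 1, alpha, beta,
                                         False, children, evaluate))
            alpha = max(alpha, value)
            if alpha >= beta:
                break  # the minimizing player would never allow this branch
        return value
    value = math.inf
    for child in kids:
        value = min(value, alphabeta(child, depth - 1, alpha, beta,
                                     True, children, evaluate))
        beta = min(beta, value)
        if alpha >= beta:
            break  # the maximizing player already has a better option
    return value

# Hypothetical two-ply tree: the root is a max node, "a" and "b" are min nodes.
TREE = {"root": ["a", "b"], "a": ["a1", "a2"], "b": ["b1", "b2"]}
SCORES = {"a1": 3, "a2": 5, "b1": 2, "b2": 9}
visited = []  # records which leaves were actually evaluated

def children(node):
    return TREE.get(node, [])

def evaluate(node):
    visited.append(node)
    return SCORES[node]

best = alphabeta("root", 2, -math.inf, math.inf, True, children, evaluate)
print(best, visited)
```

In this toy tree the leaf `b2` is never evaluated: once `b1` shows that branch `b` is worth at most 2, it cannot beat the value 3 already secured through branch `a`.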

The advantage of symbolism is that it can imitate the human reasoning and thinking process, is consistent with the human thinking process, and can draw inferences from one example, so it is interpretable. However, symbolism also has very serious shortcomings. First, expert knowledge is very scarce and expensive. Second, expert knowledge needs to be input into the machine through manual programming, which is time-consuming and labor-intensive. Third, there is a lot of knowledge that is difficult to express, such as traditional Chinese medicine experts taking pulses. Such experiences are difficult to express, so the scope of application of symbolism is very limited.

The second development climax: 1980~1993, represented by symbolism and connectionism;

The third development climax: 1993~1996, when deep learning achieved great success on the back of computing power and data, and connectionism became very popular;

Deep learning simulates human perception, such as vision, hearing and touch, through deep neural network models. Deep learning has two advantages: first, it does not require domain expert knowledge, so the technical threshold is low; second, the larger the network is scaled, the more data it can process.

One of the most typical examples of deep learning is the Go program. Before October 2015, Go programs built with symbolism, that is, knowledge-driven methods, could reach at most amateur 5-dan level. In October 2015, the Go program defeated the European champion, and in March 2016 it defeated the world champion. In October 2017, AlphaGo Zero defeated AlphaGo. Using deep learning, AlphaGo achieved a triple jump in the level of Go programs: from amateur to professional level, from professional level to world champion, and from world champion to beyond world champion.

AlphaGo achieved this triple jump in two years. Its success mainly comes from three aspects: big data, algorithms and computing power. AlphaGo learned from 30 million existing games and played another 30 million games against itself, for a total of 60 million games. It used Monte Carlo tree search, reinforcement learning, deep learning and other algorithms, running on a total of 1202 CPUs and 280 GPUs.

Deep learning also has great limitations, such as being uninterpretable, unsafe, hard to generalize, and dependent on a large number of samples. For example, a picture of a human face may be recognized as a dog after some modifications, and why this happens is beyond human understanding. This is what uninterpretability means.

In 2016, behaviorism (Actionism), represented by reinforcement learning, gained great attention after the emergence of AlphaZero, and it was hailed as the necessary path to general artificial intelligence.

Symbolism, represented by logical reasoning, drives intelligence with knowledge, and connectionism, represented by deep learning, drives intelligence with data. Both have major flaws and limited application scope.

Behaviorism, represented by reinforcement learning, makes comprehensive use of four elements: knowledge, data, algorithms and computing power. By introducing mechanisms of the human brain such as feedback, lateral connections, sparse firing, attention, multimodality and memory, it is expected to overcome the shortcomings of the first two generations of artificial intelligence and gain wider application.

2. Several mechanisms of human brain work

[Prediction and feedback mechanism]

The brain observes the world and builds memory models over a period of life. In daily life, the brain automatically and subconsciously compares what it sees with these memory models and predicts what will happen next. When it detects a situation that is inconsistent with the prediction, this triggers feedback in the brain.

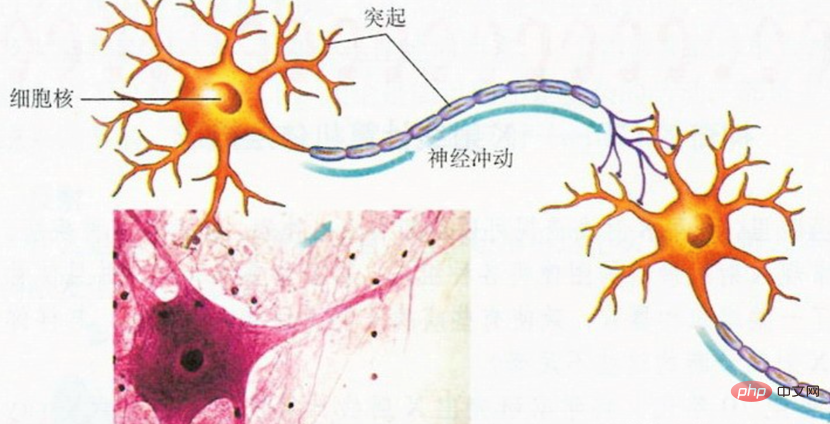

Brain cells are able to transmit information because they have remarkable tentacles: dendrites and axons. With short dendrites, brain cells can receive information transmitted from other brain cells, and with long axons, brain cells can transmit information to other brain cells (as shown in the figure below).

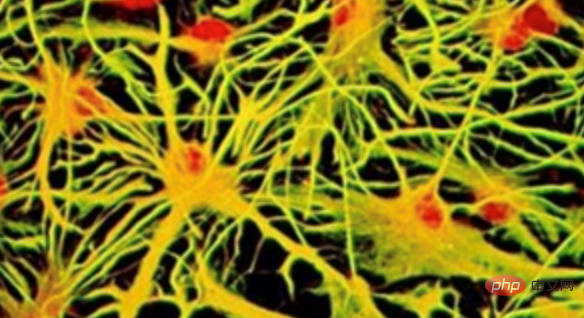

Information is continuously transmitted between brain cells, forming human feelings and thoughts. The entire brain is a large network of interconnected brain cells, as shown in the figure below:

In the field of machine learning, to obtain such an artificial neural network, we must first specify the structure of the network: how many neurons it has and how they are connected. Next, we need to define an error function, which is used to evaluate how well the network is currently performing and how its neuron connections should be adjusted to reduce the error. Synaptic strength determines neural activity, neural activity determines network output, and network output determines network error.

Currently, “back propagation” is the most commonly used and successful deep neural network training algorithm in the field of machine learning. Networks trained with backpropagation occupy a mainstay position in the recent wave of machine learning, achieving good results in speech and image recognition, language translation, etc.

It has also promoted progress in unsupervised learning, where it has become indispensable in image and speech generation, language modeling and some high-order prediction tasks. Combined with reinforcement learning, backpropagation can solve many control problems, such as mastering Atari games and defeating top human players in Go and poker.

The backpropagation algorithm sends error signals into feedback connections to help the neural network adjust synaptic strength. It is very commonly used in the field of supervised learning. But feedback connections in the brain seem to have different functions, and much of the brain's learning is unsupervised. Therefore, can the backpropagation algorithm explain the brain's feedback mechanism? There is currently no definitive answer.
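The training loop described above (fix a structure, define an error function, send the error backwards to adjust connection strengths) can be sketched in a few lines of plain Python. Everything here is an illustrative assumption: the 2-2-1 network shape, the tanh activation, the starting weights, the XOR data and the learning rate are not taken from the article.

```python
import math

def forward(params, x):
    """Forward pass: 2 inputs -> 2 tanh hidden units -> 1 linear output."""
    w1, b1, w2, b2 = params
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(w1, b1)]
    output = sum(w * h for w, h in zip(w2, hidden)) + b2
    return hidden, output

def loss(params, data):
    """Error function: mean of 0.5 * (output - target)^2 over the data set."""
    return sum(0.5 * (forward(params, x)[1] - t) ** 2 for x, t in data) / len(data)

def backprop(params, data):
    """Gradient of the error with respect to every weight, via the chain rule."""
    w1, b1, w2, b2 = params
    g_w1 = [[0.0, 0.0], [0.0, 0.0]]
    g_b1 = [0.0, 0.0]
    g_w2 = [0.0, 0.0]
    g_b2 = 0.0
    n = len(data)
    for x, t in data:
        hidden, output = forward(params, x)
        d_out = (output - t) / n  # error signal at the output
        g_b2 += d_out
        for j in range(2):
            g_w2[j] += d_out * hidden[j]
            # send the error signal back through hidden unit j (tanh derivative)
            d_hid = d_out * w2[j] * (1.0 - hidden[j] ** 2)
            g_b1[j] += d_hid
            for i in range(2):
                g_w1[j][i] += d_hid * x[i]
    return g_w1, g_b1, g_w2, g_b2

def train(params, data, lr=0.1, steps=200):
    """Plain gradient descent: move every weight against its gradient."""
    for _ in range(steps):
        w1, b1, w2, b2 = params
        g_w1, g_b1, g_w2, g_b2 = backprop(params, data)
        params = (
            [[w - lr * g for w, g in zip(wr, gr)] for wr, gr in zip(w1, g_w1)],
            [b - lr * g for b, g in zip(b1, g_b1)],
            [w - lr * g for w, g in zip(w2, g_w2)],
            b2 - lr * g_b2,
        )
    return params

# Illustrative data (XOR) and arbitrary fixed starting weights.
XOR = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]
initial = ([[0.5, -0.4], [0.3, 0.8]], [0.1, -0.2], [0.7, -0.6], 0.05)
trained = train(initial, XOR)
print(loss(initial, XOR), loss(trained, XOR))
```

The sketch only shows the mechanics of supervised error feedback; it makes no claim about how feedback connections in the brain work.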

[Intrabrain connections]

The special connection method between neurons in the human brain is an important direction in studying the uniqueness of the human brain. Magnetic resonance imaging is a key tool in this research. This technique can visualize the long fibers that extend from neurons and connect different brain areas without opening the skull. These connections act like wires that carry electrical signals between neurons. Together, these connections are called a connectome, and they provide clues about how the brain processes information.

Assuming that each nerve cell is connected to all other nerve cells, this one-to-many connection group is the most efficient. But this model requires a lot of space and energy to accommodate all the connections and maintain their normal operation, so it is definitely not feasible. Another mode is a one-to-one connection, where each neuron is connected to only a single other neuron. This kind of connection is less difficult, but it is also less efficient: information must pass through a large number of nerve cells like stepping stones to get from point A to point B.

"Real life is somewhere in between," says Yaniv Assaf of Tel Aviv University, who published a survey of the connectomes of 123 mammalian species in Nature Neuroscience. The team found that the number of stepping stones needed to get information from one location to another is roughly equal in the brains of different species, and the connections used are similar. However, there are differences in the way in which the layout of connections in the brain is realized among different species. In species with a few long-distance connections between the two hemispheres, there tend to be more shorter connections in each hemisphere, and adjacent brain regions within the hemisphere communicate frequently.

[Memory]

There are billions of nerve cells in the human brain. They interact with each other through synapses, forming extremely complex interconnections. Memory is the mutual activation of brain nerve cells. Some of these activations are short-lived, some are long-lasting, and some are somewhere in between.

There are four basic forms of interaction between brain neurons:

- Simple excitation: When one neuron is excited, it excites another connected neuron.

- Simple inhibition: Excitation of one neuron increases the sensory threshold of another connected neuron.

- Positive feedback: The excitement of one neuron stimulates the excitement of another connected neuron, which in turn directly or indirectly lowers the excitation threshold of the former, or feeds back a signal to the sensory synapse of the former.

- Negative feedback: The excitement of one neuron stimulates the excitement of another connected neuron, which in turn directly or indirectly increases the excitation threshold of the former, causing the excitability of the former to decrease.

There are many types of neuron cells with different activities in the human brain, which are respectively responsible for short-term, medium-term and long-term memory.

Active neuron cells are responsible for short-term memory. Their number is small and determines a person's short-term response ability. When this type of cell is stimulated by a nerve signal, its sensing threshold will temporarily decrease, but its synapses generally do not proliferate, and the decrease in sensing threshold can only last for a few seconds to minutes, and then returns to normal levels.

Neutral neuron cells are responsible for medium-term memory. Their number is moderate, and they determine a person's learning adaptability. When this kind of cell is stimulated by an appropriate amount of nerve signals, synaptic proliferation occurs. However, this proliferation is slow and requires multiple stimulations to produce significant changes, and the proliferated state lasts only days to weeks and degrades relatively easily.

Lazy neuron cells are responsible for long-term memory. Their number is larger, and they determine a person's ability to accumulate knowledge. This kind of cell undergoes synaptic proliferation only when it is stimulated by a large number of repeated nerve signals. This proliferation is extremely slow and requires many repeated stimulations to produce significant changes, but the proliferated state can be maintained for months to decades and does not degrade easily.

When a brain neuron cell is stimulated and excited, its synapses proliferate or its induction threshold decreases. The synapses of neuron cells that are frequently stimulated and repeatedly excited have stronger signal sending and receiving abilities than those of neuron cells that are stimulated and excited less often.

When two neuron cells with adjacent synapses are stimulated and become excited at the same time, the synapses of both cells proliferate, so that the interaction between their adjacent synapse pairs is enhanced. When this synchronized stimulation is repeated many times and the interaction between the adjacent synapse pairs reaches a certain intensity (reaches or exceeds a certain threshold), excitement begins to propagate between the two cells: when either neuron cell is stimulated and becomes excited, it causes the other to become excited as well. This forms a mutual echo connection between the neuron cells, which is a memory connection.

Therefore, memory refers to recallability, which is determined by the smoothness of the connection between neuron cells, that is, the strength of the connection between neuron cells is greater than the induction threshold, forming an explicit connection between neuron cells. This is the nature of brain memory.
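The co-activation rule described above resembles what machine learning calls a Hebbian update. The following is a toy sketch under that assumption; the learning rate of 0.25, the threshold of 1.0 and the function names are arbitrary illustrative choices, not measured quantities.

```python
def stimulate(strength, pre_active, post_active, rate=0.25):
    """Strengthen the connection only when both neurons fire together."""
    if pre_active and post_active:
        return strength + rate
    return strength

def is_memory_connection(strength, threshold=1.0):
    """Exciting one neuron excites the other once the threshold is reached."""
    return strength >= threshold

strength = 0.0
history = []
# Three synchronized stimulations, one unsynchronized, then one more synchronized.
for pre, post in [(True, True), (True, True), (True, True),
                  (True, False), (True, True)]:
    strength = stimulate(strength, pre, post)
    history.append((strength, is_memory_connection(strength)))

print(history)
```

Only repeated synchronized stimulation pushes the connection over the threshold; the unsynchronized event in the middle leaves the strength unchanged.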

[Attention Mechanism]

When the human brain reads, it is not a strict decoding process, but close to a pattern recognition process. The brain will automatically ignore low-possibility and low-value information, and will also automatically correct the reading content to "the version that the brain thinks is correct" based on contextual information. This is the so-called human brain attention.

"Attention mechanism" is a data processing method in machine learning that simulates human brain attention. It is widely used in machine learning tasks of various types, such as natural language processing, image recognition and speech recognition. For example, machine translation often uses the "LSTM + attention" model. LSTM (Long Short-Term Memory) is a kind of RNN (Recurrent Neural Network). Put simply, each neuron has an input gate, an output gate and a forget gate. The input gate and output gate connect the LSTM neurons end to end, while the forget gate weakens or forgets meaningless content. The "attention mechanism" is applied to the forget gate of LSTM, making machine reading closer to human reading habits and making the translation results context-aware.
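As a rough illustration of how attention down-weights low-value information, here is a sketch of generic scaled dot-product attention for a single query. This is the common textbook formulation, swapped in for illustration; it is not the LSTM-specific variant mentioned above, and the vectors are invented.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    # the context is the weighted average of the value vectors
    context = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
    return weights, context

# Three hypothetical context items: one relevant, one neutral, one opposed.
query = [1.0, 0.0]
keys = [[4.0, 0.0], [0.0, 4.0], [-4.0, 0.0]]
values = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
weights, context = attend(query, keys, values)
print(weights, context)
```

The item whose key matches the query receives most of the weight, so the resulting context vector is dominated by that item's value.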

[Multimodal Neurons]

Fifteen years ago, Quiroga and others discovered that the human brain has multimodal neurons. These neurons respond to abstract concepts surrounding a high-level theme rather than to specific visual features. The most famous of them is the "Halle Berry" neuron, which responds only to photos, sketches and text of the American actress Halle Berry. This example has been used in "Scientific American" and "The New York Times" [11].

CLIP, released by OpenAI, uses multimodal neurons to achieve a general vision system that is comparable to ResNet-50 in performance. On some challenging data sets, CLIP's performance exceeds that of some existing vision systems.

Introducing multimodal neurons into machine learning means deep, multi-dimensional semantic understanding of multimodal data such as text, sound, pictures and videos. It includes semantic understanding technologies in many areas, such as data semantics, knowledge semantics, visual semantics, integrated speech semantics and natural language semantics. For example, visual semantics allows machines to go from seeing a video clearly to understanding it, and to extract structured semantic knowledge.

3. The basic composition of the intelligent system

The autonomous driving system is a typical intelligent system. The American SAE classification standard for driving automation divides autonomous driving systems into six levels, L0 to L5, according to the degree of automation:

| Level | Name | Definition |
| --- | --- | --- |
| L0 | No automation | The driver performs all operational tasks such as steering, braking, accelerating or decelerating. |
| L1 | Driver assistance | With assistance from the vehicle's automated driving system, the driver still handles all acceleration, braking and monitoring of the surrounding environment. |
| L2 | Partial automation | The automated driving system can assist with steering or acceleration and relieve the driver of some tasks. The driver must be ready to take control of the vehicle at all times and remains responsible for most safety-critical functions and all environmental monitoring. |
| L3 | Conditional automation | The vehicle's automated driving system itself controls all monitoring of the environment. Driver attention is still important at this level, but the driver can disengage from "safety-critical" functions such as braking. |
| L4 | High automation | The vehicle's automated driving system first notifies the driver when conditions are safe, and the driver then switches the vehicle into this mode. It cannot judge between more dynamic driving situations, such as traffic jams or merging onto the highway. The system is capable of steering, braking, accelerating, monitoring the vehicle and road, responding to events, and determining when to change lanes, turn and use signals. |
| L5 | Full automation | The automated driving system controls all critical tasks, monitors the environment and identifies unique driving conditions such as traffic jams, without requiring driver attention. |

From the classification of autonomous driving systems we can see that at level L0 of an intelligent system, decisions are made entirely by humans. At L1~L2, machines collate and analyze data based on the full data set while humans make the inferences, judgments and decisions; this is the so-called data-driven mode. At L3~L4, machines perform data collation, analysis, logical reasoning, judgment and decision-making based on the full data set, but require human intervention at the appropriate time. L5 is a fully intelligent machine that requires no human intervention; this is the so-called intelligence-driven mode.

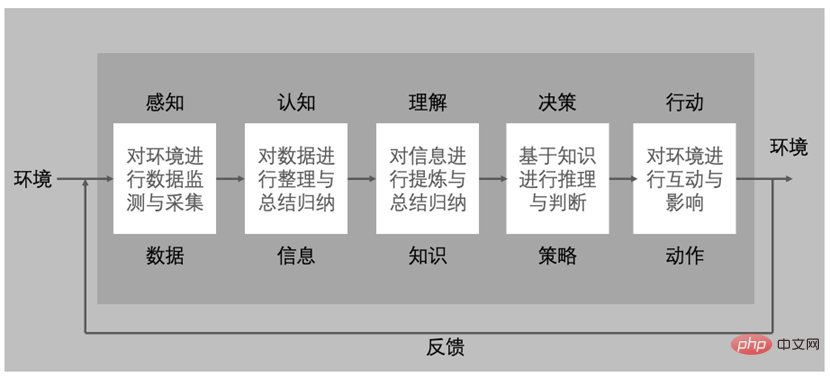

For a machine to be intelligent, that is, for a machine to become an intelligent system, it must at least have the components shown in the figure below: perception, cognition, understanding, decision-making, and action.

The role of the sensing component is to monitor and collect data from the environment, and the output is data. The essence is to digitize the physical space and completely map the physical space to the data space.

The role of the cognitive component is to organize and summarize data and extract useful information.

The function of the understanding component is to further refine and summarize the extracted information to obtain knowledge. Knowledge understood by humans is expressed in natural language, and for machines, it is expressed in “models” trained on data sets that represent the problem space.

The role of the decision-making component is to conduct reasoning and judgment based on knowledge. For the machine, it is to use the trained model to perform reasoning and judgment in the new data space and generate strategies for the target tasks.

The role of the action component is to interact with the environment based on strategies and have an impact on the environment.

The function of the feedback component is to generate feedback after an action has affected the environment. This feedback drives the perception component to collect more data, so that the system continuously acquires more knowledge and makes better decisions on its target tasks, forming a closed loop of continuous iterative evolution.

4. Intelligent security

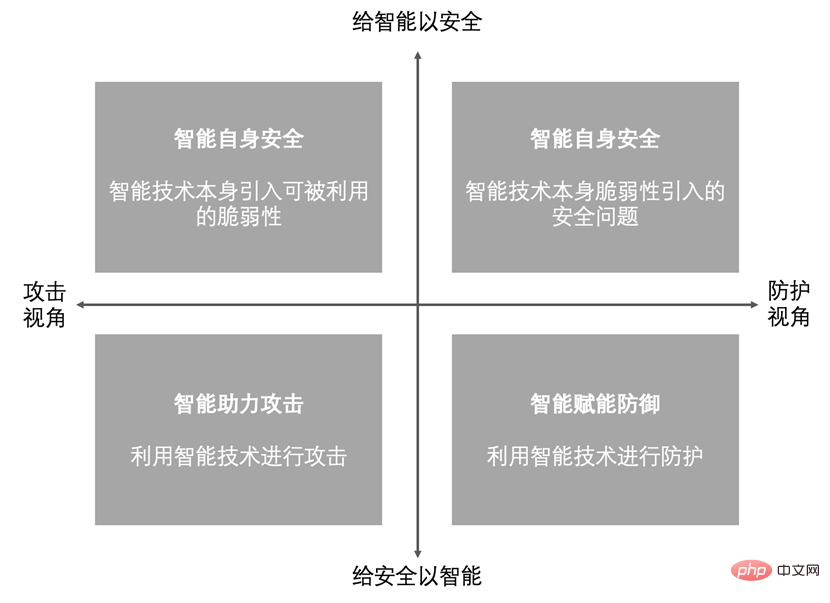

The combination of artificial intelligence and network security always has two dimensions and four quadrants [9]: vertically, one end is giving security to intelligence and the other end is giving intelligence to security; horizontally, one end is the attack perspective and the other end is the defense perspective. As shown in the figure below, the four quadrants represent the four functions of the combination of the two:

Intelligent self-security covers the exploitable vulnerabilities that come with introducing intelligent technology and the security issues caused by the fragility of the technology itself. It mainly includes the security of businesses that use artificial intelligence, algorithm model security, data security, platform security, etc.

The security issues of algorithm models mainly include threats to training integrity, threats to test integrity, lack of model robustness, model bias threats, etc. Examples are evasion attacks (manipulating model decisions and results through adversarial samples), poisoning attacks (injecting malicious data to reduce model reliability and accuracy), inference attacks (inferring whether specific data was used for model training), model extraction attacks (exposing algorithm details through malicious queries), model inversion attacks (inferring input data from output data), reprogramming attacks (repurposing AI models for illegal uses), attribute inference attacks, Trojan attacks, backdoor attacks, etc. Data security mainly includes data leakage based on model output and data leakage based on gradient updates; platform security includes hardware device security issues and system and software security issues.
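To show what "manipulating model decisions through adversarial samples" can look like in the simplest case, here is a sketch of an evasion attack on a linear detector. The weights, bias, feature values and perturbation size are invented for illustration, and the sign-of-gradient step is one common technique among many.

```python
def score(weights, bias, x):
    """Linear detector: a positive score means the sample is flagged."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias

def predict(weights, bias, x):
    return 1 if score(weights, bias, x) > 0 else 0

def sign(v):
    return (v > 0) - (v < 0)

def evade(weights, x, epsilon):
    """Shift every feature by at most epsilon in the direction that lowers the score."""
    # For a linear model, the gradient of the score with respect to x is the weight vector.
    return [xi - epsilon * sign(w) for xi, w in zip(x, weights)]

# Hypothetical detector and a sample it correctly flags.
weights, bias = [2.0, -1.0, 0.5], -0.5
sample = [0.6, 0.2, 0.4]
adversarial = evade(weights, sample, epsilon=0.25)

print(predict(weights, bias, sample), predict(weights, bias, adversarial))
```

A small, bounded change to each feature is enough to push the sample across the decision boundary, which is why robustness is listed as a model security issue.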

The defense technologies for these security issues of artificial intelligence mainly include algorithm model self-security enhancement, AI data security and privacy leakage defense, and AI system security defense. Algorithm model self-security enhancement technologies include training-data-oriented defenses (such as adversarial training, gradient masking, blocking transferability, data compression, data randomization, etc.), model-oriented defenses (such as regularization, defensive distillation, feature squeezing, deep contractive networks, masking defenses, etc.), attack-specific defenses, robustness enhancement, interpretability enhancement, etc. AI data security and privacy leakage defense technologies mainly include model structure defense, information obfuscation defense, query control defense, etc.

Giving security to intelligence addresses the fact that intelligent technology itself brings new vulnerabilities, which can be exploited by attackers and may introduce new security risks for defenders.

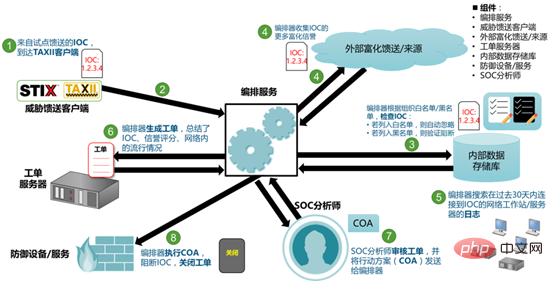

Giving intelligence to security means that attackers can use intelligent technology to carry out attacks, and defenders can use intelligent technology to improve their security protection capabilities. This is mainly reflected in the automation of security response and the autonomy of security decision-making. There are currently two mainstream approaches to improving security response automation:

- SOAR: Security Orchestration, Automation and Response;

- OODA: Observe-Orient-Decide-Act. IACD (Integrated Adaptive Cyber Defense) uses OODA as its framework.
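An automated response workflow built on the Observe-Orient-Decide-Act loop can be sketched as a small playbook. This is a hypothetical illustration, not the API of any SOAR product: the `Alert` fields, the severity scale, the blocklist and the action names are all assumptions.

```python
from dataclasses import dataclass

@dataclass
class Alert:
    source_ip: str
    signature: str
    severity: int  # hypothetical scale: 1 (low) to 10 (high)

# Illustrative blocklist using an address from a documentation range.
KNOWN_BAD_IPS = {"203.0.113.7"}

def orient(alert):
    """Enrich the observed alert with context (here, a static blocklist lookup)."""
    return {"alert": alert, "known_bad": alert.source_ip in KNOWN_BAD_IPS}

def decide(context):
    """Choose a response action from the enriched context."""
    alert = context["alert"]
    if context["known_bad"] or alert.severity >= 8:
        return "block_ip"
    if alert.severity >= 5:
        return "open_ticket"
    return "log_only"

def act(action, alert, log):
    """Record the action; a real system would call firewalls or ticketing here."""
    log.append((action, alert.source_ip))

def run_playbook(alerts):
    log = []
    for alert in alerts:          # observe
        context = orient(alert)   # orient
        action = decide(context)  # decide
        act(action, alert, log)   # act
    return log

alerts = [
    Alert("203.0.113.7", "port-scan", 3),
    Alert("198.51.100.4", "sql-injection", 9),
    Alert("198.51.100.9", "failed-login", 5),
    Alert("198.51.100.10", "ping", 1),
]
actions = run_playbook(alerts)
print(actions)
```

Keeping the decision step as a separate function makes it the natural place to swap fixed rules for a learned model as the system becomes more autonomous.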

The following figure is a schematic diagram of a SOAR-centered automatic response workflow:

In 1994, cognitive scientist Steven Pinker wrote in "The Language Instinct" that "for artificial intelligence, difficult problems are easy to solve and simple problems are difficult to solve." A "simple complex problem" is one whose problem space is closed but which is itself highly complex; playing Go is an example. A "complex simple problem" is one whose problem space is infinitely open but which is itself not very complex.

Network security, for example, is a complex simple problem. The technologies and methods of security attacks change all the time and cannot be listed exhaustively, yet any specific network attack usually leaves traces that can be followed.

Today’s intelligent technology is often stronger than humans in the field of “simple complex problems”, but for “complex simple problems”, artificial intelligence often fails due to space explosion caused by generalization boundaries.

Unfortunately, cybersecurity problems are complex simple problems, so applying artificial intelligence in the cybersecurity problem space faces challenges. In particular, Moravec's paradox is even more evident in the field of cybersecurity. The paradox is a phenomenon, discovered by artificial intelligence and robotics scholars, that runs contrary to common sense: unlike traditional assumptions, the high-order intelligence unique to humans, such as reasoning, requires very little computation, whereas unconscious skills and intuition require a great deal of computing power.

The application of artificial intelligence technology to network security has the following challenges: problem space is not closed, sample space is asymmetric, inference results are either inaccurate or uninterpretable, model generalization ability declines, and cross-domain thinking integration challenges.

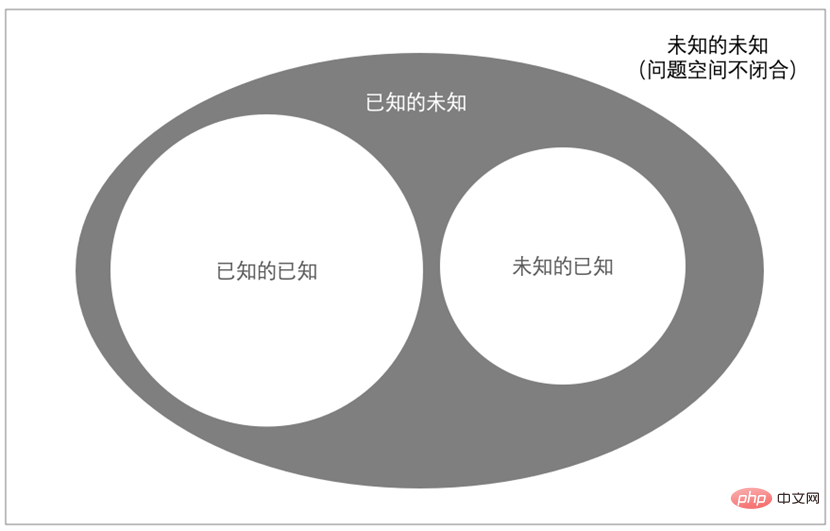

1. The problem space is not closed

As shown in the figure above, the problem space of network security includes the known and the unknown. The known includes known knowns, such as a known security vulnerability, and unknown knowns, such as a known and exposed security vulnerability that has not yet been discovered. The unknown includes known unknowns, such as the certainty that a software system contains some security vulnerability, and unknown unknowns, where we simply do not know what risks or threats there will be.

2. Sample space asymmetry

The unknown unknown is an unavoidable dilemma in network security. It keeps the network security problem space from being closed, which leaves negative data (such as attack data and risk data) in short supply, makes the feature space asymmetric, and in turn prevents the feature space from truly representing the problem space. A model is a hypothesis about the world built in an existing data space and used to make inferences in a new data space. Today's artificial intelligence technology handles nonlinear, complex relationships between input and output well, but it struggles with problem spaces whose sample space is relatively open and seriously asymmetric.

3. Uninterpretability of reasoning results

Artificial intelligence applications aim to output decision-making judgments. Explainability refers to the extent to which humans can understand the reasons for decisions. The more explainable an AI model is, the easier it is for people to understand why certain decisions or predictions were made. Model interpretability refers to the understanding of the internal mechanisms of the model and the understanding of the model results. In the modeling phase, it assists developers in understanding the model, compares and selects models, and optimizes and adjusts the model if necessary; in the operational phase, it explains the internal mechanism of the model to the decision-maker and explains the model results.

In the modeling stage, artificial intelligence technology faces a tension between decision accuracy and decision interpretability. Neural networks have high decision accuracy but poor interpretability; decision trees have strong interpretability, but their accuracy is not high. Of course, there are already ways to combine the two and strike a balance to a certain extent.

In the operational stage, explaining the internal mechanism of the model and its decision results to the decision-maker involves ethical dilemmas around data privacy, model security, etc.

4. Generalization ability decline

In the 1970s, the Bell-LaPadula security model pointed out that a system is secure if and only if it starts in a secure state and never falls into an insecure state.

Artificial intelligence technology uses models to represent problem spaces, but since the essence of security is a confrontation of resources and intelligence, the security problem space is never closed. A model that performs well on the training set faces constant confrontation as soon as it goes online in a large-scale real environment. It then keeps falling into failure states, and its generalization ability declines.

5. Intelligent Security Autonomy Model

Knowledge and reasoning are the basis of human intelligence. To realize reasoning and decision-making, computers need to solve three problems: knowledge representation and forms of reasoning, representation of and reasoning with uncertain knowledge, and representation of and reasoning with common sense.

Card games are games of incomplete information, and playing cards is much harder for a computer than playing chess. In 2017, artificial intelligence defeated humans in 6-player no-limit Texas Hold'em poker. Card games are problems whose probabilities are known, whereas the real environment is completely uncertain and may even be adversarial. Therefore, autonomous decision-making in complex environments is very challenging.

The challenges of autonomous decision-making in confrontational scenarios mainly come from two aspects: the dynamics of the environment and the complexity of the task. The dynamics of the environment include uncertain conditions, incomplete information, dynamic changes in situations, and real-time games; the complexity of tasks includes information collection, offense, defense, reconnaissance, harassment, etc.

Autonomous decision-making in confrontational scenarios usually uses common sense and logical deduction to make up for incomplete information, and then generates plans by integrating human domain knowledge and reinforcement learning results to assist in making correct decisions.

Autonomous decision-making in complex environments also needs to solve the problem of how to adapt to environmental changes and make decision-making changes accordingly. For example, autonomous driving builds a model after identifying objects, and makes real-time driving planning based on this, but it is difficult to deal with emergencies. Therefore, autonomous driving also requires driving knowledge and experience, and this experiential knowledge needs to be learned in the process of continuous interaction with the environment, that is, reinforcement learning.

Therefore, the ability of an intelligence-empowered security system to make autonomous decisions in threat detection and protection is one of the key indicators of how intelligent it is. By analogy with the classification of autonomous driving systems, an intelligent security autonomy model can be constructed.

| Level | Name | Definition |
| --- | --- | --- |
| L0 | No autonomy | Defensive countermeasures rely entirely on manual work by security experts. |
| L1 | Security expert assistance | The protection system detects and defends against known attacks and threats. Optimization of the accuracy rate, false negative rate, and false positive rate, as well as threat analysis and source tracing, must be done manually by security experts. |
| L2 | Partial autonomy | The protection system detects and protects against known attacks and threats and can also perceive unknown threats. Optimization of the accuracy rate, false negative rate, and false positive rate, as well as threat analysis and source tracing, must still be performed manually by security experts. |
| L3 | Conditional autonomy | The protection system detects and protects against known and unknown attacks and threats, and can continuously optimize its accuracy rate, false negative rate, and false positive rate through autonomous learning and upgrading. Threat analysis, source tracing, and response must still be performed manually by security experts. |
| L4 | High autonomy | The protection system completes the detection, decision-making, protection, analysis, and source tracing of all attacks and threats. Security experts intervene and respond only occasionally in the process. |
| L5 | Full autonomy | The protection system autonomously completes the detection, decision-making, protection, analysis, and source tracing of all attacks and threats, without any intervention or response from security experts. |
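As a rough illustration, the levels above can be read as a cumulative ladder: a system reaches a level only if it also has every capability required by the lower levels. The sketch below encodes that reading. The `Capabilities` fields, the `AutonomyLevel` member names, and the `classify` function are hypothetical names introduced for this example; the article itself does not define a formal scoring procedure.

```python
from dataclasses import dataclass
from enum import IntEnum


class AutonomyLevel(IntEnum):
    """Levels of the intelligent security autonomy model (L0 to L5)."""
    NO_AUTONOMY = 0
    EXPERT_ASSISTANCE = 1
    PARTIAL_AUTONOMY = 2
    CONDITIONAL_AUTONOMY = 3
    HIGH_AUTONOMY = 4
    FULL_AUTONOMY = 5


@dataclass(frozen=True)
class Capabilities:
    """Hypothetical capability flags, one per step of the ladder."""
    detects_known_threats: bool = False           # required for L1
    perceives_unknown_threats: bool = False       # required for L2
    self_optimizes_metrics: bool = False          # accuracy, FN rate, FP rate (L3)
    automates_analysis_and_tracing: bool = False  # analysis, tracing, decisions (L4)
    needs_no_expert_intervention: bool = False    # required for L5


def classify(caps: Capabilities) -> AutonomyLevel:
    """Return the highest level whose requirements are all met, in order."""
    ladder = [
        caps.detects_known_threats,
        caps.perceives_unknown_threats,
        caps.self_optimizes_metrics,
        caps.automates_analysis_and_tracing,
        caps.needs_no_expert_intervention,
    ]
    level = 0
    for satisfied in ladder:
        if not satisfied:
            break  # a missing lower-level capability caps the overall level
        level += 1
    return AutonomyLevel(level)
```

For example, `classify(Capabilities(detects_known_threats=True, perceives_unknown_threats=True))` yields `AutonomyLevel.PARTIAL_AUTONOMY`. Treating the ladder as strictly cumulative is a design choice of this sketch: a system that perceives unknown threats but misses known ones is rated L0 here rather than being given partial credit.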
