Microsoft multi-modal ChatGPT is coming? 1.6 billion parameters to handle tasks such as looking at pictures and answering questions, IQ tests, etc.

In NLP, large language models (LLMs) have successfully served as a general-purpose interface across natural language tasks. As long as a task's input and output can be converted to text, the LLM-based interface can be adapted to it. For example, a summarization task takes documents as input and outputs a summary, so we can feed the input documents into a language model and have it generate the summary.

Despite the successful application of LLMs to NLP tasks, researchers still struggle to use them natively for multimodal data such as images and audio. As a fundamental component of intelligence, multimodal perception is a necessary condition for achieving artificial general intelligence, both for knowledge acquisition and for dealing with the real world. More importantly, unlocking multimodal input can greatly expand the application of language models to more high-value fields, such as multimodal machine learning, document intelligence, and robotics.

Therefore, in the paper "Language Is Not All You Need: Aligning Perception with Language Models", the Microsoft team introduced KOSMOS-1, a multimodal large language model (MLLM) that can perceive general modalities, follow instructions (i.e., zero-shot learning), and learn in context (i.e., few-shot learning). The research goal is to align perception with LLMs so that the model can see and talk. The researchers trained KOSMOS-1 from scratch following the METALM approach (see the paper "Language Models are General-Purpose Interfaces").

- Paper address: https://arxiv.org/pdf/2302.14045.pdf

- Project address: https://github.com/microsoft/unilm

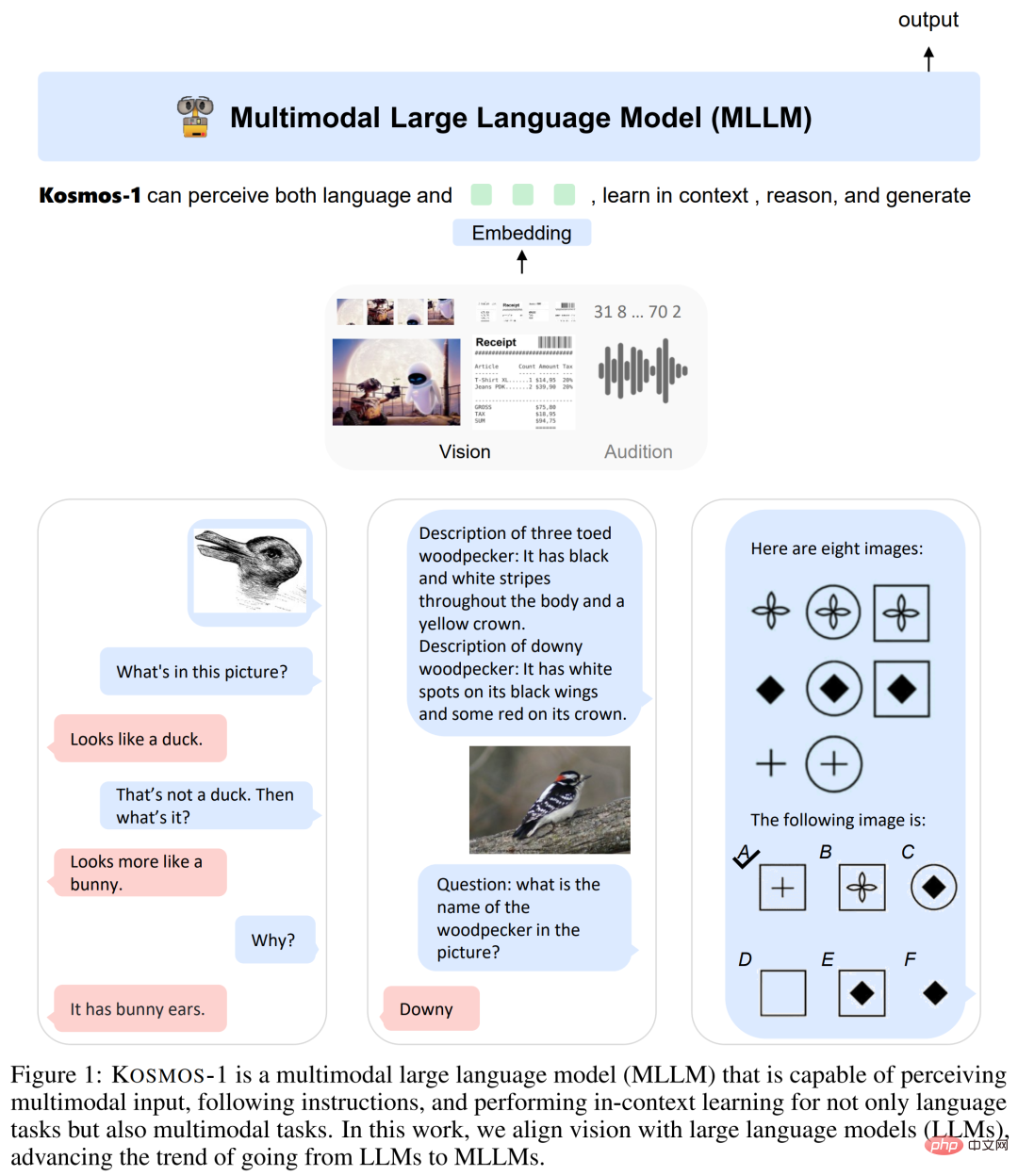

As shown in Figure 1, the researchers use a Transformer-based language model as a general interface and connect it to perception modules. They trained the model on a web-scale multimodal corpus that includes text data, arbitrarily interleaved images and text, and image-caption pairs. In addition, the researchers calibrated the model's cross-modal instruction-following ability by transferring language-only data.

As a result, the KOSMOS-1 model natively supports language, perception-language, and vision tasks in zero-shot and few-shot learning settings, as shown in Table 1 below.

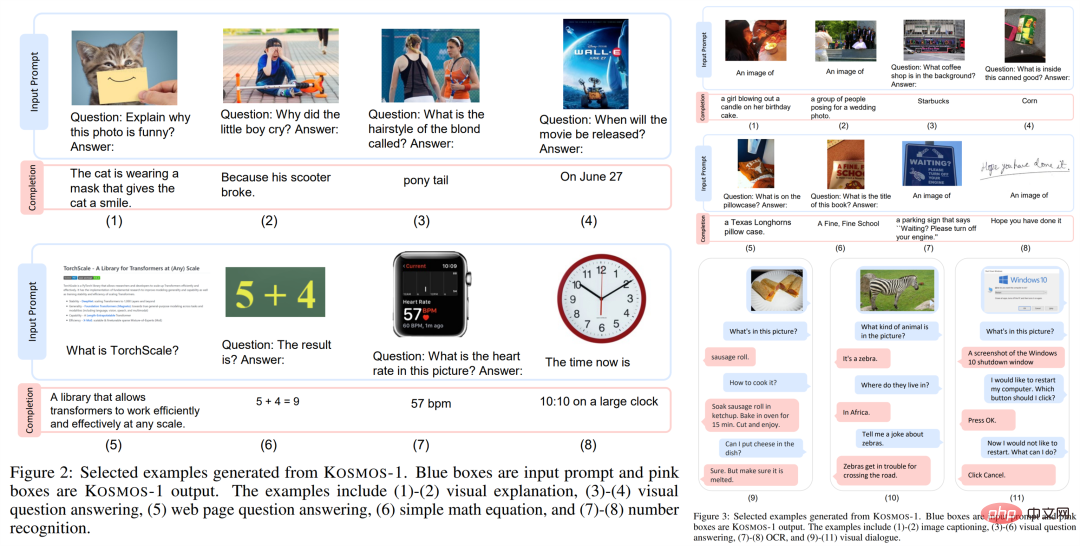

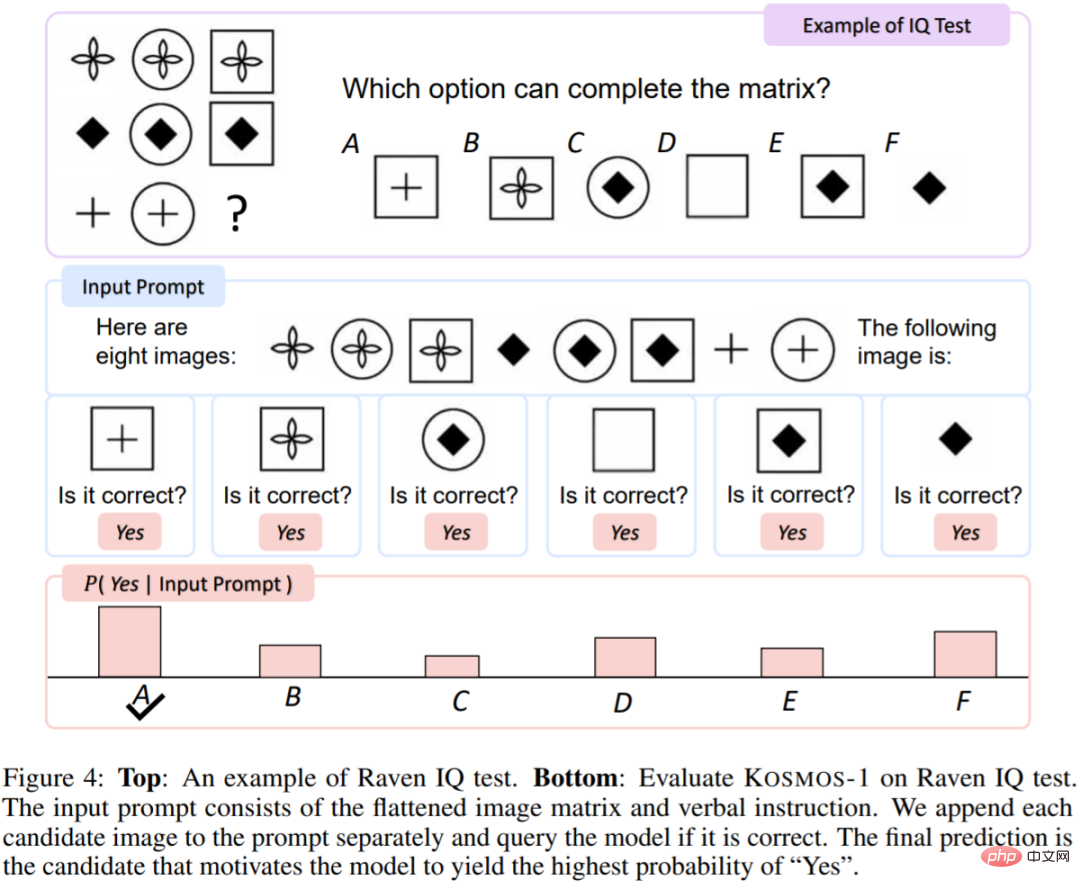

The researchers show some generated examples in Figures 2 and 3 below. In addition to various natural language tasks, the KOSMOS-1 model natively handles a wide range of perception-intensive tasks, such as visual dialogue, visual explanation, visual question answering, image captioning, simple math equations, OCR, and zero-shot image classification with descriptions. They also built an IQ test benchmark based on Raven's Progressive Matrices (RPM) to assess the MLLM's nonverbal reasoning ability.

These examples demonstrate that native support for multimodal perception opens new opportunities to apply LLMs to new tasks. In addition, compared with an LLM, the MLLM achieves better commonsense reasoning performance, indicating that cross-modal transfer facilitates knowledge acquisition.

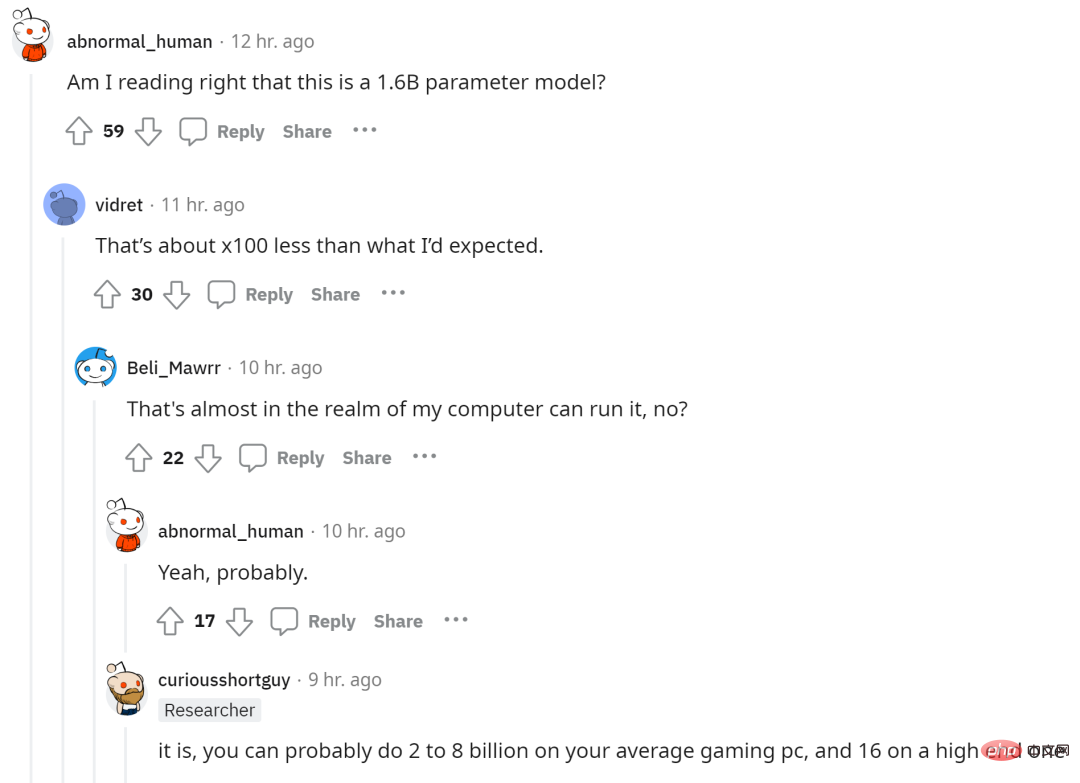

Because KOSMOS-1 has only 1.6 billion parameters, some netizens expressed the hope of running this large multimodal model on their own computers.

KOSMOS-1: A multimodal large-scale language model

As shown in Figure 1, KOSMOS-1 is a multimodal language model that can perceive general modalities, follow instructions, learn in context, and generate output. Specifically, the backbone of KOSMOS-1 is a Transformer-based causal language model. In addition to text, other modalities such as vision and speech can be embedded and fed into the model. The Transformer decoder serves as a general interface for multimodal inputs. Once the model is trained, KOSMOS-1 can be evaluated on both language tasks and multimodal tasks in zero-shot and few-shot settings.

The Transformer decoder perceives all modalities in a unified way: the input is flattened into a single sequence marked with special tokens. For example, `<s>` indicates the beginning of the sequence and `</s>` indicates the end of the sequence. The special tokens `<image>` and `</image>` mark the start and end of the encoded image embedding.
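The flattening step can be illustrated with a small sketch. It is not the authors' code: the `Text` and `Image` classes, the `flatten` helper, and the whitespace "tokenizer" are hypothetical stand-ins, and the `[emb:...]` entry is only a placeholder for where the encoded image embedding would sit. The special token names `<s>`, `</s>`, `<image>`, and `</image>` are taken from the KOSMOS-1 paper.

```python
from dataclasses import dataclass
from typing import List, Union


@dataclass
class Text:
    content: str


@dataclass
class Image:
    name: str  # placeholder; a real model would carry encoder embeddings here


Segment = Union[Text, Image]


def flatten(segments: List[Segment]) -> List[str]:
    """Flatten interleaved text and image segments into one sequence."""
    seq = ["<s>"]  # beginning of sequence
    for seg in segments:
        if isinstance(seg, Text):
            # Whitespace splitting stands in for a real subword tokenizer.
            seq.extend(seg.content.split())
        else:
            # The image embedding is wrapped in its own boundary tokens.
            seq.extend(["<image>", f"[emb:{seg.name}]", "</image>"])
    seq.append("</s>")  # end of sequence
    return seq


example = flatten([Text("A photo of"), Image("cat.png"), Text("on a sofa")])
print(example)
```

The decoder then sees text and image content as positions in one sequence, which is what lets a single model handle interleaved documents.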

The embedding module encodes text tokens and other input modalities into vector representations. For input tokens, the study uses a lookup table to map them to embeddings. For continuous-signal modalities (e.g., images and audio), the input can also be represented as discrete codes.

The resulting sequence of input embeddings is then fed to the Transformer-based decoder. The causal model processes the sequence autoregressively, producing the next token at each step. In summary, the MLLM framework can flexibly handle various data types as long as the inputs are represented as vectors.
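The embed-then-decode loop described above can be sketched in a few lines. This is a toy illustration under stated assumptions: `TABLE`, `embed`, `greedy_decode`, and `toy_next_token` are hypothetical names, and `toy_next_token` is a scripted stand-in for the Transformer decoder rather than a real model.

```python
from typing import Callable, Dict, List

Vector = List[float]

# Hypothetical two-dimensional lookup table; real embeddings are much larger.
TABLE: Dict[str, Vector] = {
    "<s>": [0.0, 0.0],
    "a": [1.0, 0.0],
    "cat": [0.0, 1.0],
    "</s>": [1.0, 1.0],
}


def embed(tokens: List[str], table: Dict[str, Vector]) -> List[Vector]:
    """Map each discrete token to its vector through the lookup table."""
    return [table[t] for t in tokens]


def toy_next_token(embeddings: List[Vector]) -> str:
    """Stand-in for the decoder: chooses a token from the prefix length only."""
    script = ["<s>", "a", "cat", "</s>"]
    return script[min(len(embeddings), len(script) - 1)]


def greedy_decode(
    prompt: List[str],
    table: Dict[str, Vector],
    next_token: Callable[[List[Vector]], str],
    max_new_tokens: int = 8,
    eos: str = "</s>",
) -> List[str]:
    """Autoregressive loop: embed the prefix, predict one token, append, repeat."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        tok = next_token(embed(tokens, table))
        tokens.append(tok)
        if tok == eos:
            break
    return tokens


result = greedy_decode(["<s>"], TABLE, toy_next_token)
print(result)  # ['<s>', 'a', 'cat', '</s>']
```

Because the decoder only ever consumes vectors, an image embedding could occupy a position in the prefix just as a text embedding does.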

Model training

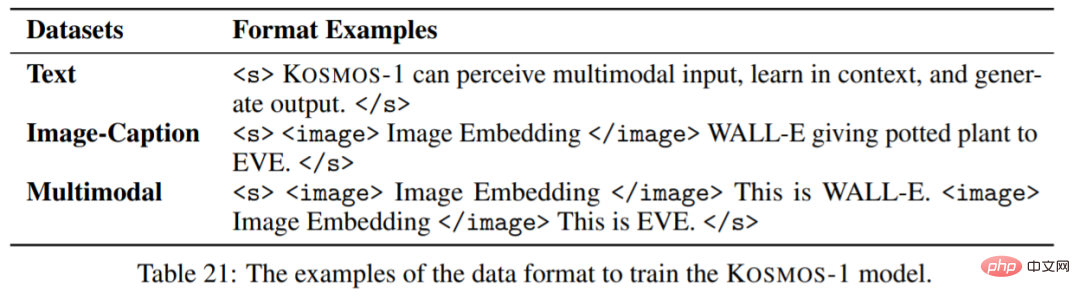

First, the training data. The datasets include text corpora, image-caption pairs, and interleaved image-text data. Specifically, the text corpora include The Pile and Common Crawl (CC); the image-caption pairs include English LAION-2B, LAION-400M, COYO-700M, and Conceptual Captions; and the interleaved image-text multimodal data comes from a Common Crawl snapshot.

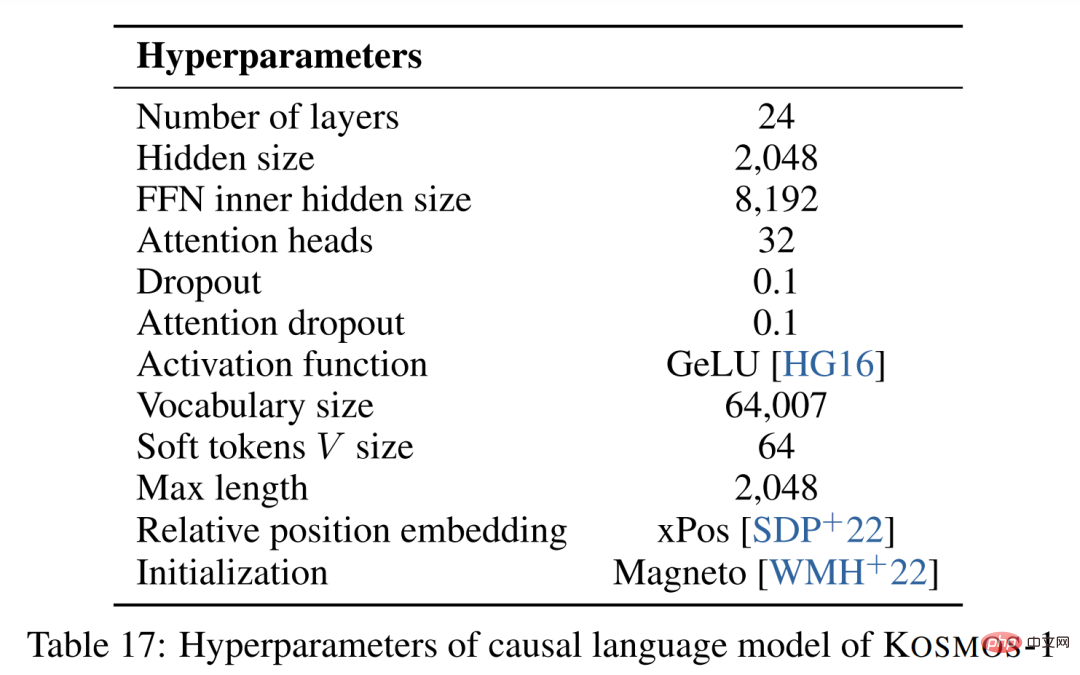

Next, the training setup. The MLLM component has 24 layers, a hidden dimension of 2,048, an FFN intermediate size of 8,192, and 32 attention heads, for about 1.3B parameters. To help the model converge, image representations are obtained from a pre-trained CLIP ViT-L/14 model with 1,024 feature dimensions. Images are preprocessed to 224 × 224 resolution during training. Additionally, all CLIP model parameters except those of the last layer are frozen during training. The total number of parameters for KOSMOS-1 is approximately 1.6B.
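As a rough sanity check on the 1.3B figure, the decoder's weight matrices can be counted from the settings above. This is a back-of-the-envelope sketch, assuming a standard Transformer layer (four attention projections plus a two-matrix feed-forward block) and ignoring biases, layer norms, and token embeddings; `decoder_weight_count` is a hypothetical helper, not something from the paper.

```python
def decoder_weight_count(layers: int, hidden: int, ffn: int) -> int:
    """Count attention and feed-forward weights in a standard Transformer stack."""
    attention = 4 * hidden * hidden  # query, key, value, and output projections
    feed_forward = 2 * hidden * ffn  # up-projection and down-projection
    return layers * (attention + feed_forward)


total = decoder_weight_count(layers=24, hidden=2048, ffn=8192)
print(f"{total:,}")  # 1,207,959,552
```

That is about 1.21B weights, so under these assumptions the remainder of the reported 1.3B would come from terms left out of the estimate, such as the token embedding table.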

Experimental results

The study ran an extensive series of experiments to evaluate KOSMOS-1: language tasks (language understanding, language generation, OCR-free text classification); cross-modal transfer (commonsense reasoning); nonverbal reasoning (IQ test); perception-language tasks (image captioning, visual question answering, web page question answering); and vision tasks (zero-shot image classification, zero-shot image classification with descriptions).

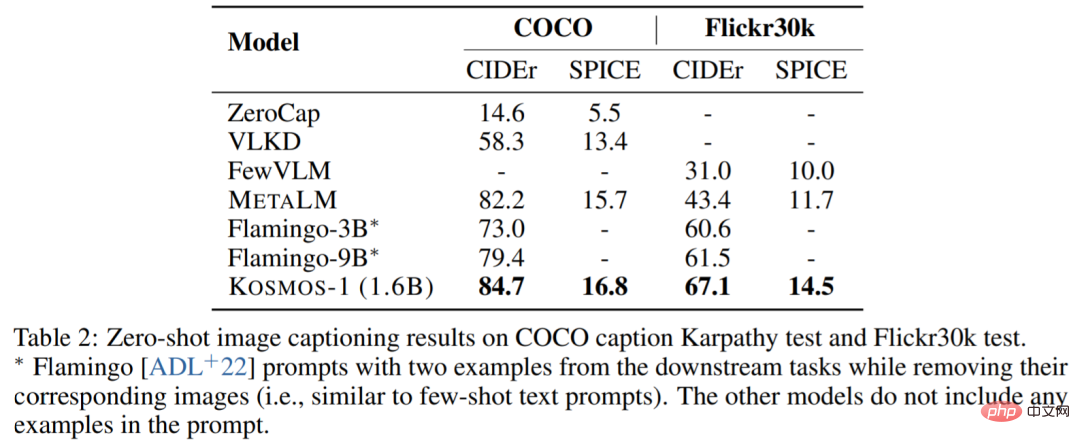

Image captioning. The following table shows the zero-shot performance of different models on COCO and Flickr30k. KOSMOS-1 achieves strong results compared with the other models, even though it has far fewer parameters than Flamingo.

The following table compares few-shot performance:

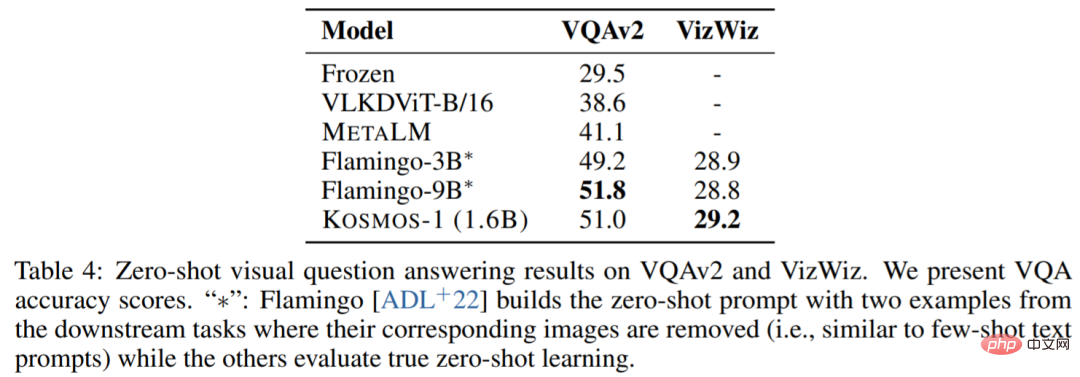

Visual question answering. KOSMOS-1 achieves higher accuracy and robustness than the Flamingo-3B and Flamingo-9B models:

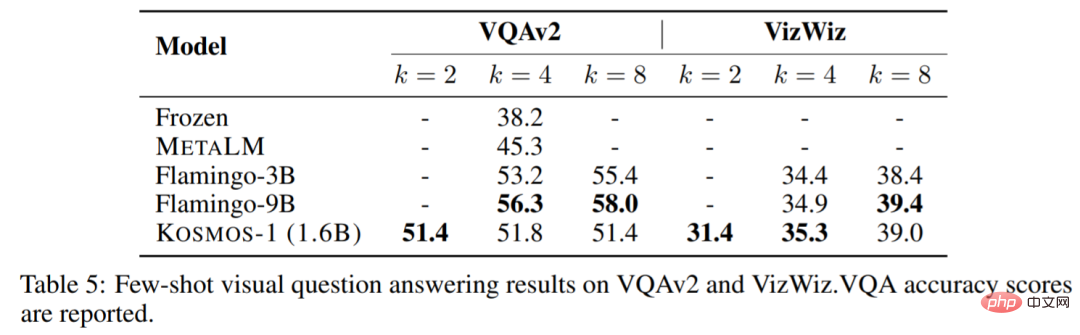

The following table compares few-shot performance:

IQ test. Raven's Progressive Matrices is one of the most common tests used to assess nonverbal reasoning. Figure 4 shows an example.

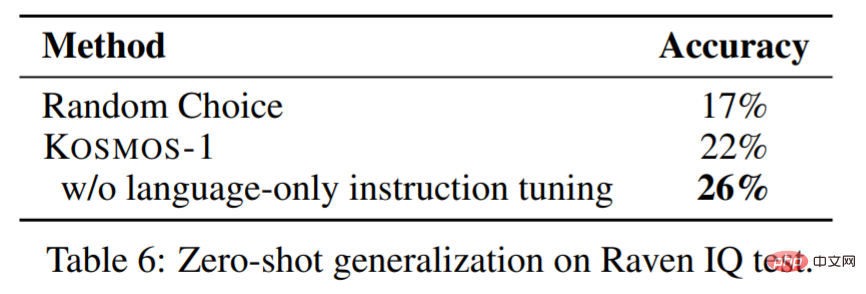

Table 6 shows the evaluation results on the IQ test dataset. KOSMOS-1 is able to perceive abstract conceptual patterns in a nonverbal context and then infer the next element from multiple candidates. To the researchers' knowledge, this is the first time a model has performed such a zero-shot Raven IQ test.

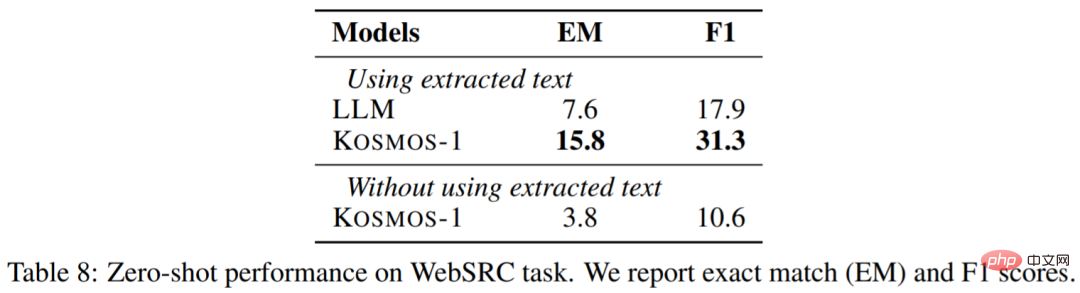

Web Q&A. Web Q&A aims to find answers to questions from web pages. It requires the model to understand both the semantics and the structure of the text. The results are as follows:

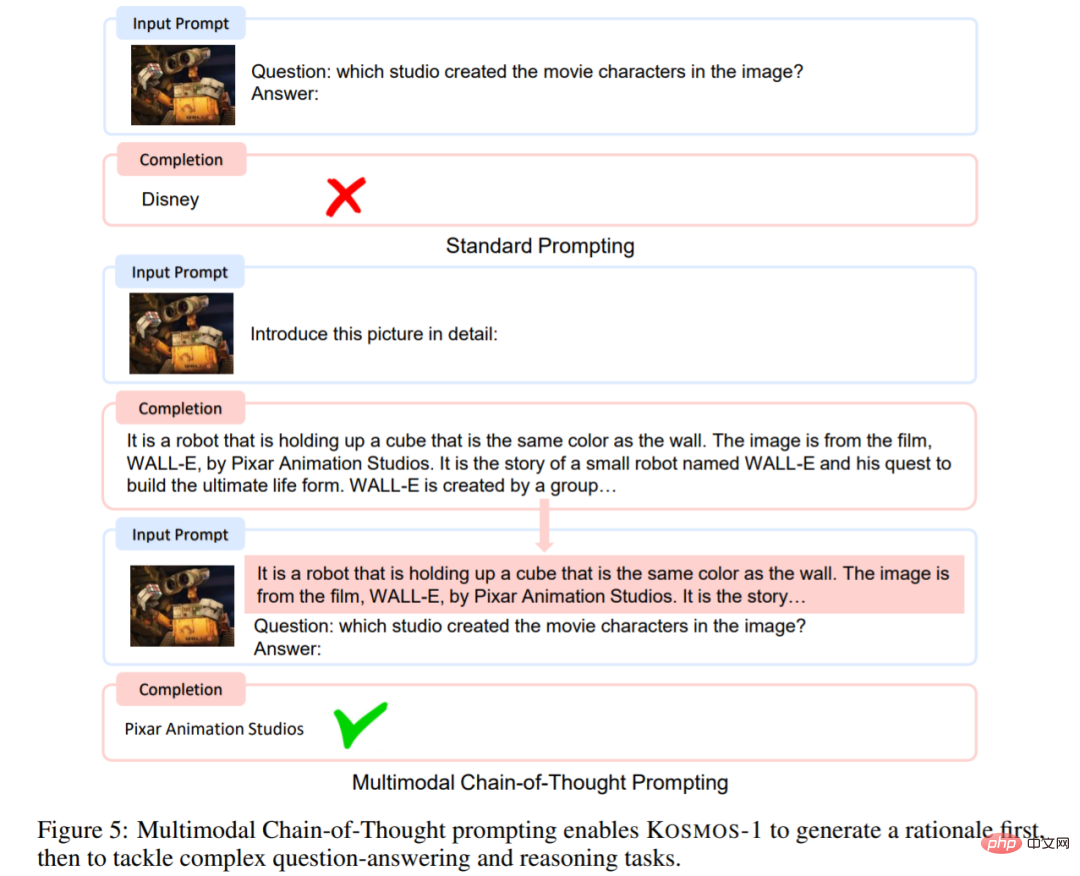

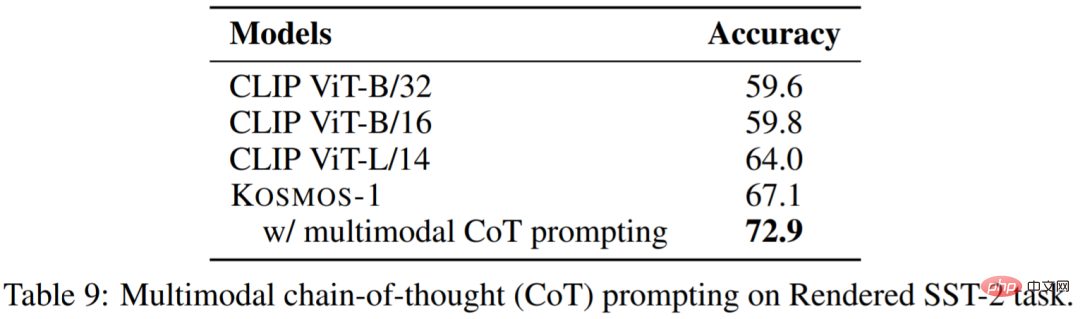

Multimodal chain-of-thought prompting. Inspired by chain-of-thought prompting, the paper ran an experiment along these lines. As shown in Figure 5, it decomposes the perception-language task into two steps. In the first stage, given an image, a prompt guides the model to generate a rationale. The model is then fed the rationale together with a task-aware prompt to produce the final result.
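One way to picture the two-step procedure is the sketch below. It reflects a reading of the paper's description (a rationale first, then the final answer) and is not the authors' implementation: `multimodal_cot`, the prompt strings, and the `generate` callable are all hypothetical, and `toy_generate` is a scripted stand-in for a real multimodal model call.

```python
from typing import Callable, List

Generate = Callable[[str, str], str]  # (image, prompt) -> generated text


def multimodal_cot(
    image: str,
    question: str,
    generate: Generate,
    rationale_prompt: str = "Describe this picture in detail:",
) -> str:
    """Two-stage prompting: produce a rationale, then answer conditioned on it."""
    # Stage 1: ask the model for a rationale about the image.
    rationale = generate(image, rationale_prompt)
    # Stage 2: feed the rationale back in along with the task-specific question.
    final_prompt = f"{rationale_prompt} {rationale} {question}"
    return generate(image, final_prompt)


calls: List[str] = []


def toy_generate(image: str, prompt: str) -> str:
    """Scripted stand-in: records each prompt and returns a canned reply."""
    calls.append(prompt)
    return "a rationale" if len(calls) == 1 else "a final answer"


answer = multimodal_cot("photo.png", "What is shown?", toy_generate)
print(answer)
```

Splitting the task this way lets the second call condition on an explicit textual description of the image instead of answering in one step.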
