Training cost optimization practice of Tencent advertising model based on 'Tai Chi'

In recent years, scaling up models with big data has become the standard modeling paradigm in AI. In advertising, large models have more parameters and are trained on more data, and their stronger memorization and generalization open up more room to improve ad performance. However, the resources needed to train them have grown exponentially, and the resulting storage and compute pressure is a major challenge for the machine learning platform.

Tencent's Taiji Machine Learning Platform keeps exploring ways to cut costs and improve efficiency. By using co-located (mixed-deployment) resources for offline advertising training, it greatly reduces resource costs: it supplies Tencent Advertising with 500,000 cores of low-cost co-located resources every day, cutting the resource cost of offline model training by 30%. A series of optimizations also brings the stability of co-located resources on par with that of regular resources.

1. Introduction

In recent years, following the sweeping success of large models across NLP leaderboards, scaling up models with big data has become a standard modeling paradigm in AI, and search, advertising, and recommendation are no exception. Models with hundreds of billions of parameters and terabyte-scale sizes have become standard in major prediction scenarios, and large-model capability has become a focus of the arms race among major technology companies.

In advertising, large models have more parameters and are trained on more data. Their stronger memorization and generalization open up more room to improve ad performance. However, the resources needed to train them have grown exponentially, and the storage and compute pressure is a major challenge for the machine learning platform. At the same time, the number of experiments the platform can support directly determines how fast algorithms iterate, so providing more experimental resources at lower cost is a key focus of the platform.

2. Introduction to Taiji Machine Learning Platform

The Taiji Machine Learning Platform aims to let users focus on solving business problems with AI. It is a one-stop platform that handles the engineering problems algorithm engineers face in AI applications, such as feature processing, model training, and model serving. It currently supports key internal businesses including advertising, search, games, Tencent Meeting, and Tencent Cloud.

Taiji Advertising Platform is a high-performance machine learning platform, designed by Taiji for the advertising system, that integrates model training and online inference and can train and serve models with trillions of parameters. It currently supports training and online inference for dozens of Tencent Advertising models across recall, pre-ranking (coarse ranking), and fine ranking. The platform also provides one-stop feature registration, sample backfilling, model training, model evaluation, and online testing, greatly improving developer efficiency.

- Training platform: model training currently supports both CPU and GPU modes. With self-developed high-efficiency operators, mixed-precision training, 3D parallelism, and other techniques, training is an order of magnitude faster than industry open-source systems.

- Inference framework: Taiji's self-developed HCF (Heterogeneous Computing Framework) delivers maximum performance through joint optimization of the hardware, compiler, and software layers.

3. Specific implementation of cost optimization

(1) Introduction to the overall plan

As the Taiji platform has grown, the number and variety of tasks keep increasing, and so do resource requirements. To cut costs and improve efficiency, the platform on the one hand improves its own performance to speed up training, and on the other looks for cheaper resources to meet the growing demand.

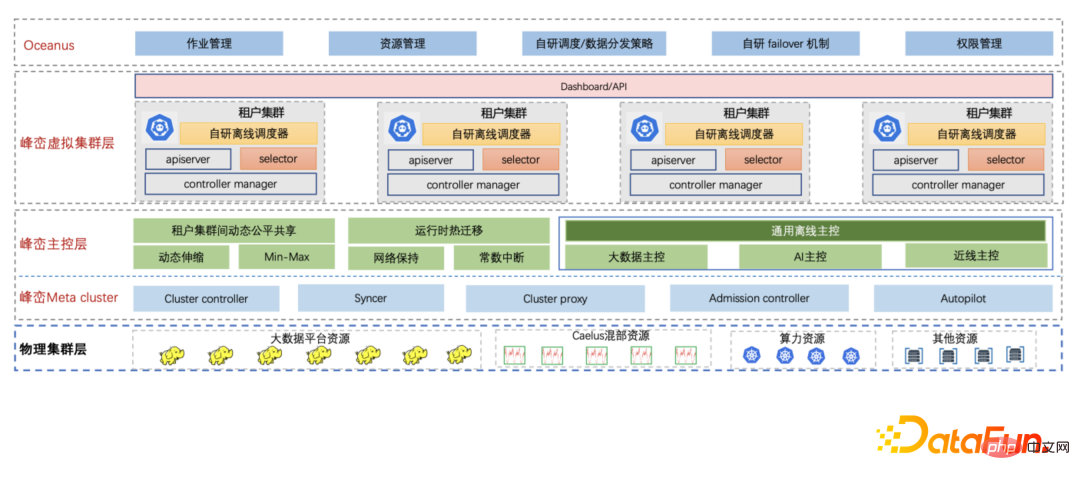

Fengluan, Tencent's internal cloud-native big data platform, uses cloud-native technology to upgrade the company's entire big data architecture. To meet the continuously growing resource demand of big data workloads, Fengluan introduced co-location resources, which both satisfy that demand and greatly reduce resource costs. Fengluan provides solutions for co-location resources in different scenarios, turning unstable co-located resources into stable resources that are transparent to the business. Its co-location capability supports three types of resources:

- Reusing idle online resources. Because online load has peaks and troughs, resource usage is overestimated, and cluster resources are fragmented, cluster utilization is low and a large amount of capacity sits idle. Fengluan taps these temporarily idle resources to run big data tasks and has deployed this in scenarios such as online advertising, storage, social entertainment, and games.

- Elastic lending of offline resources. Some big data workloads are also tidal. During the day, when big data cluster usage is low, Fengluan temporarily lends out part of its resources and takes them back before the cluster's peak arrives. This suits online businesses that temporarily need large amounts of resources during holidays and major promotions; Fengluan has supported major events such as the Spring Festival and 618.

- Reusing computing-power resources. Computing-power resources are mined from idle capacity on host machines in the form of low-quality CVMs, that is, CVM virtual machines started with a lower CPU priority on the host machine, which other virtual machines can preempt in real time. Based on resource information provided by the underlying computing-power layer, Fengluan has made many optimizations in scheduling, overload protection, computing-power migration, and more. Big data tasks using millions of cores currently run stably on computing-power resources.

Fengluan also uses cloud-native virtual cluster technology to hide the fragmentation caused by co-location resources coming from different cities and regions. The Taiji platform connects directly to a Fengluan tenant cluster, which maps onto the various underlying co-location resources. The tenant cluster presents an independent, complete cluster view, so the Taiji platform can connect to it seamlessly.

(2) Resource co-location plan

Online idle resources

Fengluan developed Caelus, a full-scenario online-offline co-location solution. By co-locating online and offline jobs, it fully taps the idle resources of online machines, raising their utilization while lowering the resource cost of offline jobs.

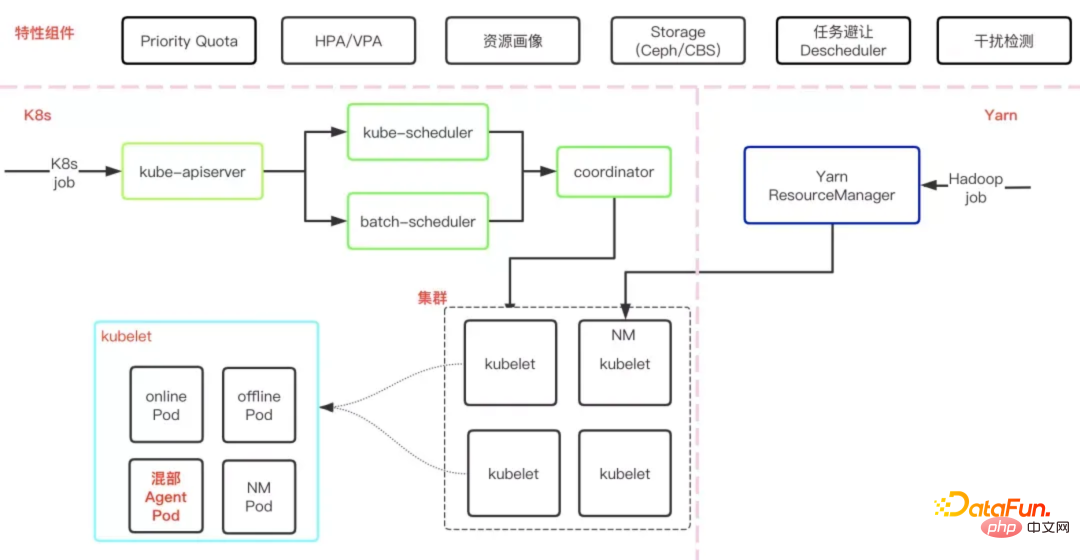

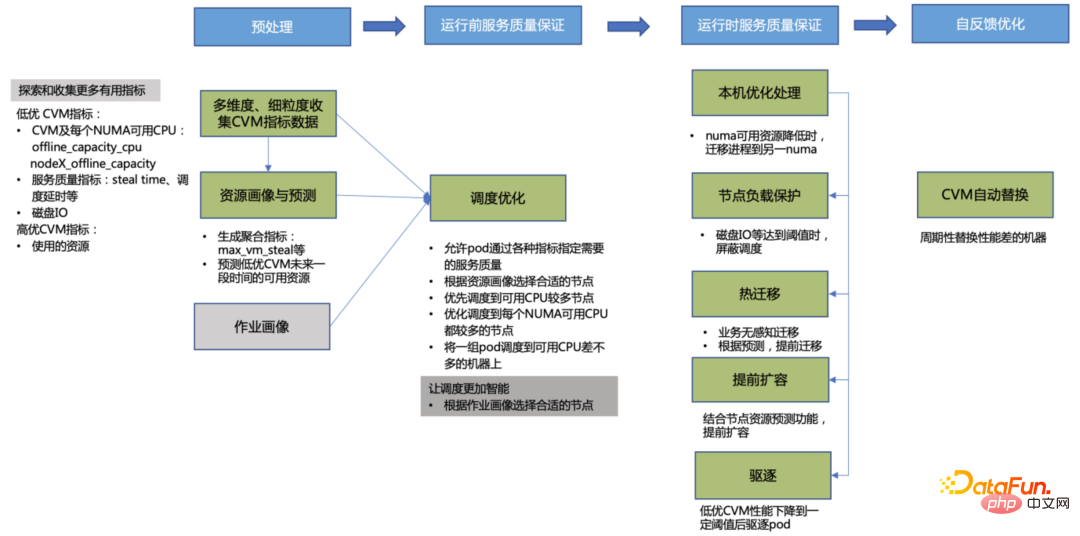

The figure shows the basic architecture of Caelus. Its components and modules work together to safeguard co-location quality in many respects.

First, Caelus safeguards the service quality of online jobs in every respect, which is a key prerequisite for co-location. For example, fast interference detection and handling let it actively sense online service quality and respond promptly, with plug-in extensions for business-specific interference detection; full-dimensional resource isolation and flexible resource management policies keep online services at high priority.

Second, Caelus safeguards the SLO of offline jobs in several ways. It matches jobs to suitable resources using co-location resource profiles and offline job profiles to avoid resource contention, and it optimizes offline job eviction with eviction priorities, graceful exit, and flexible, controllable policies. Unlike big data offline jobs, which are mostly short (minutes or even seconds), most Taiji jobs run for a long time (hours or even days). Long-term resource prediction and job profiles better guide scheduling to find suitable resources for jobs with different run times and resource requirements, so jobs are not evicted after running for hours or days, which would lose job state and waste resources and time. When an offline job must be evicted, runtime live migration is tried first: the job instance moves from one machine to another while its memory state and IP stay unchanged, so the job is barely affected and its SLO improves greatly. Caelus has further capabilities for making better use of co-location resources; for details, see Caelus full-scenario offline co-location solution (https://www.php.cn/link/caaeb10544b465034f389991efc90877).
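To make the plug-in idea concrete, here is a minimal Python sketch of interference detection, assuming a per-machine metrics snapshot. The metric names (`online_p99_latency_ms`, `cpu_util`), the thresholds, and the `InterferenceGuard` class are hypothetical illustrations, not Caelus's actual interfaces; the only rule taken from the text is that online quality wins, so any triggered detector makes offline work back off.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical metric snapshot for one machine: metric name -> current value.
Metrics = Dict[str, float]
# A detector plugin returns True when it judges the online service to be suffering interference.
Detector = Callable[[Metrics], bool]


def latency_detector(threshold_ms: float) -> Detector:
    """Flag interference when online p99 latency exceeds a threshold (assumed metric name)."""
    return lambda m: m.get("online_p99_latency_ms", 0.0) > threshold_ms


def cpu_detector(max_util: float) -> Detector:
    """Flag interference when machine CPU utilization exceeds a ceiling (assumed metric name)."""
    return lambda m: m.get("cpu_util", 0.0) > max_util


@dataclass
class InterferenceGuard:
    detectors: List[Detector] = field(default_factory=list)

    def register(self, detector: Detector) -> None:
        # Businesses plug in their own detection rules here.
        self.detectors.append(detector)

    def decide(self, metrics: Metrics) -> str:
        # Any triggered detector makes the offline side back off; online always wins.
        if any(d(metrics) for d in self.detectors):
            return "throttle_offline"
        return "allow_offline"
```

For example, after registering `latency_detector(50.0)` and `cpu_detector(0.85)`, `decide` returns `"throttle_offline"` for a snapshot with 70 ms p99 latency and `"allow_offline"` when latency is 20 ms and CPU is at 50%.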
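The scheduling idea above, matching long-running jobs to resources whose availability is forecast to last, can be sketched as a best-fit placement rule combined with a live-migration-first eviction choice. This is a hypothetical Python illustration: the `Node` and `Job` fields, the hour-based forecast, and the best-fit choice are assumptions, not Caelus's algorithm.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu: float
    predicted_stable_hours: float  # assumed forecast of how long co-location capacity stays available
    supports_live_migration: bool


@dataclass
class Job:
    name: str
    cpu: float
    expected_hours: float  # assumed to come from the job profile (e.g. historical runtimes)


def place(job: Job, nodes: List[Node]) -> Optional[Node]:
    """Pick a node whose forecast window covers the job's expected runtime.

    Among fitting nodes, take the one with the shortest window (best fit), so
    long-lived windows stay free for long-running jobs.
    """
    fits = [n for n in nodes
            if n.free_cpu >= job.cpu and n.predicted_stable_hours >= job.expected_hours]
    if not fits:
        return None  # keep the job queued rather than risk eviction hours into the run
    return min(fits, key=lambda n: n.predicted_stable_hours)


def on_evict(node: Node) -> str:
    # Prefer runtime live migration (memory state and IP preserved); otherwise exit gracefully.
    return "live_migrate" if node.supports_live_migration else "graceful_exit"
```

With nodes forecast to stay available for 2, 30, and 12 hours, a 10-hour job lands on the 12-hour node, leaving the 30-hour node for longer jobs.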

Tidal Resources

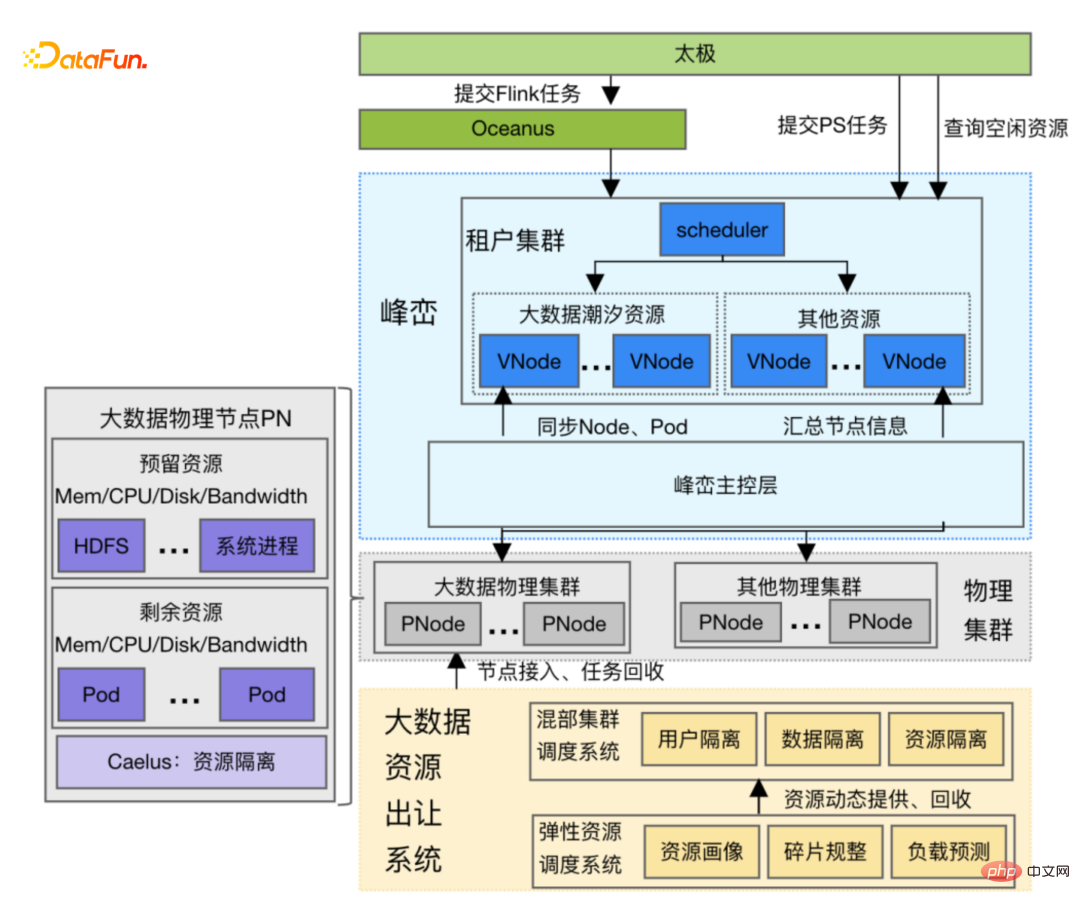

Big data workloads are generally light during the day and heavy at night, so Fengluan lends part of the big data resources that sit idle during the day to the Taiji platform and reclaims them at night. We call these tidal resources. On tidal nodes, big data tasks have almost entirely exited, but the big data storage service HDFS remains on the node, and Taiji jobs must not affect it. Using tidal resources requires a handshake between the Taiji and Fengluan platforms. At a fixed time, Fengluan selects a batch of nodes in advance based on historical data; after the big data tasks on them exit gracefully, it notifies Taiji that new nodes have joined, and Taiji starts submitting more tasks to the tenant cluster. Before the borrowing period ends, Fengluan notifies Taiji that some nodes need to be reclaimed, and Taiji returns them in an orderly manner.
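The lend and reclaim handshake between Fengluan and Taiji can be modelled as a small per-node state machine. The sketch below is hypothetical: the state names and the `TidalPool` methods are illustrative assumptions, not either platform's real API.

```python
from enum import Enum, auto
from typing import Dict, List


class NodeState(Enum):
    BIG_DATA = auto()    # owned by Fengluan big data jobs (HDFS stays on the node throughout)
    LENT = auto()        # big data tasks drained; Taiji may schedule training pods here
    RECLAIMING = auto()  # recycle notice received; Taiji is backing up and returning the node


class TidalPool:
    """Hypothetical bookkeeping for the lend/reclaim handshake."""

    def __init__(self) -> None:
        self.state: Dict[str, NodeState] = {}

    def on_nodes_joined(self, nodes: List[str]) -> None:
        # Fengluan notifies Taiji after the big data tasks have exited gracefully.
        for n in nodes:
            self.state[n] = NodeState.LENT

    def schedulable(self) -> List[str]:
        # Only LENT nodes receive new training tasks.
        return sorted(n for n, s in self.state.items() if s is NodeState.LENT)

    def on_recycle_notice(self, nodes: List[str]) -> None:
        # Before the borrowing window ends, stop placing new work on these nodes.
        for n in nodes:
            if self.state.get(n) is NodeState.LENT:
                self.state[n] = NodeState.RECLAIMING

    def return_node(self, node: str) -> None:
        # Called once the tasks on the node have been backed up and stopped.
        if self.state.get(node) is not NodeState.RECLAIMING:
            raise ValueError(f"{node} is not being reclaimed")
        self.state[node] = NodeState.BIG_DATA
```

Refusing `return_node` for a node that never received a recycle notice keeps Taiji from handing back capacity that Fengluan did not ask for.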

As the figure shows, mining, managing, and using tidal resources involves several systems dividing the work and cooperating:

- Big data resource transfer system: using machine learning over the jobs running on each machine and the cluster's recent operating data, this system finds the nodes best suited to take offline, meeting the specific resource requirement with the least impact on running jobs. It then stops scheduling new jobs onto those nodes and waits for the jobs already on them to finish, minimizing the impact on big data workloads.

- Caelus co-location system: although big data jobs no longer run on the machines vacated by the transfer system, the HDFS service on them still serves data reads and writes. To protect HDFS, the Caelus co-location system treats HDFS as an online service and applies its online-service safeguards (such as monitoring key HDFS metrics to detect impact) to keep HDFS service quality unaffected.

- Using tidal resources through virtual clusters: the transferred machines are managed and scheduled uniformly by Fengluan and offered to the Taiji platform as virtual clusters with a native K8s interface. This hides differences in the underlying resources from the upper platform, so applications use all resources the same way.

- Integration with application-layer breakpoint resume training: tidal resources are reclaimed at night to run big data jobs. To reduce the impact of reclamation, Fengluan is integrated with the application layer's breakpoint resume training, so resources can be switched without interrupting training and the business continues unaffected after the switch.
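The transfer system's node selection is described as machine-learning based; as a stand-in, the hypothetical sketch below uses a simple greedy rule that drains the nodes with the lowest estimated impact per core until the requested capacity is reached. The impact estimate (`running_job_cost`) is an assumed input; the selected nodes would then be closed to new jobs and left to finish their current ones, as the first item above describes.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Candidate:
    name: str
    cpu: int                 # cores the node contributes once drained (assumed > 0)
    running_job_cost: float  # assumed impact of draining, e.g. remaining core-hours of its jobs


def pick_nodes_to_drain(nodes: List[Candidate], cores_needed: int) -> List[str]:
    """Greedy stand-in for the learned selector: lowest impact per core first."""
    chosen: List[str] = []
    total = 0
    for n in sorted(nodes, key=lambda n: n.running_job_cost / n.cpu):
        if total >= cores_needed:
            break
        chosen.append(n.name)
        total += n.cpu
    if total < cores_needed:
        raise ValueError("not enough drainable capacity")
    return chosen
```

An idle node (cost 0) is always taken first, which matches the goal of meeting the resource target while disturbing as few running jobs as possible.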

Computing resources

Computing-power resources present the business with a dedicated CVM, which is relatively friendly to users. The challenge is that the CPU of a low-quality CVM can be suppressed at any moment by online CVMs on the same host machine, which makes these resources very unstable:

- Unstable machines: computing-power machines may go offline because of fragmented-resource consolidation, power shortages in the data center, and similar causes.

- Low priority: to protect the service quality of regular CVMs, jobs on computing-power resources have the lowest priority and unconditionally yield to jobs on high-quality resources, so their performance is extremely unstable.

- High eviction frequency: various conditions (insufficient performance, insufficient disk space, stuck disks, etc.) trigger active pod eviction, raising the probability of pod failure.

To address this instability, Fengluan extends various capabilities through its control layer and optimizes computing-power resources in several ways to improve their stability:

① Resource profiling and prediction: various machine performance metrics are collected and aggregated to predict how much resource a low-quality CVM will have available in the future. The scheduler uses this information to place pods and the eviction component uses it to evict pods, so that pods' resource requirements are met.
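A simple way to turn collected samples into a conservative, forward-looking estimate is to forecast from a low percentile of recent observations. The sketch below substitutes that percentile rule for whatever aggregation Fengluan actually uses; `history` and `risk_quantile` are assumed inputs.

```python
from statistics import quantiles
from typing import Sequence


def predict_available_cores(history: Sequence[float], risk_quantile: int = 10) -> float:
    """Conservative forecast of the cores a low-priority CVM can use in the next window.

    history: recent samples of cores the low-quality CVM actually obtained.
    risk_quantile: percentile (1-99) used as the forecast; lower is more conservative.
    """
    if not 1 <= risk_quantile <= 99:
        raise ValueError("risk_quantile must be between 1 and 99")
    if len(history) < 2:
        # quantiles() needs at least two points on older Python versions.
        return min(history, default=0.0)
    cuts = quantiles(history, n=100)  # the 99 cut points P1..P99
    return cuts[risk_quantile - 1]
```

With samples of 10, 12, ..., 28 cores, the default 10th percentile gives about 10.2 cores, while `risk_quantile=50` returns the median, 19.0.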

② Scheduling optimization: to ensure the service quality of Taiji jobs, the scheduling strategy was further optimized around job needs and resource characteristics, more than doubling job performance.

- Same-city scheduling: PST and training jobs are scheduled into data centers in the same city, minimizing network latency between job instances; same-city network bandwidth is also cheaper, which further reduces cost.

- Single-machine scheduling optimization: combining resource prediction results with metrics such as CPU steal time, the scheduler selects better-performing CPUs for the job to bind to, further improving job performance.

- Hierarchical scheduling: all managed resources are automatically labeled and classified, and jobs with high disaster-recovery requirements, such as the Job Manager, are automatically scheduled onto relatively stable resources.

- Scheduling parameter tuning: based on resource profiles and prediction data, the scheduler prefers nodes with better performance and stability. In addition, to avoid the straggler problem caused by instances progressing through steps at different speeds, instances of the same job are scheduled onto machines with similar performance.
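The four refinements above can be combined in one hypothetical sketch: same-city filtering, a stable tier for Job Manager-like roles, core binding by lowest steal time, and placing a job's instances on nodes of similar performance while preferring fast, stable nodes. The `NodeProfile` fields, the 0.99 stability cut-off, and the 10% spread tolerance are assumptions for illustration only.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class NodeProfile:
    name: str
    city: str
    perf: float                  # predicted relative performance, > 0 (assumed profile output)
    stability: float             # 0..1, e.g. share of recent hours without eviction (assumed)
    cpu_steal: Dict[int, float]  # per-core steal-time ratio from recent samples


def tier(node: NodeProfile) -> str:
    # Hierarchical labeling: only the most stable nodes may host Job Manager-like roles.
    return "stable" if node.stability >= 0.99 else "normal"


def pick_cores(node: NodeProfile, k: int) -> List[int]:
    # Bind the k cores with the lowest observed steal time.
    return sorted(node.cpu_steal, key=node.cpu_steal.get)[:k]


def place_job(nodes: List[NodeProfile], workers: int, city: str,
              critical: bool = False, max_spread: float = 0.1) -> List[str]:
    """Place one job's instances in one city on nodes of similar performance.

    Candidate groups are runs of `workers` nodes in perf order. Groups whose
    relative perf spread is within `max_spread` are ranked by total
    perf * stability; if none qualifies, the tightest group is used.
    """
    if workers < 1:
        raise ValueError("workers must be >= 1")
    pool = [n for n in nodes if n.city == city and (not critical or tier(n) == "stable")]
    if len(pool) < workers:
        return []
    pool.sort(key=lambda n: n.perf)
    windows = [pool[i:i + workers] for i in range(len(pool) - workers + 1)]

    def spread(w: List[NodeProfile]) -> float:
        # Relative gap between the fastest and slowest instance in the group.
        return (w[-1].perf - w[0].perf) / w[-1].perf

    def score(w: List[NodeProfile]) -> float:
        # Profile-based preference for fast, stable nodes.
        return sum(n.perf * n.stability for n in w)

    ok = [w for w in windows if spread(w) <= max_spread]
    best = max(ok, key=score) if ok else min(windows, key=spread)
    return [n.name for n in best]
```

Keeping a group's performance spread small means no instance is left waiting on a much slower peer at each synchronous step, which is the straggler effect the last item targets.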

③ Runtime service quality assurance

- Runtime live migration in the active-eviction phase, making eviction nearly imperceptible to the business: to keep applications from being killed when resource instability triggers pod eviction, runtime live migration was implemented with several migration strategies for different scenarios. According to current online data, with the migration-first strategy the live-migration interruption for large-memory containers exceeds 10 seconds, so a recovery-first strategy with a constant interruption time independent of memory size was also implemented. More than 20,000 pods are now actively migrated successfully every day, and cross-cluster live migration is supported, greatly reducing the impact of eviction.

- Optimized eviction strategy to minimize impact: when a machine must evict pods, the most recently started pods go first so that already-running tasks are not affected; only one node of each task is evicted at a time, so the upstream and downstream instances of a single task are not evicted together and force a task-level restart; and when a pod is evicted, the upper-layer Flink framework is proactively notified so it can quickly recover that single point.

④ Self-feedback optimization: based on resource profiles, poorly performing machines are periodically replaced. Integration with the underlying platform lets CVMs be detached smoothly, giving Fengluan the chance to migrate application instances one by one without affecting the business, which reduces the impact on instances.
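The victim-selection rules above can be sketched as follows. This is a hypothetical Python illustration: `Pod`, the CPU-based amount to free, and the `notify` callback standing in for the Flink integration are assumptions.

```python
from dataclasses import dataclass
from typing import Callable, List, Set


@dataclass
class Pod:
    name: str
    task: str
    started_at: float  # epoch seconds
    cpu: float


def choose_victims(pods: List[Pod], cpu_to_free: float,
                   tasks_being_evicted: Set[str],
                   notify: Callable[[Pod], None]) -> List[Pod]:
    """Pick pods to evict on one overloaded machine.

    - Newest pods go first, so long-running pods keep their progress.
    - A task already losing a pod is skipped, so a task never loses two
      instances at once (tasks_being_evicted is updated in place).
    - Each victim is reported through notify, standing in for the Flink hook.
    """
    victims: List[Pod] = []
    freed = 0.0
    for pod in sorted(pods, key=lambda p: p.started_at, reverse=True):
        if freed >= cpu_to_free:
            break
        if pod.task in tasks_being_evicted:
            continue
        victims.append(pod)
        tasks_being_evicted.add(pod.task)
        freed += pod.cpu
        notify(pod)
    return victims
```

Passing the same `tasks_being_evicted` set across machines is what enforces the one-node-per-task rule cluster-wide in this sketch.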
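A minimal sketch of this self-feedback loop, assuming each machine has a single profile score where lower is worse: pick the worst-scoring fraction for replacement, then move instances off one at a time. The score, the 5% default, and the `migrate` callback are hypothetical.

```python
from typing import Callable, Dict, Iterable, List


def machines_to_replace(scores: Dict[str, float], fraction: float = 0.05) -> List[str]:
    """Pick the worst-scoring share of machines (at least one) for periodic replacement."""
    if not scores:
        return []
    k = max(1, int(len(scores) * fraction))
    return sorted(scores, key=scores.get)[:k]


def drain(instances: Iterable[str], migrate: Callable[[str], bool]) -> List[str]:
    """Migrate instances off a machine one at a time, stopping at the first failure.

    Returns the instances that were moved, so the caller can retry the rest
    later instead of disturbing many instances of a job at once.
    """
    moved: List[str] = []
    for inst in instances:
        if not migrate(inst):
            break
        moved.append(inst)
    return moved
```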

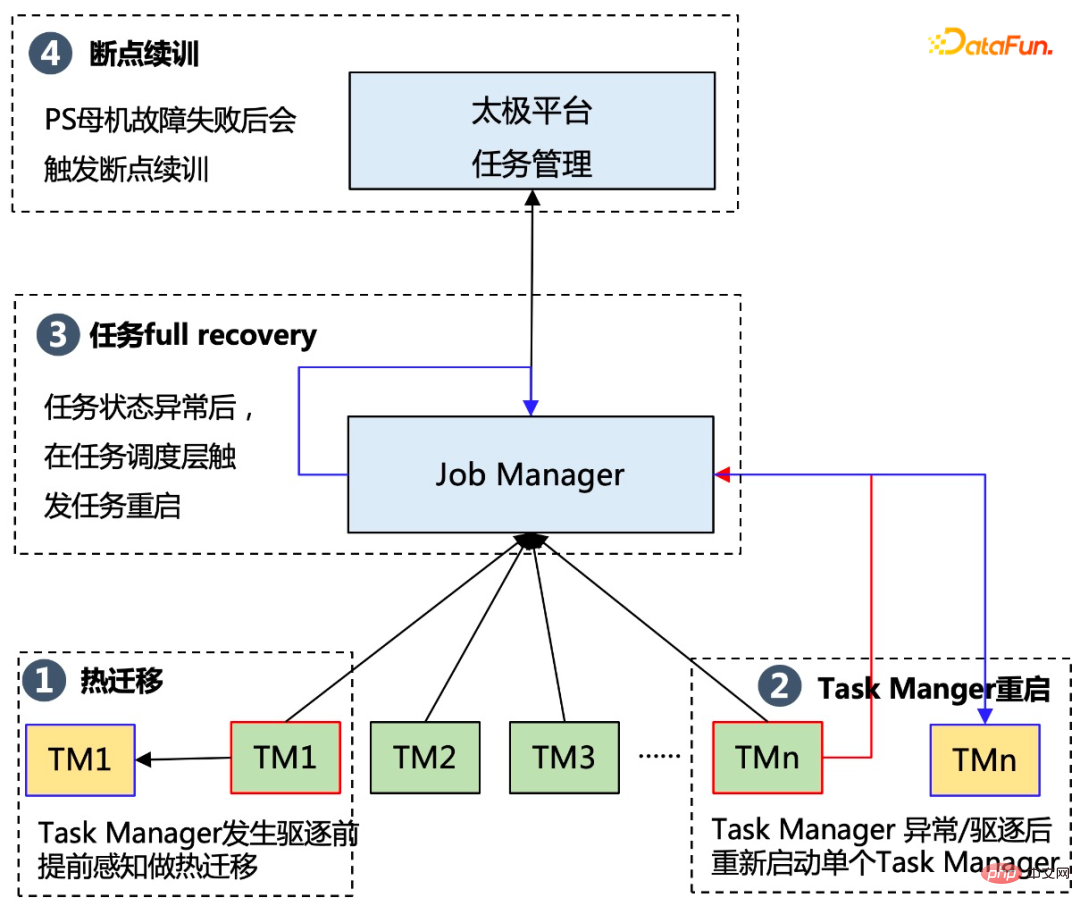

⑤ Improve the disaster recovery capability of the Flink layer and support single point restart and hierarchical scheduling

The single-point restart capability of the TM (Task Manager) keeps a single Task failure from failing the entire DAG, which better suits the preemptible nature of computing-power resources. Hierarchical scheduling avoids the long job waits caused by gang scheduling and the waste of over-requesting TM pods.

(3) Application layer optimization solution

Business fault tolerance

A key premise of using cheap resources for offline training is that the training tasks must not affect the normal operation of the resources' original tasks, so co-location resources pose the following key challenges:

- Co-location resources are mostly temporary and frequently go offline;

- Co-location resources unconditionally yield to high-quality resources, making machine performance extremely unstable;

- The automatic eviction mechanism of co-location resources also greatly increases the failure probability of nodes and pods.

To ensure that tasks run stably on co-location resources, the platform uses a three-level fault tolerance strategy, as follows:

- Live migration: the platform senses in advance when a Task Manager is about to be evicted and migrates it to another pod; memory compression, streaming concurrency, cross-cluster live migration, and similar capabilities continuously raise the live-migration success rate.

- Task Manager restart: when a Task Manager fails because of an exception or eviction, the whole task does not fail and exit. Instead, the Task Manager's state is saved first and the Task Manager is restarted, reducing the probability that the entire task fails.

- Full task recovery: when the task becomes unrecoverable because of abnormal Flink state, a Job Manager restart is triggered. To keep the Job Manager stable, the platform deploys it on separate, highly stable resources so task state stays normal.

- Breakpoint resume training: if all the previous strategies fail, the platform restarts the task from a historical checkpoint (ckpt).

With this business-layer fault tolerance, the stability of tasks on co-location resources rose from under 90% initially to 99.5%, essentially the same as tasks on ordinary dedicated resources.
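The escalation order above, from cheapest to most disruptive, can be sketched as a ladder of recovery attempts, plus the checkpoint choice used by the last level. The level names and the `(step, complete)` checkpoint record are hypothetical; real attempts would call into the platform's migration, restart, and resume machinery.

```python
from typing import Callable, Sequence, Tuple

# A recovery level is (name, attempt); attempt() returns True if it recovered the task.
Level = Tuple[str, Callable[[], bool]]


def recover(levels: Sequence[Level]) -> str:
    """Run recovery levels from cheapest to most expensive; return the first that works."""
    for name, attempt in levels:
        if attempt():
            return name
    raise RuntimeError("all recovery levels failed")


def latest_checkpoint(ckpts: Sequence[Tuple[int, bool]]) -> int:
    """Return the newest training step whose checkpoint was written completely."""
    complete = [step for step, ok in ckpts if ok]
    if not complete:
        raise LookupError("no usable checkpoint")
    return max(complete)
```

For example, `recover([("live_migration", lambda: False), ("tm_restart", lambda: True)])` returns `"tm_restart"` without escalating to a Job Manager restart, and resuming skips a half-written checkpoint in favour of the newest complete one.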

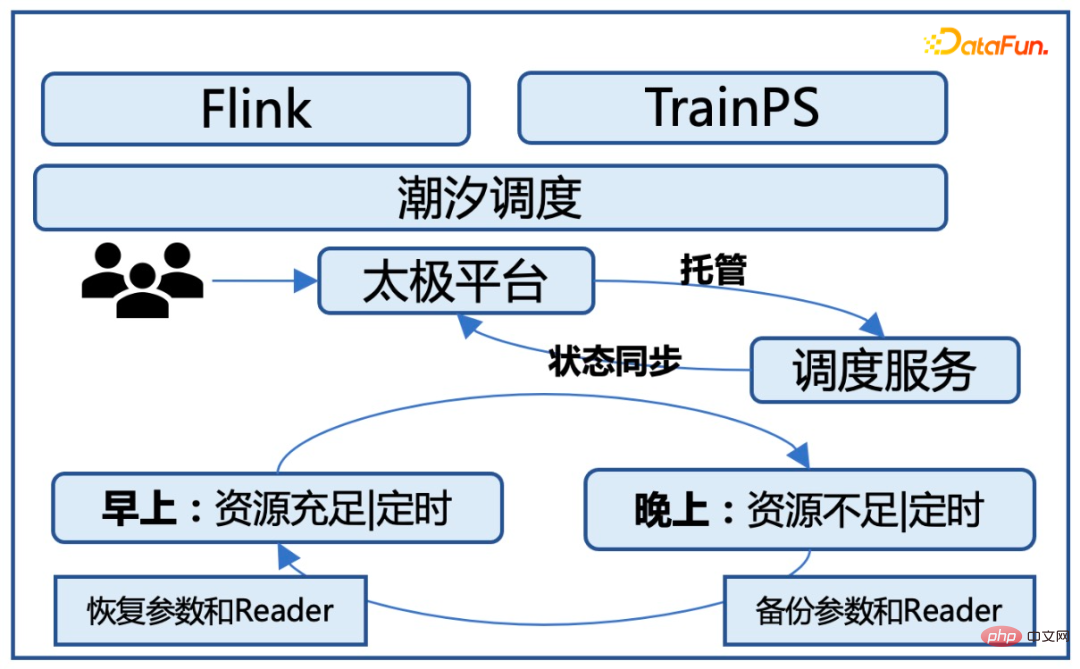

Task Tidal Scheduling

Because tidal resources can be used for offline training only during the day and must be handed back at night, the Taiji platform has to start training tasks automatically during the day based on resource availability, and at night make cold backups of the tasks while stopping the corresponding training. A task management queue governs each task's scheduling priority; new tasks submitted at night automatically enter the queue and wait to be started the next morning.

Core challenges:

- Tidal phenomenon: resources are available to offline tasks during the day and must be reclaimed at night.

- Dynamic resource changes: even during the day, resources are unstable and change at any time. Typically there are fewer resources in the morning; they then increase gradually and peak at night.

Solutions:

- Resource-aware scheduling: as resources gradually increase in the morning, the tidal scheduling service must sense the changes and, following the resource status, start the tasks that are waiting to resume training.

- Automatic model backup: before resources are reclaimed at night, all tasks running on the platform must be backed up in stages. This puts heavy pressure on platform storage and bandwidth: there are hundreds of tasks, and each task's cold backup ranges from several hundred GB to several TB. Backing them all up at once would mean transferring and storing hundreds of TB in a short time, a huge challenge for both storage and network, so a sensible scheduling strategy is needed to store the models gradually.

- Intelligent resource scheduling: compared with traditional training, tidal scheduling adds overhead each night, when every task's model is backed up as resources are reclaimed, and each morning, when tasks are restarted. To reduce this overhead, the scheduler evaluates which tasks can finish within the day and which need several days, and gives more resources to tasks that can finish the same day to ensure they do.

Through these optimizations, tasks run stably on tidal resources and the business layer is essentially unaware of the switching. Task running speed is not significantly affected, and the extra overhead of starting and stopping tasks is kept within 10%.
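The day and night cycle described above can be sketched as a priority queue that only starts work during the day and requeues everything at night. The `TidalScheduler` class, integer priorities, and core-based capacity checks are hypothetical simplifications; the backup and restore steps themselves are left out.

```python
import heapq
from dataclasses import dataclass, field
from typing import List


@dataclass(order=True)
class QueuedTask:
    priority: int                      # lower value is scheduled earlier
    name: str = field(compare=False)
    cores: int = field(compare=False)


class TidalScheduler:
    def __init__(self) -> None:
        self.queue: List[QueuedTask] = []
        self.running: List[QueuedTask] = []
        self.daytime = False

    def submit(self, task: QueuedTask) -> None:
        # Tasks submitted at night simply wait in the queue until morning.
        heapq.heappush(self.queue, task)

    def on_morning(self) -> None:
        self.daytime = True

    def on_capacity(self, free_cores: int) -> List[str]:
        """Daytime tick: start queued tasks in strict priority order while they fit."""
        started: List[str] = []
        while self.daytime and self.queue and self.queue[0].cores <= free_cores:
            task = heapq.heappop(self.queue)
            free_cores -= task.cores
            self.running.append(task)
            started.append(task.name)
        return started

    def on_night(self) -> List[str]:
        """Stop every running task (after its cold backup) and requeue it for tomorrow."""
        self.daytime = False
        stopped = [t.name for t in self.running]
        for task in self.running:
            heapq.heappush(self.queue, task)
        self.running.clear()
        return stopped
```

The loop stops at the first task that does not fit rather than skipping ahead, so a large high-priority task is not starved by smaller ones as capacity grows through the morning; a real scheduler might trade this off differently.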
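The last two points can be sketched in Python: stagger cold backups so they take turns using the bandwidth instead of all starting at once, and let tasks that can finish today go first. Bandwidth, backup sizes, and the core-hour model are assumptions for illustration. Starting the largest backups first is a design choice: a task stops training when its backup begins, and each backup's duration is lost by every task already stopped while it runs, so descending size order minimizes the total training time lost.

```python
from typing import Dict, List, Tuple


def backup_plan(sizes_gb: Dict[str, float], bandwidth_gbps: float,
                deadline_s: float) -> List[Tuple[str, float]]:
    """Stagger cold backups back to back so that all of them end by the deadline.

    Each backup uses the full aggregate bandwidth (GB/s). Returns (task, start_time_s).
    """
    order = sorted(sizes_gb, key=sizes_gb.get, reverse=True)  # largest first
    t = deadline_s - sum(sizes_gb.values()) / bandwidth_gbps
    plan: List[Tuple[str, float]] = []
    for task in order:
        plan.append((task, t))
        t += sizes_gb[task] / bandwidth_gbps
    return plan


def finishes_today(remaining_core_hours: float, max_cores: int, hours_left: float) -> bool:
    """True if the task can complete before reclaim when given its maximum allocation."""
    return remaining_core_hours <= max_cores * hours_left


def prioritize(tasks: Dict[str, Tuple[float, int]], hours_left: float) -> List[str]:
    """Order tasks so those able to finish today get resources first.

    tasks maps name -> (remaining core-hours, max cores it can use); the sort is stable.
    """
    return sorted(tasks, key=lambda n: not finishes_today(*tasks[n], hours_left))
```

With backups of 1000, 300, and 200 GB at 10 GB/s and a deadline at t = 3600 s, the plan starts them at 3450 s, 3550 s, and 3580 s, finishing exactly at the deadline.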

4. Online effects and future prospects

Taiji's co-location optimization solution is in production in Tencent advertising scenarios. Every day it provides 300,000 cores of all-weather co-location resources and 200,000 cores of tidal resources for offline model research and training in Tencent Advertising, supporting model training across recall, pre-ranking, and fine-ranking scenarios. In terms of resource cost, for tasks with the same computational load, co-location resources cost 70% as much as ordinary resources. After optimization, system stability and task success rate are essentially the same as on physical clusters.

Going forward, we will keep expanding our use of co-location resources, especially computing-power resources. In addition, as the company's online business moves to GPUs, we will try to use online GPU resources for offline training alongside traditional CPU resources.
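The daily core count and the cost saving quoted at the start of the article follow from these figures, assuming the 30% reduction refers to the per-task cost ratio given here. Writing $C$ for the resource cost of the same computational load:

$$
300{,}000 + 200{,}000 = 500{,}000 \ \text{cores per day}, \qquad
1 - \frac{C_{\text{co-located}}}{C_{\text{ordinary}}} = 1 - 0.70 = 0.30
$$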

That’s it for today’s sharing, thank you all.
