
'The Midjourney of video'! Closed-beta clips from AI video newcomer Gen-2 leak, and netizens say they're too realistic


Gen-2, the AI video generation tool that claims it can shoot a blockbuster from a single sentence, is starting to show what it can really do.

A Twitter blogger was among the first to get access to the closed beta.

This is the result he generated from the prompt "a well-shaped or symmetrical man being interviewed in a bar":

A man in a dark shirt looks straight at the other person while talking. His eyes and expression convey seriousness and candor, and the person opposite him nods in agreement from time to time.

The video is coherent throughout and the picture is sharp. At first glance, it looks like a real interview!

Another version generated from the same prompt is also impressive:

This time the camera is closer, the background is more realistic, and the characters are still expressive.

After seeing these clips, some netizens exclaimed:

It's incredible that text prompts alone can produce results like this!

Some people said bluntly:

The Midjourney of video has arrived.

The blogger's hands-on test of Gen-2

The blogger is Nick St. Pierre, who regularly shares his AI-made work on Twitter.

Besides the realistic clips shown above, he also released a set of science-fiction clips made with Gen-2.

For example, "Astronauts Travel Through Space":

"An armed soldier runs down the corridor of a spaceship, a dark shadow destroys the wall behind him":

"A family of microchip-eating robots in a human zoo":

"An army of humanoid robots colonize the frozen plains":

(It has the momentum of the White Walker army marching on the Wall in Game of Thrones...)

"The last man on earth watched the invading spaceship land over Tokyo":

……

Each of the clips above was generated from a single text prompt, without any reference images or videos.

Although these sci-fi clips fall slightly short of the bar interview, what's remarkable is how closely they capture the "chaotic" flavor of early AI image generation models. You can almost see the shadow of Stable Diffusion and similar models from back then.

As Nick St. Pierre put it:

Gen-2 is still in its infancy, and it will definitely be better in the future.

We also found clips online from other people with closed-beta access:

For a newcomer to AIGC (AI-generated content), the Gen series is iterating fast and improving quickly:

Gen-1 was released only in February, and it could only edit existing videos.

Now Gen-2 can generate videos directly from text and image prompts.

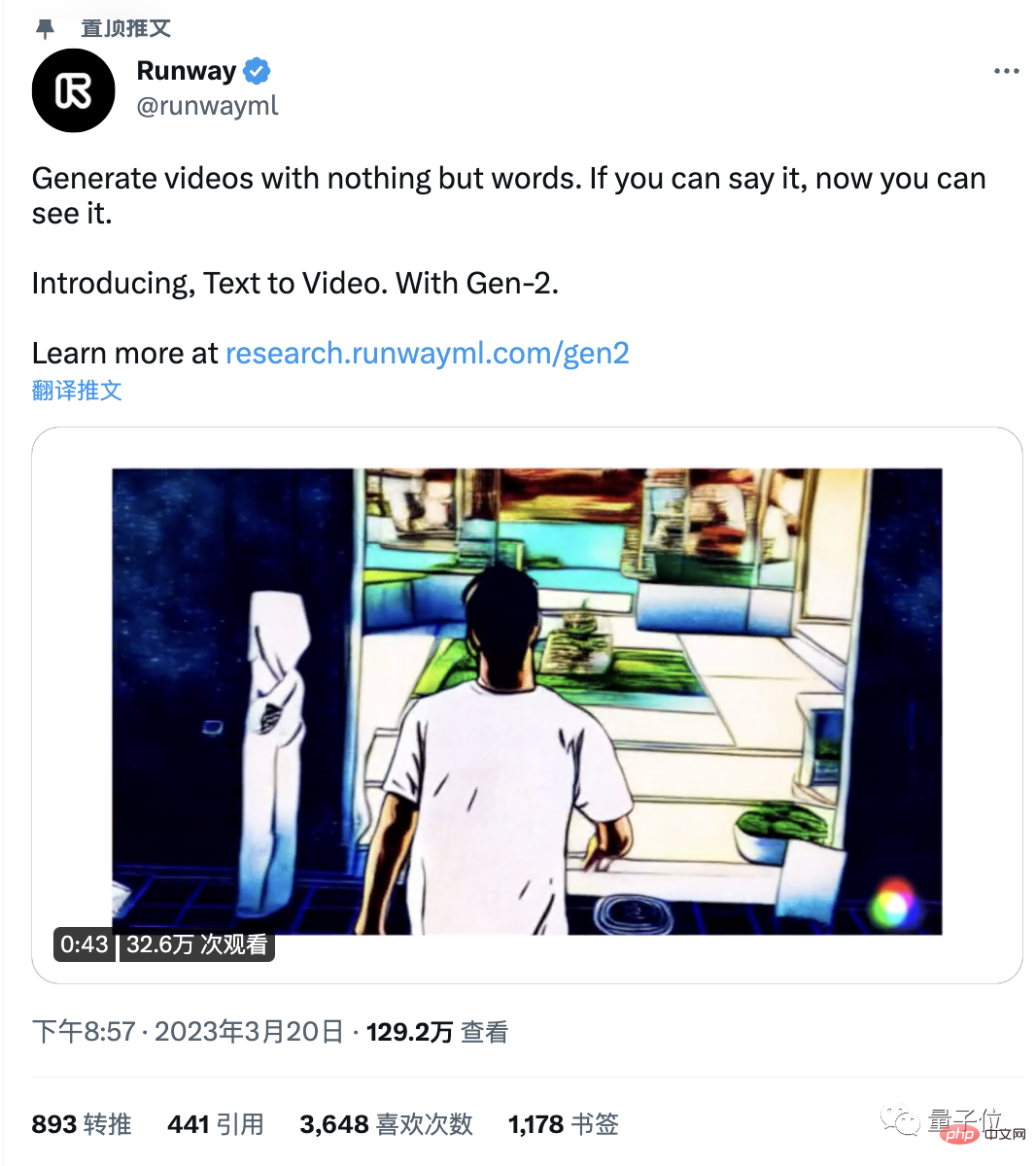

Runway officially calls it "the next step in generative AI," and its slogan is just as bold:

Say it, see it. (If you can describe it, you can see it.)

Gen-2 introduces eight major features at once:

Text to video, text plus reference image to video, still image to video, video style transfer, storyboard, mask (for example, turning a walking white dog into a Dalmatian), rendering, and personalization (for example, turning a guy shaking his head into a turtle in seconds).

Like AI image generation before it, Gen-2 hints at major changes ahead for film and television, games, and marketing.

The company behind it, Runway, is also worth mentioning.

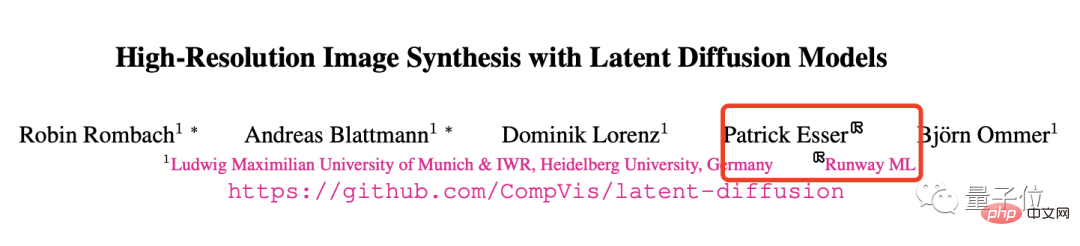

Runway was founded in 2018. It provided technical support for the visual effects of "Everything Everywhere All at Once" and also took part in developing Stable Diffusion (a company with real potential).

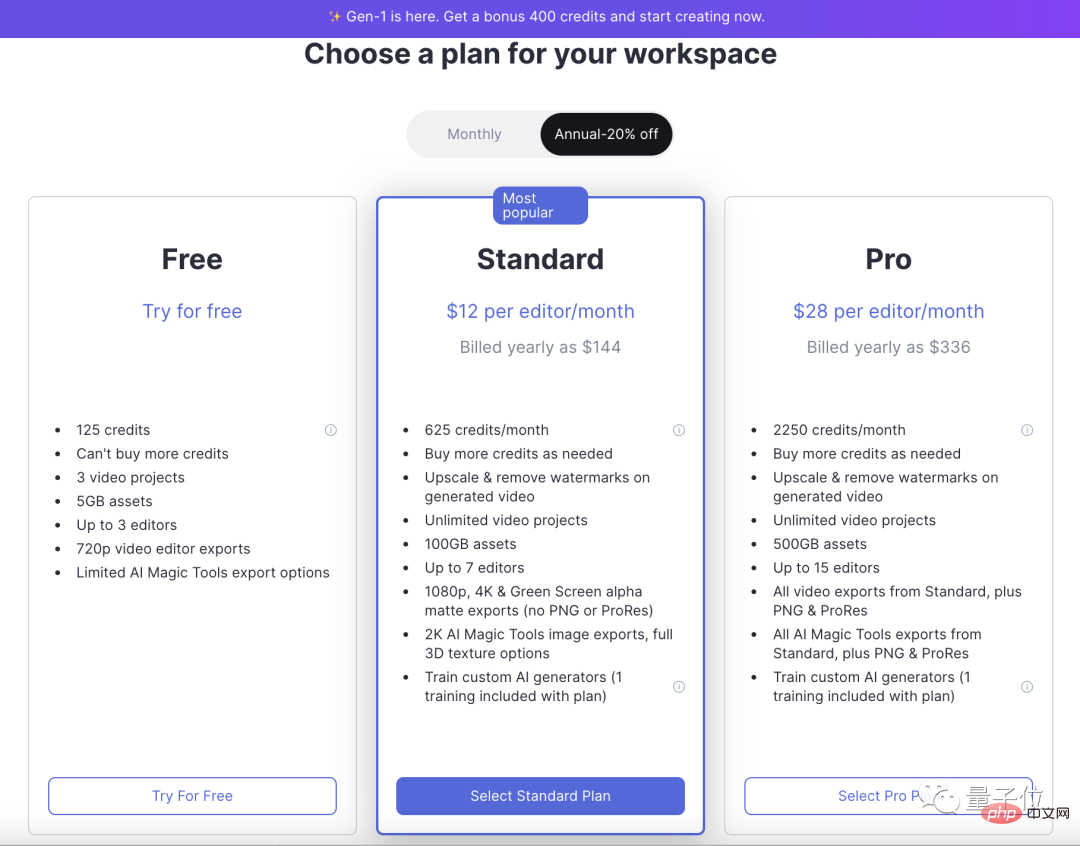

Tip: Gen-1 is already available to try (after the 125 free uses run out, you need a monthly subscription), but Gen-2 has not yet been officially released to the public.

Beyond the Gen series, Microsoft Research Asia has also recently released NUWA-XL, an AI that can generate extra-long videos from text.

From just 16 simple descriptions, it can produce an 11-minute animation:

A bit earlier, on the same day Gen-2 was released, Alibaba DAMO Academy open-sourced a 1.7-billion-parameter text-to-video model:

Here is what it produces:

……

It's safe to predict that, after image generation, the video field is about to get lively too.

Ahem, so will this be the next wave of mass AI frenzy?

