The method combines a conditional GAN (cGAN), atrous convolution (AC), and channel attention with channel weighting (CAW).

This paper proposes a method for segmenting and classifying breast tumors in ultrasound images (cGAN AC CAW) based on deep adversarial learning. The paper was published in 2019, when using a GAN for segmentation was still a very novel idea. It integrates most of the techniques available at the time and achieves very good results, so it is well worth reading. In addition, the paper proposes a loss function that combines the typical adversarial loss with an l1-norm loss and an SSIM loss.

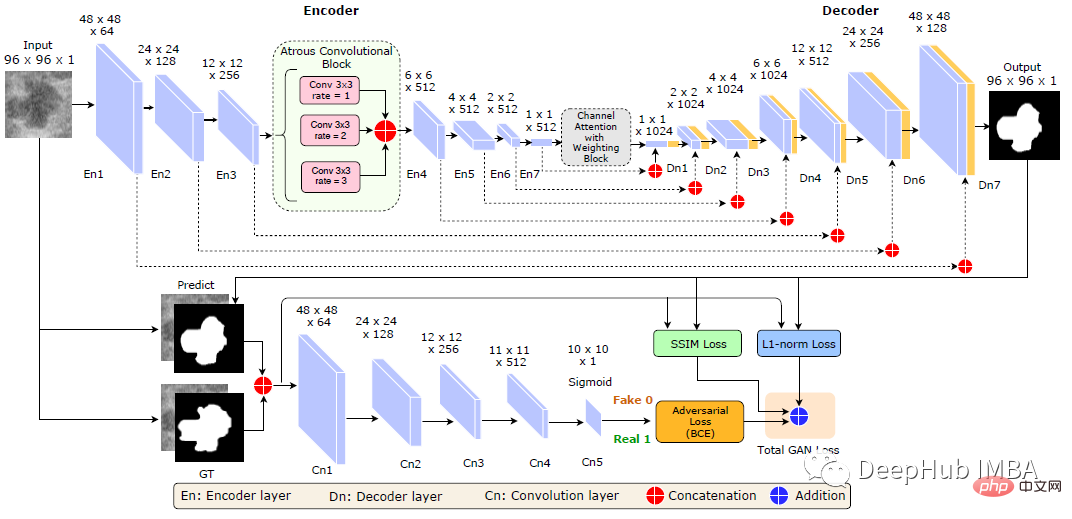

The generator network contains an encoder, which consists of seven convolutional layers (En1 to En7), and a decoder, which consists of seven deconvolutional layers (Dn1 to Dn7).

An atrous convolution block is inserted between En3 and En4. It uses dilation rates of 1, 6 and 9, a kernel size of 3×3, and a stride of 2.
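To make the dilation rates concrete, here is a minimal sketch that computes how far a 3×3 kernel reaches at each rate and applies a one-dimensional dilated convolution. The helper names `effective_kernel_size` and `dilated_conv1d` are illustrative and do not come from the paper; the span formula k + (k − 1)(d − 1) is the standard one for dilated kernels.

```python
def effective_kernel_size(kernel_size: int, dilation: int) -> int:
    """Span covered by a dilated kernel along one axis."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)


def dilated_conv1d(signal, kernel, dilation=1, stride=1):
    """1-D dilated convolution without padding (cross-correlation form)."""
    span = effective_kernel_size(len(kernel), dilation)
    out = []
    for start in range(0, len(signal) - span + 1, stride):
        # Kernel taps are spaced `dilation` samples apart.
        out.append(sum(w * signal[start + i * dilation] for i, w in enumerate(kernel)))
    return out


for rate in (1, 6, 9):
    print(rate, effective_kernel_size(3, rate))
```

With the same nine weights per kernel, the larger rates cover a much wider context, which is why several rates are typically used side by side.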

A channel attention with channel weighting (CAW) block sits between En7 and Dn1.

The CAW block combines a channel attention module (from DAN) with a channel weighting block (from SENet), which increases the representational power of the generator's highest-level features.
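The channel weighting half of the block can be illustrated with a squeeze-and-excitation style rescaling. This is a minimal pure-Python sketch, not the paper's implementation: the function names, the bias-free layers, and the weight matrices `w_reduce` and `w_expand` are all assumptions, and the DAN-style channel attention module is not shown.

```python
import math


def squeeze(feature_map):
    """Global average pooling: one scalar per channel."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_map]


def dense(vector, weights):
    """Fully connected layer without bias; `weights` is a list of rows."""
    return [sum(w * v for w, v in zip(row, vector)) for row in weights]


def channel_weighting(feature_map, w_reduce, w_expand):
    """Rescale each channel by a learned gate in (0, 1)."""
    pooled = squeeze(feature_map)
    hidden = [max(0.0, h) for h in dense(pooled, w_reduce)]  # ReLU
    gates = [1.0 / (1.0 + math.exp(-g)) for g in dense(hidden, w_expand)]  # sigmoid
    scaled = [[[g * v for v in row] for row in ch] for g, ch in zip(gates, feature_map)]
    return scaled, gates


# Two 2x2 channels with hypothetical weights (2 -> 1 -> 2 units).
features = [[[1.0, 1.0], [1.0, 1.0]], [[3.0, 3.0], [3.0, 3.0]]]
scaled, gates = channel_weighting(features, [[0.5, 0.5]], [[1.0], [-1.0]])
print(gates)
```

Channels whose gate is close to 1 pass through almost unchanged, while channels with a small gate are suppressed.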

The discriminator is a sequence of convolutional layers.

The input to the discriminator is a concatenation of the image and a binary mask marking the tumor region.

The output of the discriminator is a 10×10 matrix with values ranging from 0.0 (completely fake) to 1.0 (real).
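A patch-wise output like this is usually scored by comparing every cell with a constant label. The sketch below averages binary cross-entropy over a 10×10 map; the averaging and the function name `patch_bce` are assumptions for illustration, since this summary does not say how the paper reduces the matrix to a scalar.

```python
import math


def patch_bce(patch_scores, target):
    """Mean binary cross-entropy between a patch score map and a constant label."""
    eps = 1e-7  # keeps log() away from 0
    total, count = 0.0, 0
    for row in patch_scores:
        for p in row:
            p = min(max(p, eps), 1.0 - eps)
            total += -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))
            count += 1
    return total / count


# A hypothetical 10x10 output where every patch looks fairly real.
scores = [[0.9] * 10 for _ in range(10)]
print(patch_bce(scores, 1.0))  # small loss: the map agrees with the "real" label
print(patch_bce(scores, 0.0))  # large loss: the map contradicts the "fake" label
```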

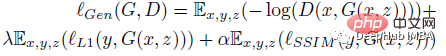

The loss function of generator G consists of three terms: an adversarial loss (binary cross-entropy), an l1-norm term to promote the learning process, and an SSIM loss to improve the shape of the segmentation mask boundaries.
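A loss with these three terms could be written as follows. This is a reconstruction from the description, not copied from the paper: x is taken to be the ultrasound image, y the ground-truth mask, and λ and α are assumed weighting factors whose values are not given here.

$$
\ell_{G}(G, D) = \mathbb{E}_{x,y,z}\big[-\log D(x, G(x,z))\big] + \lambda\, \mathbb{E}_{x,y,z}\big[\ell_{L1}(y, G(x,z))\big] + \alpha\, \mathbb{E}_{x,y,z}\big[\ell_{SSIM}(y, G(x,z))\big]
$$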

In the generator loss, z is a random variable. The discriminator D is trained with its own loss function.
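For a conditional GAN trained with binary cross-entropy, the discriminator loss usually takes the form below. It is given as the standard formulation under that assumption, with x the input image, y the ground-truth mask and G(x, z) the generated mask, rather than as the paper's exact equation.

$$
\ell_{D}(G, D) = \mathbb{E}_{x,y}\big[-\log D(x, y)\big] + \mathbb{E}_{x,y,z}\big[-\log\big(1 - D(x, G(x,z))\big)\big]
$$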

Each image is fed into the trained generator network to obtain the tumor boundary, and 13 statistical features are then computed from this boundary: fractal dimension, lacunarity, convex hull, convexity, circularity, area, perimeter, centroid, minor and major axis length, smoothness, Hu moments (6) and central moments (order 3 and below).
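A few of these shape features are easy to compute once the boundary is available as a polygon. The sketch below covers area, perimeter, circularity and centroid using common textbook definitions; the paper's exact definitions are not given in this summary, so treat the formulas and function names as assumptions.

```python
import math


def polygon_area(points):
    """Shoelace formula for a closed polygon given as (x, y) vertices."""
    n = len(points)
    twice_area = sum(
        points[i][0] * points[(i + 1) % n][1] - points[(i + 1) % n][0] * points[i][1]
        for i in range(n)
    )
    return abs(twice_area) / 2.0


def polygon_perimeter(points):
    """Sum of the edge lengths, including the closing edge."""
    n = len(points)
    return sum(math.dist(points[i], points[(i + 1) % n]) for i in range(n))


def circularity(points):
    """4*pi*area / perimeter**2: 1.0 for a circle, smaller for irregular shapes."""
    return 4.0 * math.pi * polygon_area(points) / polygon_perimeter(points) ** 2


def centroid(points):
    """Mean of the boundary vertices, a simple approximation of the region centroid."""
    n = len(points)
    return (sum(x for x, _ in points) / n, sum(y for _, y in points) / n)


# A hypothetical square boundary stands in for a segmented tumor contour.
square = [(0, 0), (2, 0), (2, 2), (0, 2)]
print(polygon_area(square), polygon_perimeter(square), circularity(square), centroid(square))
```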

An exhaustive feature selection (EFS) algorithm is used to select the optimal feature set. The EFS algorithm shows that fractal dimension, lacunarity, convex hull, and centroid are the four optimal features.
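Exhaustive selection simply scores every candidate subset and keeps the best one. The following sketch shows the idea with a toy scoring function; `toy_score` and its notion of "useful" features are hypothetical stand-ins for whatever evaluation the paper uses, which is not described here (cross-validated classifier accuracy would be a typical choice).

```python
from itertools import combinations


def exhaustive_feature_selection(feature_names, score_fn, min_size=1, max_size=None):
    """Score every feature subset and return the best (subset, score)."""
    max_size = max_size or len(feature_names)
    best_subset, best_score = None, float("-inf")
    for size in range(min_size, max_size + 1):
        for subset in combinations(feature_names, size):
            score = score_fn(subset)
            if score > best_score:
                best_subset, best_score = subset, score
    return best_subset, best_score


def toy_score(subset):
    """Hypothetical score: rewards two 'useful' features, penalises subset size."""
    useful = {"fractal dimension", "lacunarity"}
    return len(useful & set(subset)) - 0.1 * len(subset)


names = ["fractal dimension", "lacunarity", "area", "perimeter"]
best, score = exhaustive_feature_selection(names, toy_score)
print(best, score)
```

With 13 candidate features there are 2^13 − 1 = 8191 non-empty subsets, so the search is still cheap enough to run in full.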

These selected features are fed into a random forest classifier, which is then trained to distinguish between benign and malignant tumors.

The dataset contains images of 150 malignant and 100 benign tumors. For training the model, the dataset was randomly divided into a training set (70%), a validation set (10%) and a test set (20%).
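For 250 images, this split works out to 175 training, 25 validation and 50 test images. A minimal sketch of such a random split is shown below; the function name, the fixed seed and the placeholder labels are assumptions for illustration.

```python
import random


def split_dataset(items, train=0.7, val=0.1, seed=0):
    """Shuffle and split into train/validation/test; the remainder goes to test."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train = round(len(items) * train)
    n_val = round(len(items) * val)
    return items[:n_train], items[n_train:n_train + n_val], items[n_train + n_val:]


# 150 malignant + 100 benign images, identified here by hypothetical labels.
dataset = [("malignant", i) for i in range(150)] + [("benign", i) for i in range(100)]
train_set, val_set, test_set = split_dataset(dataset)
print(len(train_set), len(val_set), len(test_set))
```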

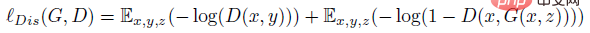

This model (cGAN AC CAW) outperforms other models on all metrics. Its Dice and IoU scores are 93.76% and 88.82% respectively.
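For reference, both metrics measure the overlap between the predicted and ground-truth masks. The sketch below uses the standard definitions on binary masks stored as nested lists; the function name and the convention for two empty masks are assumptions.

```python
def dice_and_iou(pred, target):
    """Dice and IoU for two binary masks given as nested lists of 0/1."""
    tp = fp = fn = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            if p and t:
                tp += 1  # tumor pixel found
            elif p:
                fp += 1  # predicted tumor, actually background
            elif t:
                fn += 1  # missed tumor pixel
    if tp + fp + fn == 0:
        return 1.0, 1.0  # both masks empty: treated as perfect agreement
    dice = 2 * tp / (2 * tp + fp + fn)
    iou = tp / (tp + fp + fn)
    return dice, iou


pred_mask = [[1, 1], [0, 0]]
true_mask = [[1, 0], [1, 0]]
print(dice_and_iou(pred_mask, true_mask))
```

Dice is never lower than IoU for the same pair of masks, which is consistent with the Dice score being the higher of the two reported numbers.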

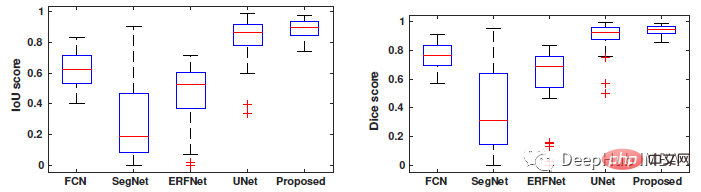

The figure above is a box plot comparing the IoU and Dice scores of the paper's model with those of segmentation models such as FCN, SegNet, ERFNet and U-Net.

For this model, the Dice coefficient ranges from 88% to 94% and the IoU from 80% to 89%, while the other deep segmentation methods (FCN, SegNet, ERFNet and U-Net) show a wider spread.

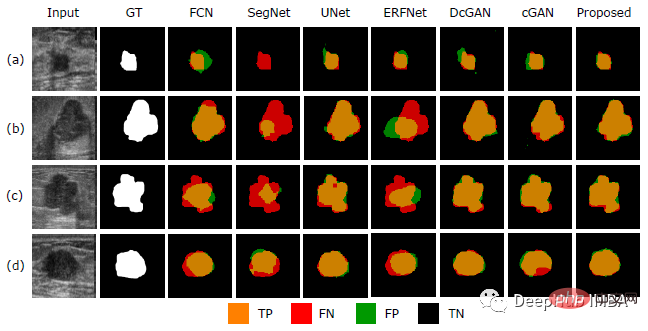

The segmentation results are shown in the figure above. SegNet and ERFNet produced the worst results, with a large number of false negative areas (red) and some false positive areas (green).

While U-Net, DCGAN, and cGAN provide good segmentation, the model proposed in the paper provides more accurate breast tumor boundary segmentation.

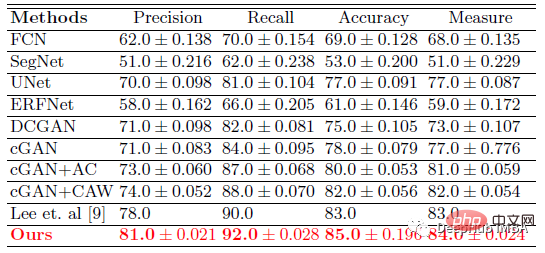

The proposed breast tumor classification method is better than [9], with a total accuracy of 85%.
