Overview of ensemble methods in machine learning

Imagine you are shopping online and find two stores selling the same product with the same rating. However, the first rating comes from just one person and the second from 100 people. Which rating would you trust more? Which product would you end up buying? For most people the answer is simple: the opinions of 100 people are more trustworthy than the opinion of just one. This is called the "wisdom of the crowd", and it is why the ensemble approach works.

Ensemble method

Typically, we train a single learner (a learner is a trained model) on the training data. Ensemble methods instead train multiple learners to solve the same problem and then combine them. These learners are called base learners and can use any underlying algorithm, such as neural networks, support vector machines, or decision trees. If all base learners use the same algorithm, they are called homogeneous base learners; if they use different algorithms, they are called heterogeneous base learners. Compared with a single base learner, an ensemble typically generalizes better, which leads to better results.

Ensembles are often built from weak learners, which is why base learners are sometimes called weak learners. Ensemble models, or strong learners (combinations of these weak learners), have lower bias and/or variance and achieve better performance. This ability to turn weak learners into strong learners has made ensembles popular, because weak learners are much easier to obtain in practice.
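To make the weak-to-strong idea concrete, here is a small calculation under an idealized assumption that rarely holds exactly in practice: n classifiers on a binary task, each correct with probability p > 0.5, making fully independent errors, combined by majority vote. The probability that the majority is right then follows from the binomial distribution. The function name `majority_vote_accuracy` is just an illustrative choice.

```python
from math import comb

def majority_vote_accuracy(n_learners: int, p: float) -> float:
    """P(majority correct) for n independent binary classifiers, each correct
    with probability p. Assumes independence and an odd n (no ties)."""
    if n_learners < 1 or n_learners % 2 == 0:
        raise ValueError("n_learners must be a positive odd number")
    k_min = n_learners // 2 + 1  # fewest correct votes that still win
    return sum(
        comb(n_learners, k) * p**k * (1 - p) ** (n_learners - k)
        for k in range(k_min, n_learners + 1)
    )

for n in (1, 5, 25, 101):
    print(n, round(majority_vote_accuracy(n, 0.6), 3))
```

With p = 0.6, accuracy rises from 0.6 for one learner to about 0.68 for five and keeps climbing as n grows. With p below 0.5 the same vote makes things worse, which is why base learners need to be better than chance.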

In recent years, ensemble methods have repeatedly won online machine learning competitions. Beyond competitions, they are also used in real-world applications, for example in computer vision tasks such as object detection, recognition, and tracking.

Main types of ensemble methods

How are weak learners generated?

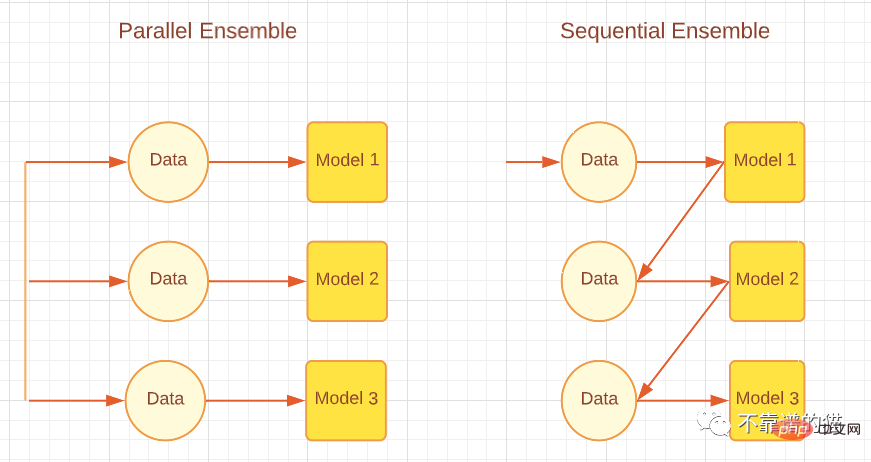

Depending on how the base learners are generated, ensemble methods fall into two major categories: sequential ensemble methods and parallel ensemble methods. As the name suggests, in sequential ensemble methods the base learners are generated one after another and then combined to make predictions; boosting algorithms such as AdaBoost are an example. In parallel ensemble methods, the base learners are generated in parallel and then combined for prediction; examples include bagging algorithms such as random forest, as well as stacking. The following figure shows a simple architecture explaining the parallel and sequential approaches.

Parallel and sequential ensemble methods
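The structural difference between the two families can be sketched in pure Python. Assumptions: 1-D regression, decision stumps as the weak learners, and a least-squares residual-fitting booster standing in for boosting (it is not AdaBoost, which reweights training samples instead). All function names are hypothetical.

```python
import random
from statistics import mean

def fit_stump(xs, ys):
    """Weak learner: a 1-D regression stump (one split, a constant per side)."""
    best = None
    for t in sorted(set(xs)):
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        if not right:
            continue  # splitting at the largest x leaves one side empty
        lv, rv = mean(left), mean(right)
        sse = sum((y - lv) ** 2 for y in left) + sum((y - rv) ** 2 for y in right)
        if best is None or sse < best[0]:
            best = (sse, t, lv, rv)
    if best is None:  # all x values identical: fall back to a constant
        c = mean(ys)
        return lambda x: c
    _, t, lv, rv = best
    return lambda x: lv if x <= t else rv

def fit_parallel(xs, ys, n_learners=10, seed=0):
    """Bagging-style: each stump sees its own bootstrap sample and does not
    depend on the others, so the fits could run concurrently."""
    rng = random.Random(seed)
    n = len(xs)
    learners = []
    for _ in range(n_learners):
        idx = [rng.randrange(n) for _ in range(n)]  # sample with replacement
        learners.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return lambda x: mean(f(x) for f in learners)  # combine by averaging

def fit_sequential(xs, ys, n_learners=10, learning_rate=0.5):
    """Boosting-style (least-squares residual fitting): each stump is fit to
    the residuals left by the stumps before it, so the fits must run in order."""
    learners, residuals = [], list(ys)
    for _ in range(n_learners):
        f = fit_stump(xs, residuals)
        learners.append(f)
        residuals = [r - learning_rate * f(x) for x, r in zip(xs, residuals)]
    return lambda x: sum(learning_rate * f(x) for f in learners)

xs = [i / 10 for i in range(40)]
ys = [x * x for x in xs]  # toy target: y = x^2

def train_mse(model):
    return mean((model(x) - y) ** 2 for x, y in zip(xs, ys))

print(train_mse(fit_stump(xs, ys)), train_mse(fit_parallel(xs, ys)), train_mse(fit_sequential(xs, ys)))
```

The parallel loop never reads another learner's output, while each sequential step needs the residuals of the previous ones; that dependency is what forces sequential methods to train in order.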

Sequential methods exploit the dependency between weak learners to improve overall performance by progressively reducing the residuals, so that later learners focus on the mistakes of earlier ones. Roughly speaking (for regression problems), the reduction in ensemble error achieved by boosting comes mainly from reducing the high bias of the weak learners, although a reduction in variance is sometimes also observed. Parallel methods, on the other hand, reduce error by combining independent weak learners, that is, they exploit the independence between weak learners; this reduction in error comes from a reduction in the variance of the model. In summary, boosting mainly reduces error by reducing bias, while bagging mainly reduces error by reducing variance. This matters because the right choice of ensemble method depends on whether the weak learners suffer from high variance or high bias.
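The variance argument for parallel methods can be written out in a few lines. This assumes M base learners whose predictions at a point x are identically distributed with mean μ(x) and variance σ²(x) and, for the variance step, mutually independent; real bagged learners are only partly independent, so this is the best case.

```latex
\bar f(x) = \frac{1}{M}\sum_{m=1}^{M} f_m(x), \qquad
\mathbb{E}[f_m(x)] = \mu(x), \qquad \operatorname{Var}[f_m(x)] = \sigma^2(x)

\mathbb{E}[\bar f(x)] = \frac{1}{M}\sum_{m=1}^{M}\mathbb{E}[f_m(x)] = \mu(x)
\quad\Rightarrow\quad \text{same bias as a single learner}

\operatorname{Var}[\bar f(x)] = \frac{1}{M^2}\sum_{m=1}^{M}\operatorname{Var}[f_m(x)] = \frac{\sigma^2(x)}{M}
\quad \text{(independent } f_m\text{)}
```

Averaging leaves the expected prediction, and therefore the bias, unchanged while dividing the variance by M, which is why parallel methods help most when the base learners have high variance.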

How to combine weak learners?

After generating these so-called base learners, we do not simply select the best one; instead, we combine them for better generalization. How they are combined plays an important role in any ensemble method.

Averaging: When the output is numeric, the most common way to combine base learners is averaging, either a simple average or a weighted average. For regression problems, the simple average is the sum of the predictions of all base models divided by the number of learners. The weighted average gives each base learner a different weight: we multiply each base learner's prediction by its weight and then sum the results.
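A minimal sketch of both averaging rules for a single input, assuming each base learner has already produced a numeric prediction. The function names are illustrative, and normalizing the weights so they sum to 1 is an assumption of this sketch.

```python
from typing import Sequence

def simple_average(predictions: Sequence[float]) -> float:
    """Sum of the base learners' predictions divided by the number of learners."""
    return sum(predictions) / len(predictions)

def weighted_average(predictions: Sequence[float], weights: Sequence[float]) -> float:
    """Multiply each prediction by its learner's weight and sum.
    Weights are normalized to sum to 1 (an assumption of this sketch)."""
    if len(predictions) != len(weights):
        raise ValueError("one weight per learner is required")
    total = sum(weights)
    return sum(p * w for p, w in zip(predictions, weights)) / total

preds = [3.0, 4.0, 8.0]  # hypothetical outputs of three regressors for one input
print(simple_average(preds))  # 5.0
print(weighted_average(preds, [0.5, 0.3, 0.2]))
```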

Voting: For nominal (categorical) outputs, voting is the most common way to combine base learners. Voting comes in several forms, such as majority voting, plurality voting, weighted voting, and soft voting. In majority voting for classification, each learner casts one vote for a class label, and the label that receives more than 50% of the votes is the ensemble's prediction. If no label gets more than 50% of the votes, a reject option is given, meaning the ensemble makes no prediction. In plurality (relative majority) voting, the label with the most votes is the prediction, and it does not need more than 50% of the votes. For example, if three labels receive 40%, 30%, and 30% of the votes, the label with 40% is the ensemble's prediction. Weighted voting, like weighted averaging, assigns each classifier a weight based on its importance and strength. Soft voting is used when learners output class probabilities (values between 0 and 1) rather than labels. It comes in two variants: simple soft voting (a plain average of the probabilities) and weighted soft voting (each learner's probabilities are multiplied by its weight and summed).
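The four voting rules can be sketched as small pure-Python functions. Labels can be any hashable values; `soft_vote` assumes every learner reports probabilities in the same class order and returns the index of the winning class. All names here are hypothetical.

```python
from collections import Counter

def majority_vote(labels):
    """Label with more than 50% of the votes, or None (the reject option)."""
    label, count = Counter(labels).most_common(1)[0]
    return label if count > len(labels) / 2 else None

def plurality_vote(labels):
    """Label with the most votes; no 50% threshold (ties: first label seen)."""
    return Counter(labels).most_common(1)[0][0]

def weighted_vote(labels, weights):
    """Each learner's vote counts with its weight; the largest total wins."""
    totals = {}
    for label, w in zip(labels, weights):
        totals[label] = totals.get(label, 0.0) + w
    return max(totals, key=totals.get)

def soft_vote(probas, weights=None):
    """probas[m][c] = learner m's probability for class c. Without weights this is
    simple soft voting (plain average); with weights it is weighted soft voting."""
    if weights is None:
        weights = [1.0 / len(probas)] * len(probas)
    n_classes = len(probas[0])
    scores = [sum(w * p[c] for w, p in zip(weights, probas)) for c in range(n_classes)]
    return max(range(n_classes), key=lambda c: scores[c])

votes = ["cat"] * 4 + ["dog"] * 3 + ["bird"] * 3  # 40% / 30% / 30%
print(majority_vote(votes))   # None: no label has more than 50%
print(plurality_vote(votes))  # cat
print(weighted_vote(["cat", "dog", "dog"], [0.6, 0.2, 0.3]))  # cat (0.6 vs 0.5)
print(soft_vote([[0.9, 0.1], [0.4, 0.6], [0.4, 0.6]]))  # 0
```

The soft-voting example shows how it can disagree with hard voting: two of the three learners lean toward class 1, but the first learner's confidence in class 0 outweighs them once the probabilities are averaged.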

Learning: Another way to combine learners is through learning, which is what the stacking ensemble method does. Here a separate learner, called a meta-learner, is trained on a new dataset built from the outputs of the base/weak learners (which were themselves trained on the original dataset), and it learns how best to combine them.
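A toy stacking sketch, assuming 1-D regression, two deliberately simple base learners (a constant mean model and a least-squares line), and a meta-learner reduced to a single mixing weight chosen by grid search. In practice the meta-learner is usually a full model such as linear or logistic regression. The detail this sketch does preserve is that the meta-learner is trained on out-of-fold predictions, so it never sees a base learner's output on points that learner was trained on. All names are hypothetical.

```python
from statistics import mean

def fit_mean(xs, ys):
    """Base learner A: always predicts the mean of its training targets."""
    c = mean(ys)
    return lambda x: c

def fit_line(xs, ys):
    """Base learner B: least-squares line y = a*x + b."""
    mx, my = mean(xs), mean(ys)
    a = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    b = my - a * mx
    return lambda x: a * x + b

def out_of_fold(fit, xs, ys, k=5):
    """Predict every point with a model trained on the other k-1 folds."""
    preds = [0.0] * len(xs)
    for fold in range(k):
        train = [i for i in range(len(xs)) if i % k != fold]
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        for i in range(fold, len(xs), k):  # indices in this held-out fold
            preds[i] = model(xs[i])
    return preds

def fit_meta_weight(pred_a, pred_b, ys, steps=100):
    """Meta-learner: weight w in [0, 1] minimizing MSE of (1-w)*A + w*B."""
    def mse(w):
        return mean(((1 - w) * a + w * b - y) ** 2 for a, b, y in zip(pred_a, pred_b, ys))
    return min((i / steps for i in range(steps + 1)), key=mse)

xs = [float(i) for i in range(20)]
ys = [2.0 * x + 1.0 + (0.5 if i % 2 else -0.5) for i, x in enumerate(xs)]  # toy data
w = fit_meta_weight(out_of_fold(fit_mean, xs, ys), out_of_fold(fit_line, xs, ys), ys)
# Refit the base learners on all data, then combine them with the learned weight.
model_a, model_b = fit_mean(xs, ys), fit_line(xs, ys)
stacked = lambda x: (1 - w) * model_a(x) + w * model_b(x)
print(w, stacked(10.0))
```

On this nearly linear toy data the learned weight should come out close to 1, that is, the meta-learner learns to trust the line model far more than the constant one.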

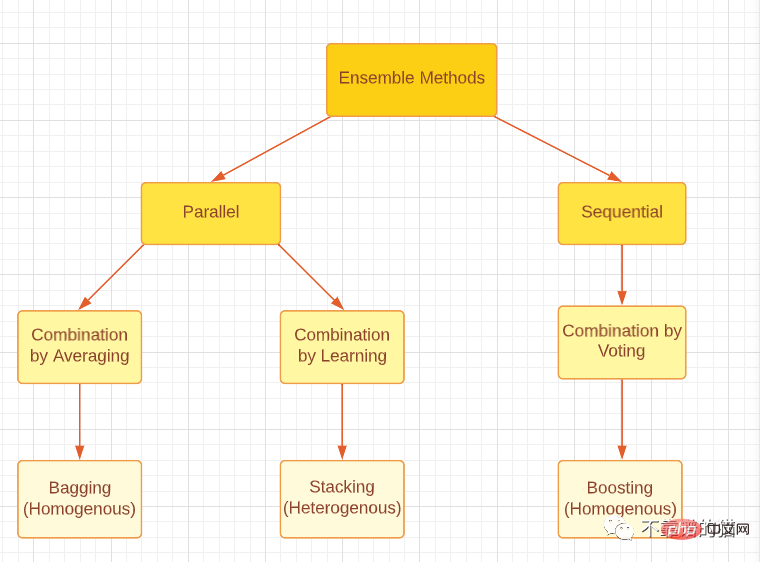

Note that all three ensemble methods (boosting, bagging, and stacking) can be built from either homogeneous or heterogeneous weak learners. The most common approach is to use homogeneous weak learners for bagging and boosting, and heterogeneous weak learners for stacking. The figure below provides a good classification of the three main ensemble methods.

Classification of the main types of ensemble methods

Ensemble diversity

Ensemble diversity refers to how much the base learners differ from one another, and it has important implications for building good ensembles. It has been shown theoretically that, with an appropriate combination method, completely independent (diverse) base learners can minimize the error, while completely (highly) correlated learners bring no improvement. This is a hard problem in practice, because all weak learners are trained to solve the same problem on the same dataset, which makes them highly correlated. On top of this, we need to make sure the weak learners are not very poor models, as that can even make the ensemble perform worse. Conversely, combining only strong, accurate base learners may not be as effective as combining some weak learners with some strong ones. Therefore, a balance must be struck between the accuracy of the base learners and the diversity among them.
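The effect of correlation can be quantified with a standard identity. Assume n base learners whose outputs each have variance σ² and every pair has correlation ρ (an idealization). The variance of their average is then ρσ² + (1 − ρ)σ²/n: the second term vanishes as n grows but the first does not, so with ρ = 1 averaging brings no improvement, while with ρ = 0 it keeps helping. The sketch checks the formula by simulation, building correlated outputs from a shared random component; the function names are hypothetical.

```python
import random
from statistics import pvariance

def variance_of_average(sigma2, rho, n):
    """Var of the mean of n outputs with equal variance sigma2 and pairwise correlation rho."""
    return rho * sigma2 + (1 - rho) * sigma2 / n

def simulated_variance(sigma2, rho, n, trials=20_000, seed=0):
    """Monte Carlo check: output_m = sigma * (sqrt(rho)*shared + sqrt(1-rho)*own_m)."""
    rng = random.Random(seed)
    sigma = sigma2 ** 0.5
    averages = []
    for _ in range(trials):
        shared = rng.gauss(0.0, 1.0)  # error component common to every learner
        outs = [sigma * (rho ** 0.5 * shared + (1 - rho) ** 0.5 * rng.gauss(0.0, 1.0))
                for _ in range(n)]
        averages.append(sum(outs) / n)
    return pvariance(averages)

for rho in (0.0, 0.5, 1.0):
    print(rho, variance_of_average(1.0, rho, 10), round(simulated_variance(1.0, rho, 10), 3))
```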

How to achieve ensemble diversity?

1. Data processing

We can divide the dataset into subsets for the base learners. If the dataset is large, we can simply split it into equal parts and feed each part to a different learner. If the dataset is small, we can use random sampling with replacement to generate new datasets from the original one. Bagging does exactly this; the technique is called bootstrapping. Bootstrapping introduces randomness because each generated dataset differs from the others. However, each bootstrap sample contains on average only about 63.2% (1 − 1/e) of the distinct original observations, the rest of its entries being duplicates, and different samples share many observations, so the datasets are not completely independent.
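A quick check of that overlap. A given observation is missed by one draw with probability 1 − 1/n, so it is missed by all n draws with probability (1 − 1/n)^n, which approaches 1/e ≈ 0.368; each bootstrap sample therefore contains about 63.2% of the distinct observations, and the remaining ~36.8% are the "out-of-bag" points. The sketch compares the formula with one simulated sample; the function names are illustrative.

```python
import random

def bootstrap_sample(data, rng):
    """Draw len(data) items uniformly at random, with replacement."""
    return [rng.choice(data) for _ in range(len(data))]

def unique_fraction(n, seed=0):
    """Fraction of the n original observations that appear in one bootstrap sample."""
    rng = random.Random(seed)
    return len(set(bootstrap_sample(list(range(n)), rng))) / n

n = 10_000
expected = 1 - (1 - 1 / n) ** n  # P(a given observation is drawn at least once)
print(round(unique_fraction(n), 3), round(expected, 3))
```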

2. Input features

Every dataset contains features that describe the data. Instead of using all features in every model, we can create different subsets of features, build a different dataset from each subset, and train each model on one of them. This is most effective when the data contain many redundant features, and less effective when there are only a few features. Random forests use a variant of this idea, choosing a random subset of candidate features at each split of each tree.
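A sketch of building per-learner feature views, assuming the data are a list of rows and each base learner gets its own random subset of columns (the per-learner variant; a random forest instead resamples candidate features at every split). The function names and toy data are hypothetical.

```python
import random

def random_feature_subsets(n_features, subset_size, n_learners, seed=0):
    """One random subset of feature indices per base learner."""
    rng = random.Random(seed)
    return [sorted(rng.sample(range(n_features), subset_size)) for _ in range(n_learners)]

def project(rows, features):
    """Keep only the selected feature columns of each row."""
    return [[row[j] for j in features] for row in rows]

rows = [[1, 2, 3, 4, 5], [6, 7, 8, 9, 10]]  # toy dataset: 2 samples, 5 features
subsets = random_feature_subsets(n_features=5, subset_size=3, n_learners=4)
views = [project(rows, s) for s in subsets]  # one training view per learner
print(subsets)
```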

3. Learning parameters

This technique introduces diversity by training the base learning algorithm with different parameter settings, for example different hyperparameter values. For neural networks, individual networks can be given different initial weights or different regularization terms.
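A minimal sketch of parameter-driven diversity, assuming one-dimensional ridge regression without an intercept, whose weight has the closed form w = Σxy / (Σx² + λ). Training the same algorithm with several regularization strengths λ (values chosen arbitrarily here) yields learners that disagree; for neural networks, different random seeds for the initial weights play a similar role. Names and data are hypothetical.

```python
from statistics import mean

def fit_ridge_1d(xs, ys, lam):
    """1-D ridge regression without intercept: w = sum(x*y) / (sum(x*x) + lam)."""
    w = sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)
    return lambda x: w * x

xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]
lambdas = [0.0, 1.0, 10.0]  # one (arbitrary) regularization strength per learner
learners = [fit_ridge_1d(xs, ys, lam) for lam in lambdas]
ensemble = lambda x: mean(f(x) for f in learners)  # combine by simple averaging
print([round(f(1.0), 3) for f in learners], round(ensemble(1.0), 3))
```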

Ensemble pruning

Lastly, ensemble pruning can yield better ensemble performance in some cases. Ensemble pruning means combining only a subset of the learners instead of all of them. In addition, smaller ensembles save storage and computing resources, which improves efficiency.
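One common pruning strategy is greedy forward selection on a validation set: start from an empty ensemble, repeatedly add the learner that most reduces the validation error of the averaged prediction, and stop when no addition helps. This is only one of several pruning approaches; the sketch assumes regression outputs combined by simple averaging, and the function names and toy predictions are hypothetical.

```python
from statistics import mean

def mse_of_average(pred_lists, targets):
    """Validation MSE of the simple average of several learners' predictions."""
    avg = [mean(col) for col in zip(*pred_lists)]
    return mean((a - t) ** 2 for a, t in zip(avg, targets))

def greedy_prune(val_preds, targets):
    """Forward selection: add the learner that most lowers the averaged
    ensemble's validation MSE; stop when no addition helps."""
    selected, best = [], float("inf")
    remaining = list(range(len(val_preds)))
    while remaining:
        scores = [(mse_of_average([val_preds[j] for j in selected + [i]], targets), i)
                  for i in remaining]
        score, i = min(scores)  # ties go to the lower learner index
        if score >= best:
            break
        selected.append(i)
        remaining.remove(i)
        best = score
    return selected, best

targets = [0.0, 0.0, 0.0, 0.0]
val_preds = [
    [1.0, -1.0, 1.0, -1.0],  # learner 0
    [-1.0, 1.0, -1.0, 1.0],  # learner 1: errors opposite to learner 0
    [3.0, 3.0, 3.0, 3.0],    # learner 2: consistently off
]
selected, best = greedy_prune(val_preds, targets)
print(selected, best, mse_of_average(val_preds, targets))
```

In this toy case the errors of the first two learners cancel, so the pruned two-learner ensemble beats the full three-learner ensemble, whose validation MSE is 1.0.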

Conclusion

This article is only an overview of ensemble methods in machine learning. I hope readers will study the topic in more depth and, more importantly, apply what they learn to real problems.

The above is the detailed content of Overview of ensemble methods in machine learning. For more information, please follow other related articles on the PHP Chinese website!
