Turing Award winner Geoffrey Hinton: My fifty-year deep learning career and research methods

He has never formally taken a computer science course. As an undergraduate at Cambridge University he studied physiology and physics, turned to philosophy along the way, and in the end received a bachelor's degree in psychology. Tired of studying, he became a carpenter, but after running into setbacks he went back to school, earning a doctorate in artificial intelligence, then an "unpopular major", at the University of Edinburgh. His poor mathematics made him despair when doing research, and after becoming a professor he always asked his graduate students about the neuroscience and computer science he did not understand.

His academic path looks like a series of stumbles, but Geoffrey Hinton has had the last laugh. He is known as the "Godfather of Deep Learning" and has won the Turing Award, the highest honor in computer science.

Hinton was born into a wealthy family of scientists in the UK, but his academic career and the ups and downs of his life have been rich and unusual.

His father, Howard Everest Hinton, was a British entomologist, and his mother, Margaret, was a teacher; both were communists. His uncle was the famous economist Colin Clark, who invented the economic term "Gross National Product", and his great-great-grandfather was the famous logician George Boole, whose invention of Boolean algebra laid the foundation of modern computer science.

Growing up in a family full of scientists, Hinton was independent-minded and tenacious from childhood, and he carried the responsibility of upholding the family's reputation. His mother gave him two choices: "either become a scholar or be a failure." Coasting was not an option. Even though he struggled a great deal in college, he still completed his studies.

In 1973, he began a doctorate in artificial intelligence under Christopher Longuet-Higgins at the University of Edinburgh. At that time almost no one believed in neural networks, and his supervisor urged him to give up researching them. The doubts around him were not enough to shake his belief in neural networks. Over the following ten years he worked on the backpropagation algorithm and the Boltzmann machine, but he would have to wait decades more for deep learning to take off and for his research to become widely known.

After completing his Ph.D., Hinton also went through hardships in life. He and his first wife, Ros (a molecular biologist), went to the United States, where he obtained a teaching position at Carnegie Mellon University. However, dissatisfied with the Reagan administration and with the fact that artificial intelligence research was funded largely by the U.S. Department of Defense, they moved to Canada in 1987. Hinton began teaching computer science at the University of Toronto and doing research in the machine and brain learning program of the Canadian Institute for Advanced Research (CIFAR).

Unfortunately, in 1994 his wife Ros died of ovarian cancer, leaving Hinton alone to raise their two young children, one of whom has ADHD and other learning disabilities. Later he married his current wife, Jackie (an art historian), but a similar blow struck again: Jackie was also diagnosed with cancer a few years ago.

He himself suffers from a severe lower back condition that prevents him from sitting like most people, so he has to work standing up most of the time. For the same reason he refuses to fly, because passengers are required to sit upright during takeoff and landing, which limits his ability to give academic talks elsewhere.

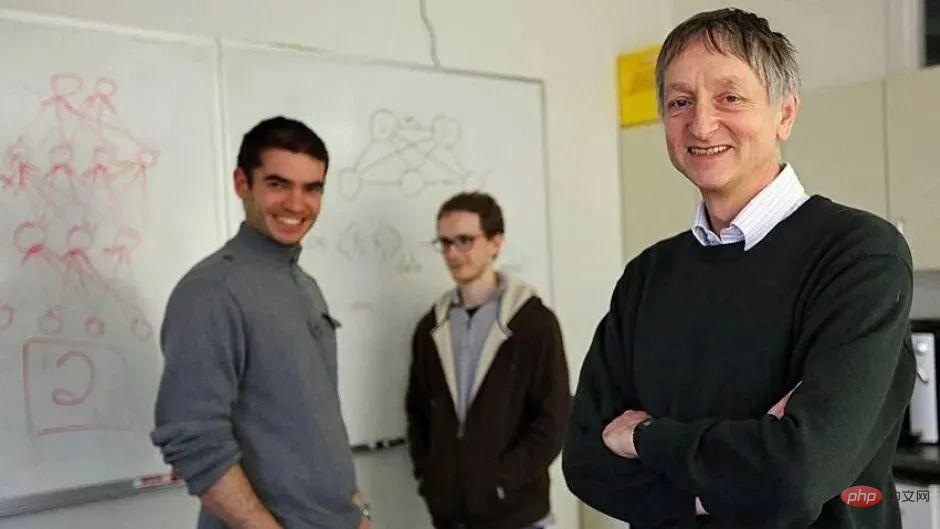

From left to right: Ilya Sutskever, Alex Krizhevsky and Geoffrey Hinton

After nearly half a century of technical persistence and personal trials, the dawn finally broke in 2012. AlexNet, proposed by Hinton and his students Alex Krizhevsky and Ilya Sutskever, shocked the industry, reshaping the field of computer vision and launching a new golden age of deep learning.

Also at the end of 2012, he and the two students founded the three-person company DNN-research and sold it to Google for the "sky-high price" of US$44 million. He went from being a scholar to being a Google vice president and Engineering Fellow.

In 2019, Hinton, an AI professor with a non-computer science background, won the Turing Award together with Yoshua Bengio and Yann LeCun.

After many hardships, the 74-year-old "godfather of deep learning" is still on the front line of AI research. He is not afraid of the doubts raised by other scholars, and he frankly admits which of his own judgments and predictions have not come true. In any case, he still believes that, ten years after the rise of deep learning, the technology will continue to deliver, and he is thinking about and looking for the next breakthrough.

So where does his firm belief in neural networks come from? Amid the current skepticism that deep learning has "hit a wall," how does he view the next stage of AI development? What message does he have for the younger generation of AI researchers?

Recently, on The Robot Brains Podcast hosted by Pieter Abbeel, Hinton spoke candidly about his academic career, the future of deep learning, his research experience, and the inside story of the DNN-research auction. Here is what he had to say.

8-year-old Hinton

The thing that has had the most profound impact on me is the education I received in childhood. My family had no religious beliefs and my father was a communist, but because science education was better in private schools, he insisted on sending me to an expensive Christian private school when I was 7. Every child there except me believed in God.

As soon as I got home, my family would say that religion was all nonsense. Perhaps because I had a strong sense of self, I did not believe it either; I concluded that belief in God was wrong and developed a habit of questioning others. And many years later, they did discover that their original beliefs were wrong and came to realize that God might not really exist.

So it may sound ironic if I now tell you to have faith and that faith is important, but we do need faith in scientific research, so that even when others say you are wrong, you can keep walking the right path.

1 In the 1970s, I was a "lonely warrior" studying neural networks

My educational background is very varied. In my first year at Cambridge University, I was the only student studying physics and physiology at the same time, which gave me some grounding in science and engineering for my later research career.

However, I was not very good at mathematics, so I had to give up physics. I was very curious about the meaning of life, so I turned to philosophy, and after getting somewhere with that, I switched again to psychology.

My last year at Cambridge was very difficult and unhappy, so I dropped out as soon as I finished my exams and went to work as a carpenter. In fact, I prefer being a carpenter to doing anything else.

When I was in high school, I would go home after classes and do some carpentry, and that was when I was happiest. So I became a carpenter, but after about six months I found that a carpenter earned too little to make a living, even though there is more to carpentry than meets the eye. Decorating is much easier and the money comes quickly, so while working as a carpenter I also took on decorating jobs. Unless you are a master carpenter, you will definitely not earn as much from carpentry as from decorating.

I did not realize I was not cut out for this until one day I met a really good carpenter. A coal company had asked him to make a door for a dark, damp basement. Given the conditions, he arranged the wood in opposing directions so that any warping caused by the wood swelling with moisture would cancel out, an approach I had never thought of. He could also cut a piece of wood square with a handsaw. He explained to me: if you want to cut a piece of wood square, you have to line up the saw and the wood with the room.

At that time, I felt that I was too far behind him, so I thought maybe I should go back to school to study artificial intelligence.

Later, I went to the University of Edinburgh to study for a PhD in neural networks. My supervisor was the famous Professor Christopher Longuet-Higgins. In his 30s he had worked out the structure of boron hydride and almost won the Nobel Prize for it, which was really impressive. To this day I do not understand what he was studying. I only know that it has to do with quantum mechanics and hinges on the fact that a rotation through 360 degrees is not the identity operator, but a rotation through 720 degrees is.

He had been very interested in the relationship between neural networks and holograms, but around the time I arrived at the University of Edinburgh he suddenly lost interest in neural networks. The main reason was that, after reading a paper by Winograd (an American computer scientist), he became completely convinced that neural networks had no future and that he should switch to symbolic artificial intelligence. That paper had a great influence on him.

In fact, he did not agree with my research direction and wanted me to do research that would more easily win recognition. However, he was a good person: he still told me to stick to my own direction and never stopped me from studying neural networks.

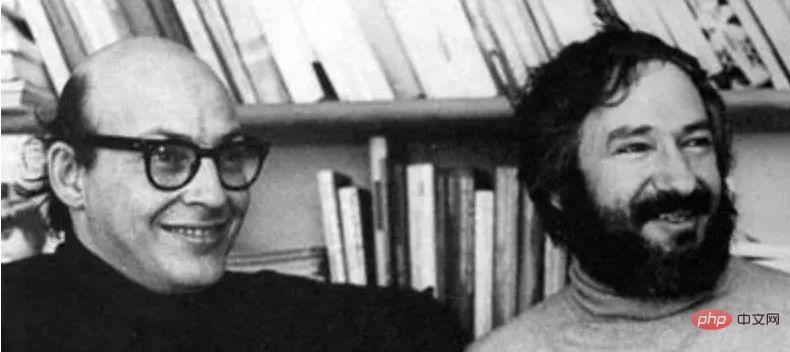

Marvin Minsky and Seymour Papert

In the early 1970s, everyone around me asked: Marvin Minsky and Seymour Papert have both said that neural networks have a bleak future, so why do you keep working on them? To be honest, I felt lonely.

In 1973, I gave my first talk to a group, on how to do true recursion with neural networks. In that first project, I found that if you want a neural network to draw a shape, and you divide the shape into parts that can each be drawn by the same kind of neural hardware, then the neural center that stores the whole shape needs to remember the position, orientation and size of the overall shape.

If the neural network that is drawing the shape is interrupted and you want another neural network to carry on, you need somewhere to store the shape and the progress so far before the drawing can continue. The difficulty is how to get a neural network to do this. Obviously, just copying neurons is not enough, so I wanted to design a system that adapts in real time and records the work in progress in fast weights. That way, by restoring the relevant state, you can pick the task up again.

So I built a neural network that allows true recursive calls by reusing the same neurons and weights for the recursive call as for the higher-level call. However, I am not a good speaker, so I felt that no one understood what I was saying.

They asked why anyone would do recursion in a neural network when you could obviously just use recursion in Lisp. What they did not realize is that there is a whole range of things that cannot be solved unless neural networks can implement things like recursion. Now this has become an interesting problem again, so I will wait one more year until the problem becomes a 50-year-old curio, and then I will write a research paper on fast weights.

Not everyone was opposed to neural networks back then. If we go back to the 1950s, researchers such as von Neumann and Turing still believed in neural networks, and both were very interested in how the brain works. Turing, in particular, believed in training neural networks through reinforcement, which also gave me confidence in my research direction.

It is a pity that they died young. Had they lived a few more years, their intellect would have been enough to shape the development of a field. The UK might already have made a breakthrough in this area, and the state of artificial intelligence today might be very different.

2 From pure academic to Google employee

The main reason I went to work at Google is that my son is disabled and I needed to make money for him.

In 2012, I felt that I could make a lot of money by giving lectures on Coursera, so I opened a neural network-related course. The early Coursera software was not easy to use, and I was not very good at operating software, so I often felt irritable.

Initially I reached an agreement with the University of Toronto: if these courses made money, the university would give part of it to the lecturers. Although they did not state the exact split, some people said it would be half and half, so I happily accepted.

While recording the course, I asked the university to produce a video for me, but they asked me, "Do you know how expensive it is to make a video?" Of course I did, because I had been making the videos myself, but the university still provided no support. Then, after I had started the course and could no longer back out, the provost decided unilaterally, without consulting me or anyone else, that the university would take all the money and I would not get a penny. It was a complete violation of the original agreement.

They asked me to record the course and said it was part of my teaching duties, but it was not part of my teaching load; it was simply a course based on related lectures I had given before. So I never used Coursera again in my later teaching. That incident made me so angry that I began to consider a different career.

Just then, many companies suddenly extended an olive branch, offering to fund us generously or to support us in setting up a company. This showed that many companies were very interested in our research.

Since the government had already given us a research grant, we had not wanted to make extra money and just wanted to focus on our research. But being cheated out of money by the university made me want to make more, so I later auctioned off the newly founded DNN-research.

The transaction took place during NIPS (the Neural Information Processing Systems conference) in December 2012. The conference was held at a casino by Lake Tahoe. In the basement, under flashing lights, shirtless gamblers in a smoke-filled room were shouting, "You won 25,000, this is all yours"... Meanwhile, a company was being auctioned upstairs.

It was like acting in a movie, exactly like what I had seen on social media; it was really great. We auctioned the company because we had no idea what it was worth, so I consulted an intellectual property lawyer. He said there were two options: one was to hire a professional negotiator to negotiate with those large companies, which might turn unpleasant; the other was to hold an auction.

As far as I know, this was the first time in history that a small company like ours had held an auction. We ended up running the bidding over Gmail, because I had worked at Google that summer and knew they would not casually snoop on users' email, and I still believe that now. Microsoft, however, was unhappy with our decision.

The auction worked as follows: companies taking part had to send their bids to us via Gmail, and we then forwarded each bid to the other participants along with its Gmail timestamp. The starting bid was $500,000, then someone bid $1 million, and we were delighted to watch the bids climb and to realize that we were worth much more than we had thought. When the bidding reached a certain level (which seemed astronomical to us at the time), we stopped the auction, since we preferred to work at Google.

Going to work at Google was the right choice. I have been here for nine years now. When I reach ten years, they should give me an award; after all, only a handful of people have worked here that long.

People prefer working at Google to other companies, and so do I. The main reason I like the company is that the Google Brain team is great. My focus is on how to build large-scale learning systems and on how the brain works. Google Brain not only has the ample resources needed to study large-scale systems, but also many outstanding people to talk to and learn from.

I am a straightforward person, and Jeff Dean is a smart man who is a pleasure to get along with. He wanted me to do basic research and try to come up with new algorithms, which is what I love doing. I am not good at managing large teams to improve the accuracy of speech recognition by one percentage point. Bringing a new revolution to the field is what I have always wanted to do.

3 The next big thing in deep learning

The development of deep learning rests on stochastic gradient descent in large networks, with massive data and powerful computing power. On that foundation some ideas have taken root more easily, such as dropout and much of current research, but all of it depends on powerful computing power, massive data, and stochastic gradient descent.

It is often said that deep learning has encountered a bottleneck, but in fact it has been moving forward. I hope skeptics will write down what deep learning cannot do now. Five years from now, we will prove that deep learning can do these things.

Of course, these tasks must be strictly defined. For example, Hector Levesque (Professor of Computer Science at the University of Toronto) is a typical AI person, and a very good one. Hector established a benchmark, the Winograd schema, one example of which is: "The trophy does not fit in the suitcase because it is too small; the trophy does not fit in the suitcase because it is too big."

If you want to translate these two sentences into French, you must understand that in the first case "it" refers to the suitcase and in the second case "it" refers to the trophy, because the two nouns have different genders in French. Early neural machine translation chose at random, so when it translated these sentences into French it could not get the gender right. But this has been improving. At least Hector gave a clearly defined test of what neural networks can do. The results are not perfect, but they are at least much better than random translation. I wish skeptics would pose more questions like this.

I think the very successful paradigm of deep learning will continue to thrive: adjusting a large number of real-valued parameters based on the gradient of some objective function. But we probably will not use backpropagation to obtain the gradients, and the objective functions may be more local and distributed.
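As a rough illustration of the paradigm described here, adjusting real-valued parameters along the gradient of an objective function, the following minimal Python sketch fits a two-parameter line by stochastic gradient descent. The toy data, the function name `sgd_fit`, and the learning rate are assumptions chosen for illustration and do not come from the interview. For a model this small the gradients are simply written out by hand.

```python
import random


def sgd_fit(data, lr=0.05, epochs=200, seed=0):
    """Fit y ~ w * x + b by stochastic gradient descent on squared error.

    Hypothetical helper for illustration only.
    """
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    samples = list(data)
    for _ in range(epochs):
        rng.shuffle(samples)  # visit the examples in a random order each epoch
        for x, y in samples:
            err = (w * x + b) - y
            # Gradient of 0.5 * err**2 with respect to w is err * x, and to b is err.
            w -= lr * err * x
            b -= lr * err
    return w, b


# Noise-free toy data generated from y = 3x + 1 (an assumption for the demo).
data = [(k / 10.0, 3.0 * (k / 10.0) + 1.0) for k in range(-10, 11)]
w, b = sgd_fit(data)
print(round(w, 3), round(b, 3))
```

The same update rule applies whether there are two parameters or billions; what changes in practice is how the gradient is obtained.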

My personal guess is that the next big thing in AI will be learning algorithms for spiking neural networks. Such an algorithm would handle both the discrete decision of whether to spike and the continuous decision of when to spike, so that spike timing can be used for interesting computations that are actually hard to do in non-spiking neural networks. It is a major regret of my research career that I have not been able to study learning algorithms for spiking neural networks in depth.
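To make the "whether to spike" and "when to spike" distinction concrete, here is a minimal sketch of a leaky integrate-and-fire neuron in discrete time. This is a standard textbook simplification, not a learning algorithm and not something proposed in the interview; the function name `lif_spike_times` and the `threshold` and `leak` values are assumptions for illustration.

```python
def lif_spike_times(input_current, threshold=1.0, leak=0.9):
    """Simulate a leaky integrate-and-fire neuron over discrete time steps.

    Returns the time steps at which the neuron spikes (hypothetical helper).
    """
    v = 0.0  # membrane potential
    spikes = []
    for t, i_t in enumerate(input_current):
        v = leak * v + i_t  # potential decays, then integrates the new input
        if v >= threshold:  # discrete decision: spike or not
            spikes.append(t)  # the step index carries the timing information
            v = 0.0  # reset after a spike
    return spikes


weak = lif_spike_times([0.3] * 10)
strong = lif_spike_times([0.6] * 10)
print(weak)    # [3, 7]
print(strong)  # [1, 3, 5, 7, 9]
```

A stronger input makes the neuron spike earlier and more often, so the spike times themselves encode information about the input. The threshold makes the output a discontinuous function of the potential, which is one reason ordinary gradient-based training does not carry over directly.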

I have no intention of studying AGI, and I try to avoid defining what AGI is, because there are various problems behind the AGI vision, and simply scaling up the number of parameters or the connections between neurons will not yet deliver general artificial intelligence.

AGI envisions a human-like intelligent robot that is as smart as humans. I don’t think intelligence will necessarily develop this way, but I hope it develops more in a symbiotic way. I think maybe we will design intelligent computers, but they won't be autonomous like humans. If their purpose is to kill other people, then they probably have to be autonomous, but hopefully we won't go in that direction.

4 Believe in research intuition and be driven by curiosity

Everyone’s way of thinking is different, and we may not necessarily understand our own thinking process. I like to go with my gut, and I prefer to use analogies when doing research. I believe that the basic way of human reasoning is based on using the right features in large vectors to make analogies, which is what I do myself.

I often test an idea over and over on my computer to see what works and what does not. It is certainly important to understand the underlying mathematics and to do foundational work, and some formal arguments are also needed, but those are not what I want to do.

Let's do a small test. Suppose there are two talks at the NIPS conference: one uses a new, clever and elegant method to prove a known result; the other presents a new and powerful learning algorithm whose underlying logic is not yet understood.

If you had to choose one of the two talks, which would you attend? The first may be easier to accept than the second, and people seem to be more curious about new ways of proving known things, but I would go to the second. After all, in the field of neural networks, almost all progress has come from people's flashes of intuition while working through the mathematics, rather than from conventional reasoning.

So should you trust your intuition? I have a criterion: either you have good intuition or you do not. If you do not have good intuition, it does not matter what you do; but if you do, you should trust it and do what you think is right.

Of course, keen intuition comes from your understanding of the world and a lot of hard work. When you accumulate a lot of experience with the same thing, you will develop intuition.

I am mildly manic-depressive, so I usually swing between two states: moderate self-criticism makes me very creative, while extreme self-criticism makes me mildly depressed. But I think this is more productive than having just one mood. When you are in the manic phase, you ignore the obvious problems and push on, confident that something interesting and exciting is waiting to be discovered. When a problem leaves you at a loss, you must persevere, clarify your thoughts, and carefully weigh the quality of your ideas.

Because of these alternating moods, I often tell everyone that I have figured out how the brain works, only to find, disappointingly, a while later that my conclusion was wrong. But that is how things should go, as in the two lines of William Blake's poem: "Joy and woe are woven fine, a clothing for the soul divine."

I think the same is true of scientific research. If you are not excited by success and not frustrated by failure, you are not a researcher in the true sense.

In my research career, although I sometimes feel that I am completely unable to figure out some algorithms, I have never really felt lost or hopeless. In my opinion, no matter what the end result is, there is always something worth doing. Excellent researchers always have a lot they want to do, but they just don’t have the time.

When I was teaching at the University of Toronto, I found that the undergraduate students majoring in computer science were very good, and many undergraduate students majoring in cognitive science who minored in computer science also performed quite well. Some students are not good at technology, but they still do a good job in research. They love computer science and really want to understand how human cognition is formed. They have endless interest.

Scientists like Blake Richards (assistant professor at the Montreal Neurological Institute) know exactly what problem they want to solve, and then just move in that direction. Today, many scientists don’t know what they want to do.

Looking back, I think young people should find the direction they are interested in instead of simply learning some skills. Driven by your own interests, you will take the initiative to master some necessary knowledge to find the answers you want, which is more important than blindly learning technology.

Now that I think about it, I should have learned more mathematics when I was young. Linear algebra would have been much easier if I had done so.

Mathematics often makes me feel hopeless, which makes some papers hard to read; making sense of all the symbols is a real challenge, so I do not read many papers. For neuroscience questions I usually ask Terry Sejnowski (a professor of computational neuroscience). For computer science questions I ask graduate students to explain things to me. When I need mathematics to show whether an idea is feasible, I always find a way.

The idea of making the world a better place through research is great, but I enjoy exploring the upper limits of human creativity even more. I really want to understand how the brain works, and I believe we need some new ideas for that, such as learning algorithms for spiking neural networks.

I believe the best research is done by a large group of graduate students who are given ample resources. Research requires youthful energy, endless motivation, and a strong interest in the work.

You must be driven by curiosity to do the best basic research. Only then will you be motivated to ignore the obvious obstacles and to anticipate the results you might achieve. For routine research, creativity is not the most important thing.

It's always a good idea to figure out what a bunch of smart people are working on, and then you can do different research. If you have already made some progress in a certain field, you don't need other new ideas, you just need to dig deeper into the existing research to succeed. But if you want to work on some new ideas, like building big hardware, that's also great, although the road ahead may be a bit rocky.

The above is the detailed content of Turing Award winner Geoffrey Hinton: My fifty-year deep learning career and research methods. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1389

1389

52

52

Beyond ORB-SLAM3! SL-SLAM: Low light, severe jitter and weak texture scenes are all handled

May 30, 2024 am 09:35 AM

Beyond ORB-SLAM3! SL-SLAM: Low light, severe jitter and weak texture scenes are all handled

May 30, 2024 am 09:35 AM

Written previously, today we discuss how deep learning technology can improve the performance of vision-based SLAM (simultaneous localization and mapping) in complex environments. By combining deep feature extraction and depth matching methods, here we introduce a versatile hybrid visual SLAM system designed to improve adaptation in challenging scenarios such as low-light conditions, dynamic lighting, weakly textured areas, and severe jitter. sex. Our system supports multiple modes, including extended monocular, stereo, monocular-inertial, and stereo-inertial configurations. In addition, it also analyzes how to combine visual SLAM with deep learning methods to inspire other research. Through extensive experiments on public datasets and self-sampled data, we demonstrate the superiority of SL-SLAM in terms of positioning accuracy and tracking robustness.

The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

Mar 06, 2024 pm 05:34 PM

The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

Mar 06, 2024 pm 05:34 PM

StableDiffusion3’s paper is finally here! This model was released two weeks ago and uses the same DiT (DiffusionTransformer) architecture as Sora. It caused quite a stir once it was released. Compared with the previous version, the quality of the images generated by StableDiffusion3 has been significantly improved. It now supports multi-theme prompts, and the text writing effect has also been improved, and garbled characters no longer appear. StabilityAI pointed out that StableDiffusion3 is a series of models with parameter sizes ranging from 800M to 8B. This parameter range means that the model can be run directly on many portable devices, significantly reducing the use of AI

This article is enough for you to read about autonomous driving and trajectory prediction!

Feb 28, 2024 pm 07:20 PM

This article is enough for you to read about autonomous driving and trajectory prediction!

Feb 28, 2024 pm 07:20 PM

Trajectory prediction plays an important role in autonomous driving. Autonomous driving trajectory prediction refers to predicting the future driving trajectory of the vehicle by analyzing various data during the vehicle's driving process. As the core module of autonomous driving, the quality of trajectory prediction is crucial to downstream planning control. The trajectory prediction task has a rich technology stack and requires familiarity with autonomous driving dynamic/static perception, high-precision maps, lane lines, neural network architecture (CNN&GNN&Transformer) skills, etc. It is very difficult to get started! Many fans hope to get started with trajectory prediction as soon as possible and avoid pitfalls. Today I will take stock of some common problems and introductory learning methods for trajectory prediction! Introductory related knowledge 1. Are the preview papers in order? A: Look at the survey first, p

DualBEV: significantly surpassing BEVFormer and BEVDet4D, open the book!

Mar 21, 2024 pm 05:21 PM

DualBEV: significantly surpassing BEVFormer and BEVDet4D, open the book!

Mar 21, 2024 pm 05:21 PM

This paper explores the problem of accurately detecting objects from different viewing angles (such as perspective and bird's-eye view) in autonomous driving, especially how to effectively transform features from perspective (PV) to bird's-eye view (BEV) space. Transformation is implemented via the Visual Transformation (VT) module. Existing methods are broadly divided into two strategies: 2D to 3D and 3D to 2D conversion. 2D-to-3D methods improve dense 2D features by predicting depth probabilities, but the inherent uncertainty of depth predictions, especially in distant regions, may introduce inaccuracies. While 3D to 2D methods usually use 3D queries to sample 2D features and learn the attention weights of the correspondence between 3D and 2D features through a Transformer, which increases the computational and deployment time.

Understand in one article: the connections and differences between AI, machine learning and deep learning

Mar 02, 2024, 11:19 AM

In today's wave of rapid technological change, Artificial Intelligence (AI), Machine Learning (ML) and Deep Learning (DL) are like bright stars leading the new wave of information technology. These three terms appear frequently in cutting-edge discussions and practical applications, but for many newcomers to the field, their specific meanings and the connections between them may still be shrouded in mystery. So let's take a look at this picture first. It shows that deep learning, machine learning and artificial intelligence are closely related and progressively nested. Deep learning is a specific subfield of machine learning, and machine learning

Super strong! Top 10 deep learning algorithms!

Mar 15, 2024, 03:46 PM

Almost 20 years have passed since the concept of deep learning was proposed in 2006. Deep learning, a revolution in the field of artificial intelligence, has spawned many influential algorithms. So, what do you think are the top 10 deep learning algorithms? The following are the top algorithms in my opinion. They all occupy an important position in terms of innovation, application value and influence. 1. Deep neural network (DNN) Background: The deep neural network (DNN), also called the multi-layer perceptron, is the most common deep learning algorithm. When it was first invented, it was questioned because of the computing power bottleneck. It was not until recent years, with the explosion of computing power and data, that the breakthrough came. A DNN is a neural network model that contains multiple hidden layers. In this model, each layer passes input to the next layer and

AlphaFold 3 is launched, comprehensively predicting the interactions and structures of proteins and all living molecules, with far greater accuracy than ever before

Jul 16, 2024, 12:08 AM

Editor | Radish Skin. Since the release of the powerful AlphaFold2 in 2021, scientists have been using protein structure prediction models to map protein structures within cells, discover drugs, and draw a "cosmic map" of every known protein interaction. Just now, Google DeepMind released the AlphaFold3 model, which can perform joint structure prediction for complexes including proteins, nucleic acids, small molecules, ions and modified residues. The accuracy of AlphaFold3 is significantly improved over many earlier dedicated tools (protein-ligand interaction, protein-nucleic acid interaction, antibody-antigen prediction). This shows that within a single unified deep learning framework, it is possible to achieve

How to use CNN and Transformer hybrid models to improve performance

Jan 24, 2024, 10:33 AM

The Convolutional Neural Network (CNN) and the Transformer are two different deep learning models that have shown excellent performance on different tasks. CNNs are mainly used for computer vision tasks such as image classification, object detection and image segmentation. They extract local features from the image through convolution operations, and reduce feature dimensionality and gain spatial invariance through pooling operations. In contrast, the Transformer is mainly used for natural language processing (NLP) tasks such as machine translation, text classification, and speech recognition. It uses a self-attention mechanism to model dependencies in sequences, avoiding the sequential computation of traditional recurrent neural networks. Although these two models are used for different tasks, they have similarities in sequence modeling, so