Amazon makes a high-profile entry into the ChatGPT war, releasing the Titan large models and a free AI programming assistant. CEO: It will change every experience

Overnight, Amazon made its move to overtake on the bend.

While the world's major technology giants have been embracing large models and AI-generated content (AIGC), today's hottest technologies, Amazon has given only one impression: stealth.

Although AWS has been providing machine learning computing power to star large-model companies such as Hugging Face and Stability AI, Amazon has rarely disclosed details of these partnerships. By some observers' count, Amazon mentioned AI almost zero times in its recent earnings calls.

But now, Amazon’s attitude has changed dramatically.

On April 13, Amazon CEO Andy Jassy released the 2022 annual letter to shareholders, saying he was confident that Amazon could control costs while continuing to invest in new growth areas. In the letter, he stated that Amazon will invest heavily in the currently popular fields of large language models (LLMs) and generative artificial intelligence (AI).

Over the past few decades, Amazon has used machine learning in a variety of applications, Jassy said. The company is now developing its own large language model, which has the potential to improve "almost any customer experience."

He had barely finished speaking when Amazon's large models and services were unveiled.

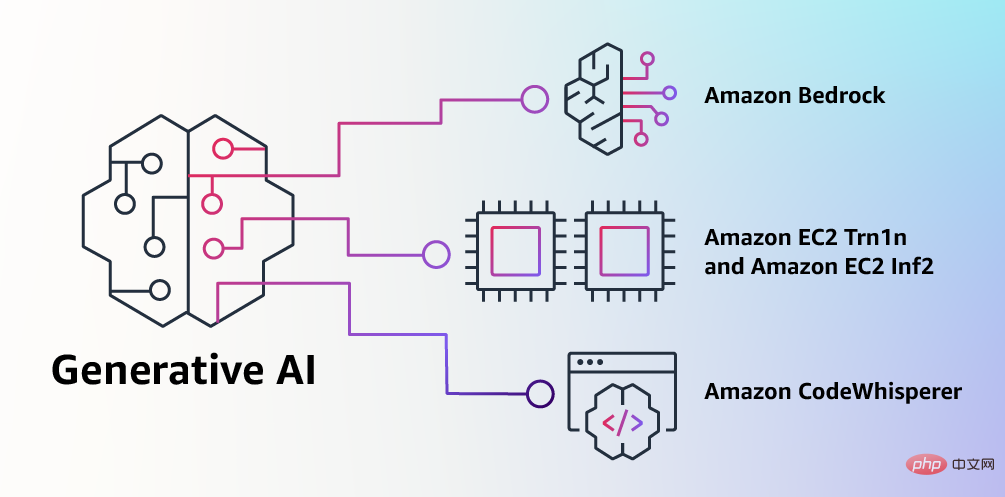

"Most companies want to use large language models, but truly useful language models require billions of dollars and years to develop. Training, people don't want to go through that," Andy Jassy said. "So they're looking to take an already huge base model and then be able to customize it for their own purposes. That's Bedrock."

Amazon version of ChatGPT: Yes part of its cloud services.

Big Models

In its latest announcement, AWS introduced a new set of models, collectively called “Amazon Titan.”

The Titan series comprises two types of model: a text model for content generation, and an embedding model that creates vector embeddings for building efficient search functions and similar features.

The text generation model is similar to OpenAI’s GPT-4 (though not necessarily equivalent in performance) and can perform tasks such as writing blog posts and emails, summarizing documents, and extracting information from databases. Embedding models translate textual inputs (such as words and phrases) into numerical representations, called embeddings, that capture the semantics of the text.

ChatGPT and Microsoft's Bing chatbot, both based on OpenAI language models, sometimes produce inaccurate information due to a behavior called "hallucination," where the output looks very convincing but actually has nothing to do with the training data.
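To illustrate how embeddings can support search, here is a minimal Python sketch. It does not call Titan or any AWS API: the three-dimensional vectors, the document titles, and the `search` helper are all made up for illustration, and a real embedding model would return vectors with far more dimensions. The idea shown is simply that texts with similar meaning get nearby vectors, so ranking by cosine similarity approximates ranking by meaning.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical toy embeddings (hand-written, not produced by any model).
DOCS = {
    "return policy for shoes": [0.9, 0.1, 0.0],
    "how to reset a password": [0.0, 0.2, 0.9],
    "refund for damaged sneakers": [0.8, 0.3, 0.1],
}

def search(query_vec, docs, top_k=2):
    """Return the top_k document titles most similar to the query vector."""
    ranked = sorted(
        docs,
        key=lambda title: cosine_similarity(query_vec, docs[title]),
        reverse=True,
    )
    return ranked[:top_k]

# Hypothetical embedding of a query such as "can I send my trainers back?"
query = [0.85, 0.2, 0.05]
print(search(query, DOCS))
```

With these toy vectors, the two shopping-related documents rank above the password document even though the query shares no keywords with them, which is the property that makes embeddings useful for search.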

AWS Vice President Bratin Saha told CNBC that Amazon “cares deeply” about accuracy and making sure its Titan model produces high-quality responses.

Customers will be able to customize Titan models with their own data. But another AWS vice president, Swami Sivasubramanian, said that data would never be used to train the Titan models, ensuring that other customers, including competitors, do not end up benefiting from it.

Sivasubramanian and Saha declined to discuss the size of the Titan models or to identify the data Amazon used to train them, and Saha would not describe the process Amazon follows to remove problematic parts of the models' training data.

Cloud Service

The release of the Titan model is actually part of Amazon’s “Bedrock” plan. Amazon, the world's largest cloud infrastructure provider, obviously will not leave such a rapidly growing field to rivals such as Google and Microsoft.

The Bedrock initiative comes a month after OpenAI released GPT-4. At that time, Microsoft had invested billions of dollars in OpenAI and provided computing power to OpenAI through its Azure cloud service. This is the strongest competition Amazon's AWS business has ever faced.

The Bedrock cloud service is similar to the engine behind the ChatGPT chatbot powered by Microsoft-backed startup OpenAI. Amazon Web Services will provide access to models like Titan through its Bedrock generative AI service.

The initial set of foundation models supported by the service includes models from AI21, Anthropic and Stability AI, as well as Amazon’s self-developed Titan series. Bedrock’s debut was foreshadowed by the partnerships AWS has struck with generative AI startups over the past few months.

The key benefit of Bedrock is that users can integrate it with the rest of the AWS cloud platform. This means organizations will be able to more easily access data stored in the Amazon S3 object storage service and benefit from AWS access control and governance policies.

Amazon isn’t currently revealing how much the Bedrock service will cost because it’s still in a limited preview. A spokesperson said customers can add themselves to a waiting list. Previously, Microsoft and OpenAI have announced prices for using GPT-4, starting at a few cents per 1,000 tokens, with one token equivalent to about four English characters, while Google has yet to announce pricing for its PaLM language model.
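As a rough illustration of per-token pricing, the sketch below estimates the cost of a piece of text using the rule of thumb above (one token is about four English characters). The price passed in is a hypothetical placeholder, not a published rate for any service, and real tokenizers will give different counts.

```python
import math

CHARS_PER_TOKEN = 4  # rule of thumb quoted in the article; real tokenizers vary

def estimate_tokens(text):
    """Approximate token count from character count."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)

def estimate_cost(text, price_per_1k_tokens):
    """Approximate cost in dollars at a given price per 1,000 tokens."""
    return estimate_tokens(text) / 1000 * price_per_1k_tokens

prompt = "Summarize the following document in three bullet points. " * 50
HYPOTHETICAL_PRICE = 0.03  # assumed dollars per 1,000 tokens, for illustration only
print(estimate_tokens(prompt), "tokens, about $%.4f" % estimate_cost(prompt, HYPOTHETICAL_PRICE))
```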

AI programming assistant, free and open to individuals

We know that programming will be one of the areas where generative AI technology will be rapidly applied. Today, software developers spend a lot of time writing fairly plain and undifferentiated code, and a lot of time learning complex new tools and techniques that are always evolving. As a result, developers have very little time to actually develop innovative features and services.

To deal with this problem, developers often copy code snippets from the Internet and then modify them, but they may inadvertently copy code that does not work or that carries security risks. Searching and copying in this way also takes developers' time away from building the business.

Generative AI can greatly reduce this drudgery by "writing" most of the undifferentiated code, allowing developers to write code faster and have more time to focus on more creative programming work.

In 2022, Amazon announced a preview version of Amazon CodeWhisperer. This AI programming assistant uses a built-in foundation model to generate code suggestions in real time, based on developers' natural-language comments and the existing code in the IDE, improving productivity. The preview received an enthusiastic response from developers. Compared with developers who did not use the programming assistant, users completed tasks 57% faster on average and had a 27% higher success rate.

Now, Amazon has announced that CodeWhisperer is generally available and free for all individual users, with no qualification requirements or limits on usage time. The free tier also includes reference tracking and 50 security scans per month. Users only need to register with an email address and do not need an AWS account. Enterprise customers can choose the Professional version, which includes more advanced management features.

In addition to Python, Java, JavaScript, TypeScript and C#, CodeWhisperer has added support for 10 more development languages, including Go, Kotlin, Rust, PHP and SQL. Developers can access CodeWhisperer through the AWS Toolkit plug-in in integrated development environments such as VS Code, IntelliJ IDEA and AWS Cloud9, and it can also be used in the AWS Lambda console.

Amazon says that in addition to learning from billions of lines of public code, CodeWhisperer is also trained on Amazon’s own code. Amazon therefore claims it is currently the most accurate, fastest, and most secure way to generate code for Amazon cloud services, including Amazon EC2 and more.

Code generated by AI programming assistants may contain hidden security vulnerabilities, so CodeWhisperer provides built-in security scanning (powered by automated reasoning), and Amazon says it is the only assistant to do so. This feature finds hard-to-detect vulnerabilities and recommends fixes, covering issues such as those in the Open Web Application Security Project (OWASP) Top 10 and code that does not follow cryptographic library best practices.

Additionally, to help developers write code responsibly, CodeWhisperer filters out code suggestions that may be considered biased or unfair. At the same time, because customers may need to cite the source of open source code or obtain a license to use it, Amazon says CodeWhisperer is also the only programming assistant that can filter and flag suggestions that resemble open source code.

Summary

Amazon has been in the AI field for more than 20 years, and AWS already has more than 100,000 AI customers. Amazon has been using a tweaked version of Titan to serve search results through its homepage, Sivasubramanian said.

However, Amazon is just one of the big companies to launch generative AI capabilities after ChatGPT emerged and became popular. Expedia, HubSpot, Paylocity, and Spotify are all committed to integrating OpenAI technology, while Amazon is not. “We always act when everything is ready, and all the technology is already there,” Sivasubramanian said. Amazon wants to ensure Bedrock is easy to use and cost-effective thanks to its use of a custom AI processor.

Currently, companies such as C3.ai, Pegasystems and Salesforce are preparing to adopt Amazon Bedrock.
