Use AI to help hundreds of millions of blind people 'see the world' again!

In the past, restoring sight to blind people was often regarded as a medical "miracle."

With explosive breakthroughs in multimodal intelligence, represented by the combination of machine vision and natural language understanding, AI has opened up new possibilities for assisting blind people. More and more blind users will be able to use the perception, understanding, and interaction capabilities that AI provides to "see the world" again in a different way.

AI helps the blind, allowing more people to "see the world" again

Generally speaking, visually impaired people who cannot see perceive the outside world through senses other than vision, such as hearing, smell, and touch. Information from these other modalities helps them compensate, to a certain extent, for the loss of sight. However, research shows that vision accounts for as much as 70% to 80% of the external information humans obtain.

Therefore, building an AI-based machine vision system that gives visually impaired people visual perception and understanding of their surroundings is undoubtedly the most direct and effective solution.

In visual perception, current single-modal AI models have surpassed human-level performance on image recognition tasks. However, this technology can only recognize and understand within the visual modality, and it struggles with cross-modal learning, understanding, and reasoning that combine vision with other sensory information. Put simply, it can perceive but not understand.

David Marr, one of the founders of computational vision, set out the core problem of visual understanding research in his book "Vision": the visual system should construct two- or three-dimensional representations of the environment that can be interacted with. Interaction here means learning, understanding, and reasoning.

Excellent AI assistance for the blind is therefore a systems engineering effort that spans intelligent sensing, intelligent reasoning about user intent, and intelligent information presentation. Only by combining all three can an accessible, interactive interface be built.

To improve the generalization ability of AI models and give machines cross-modal image analysis and understanding capabilities, multimodal algorithms, represented by the combination of machine vision and natural language understanding, began to emerge and develop rapidly.

Models that let multiple information modalities interact can significantly improve AI's perception, understanding, and interaction capabilities. Once mature and applied to AI assistance for the blind, they could bring the benefits of the digital world to hundreds of millions of blind people and help them "see the world" again.

According to WHO statistics, at least 2.2 billion people worldwide have a vision impairment or are blind. China has more blind people than any other country, accounting for 18% to 20% of the world's blind population, and about 450,000 people in China become blind every year.

The "domino effect" set off by visual question answering for the blind

First-person (egocentric) perception technology is of great significance to AI assistance for the blind. Rather than treating blind people merely as participants who operate smart devices, it starts from the blind person's own perspective and helps scientists build algorithm models that better match how blind people understand the world. This gave rise to the basic research task of visual question answering for blind people.

Visual question answering (VQA) for blind people is the starting point and one of the core directions of academic research on AI assistance for the blind. Under current technical conditions, however, blind VQA, as a special type of VQA, is harder to make accurate than ordinary VQA.

On the one hand, the questions in blind visual question answering are more varied, covering object detection, text recognition, color and attribute recognition, and more: for example, identifying the meat in a refrigerator, reading the instructions for a medicine, choosing a shirt of a particular color, or describing what a book is about.

On the other hand, because blind users cannot see what they are photographing, it is hard for them to judge the distance between the phone and the object, so photos are often out of focus. Even when the object is captured, it may be only partly in frame or its key information may be missing, which makes effective feature extraction much harder.

In addition, most existing visual question answering models are trained on question-answer data from closed settings. Severely limited by the training sample distribution, they struggle to generalize to open-world question-answering scenarios, which require integrating external knowledge for multi-stage reasoning.

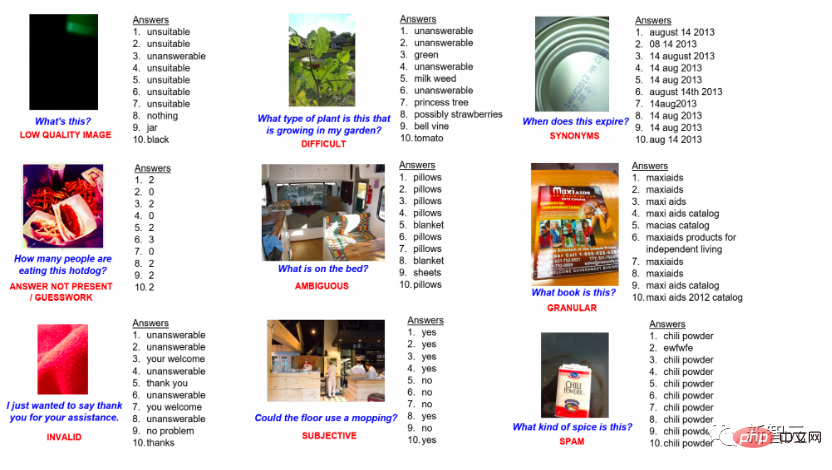

Blind visual question answering data

Second, as research on blind visual question answering has progressed, scientists have found that it runs into derivative problems caused by noise. Accurately locating the noise and still reasoning correctly is therefore another major challenge.

Because they cannot see their surroundings, blind users often ask questions that do not match the image. For example, when shopping in a supermarket, products that look and feel alike make it easy to ask the wrong question, such as picking up a bottle of vinegar and asking who makes the soy sauce. This kind of language noise often causes existing AI models to fail, so AI must be able to separate noise from usable information in complex environments.

Finally, an AI system for assisting the blind should not only answer the user's immediate questions but also reason intelligently about intent and present information intelligently. Intelligent interaction is therefore an important research direction, although algorithm research in this area is still in its infancy.

Research on intent reasoning focuses on having the machine continuously learn a visually impaired user's language and behavioral habits so that it can infer the interaction intent the user wants to express. For example, when a blind person holds a water glass and sits down, the system can predict that the next action is placing the glass on the table. When the user asks about the color or style of clothes, it can infer that the user may be planning to go out.

The difficulty is that users' verbal expressions and actions vary randomly in time and space, which makes the mental model behind their interaction decisions random as well. The critical problems are therefore extracting useful information from continuous, random behavioral input and designing a dynamic, non-deterministic multimodal model that presents each task in the best way.
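The glass example can be made concrete with a deliberately simple model. The sketch below is not Inspur Information's method. It assumes a hypothetical `NextActionPredictor` class and made-up action labels, learns a user's habits as counts of which action directly follows which (a first-order, bigram model), and predicts the most frequent successor. It ignores the randomness and multimodality described above, and those are what make the real problem hard.

```python
from collections import Counter, defaultdict
from typing import Optional


class NextActionPredictor:
    """Toy first-order (bigram) model of one user's action habits.

    Hypothetical illustration: it counts which action directly follows
    which, then predicts the most frequent successor of the last action.
    """

    def __init__(self) -> None:
        # transitions[a][b] = number of times action b directly followed action a
        self.transitions = defaultdict(Counter)

    def observe(self, actions: list[str]) -> None:
        """Update transition counts from one observed action sequence."""
        for current, following in zip(actions, actions[1:]):
            self.transitions[current][following] += 1

    def predict(self, last_action: str) -> Optional[str]:
        """Return the most frequent successor, or None if the action was never seen."""
        successors = self.transitions.get(last_action)
        if not successors:
            return None
        return successors.most_common(1)[0][0]


# Usage with made-up action labels:
predictor = NextActionPredictor()
predictor.observe(["pick_up_glass", "sit_down", "place_glass_on_table"])
predictor.observe(["pick_up_glass", "sit_down", "place_glass_on_table"])
predictor.observe(["pick_up_glass", "sit_down", "drink"])
print(predictor.predict("sit_down"))  # place_glass_on_table
```

A count-based model like this adapts to one user's history cheaply. However, it only looks one step back and works with discrete action labels, whereas the research problem involves continuous, noisy multimodal input.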

Focusing on basic research in AI assistance for the blind, Inspur Information has won international recognition for many of its studies

There is no doubt that major breakthroughs in the basic research areas above are the key to bringing AI assistance for the blind into practical use sooner. Inspur Information's frontier research team is working to advance AI assistance for the blind through multiple algorithm innovations, pre-trained models, and the construction of foundational datasets.

In blind visual question answering research, VizWiz-VQA is a leading global multimodal challenge, jointly launched by scholars from Carnegie Mellon University and other institutions. AI models are trained on the "VizWiz" dataset of images and questions from blind people, and then they answer randomly selected image-question pairs submitted by blind users. In this task, the Inspur Information frontier research team addressed many common problems of visual question answering for the blind.
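Accuracy on VizWiz-VQA is, to our understanding, measured with the standard VQA accuracy metric. Each question comes with ten crowd-sourced answers, and a predicted answer gets full credit when at least three annotators gave it. The score is averaged over leave-one-annotator-out subsets. The sketch below is a simplified version of that metric. The official evaluation script normalizes answers much more thoroughly (punctuation, number words, articles), so the `normalize` helper here is an assumption.

```python
def normalize(answer: str) -> str:
    """Simplified answer normalization: lowercase, trim, drop a trailing period, collapse spaces."""
    return " ".join(answer.lower().strip().rstrip(".").split())


def vqa_accuracy(prediction: str, human_answers: list[str]) -> float:
    """VQA-style accuracy of one predicted answer.

    For each annotator, the other annotators' answers are compared with the
    prediction; the score is min(matches / 3, 1), averaged over all annotators.
    """
    if not human_answers:
        raise ValueError("need at least one human answer")
    pred = normalize(prediction)
    gts = [normalize(a) for a in human_answers]
    scores = []
    for i in range(len(gts)):
        others = gts[:i] + gts[i + 1:]  # leave annotator i out
        matches = sum(1 for a in others if a == pred)
        scores.append(min(1.0, matches / 3))
    return sum(scores) / len(scores)


# Ten answers for one question: 3 annotators said "vinegar".
answers = ["vinegar"] * 3 + ["soy sauce"] * 7
print(round(vqa_accuracy("Vinegar.", answers), 3))  # 0.9
```

Note that exactly three matching annotators yields 0.9 rather than 1.0 under the leave-one-out averaging. Four or more matches are needed for full credit.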

First, photos taken by blind users are often blurry and carry little useful information, and the questions tend to be subjective and vague. As a result, understanding what the user needs and answering it is challenging.

The team proposed a dual-stream multimodal anchor alignment model. It uses the key entities and attributes found by visual object detection as anchors that link the image and the question, which enhances multimodal semantics.
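The article does not describe the model's internals. As a rough illustration of the anchoring idea only, the sketch below matches question words against detector labels and attributes by plain string comparison, then pools the features of the anchored regions. A real dual-stream model would learn the alignment in embedding space. The `Detection` type, both functions, and the toy feature vectors are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One detected region (hypothetical structure)."""
    label: str              # object class from a detector, e.g. "shirt"
    attributes: list[str]   # detected attributes, e.g. ["red"]
    feature: list[float]    # region feature vector from the visual stream


def find_anchors(question: str, detections: list[Detection]) -> list[tuple[str, int]]:
    """Return (question word, detection index) pairs where the question
    mentions a detected label or attribute. These pairs act as anchors
    linking the language stream to the visual stream."""
    words = [w.strip("?.,!").lower() for w in question.split()]
    anchors = []
    for idx, det in enumerate(detections):
        vocab = {det.label.lower(), *(a.lower() for a in det.attributes)}
        anchors.extend((w, idx) for w in words if w in vocab)
    return anchors


def anchored_region_feature(anchors: list[tuple[str, int]],
                            detections: list[Detection]) -> list[float]:
    """Average the features of all anchored regions; empty list if no anchors."""
    idxs = sorted({idx for _, idx in anchors})
    if not idxs:
        return []
    dim = len(detections[idxs[0]].feature)
    return [sum(detections[i].feature[d] for i in idxs) / len(idxs) for d in range(dim)]


detections = [
    Detection("bottle", ["brown"], [1.0, 0.0]),
    Detection("shirt", ["red"], [0.0, 1.0]),
    Detection("table", [], [0.5, 0.5]),
]
anchors = find_anchors("Is the red shirt clean?", detections)
print(anchors)  # [('red', 1), ('shirt', 1)]
```

The pooled vector from `anchored_region_feature` stands in for the "semantically enhanced" visual input that the question stream would attend to.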

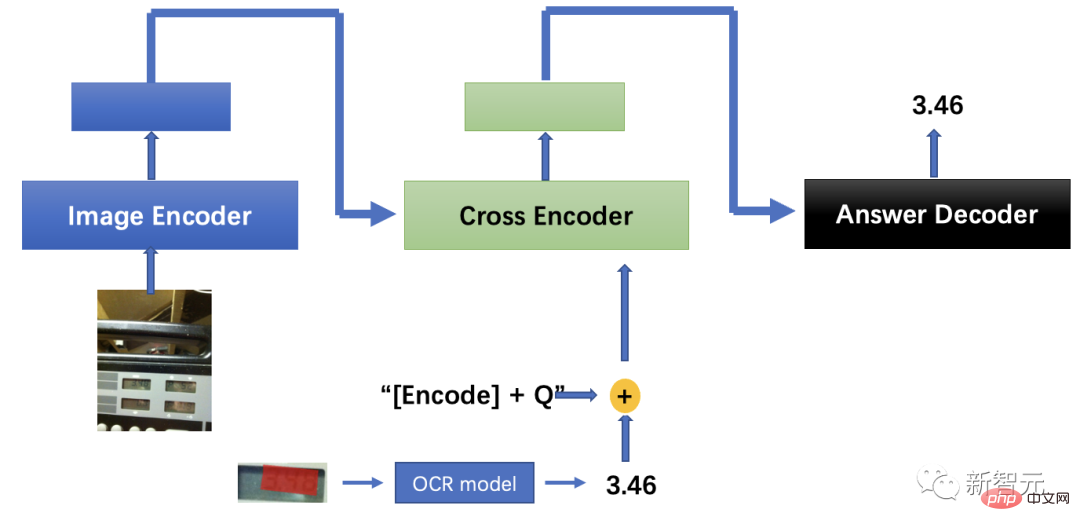

Second, blind users find it hard to hold the camera at the correct orientation. The team therefore automatically corrects the image angle and enhances the semantics of the text in the image, combining this with optical character detection and recognition to answer "what is this" questions.
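The article does not say how the team corrects the angle. One simple way to do it, offered here only as an assumption, is to try the four right-angle rotations and keep the one on which a text recognizer is most confident. In the sketch, an image is a plain 2D grid and `text_score` is a hypothetical callable standing in for an OCR engine's confidence. Arbitrary-angle skew would need a finer search or a learned orientation classifier.

```python
from typing import Callable

Grid = list[list[str]]  # stand-in for an image: rows of pixels


def rotate_90_clockwise(grid: Grid) -> Grid:
    """Rotate a 2D grid 90 degrees clockwise."""
    return [list(row) for row in zip(*grid[::-1])]


def best_orientation(image: Grid, text_score: Callable[[Grid], float]) -> tuple[int, Grid]:
    """Try 0/90/180/270 degree clockwise rotations and keep the one whose
    text-recognition score is highest (ties keep the earlier rotation)."""
    best_angle, best_image, best_score = 0, image, text_score(image)
    current = image
    for angle in (90, 180, 270):
        current = rotate_90_clockwise(current)
        score = text_score(current)
        if score > best_score:
            best_angle, best_image, best_score = angle, current, score
    return best_angle, best_image


# Toy scorer: pretend text reads correctly when "T" is in the top-left corner.
angle, upright = best_orientation([["x", "T"], ["x", "x"]], lambda g: float(g[0][0] == "T"))
print(angle, upright)  # 270 [['T', 'x'], ['x', 'x']]
```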

Finally, photos taken by blind users are usually blurry and incomplete, so general algorithms struggle to determine the type and purpose of the target object. The model therefore needs enough common-sense knowledge to reason about the user's true intent.

To this end, the team proposed an algorithm that combines answer-driven visual grounding with large-model image-text matching and trained it with a multi-stage cross-training strategy. At inference time, the cross-trained visual grounding and image-text matching models locate the answer region. An optical character recognition (OCR) algorithm then reads the text in that region, and the recognized text is fed to the text encoder. Finally, the text decoder of the image-text matching model produces the answer to the blind user's question. The final accuracy of this multimodal algorithm was 9.5 percentage points higher than human performance.
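The inference flow above is a chain of four stages. The sketch below is only a hypothetical wiring of those stages, not the team's code. The `BlindVQAPipeline` class, its field names, and the box convention are assumptions. Each model is injected as a plain callable so that stubs can stand in for the real grounding, OCR, encoder, and decoder models. The sketch also assumes the encoder sees both the question and the recognized text, which the article does not state explicitly.

```python
from dataclasses import dataclass
from typing import Any, Callable

Box = tuple[int, int, int, int]  # (x0, y0, x1, y1), an assumed convention


@dataclass
class BlindVQAPipeline:
    """Hypothetical wiring of the inference stages described above.

    Each stage is injected as a callable, so any grounding, OCR, encoder
    and decoder implementation can be plugged in.
    """
    locate_answer_region: Callable[[Any, str], Box]  # grounding + image-text matching
    crop: Callable[[Any, Box], Any]
    recognize_text: Callable[[Any], str]             # OCR on the cropped region
    encode_text: Callable[[str, str], Any]           # text encoder
    decode_answer: Callable[[Any], str]              # text decoder

    def answer(self, image: Any, question: str) -> str:
        box = self.locate_answer_region(image, question)          # 1. locate answer region
        region_text = self.recognize_text(self.crop(image, box))  # 2. read text in region
        encoded = self.encode_text(question, region_text)         # 3. encode question + text
        return self.decode_answer(encoded)                        # 4. decode the answer


# Usage with trivial stubs in place of real models:
pipeline = BlindVQAPipeline(
    locate_answer_region=lambda img, q: (0, 0, 1, 1),
    crop=lambda img, box: [row[box[0]:box[2]] for row in img[box[1]:box[3]]],
    recognize_text=lambda region: "".join("".join(row) for row in region),
    encode_text=lambda q, text: {"question": q, "text": text},
    decode_answer=lambda enc: f"The label says: {enc['text']}",
)
print(pipeline.answer([["A", "b"], ["c", "d"]], "What does the label say?"))  # The label says: A
```

Keeping the stages as injected callables makes each one testable in isolation. It also makes it easy to swap, for example, the OCR engine without touching the rest of the flow.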

Multi-modal visual question answering model solution

One of the biggest obstacles to applying visual grounding research today is handling noise intelligently. In real scenes, text descriptions are often noisy because of slips of the tongue, ambiguity, figures of speech, and so on. Experiments have found that such text noise can cause existing AI models to fail.

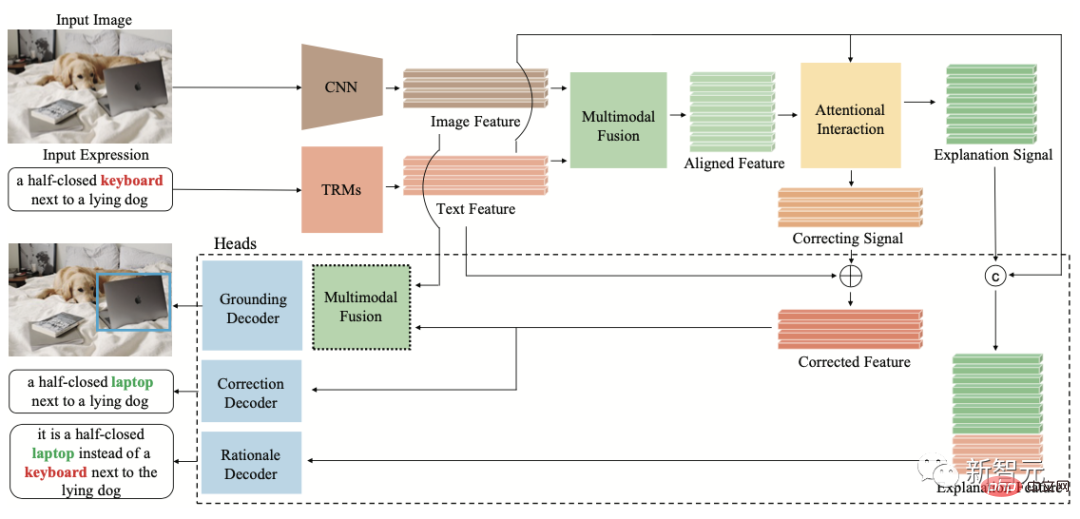

To address this, the Inspur Information frontier research team studied the multimodal mismatch caused by human language errors in the real world and was the first to propose FREC, a visual grounding task that requires denoising and reasoning over text. In FREC, the model must correctly locate the visual content that a noisy description refers to and also reason about the evidence that the text is noisy.

FREC provides 30,000 images and more than 250,000 text annotations, covering many kinds of noise such as slips of the tongue, ambiguity, and subjective deviations. It also provides interpretable labels such as noise corrections and noise evidence.
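To make the annotation types listed above concrete, here is a hypothetical record layout for a FREC-style sample. The field names, the `NoiseType` values, the noise labels assigned to the examples, and the example records themselves are illustrative assumptions, not FREC's published schema.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class NoiseType(Enum):
    SLIP_OF_TONGUE = "slip_of_tongue"
    AMBIGUITY = "ambiguity"
    SUBJECTIVE_DEVIATION = "subjective_deviation"


@dataclass(frozen=True)
class NoisyReferringExpression:
    """One annotation in a FREC-like dataset (hypothetical field names)."""
    image_id: str
    expression: str                                # text as the user said it
    target_box: tuple[float, float, float, float]  # region actually meant (x0, y0, x1, y1)
    noise_type: Optional[NoiseType] = None         # None for a clean expression
    correction: Optional[str] = None               # corrected expression
    evidence: Optional[str] = None                 # why the text is judged noisy


def noise_type_counts(records: list[NoisyReferringExpression]) -> Counter:
    """Count noisy annotations per noise type, ignoring clean ones."""
    return Counter(r.noise_type for r in records if r.noise_type is not None)


records = [
    NoisyReferringExpression("img_001", "the soy sauce bottle", (10, 20, 60, 120),
                             NoiseType.SLIP_OF_TONGUE, "the vinegar bottle",
                             "the label inside the box reads 'vinegar'"),
    NoisyReferringExpression("img_002", "the cup", (0, 0, 30, 40), NoiseType.AMBIGUITY,
                             "the blue cup on the left", "two cups are visible"),
    NoisyReferringExpression("img_003", "the red shirt", (5, 5, 50, 80)),
]
print(noise_type_counts(records))
```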

FCTR structure diagram

The team also built FCTR, the first interpretable denoising visual grounding model. When text descriptions are noisy, it improves accuracy by 11 percentage points over traditional models.

This research result has been published at the ACM Multimedia 2022 conference, which is the top conference in the international multimedia field and the only CCF-recommended Class A international conference in this field.

Paper address: https://www.php.cn/link/9f03268e82461f179f372e61621f42d9
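The article reports FCTR's gain as a difference in accuracy but does not state the evaluation protocol. For referring-expression grounding, the common convention (used, for example, on RefCOCO-style benchmarks) counts a prediction as correct when its box overlaps the annotated box with an intersection over union (IoU) of at least 0.5. The sketch below assumes that convention for illustration. It is not FREC's confirmed metric.

```python
Box = tuple[float, float, float, float]  # (x0, y0, x1, y1) with x1 > x0, y1 > y0


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def grounding_accuracy(predicted: list[Box], ground_truth: list[Box],
                       threshold: float = 0.5) -> float:
    """Fraction of expressions whose predicted box reaches IoU >= threshold."""
    if not ground_truth or len(predicted) != len(ground_truth):
        raise ValueError("need equally many, non-empty predictions and ground truths")
    hits = sum(1 for p, g in zip(predicted, ground_truth) if iou(p, g) >= threshold)
    return hits / len(ground_truth)


print(grounding_accuracy([(0, 0, 2, 2), (0, 0, 2, 2)], [(0, 0, 2, 2), (1, 1, 3, 3)]))  # 0.5
```

Under this convention, an "11 percentage point" gain would mean that 11 more expressions out of every 100 are grounded with IoU of 0.5 or higher.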

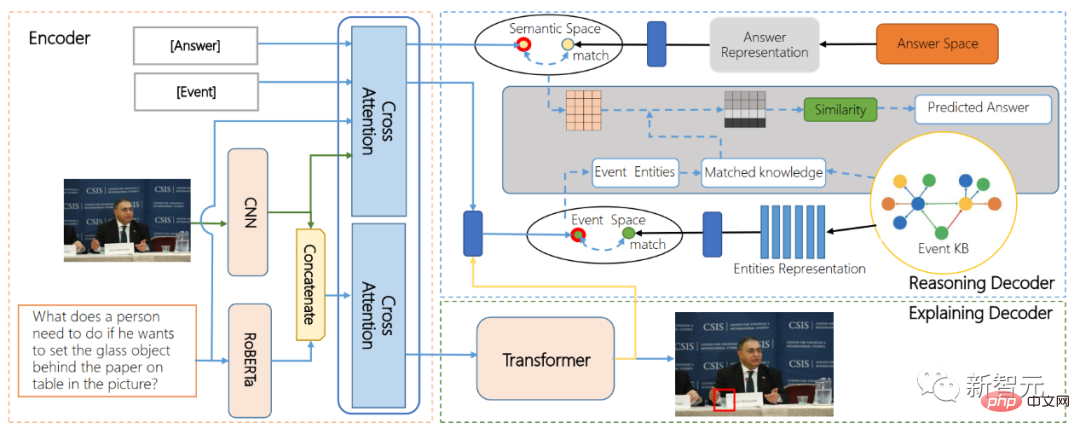

To explore AI's ability to reason about interactions from images and text, the Inspur Information frontier research team proposed a new research direction for the industry: AI-VQA, an explainable visual question answering task about agent interactions. AI-VQA establishes logical links to search a large knowledge base and extends what is already present in the image and text.

The team has also built an open-source dataset for AI-VQA. It contains a large-scale event knowledge base of more than 144,000 entries and 19,000 fully manually annotated cognitive-reasoning questions about interaction behavior. It also includes interpretable annotations such as key objects, supporting facts, and reasoning paths.
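The article does not specify how AI-VQA searches its knowledge base. Purely as an illustration of what a reasoning path over event knowledge can look like, the sketch below stores knowledge as hypothetical (head, relation, tail) triples. It uses breadth-first search to return the shortest causal chain between two events. Real systems retrieve over far larger knowledge graphs with learned scoring rather than exact string matches.

```python
from collections import deque
from typing import Optional

Triple = tuple[str, str, str]  # (head, relation, tail)


def find_reasoning_path(triples: list[Triple], start: str, goal: str,
                        max_hops: int = 4) -> Optional[list[Triple]]:
    """Breadth-first search for the shortest chain of triples from start to goal.

    Returns the list of traversed triples (empty if start == goal) or None
    if no path of at most max_hops edges exists.
    """
    graph: dict[str, list[tuple[str, str]]] = {}
    for head, relation, tail in triples:
        graph.setdefault(head, []).append((relation, tail))

    queue = deque([(start, [])])
    visited = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        if len(path) >= max_hops:
            continue  # do not expand beyond the hop limit
        for relation, tail in graph.get(node, []):
            if tail not in visited:
                visited.add(tail)
                queue.append((tail, path + [(node, relation, tail)]))
    return None


# Hypothetical event knowledge: pushing a glass off a table and what follows.
kb = [
    ("push glass off table", "causes", "glass falls"),
    ("glass falls", "causes", "glass breaks"),
    ("glass falls", "causes", "water spills"),
    ("glass breaks", "causes", "shards on floor"),
]
for step in find_reasoning_path(kb, "push glass off table", "shards on floor"):
    print(step)
```

The returned list of triples is itself an interpretable reasoning path, which is the kind of annotation the AI-VQA dataset provides alongside its answers.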

ARE structure diagram

The team also proposed ARE (encoder-decoder model for alternative reason and explanation), the first algorithm model for understanding agent interaction behavior. For the first time, it performs end-to-end localization of interaction behavior and reasoning about the impact of interactions. Built on multimodal image-text fusion and knowledge-graph retrieval, it is a visual question answering model capable of long causal-chain reasoning.

The greatness of technology lies not only in changing the world but, more importantly, in benefiting humanity and making more of the impossible possible.

For blind people, being able to live independently like everyone else with the help of AI, rather than being treated as a special case, reflects the greatest goodwill of technology.

As AI moves into everyday reality, technology is no longer cold and distant but full of the warmth of humanistic care.

Standing at the forefront of AI, Inspur Information hopes its research will draw more people into putting AI into practice. The goal is for the wave of multimodal AI assistance for the blind to extend to more scenarios, such as AI anti-fraud, AI diagnosis and treatment, and AI disaster early warning, creating more value for society.

Reference link: https://www.php.cn/link/9f03268e82461f179f372e61621f42d9
